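3D point cloud completion takes a partial point cloud of an object as input and outputs the complete point cloud. It has broad application value in autonomous driving and robotics. The paper reproduced here is a CVPR 2020 paper, PF-Net: Point Fractal Network for 3D Point Cloud Completion.

Paper: https://arxiv.org/pdf/2003.00...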

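To address 3D point cloud completion, the authors propose the Point Fractal Network (PF-Net). Its main contributions are:

(1) Unlike other methods, it takes a partial point cloud as input and outputs the other, missing part of the point cloud, which preserves the original information of the input and lets the network focus on completion;

(2) It proposes a Multi-Resolution Encoder (MRE) that extracts features from the point cloud at several resolutions, capturing both details (low-level) and overall structure (high-level), which strengthens the network's ability to extract geometric information;

(3) It proposes a Point Pyramid Decoder (PPD) that strengthens the completion ability.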

This project reproduces the paper using PaddlePaddle's dynamic graph (dygraph) mode.

Method overview

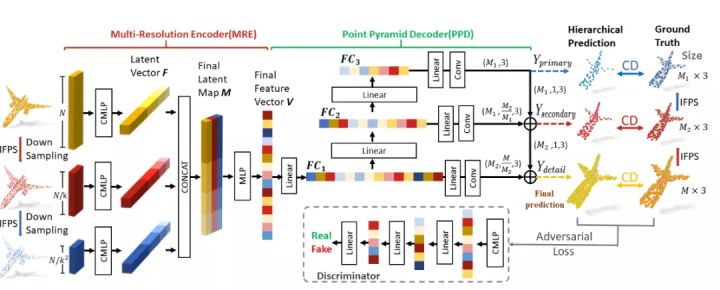

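As shown in Figure 1, the authors follow an encoder-decoder design and propose a representation and reconstruction architecture built on multi-resolution data. At the input, iterative farthest point sampling (IFPS) samples the partial point cloud at three resolutions, which are then encoded into a latent vector V. Borrowing the idea of feature pyramids, the decoder then decodes the latent vector at different resolutions and generates completed point clouds at three resolutions.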

Model implementation

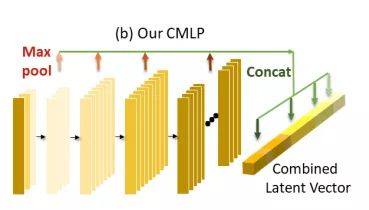

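We first implement the PF-Net model, which consists of two parts, the MRE and the PPD. The MRE takes the partial point cloud at three different resolutions as input and feeds each one into a Combined Multi-Layer Perception (CMLP) module, whose structure is shown in Figure 2.

Compared with PointNet-based networks, CMLP gives some accuracy improvement on point cloud classification, so it is chosen as the backbone for feature extraction. It is implemented as follows: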

    import paddle.fluid as fluid
    from paddle.fluid.dygraph import BatchNorm, Conv2D, Pool2D


    class Convlayer(fluid.dygraph.Layer):
        def __init__(self, point_scales):
            super(Convlayer, self).__init__()
            self.point_scales = point_scales
            # The (1, 3) kernel consumes the xyz coordinates of each point.
            self.conv1 = Conv2D(1, 64, (1, 3))
            self.conv2 = Conv2D(64, 64, 1)
            self.conv3 = Conv2D(64, 128, 1)
            self.conv4 = Conv2D(128, 256, 1)
            self.conv5 = Conv2D(256, 512, 1)
            self.conv6 = Conv2D(512, 1024, 1)
            # Max-pool over all points of this resolution.
            self.maxpool = Pool2D(pool_size=(self.point_scales, 1), pool_stride=1)
            self.bn1 = BatchNorm(64, act='relu')
            self.bn2 = BatchNorm(64, act='relu')
            self.bn3 = BatchNorm(128, act='relu')
            self.bn4 = BatchNorm(256, act='relu')
            self.bn5 = BatchNorm(512, act='relu')
            self.bn6 = BatchNorm(1024, act='relu')

        def forward(self, x):
            # x: [batch, num_points, 3] -> [batch, 1, num_points, 3]
            x = fluid.layers.unsqueeze(x, 1)
            x = self.bn1(self.conv1(x))
            x = self.bn2(self.conv2(x))
            x_128 = self.bn3(self.conv3(x))
            x_256 = self.bn4(self.conv4(x_128))
            x_512 = self.bn5(self.conv5(x_256))
            x_1024 = self.bn6(self.conv6(x_512))
            # Pool each of the last four feature maps over the points.
            x_128 = fluid.layers.squeeze(input=self.maxpool(x_128), axes=[2])
            x_256 = fluid.layers.squeeze(input=self.maxpool(x_256), axes=[2])
            x_512 = fluid.layers.squeeze(input=self.maxpool(x_512), axes=[2])
            x_1024 = fluid.layers.squeeze(input=self.maxpool(x_1024), axes=[2])
            # Concatenate along the channel axis: 1024 + 512 + 256 + 128 = 1920.
            L = [x_1024, x_512, x_256, x_128]
            x = fluid.layers.concat(L, 1)
            return x

Here the input partial point cloud is represented by features of four sizes, 1024, 512, 256 and 128, which are concatenated into a single vector, the combined latent vector for that input resolution. The three input resolutions thus yield three combined latent vectors, which are then fused by a multi-layer perceptron into the final latent vector.
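The "pool every level, then concatenate" idea behind the combined latent vector can be illustrated without any framework. The following is only a sketch under simplifying assumptions: the helper names `max_pool_over_points` and `combined_latent_vector` are hypothetical, and the feature widths are shrunk to 2 and 1 channels so the result can be checked by hand.

```python
def max_pool_over_points(features):
    """Channel-wise maximum over a list of per-point feature vectors."""
    return [max(column) for column in zip(*features)]


def combined_latent_vector(per_level_features):
    """Max-pool each level over its points, then concatenate the results."""
    combined = []
    for features in per_level_features:
        combined.extend(max_pool_over_points(features))
    return combined


# Hypothetical per-point features of a 3-point cloud at two network levels.
level_a = [[0.1, 0.9], [0.4, 0.2], [0.3, 0.5]]  # 3 points x 2 channels
level_b = [[1.0], [3.0], [2.0]]                 # 3 points x 1 channel
vector = combined_latent_vector([level_a, level_b])

# With the widths used in the article, the combined vector has this length,
# which matches the input size of fc1 in the generator.
COMBINED_DIM = 1024 + 512 + 256 + 128
```

In this toy case `vector` is `[0.4, 0.9, 3.0]`: two pooled channels from the first level followed by one from the second, and `COMBINED_DIM` is 1920.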

This step is implemented as follows:

    class Latentfeature(fluid.dygraph.Layer):
        def __init__(self, num_scales, each_scales_size, point_scales_list):
            super(Latentfeature, self).__init__()
            self.num_scales = num_scales
            self.each_scales_size = each_scales_size
            self.point_scales_list = point_scales_list
            # One CMLP per input resolution.
            self.Convlayers1 = Convlayer(point_scales=self.point_scales_list[0])
            self.Convlayers2 = Convlayer(point_scales=self.point_scales_list[1])
            self.Convlayers3 = Convlayer(point_scales=self.point_scales_list[2])
            # Conv1D is the wrapper class defined later in this article.
            self.conv1 = Conv1D(prefix='lf', num_channels=3, num_filters=1, size_k=1, act=None)
            self.bn1 = BatchNorm(1, act='relu')

        def forward(self, x):
            # x is a list of three point clouds, one per resolution.
            outs = [self.Convlayers1(x[0]), self.Convlayers2(x[1]), self.Convlayers3(x[2])]
            # [batch, 1920, 3] -> [batch, 3, 1920]
            latentfeature = fluid.layers.concat(outs, 2)
            latentfeature = fluid.layers.transpose(latentfeature, perm=[0, 2, 1])
            # Fuse the three channels into one: [batch, 1, 1920] -> [batch, 1920]
            latentfeature = self.bn1(self.conv1(latentfeature))
            latentfeature = fluid.layers.squeeze(latentfeature, axes=[1])
            return latentfeature
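The fusion step in `Latentfeature` stacks the three combined latent vectors as three channels and applies a kernel-size-1 convolution with a single output channel. That amounts to a learned weighted sum of the three vectors at every position. The sketch below illustrates only that arithmetic; `fuse_scales`, the weights and the 4-dimensional vectors are made-up illustrations, and the batch normalisation and ReLU that follow in the real layer are left out.

```python
def fuse_scales(latent_vectors, weights, bias=0.0):
    """Kernel-size-1 convolution from len(latent_vectors) channels to one channel.

    Each output entry is a weighted sum of the input entries at the same position.
    """
    return [
        sum(w * v for w, v in zip(weights, position)) + bias
        for position in zip(*latent_vectors)
    ]


# Three hypothetical 4-dimensional latent vectors (1920-dimensional in the article).
v_high = [1.0, 2.0, 3.0, 4.0]
v_mid = [0.0, 1.0, 0.0, 1.0]
v_low = [2.0, 2.0, 2.0, 2.0]
fused = fuse_scales([v_high, v_mid, v_low], weights=[0.5, 1.0, 0.25])
```

Here `fused` is `[1.0, 2.5, 2.0, 3.5]`, the same length as each input vector, which is why the fused latent vector keeps 1920 entries.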

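PaddlePaddle does not yet provide a Conv1D (1-D convolution) layer, so a Conv2D (2-D convolution) layer wrapped in unsqueeze and squeeze operations is used in its place. The implementation is as follows: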

class Conv1D(fluid.dygraph.Layer):

def __init__(self,

prefix,

num_channels=3,

num_filters=1,

size_k=1,

padding=0,

groups=1,

act=None):

super(Conv1D, self).__init__()

fan_in = num_channels * size_k * 1

k = 1. / math.sqrt(fan_in)

param_attr = ParamAttr(

name=prefix + "_w",

initializer=fluid.initializer.Uniform(

low=-k, high=k))

bias_attr = ParamAttr(

name=prefix + "_b",

initializer=fluid.initializer.Uniform(

low=-k, high=k))

self._conv2d = fluid.dygraph.Conv2D(

num_channels=num_channels,

num_filters=num_filters,

filter_size=(1, size_k),

stride=1,

padding=(0, padding),

groups=groups,

act=act,

param_attr=param_attr,

bias_attr=bias_attr)

def forward(self, x):

x = fluid.layers.unsqueeze(input=x, axes=[2])

x = self._conv2d(x)

x = fluid.layers.squeeze(input=x, axes=[2])

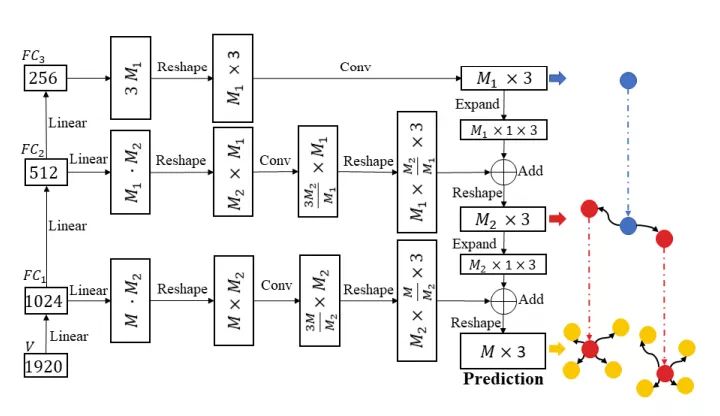

return x 有了隐变量向量之后,接下来就是介绍decoder部分,也就是PPD部分。它利用了借用金字塔的思想,生成多个分辨率的点云补齐结果。具体地讲,如图三所示,使用隐变量向量依次输出1024,512,256维的点云表征并分别使用Conv1D层处理其表征,最后输出不同分辨率的点云补齐结果。
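The coarse-to-fine step of the pyramid can be pictured as follows: every point of a coarser prediction acts as a parent, and the next level predicts a small group of offsets per parent that are added to it. The sketch below shows only this addition and flattening in plain Python. The function name `refine` and the toy coordinates are assumptions made for illustration; in the generator the same thing is done with unsqueeze, reshape and an element-wise add on tensors.

```python
def refine(coarse_points, offsets):
    """Add every offset group to its parent point and flatten the result.

    coarse_points: list of M (x, y, z) tuples
    offsets: list of M groups, each holding k (dx, dy, dz) tuples
    returns: list of M * k refined points, children of one parent kept adjacent
    """
    refined = []
    for (x, y, z), group in zip(coarse_points, offsets):
        for dx, dy, dz in group:
            refined.append((x + dx, y + dy, z + dz))
    return refined


# Two parent points, two offsets each: 2 points become 4.
coarse = [(0.0, 0.0, 0.0), (1.0, 1.0, 1.0)]
offsets = [
    [(0.5, 0.0, 0.0), (-0.5, 0.0, 0.0)],
    [(0.0, 0.25, 0.0), (0.0, 0.0, 0.25)],
]
finer = refine(coarse, offsets)
```

In the generator the same pattern turns 64 points into 128 (two offsets per parent) and then 128 points into `crop_point_num` points (`crop_point_num / 128` offsets per parent).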

The PaddlePaddle implementation of the generator is given below:

    from paddle.fluid.dygraph import Linear


    class PFNetG(fluid.dygraph.Layer):
        def __init__(self, num_scales, each_scales_size, point_scales_list, crop_point_num):
            super(PFNetG, self).__init__()
            self.crop_point_num = crop_point_num
            self.latentfeature = Latentfeature(num_scales, each_scales_size, point_scales_list)
            self.fc1 = Linear(input_dim=1920, output_dim=1024, act='relu')
            self.fc2 = Linear(input_dim=1024, output_dim=512, act='relu')
            self.fc3 = Linear(input_dim=512, output_dim=256, act='relu')
            self.fc1_1 = Linear(input_dim=1024, output_dim=128 * 512, act='relu')
            self.fc2_1 = Linear(input_dim=512, output_dim=64 * 128, act='relu')
            self.fc3_1 = Linear(input_dim=256, output_dim=64 * 3)
            self.conv1_1 = Conv1D(prefix='g1_1', num_channels=512, num_filters=512, size_k=1, act='relu')
            self.conv1_2 = Conv1D(prefix='g1_2', num_channels=512, num_filters=256, size_k=1, act='relu')
            self.conv1_3 = Conv1D(prefix='g1_3', num_channels=256, num_filters=int((self.crop_point_num * 3) / 128),
                                  size_k=1, act=None)
            self.conv2_1 = Conv1D(prefix='g2_1', num_channels=128, num_filters=6, size_k=1, act=None)

        def forward(self, x):
            x = self.latentfeature(x)
            x_1 = self.fc1(x)  # 1024
            x_2 = self.fc2(x_1)  # 512
            x_3 = self.fc3(x_2)  # 256

            # Coarsest level: 64 points predicted directly.
            pc1_feat = self.fc3_1(x_3)
            pc1_xyz = fluid.layers.reshape(pc1_feat, [-1, 64, 3], inplace=False)

            # Middle level: 2 offsets per coarse point.
            pc2_feat = self.fc2_1(x_2)
            pc2_feat_reshaped = fluid.layers.reshape(pc2_feat, [-1, 128, 64], inplace=False)
            pc2_xyz = self.conv2_1(pc2_feat_reshaped)  # 6x64 center2

            # Finest level: crop_point_num / 128 offsets per middle-level point.
            pc3_feat = self.fc1_1(x_1)
            pc3_feat_reshaped = fluid.layers.reshape(pc3_feat, [-1, 512, 128], inplace=False)
            pc3_feat = self.conv1_1(pc3_feat_reshaped)
            pc3_feat = self.conv1_2(pc3_feat)
            pc3_xyz = self.conv1_3(pc3_feat)  # 12x128 fine

            # Add the middle-level offsets to the 64 coarse points -> 128 points.
            pc1_xyz_expand = fluid.layers.unsqueeze(pc1_xyz, axes=[2])
            pc2_xyz = fluid.layers.transpose(pc2_xyz, perm=[0, 2, 1])
            pc2_xyz_reshaped1 = fluid.layers.reshape(pc2_xyz, [-1, 64, 2, 3], inplace=False)
            pc2_xyz = fluid.layers.elementwise_add(pc1_xyz_expand, pc2_xyz_reshaped1)
            pc2_xyz_reshaped2 = fluid.layers.reshape(pc2_xyz, [-1, 128, 3], inplace=False)

            # Add the finest offsets to the 128 points -> crop_point_num points.
            pc2_xyz_expand = fluid.layers.unsqueeze(pc2_xyz_reshaped2, axes=[2])
            pc3_xyz = fluid.layers.transpose(pc3_xyz, perm=[0, 2, 1])
            pc3_xyz_reshaped1 = fluid.layers.reshape(pc3_xyz, [-1, 128, int(self.crop_point_num / 128), 3], inplace=False)
            pc3_xyz = fluid.layers.elementwise_add(pc2_xyz_expand, pc3_xyz_reshaped1)
            pc3_xyz_reshaped2 = fluid.layers.reshape(pc3_xyz, [-1, self.crop_point_num, 3], inplace=False)

            return pc1_xyz, pc2_xyz_reshaped2, pc3_xyz_reshaped2  # center1, center2, fine

Training

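Next come the training details. First, the input partial point cloud must be sampled into three point clouds of different resolutions, for which IFPS is used. It is a very common sampling algorithm and is widely used in 3D vision because it samples the points evenly.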
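To see why farthest point sampling spreads its picks evenly, it can help to trace it on a handful of points. The following is a minimal single-cloud sketch in plain Python, not the project's batched version; the function name `farthest_point_sample`, the `start` parameter and the five toy points are assumptions made for illustration.

```python
def farthest_point_sample(points, num_samples, start=0):
    """Return the indices of num_samples points chosen by farthest point sampling."""
    # Squared distance from every point to its nearest already-selected point.
    nearest = [float("inf")] * len(points)
    selected = []
    current = start
    for _ in range(num_samples):
        selected.append(current)
        cx, cy, cz = points[current]
        for i, (x, y, z) in enumerate(points):
            d = (x - cx) ** 2 + (y - cy) ** 2 + (z - cz) ** 2
            if d < nearest[i]:
                nearest[i] = d
        # Next pick: the point farthest from everything selected so far.
        current = max(range(len(points)), key=nearest.__getitem__)
    return selected


# Five points on a line; two of them sit very close to an endpoint.
points = [(0.0, 0.0, 0.0), (0.1, 0.0, 0.0), (1.0, 0.0, 0.0),
          (0.5, 0.0, 0.0), (0.9, 0.0, 0.0)]
indices = farthest_point_sample(points, 3)
```

Starting from index 0, the sampler picks the far endpoint (index 2) and then the midpoint (index 3), so `indices` is `[0, 2, 3]`; the near-duplicates at 0.1 and 0.9 are skipped.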

The batched NumPy implementation used in this project is as follows:

def farthest_point_sample_numpy(xyz, npoint, RAN=True):

B, N, C = xyz.shape

centroids = np.zeros((B, npoint))

distance = np.ones((B, N)) * 1e10

if RAN:

farthest = np.random.randint(low=0, high=1, size=(B,))

else:

farthest = np.random.randint(low=1, high=2, size=(B,))

batch_indices = np.arange(start=0, stop=B)

for i in range(npoint):

centroids[:, i] = farthest

centroid = xyz[batch_indices, farthest, :].view().reshape(B, 1, 3)

dist = np.sum((xyz - centroid) ** 2, -1)

mask = dist < distance

distance[mask] = dist[mask]

farthest = np.argmax(distance, -1)

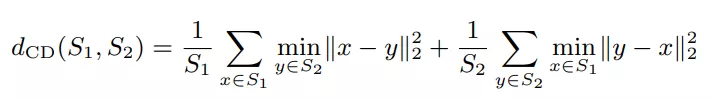

return centroids.astype('int64') 接着需要定义损失函数,这里使用chamfer distance (CD)作为loss,其定义如下:

其中和为两组点云,具体实现如下:
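As a quick way to check the definition by hand, here is a small pure-Python sketch. It assumes the squared-Euclidean, mean-over-points form of the distance, which is what the PaddlePaddle code in this article computes; the names `directed_distance` and `chamfer` and the toy clouds are illustrative only.

```python
def directed_distance(source, target):
    """Mean over source points of the squared distance to the nearest target point."""
    total = 0.0
    for sx, sy, sz in source:
        total += min(
            (sx - tx) ** 2 + (sy - ty) ** 2 + (sz - tz) ** 2
            for tx, ty, tz in target
        )
    return total / len(source)


def chamfer(cloud_a, cloud_b):
    """Symmetric Chamfer distance: the sum of the two directed terms."""
    return directed_distance(cloud_a, cloud_b) + directed_distance(cloud_b, cloud_a)


cloud_a = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
cloud_b = [(0.0, 0.0, 0.0), (3.0, 0.0, 0.0)]
cd = chamfer(cloud_a, cloud_b)
```

Here the two directed terms are 0.5 (from `cloud_a` to `cloud_b`) and 2.0 (from `cloud_b` to `cloud_a`), so `cd` is 2.5. The terms differ because each cloud is averaged over its own points, which is why the two directions are worth reporting separately. The PaddlePaddle version that follows computes the same quantity with tensor operations and averages it over the batch.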

    def chamfer_distance(array1, array2):
        # array1, array2: [batch, num_point, num_features]
        batch_size, num_point, num_features = array1.shape
        dist = 0
        for i in range(batch_size):
            av_dist1 = array2samples_distance(array1[i], array2[i])
            av_dist2 = array2samples_distance(array2[i], array1[i])
            dist = dist + (av_dist1 + av_dist2) / batch_size
        return dist


    def array2samples_distance(array1, array2):
        # Both arrays are assumed to hold the same number of points.
        num_point, num_features = array1.shape
        # Build all point pairs by tiling array1 and repeating array2.
        expanded_array1 = fluid.layers.expand(array1, [num_point, 1])
        array2_expand = fluid.layers.unsqueeze(array2, [1])
        expanded_array2 = fluid.layers.expand(array2_expand, [1, num_point, 1])
        expanded_array2_reshaped = fluid.layers.reshape(expanded_array2, [-1, num_features], inplace=False)
        # Squared Euclidean distance of every pair.
        distances = (expanded_array1 - expanded_array2_reshaped) * (expanded_array1 - expanded_array2_reshaped)
        distances = fluid.layers.reduce_sum(distances, dim=1)
        distances_reshaped = fluid.layers.reshape(distances, [num_point, num_point])
        # For each point of array2, the distance to its nearest point in array1.
        distances = fluid.layers.reduce_min(distances_reshaped, dim=1)
        distances = fluid.layers.mean(distances)
        return distances

Next, the dataset needs to be prepared. The ShapeNet_part dataset is used to train and test the network, and the data is read through fluid.io.DataLoader.from_generator. See the code for details:

    dset = PartDataset(root=root, classification=True, class_choice=None, num_point=opt.pnum, mode='train')
    train_loader = fluid.io.DataLoader.from_generator(capacity=10, iterable=True)
    train_loader.set_sample_list_generator(dset.get_reader(opt.batchSize), places=place)

Experimental results

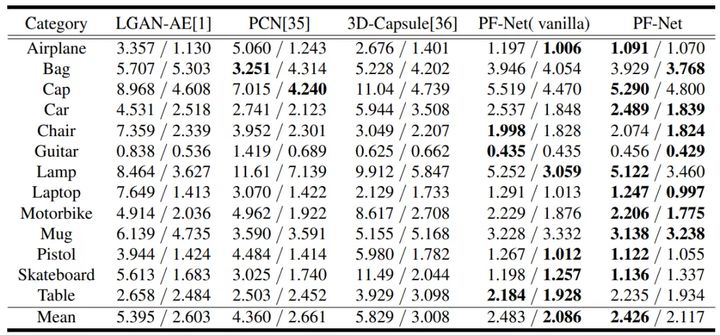

原文提出了两种训练方法,一种是直接使用各个分辨率的ground truth与生成的补齐点云计算CD作为loss,称为FP-Net (vanilla);另一种在此基础上训练一个判别器(discriminator)作为adversarial loss,称为FP-Net。对于补齐网络部分的loss,其结果如下。与PCN, LGAN-AE和3D-Capsule相比,PF-Net整体达到了state-of-the-art。

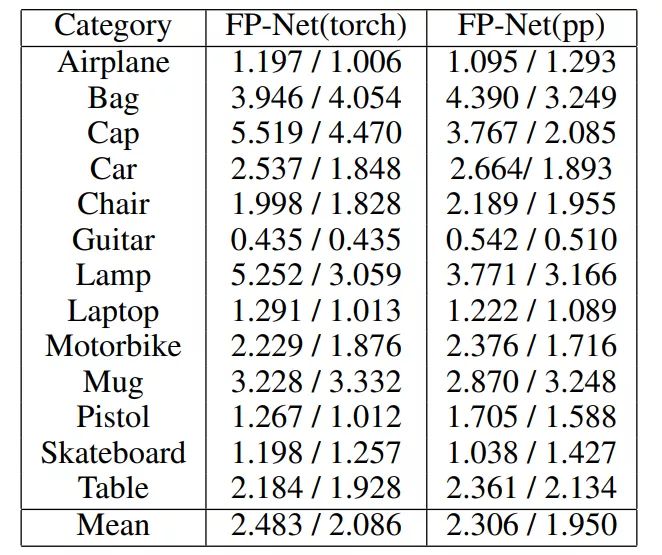

由于时间关系,本项目实现了PF-Net (vanilla)部分的代码。复现结果如下:

其中,第一列为预测到GT的CD,第二列为GT到预测的CD。可以看到PaddlePaddle(pp)复现模型与Pytorch(torch)模型表现相当。

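Reflections

For this reproduction camp, I chose a 3D vision paper based on my own research interests. It was a challenge, since this was my first time reproducing a paper. I took a fairly cautious approach, reproducing the network, the dataset preparation, the loss function and the other modules one by one. Each component was checked for numerical agreement against the PyTorch source, and I moved on only after it was confirmed correct. Fortunately, PaddlePaddle already provides almost all of the layers and functions needed, which made the work considerably easier. Because of limited time, some things such as multi-threaded training are not yet implemented, and I will continue exploring PaddlePaddle.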

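If you run into problems while using it, you can join the official PaddlePaddle QQ group to discuss them: 1108045677.

To learn more about PaddlePaddle, see the following resources.

· PaddlePaddle official website

https://www.paddlepaddle.org.cn/

· PaddlePaddle open-source framework repository

GitHub: https://github.com/PaddlePadd...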