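Testing inside the Docker environment is inconvenient, so I set up the SDK environment directly on my local machine. The models tested are:

resnet34 (ONNX)

ssdlite_mobilenet_v2 (TensorFlow object detection)

squeezenet (TFLite)

Nonlinear data fitting (pending)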

1. SDK environment setup

Reference: the installation section of AI610-DOC-1002-r0p0-eac0/Zhouyi_Compass_r0p0-00eac0_ReleaseNote.pdf

conda create -n r329 python=3.6
source activate r329
cd AI610-SDK-1003-r0p0-eac0
./pip_install.sh
./env_setup.sh

Alternatively, add the same environment settings to ~/.bashrc directly.
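You may want to confirm that the SDK tools are on PATH, whichever way the environment was set up. The sketch below is a minimal helper for that. It only uses the four tool names whose `--version` output env_setup.sh prints in the setup log. The helper names are hypothetical. It uses `subprocess.run` keyword arguments that also work on the Python 3.6 conda environment created above.

```python
import shutil
import subprocess

# The four tools whose versions env_setup.sh prints in the setup log
SDK_TOOLS = ["aipu_simulator_z1", "aipu_simulator_z2", "aipucc", "aipudbg"]


def missing_tools(names):
    """Return the names that cannot be found on PATH."""
    return [name for name in names if shutil.which(name) is None]


def print_versions(names):
    """Run `<tool> --version` for each tool on PATH and print the first output line."""
    for name in names:
        if shutil.which(name) is None:
            print("{}: not found on PATH".format(name))
            continue
        result = subprocess.run([name, "--version"], stdout=subprocess.PIPE,
                                stderr=subprocess.STDOUT, universal_newlines=True)
        lines = result.stdout.strip().splitlines()
        print("{}: {}".format(name, lines[0] if lines else "(no output)"))


if __name__ == "__main__":
    print_versions(SDK_TOOLS)
```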

Log printed once setup completes:

Total errors: 0, warnings: 0
+ aipu_simulator_z1 --version
1.1.0
+ aipu_simulator_z2 --version
2.1.0
+ aipucc --version
1.5.0
+ aipudbg --version
1.2.0
+ echo 'env setup done'
env setup done

2. resnet34 test

2.0 Original model file

2.1 Calibration set
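The cfg files in this post point `calibration_data` and `calibration_label` at `./dataset/dataset.npy` and `./dataset/label.npy` (see the ssdlite_mobilenet_v2 cfg in section 3). Below is a minimal sketch for packing a calibration set into files like these. It makes several assumptions:

- The images are already resized and normalized.
- Each image's shape matches the model's `input_shape`, for example NHWC `[1, 224, 224, 3]` as in the ssdlite cfg.
- Each image has one integer class id.

The layout, dtype and preprocessing that the Compass quantizer actually expects are not confirmed here. Check them against the programming guide. The function names are hypothetical.

```python
import numpy as np


def make_calibration_arrays(images, labels):
    """Stack preprocessed images into an [N, H, W, C] float32 array plus an int label vector."""
    data = np.stack([np.asarray(img, dtype=np.float32) for img in images])
    label = np.asarray(labels, dtype=np.int64)
    if data.shape[0] != label.shape[0]:
        raise ValueError("expected exactly one label per image")
    return data, label


def save_calibration_set(images, labels, data_path="dataset.npy", label_path="label.npy"):
    """Write both arrays with np.save, e.g. to ./dataset/dataset.npy and ./dataset/label.npy."""
    data, label = make_calibration_arrays(images, labels)
    np.save(data_path, data)
    np.save(label_path, label)
    return data.shape, label.shape
```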

2.2 cfg files

onnx_resnet_34_build.cfg

onnx_resnet_34_run.cfg

2.3 Simulator results

Model quantization:

aipubuild config/onnx_resnet_34_build.cfg

Model inference:

aipubuild config/onnx_resnet_34_run.cfg

Result comparison:

(r329) mod@archlinux resnet34 git:[resnet34] $ python3 compare_class.py
[TEST FAIL] Class Check FAILED! Class number for output is 722, Class number for ref is 230.
You detect ping-pong ball, but you should detect Shetland sheepdog, Shetland sheep dog, Shetland from ref label.
Detect picture save to result.jpeg
Show input picture...
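The `compare_class.py` check above compares the top-1 class of the simulator output with a reference class. Its source is not shown here. The sketch below is a hypothetical NumPy equivalent of such a top-1 comparison. It assumes the simulator writes the raw output tensor to a binary file that `np.fromfile` can read. It also assumes int8 elements, though the real output could be uint8 or another type.

```python
import numpy as np


def read_output(path, dtype=np.int8):
    """Load a raw simulator output dump as a flat array (the dtype is an assumption)."""
    return np.fromfile(path, dtype=dtype)


def compare_top1(output, reference):
    """Compare classes; `reference` may be a class id or a score vector.

    Returns (passed, output_class, reference_class).
    """
    out_cls = int(np.argmax(output))
    if np.ndim(reference) == 0:
        ref_cls = int(reference)
    else:
        ref_cls = int(np.argmax(reference))
    return out_cls == ref_cls, out_cls, ref_cls
```

For example, `compare_top1(read_output("output.bin"), 230)` would reproduce the check in the log. Here `output.bin` is a placeholder for whatever file the run cfg writes.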

3. ssdlite_mobilenet_v2 test

Reference: AI610-DOC-1001-r0p0-eac0/Zhouyi_Compass_Software_Programming_Guide_61010011_0200_00_en.pdf

3.1 cfg files

build:

[Common]
mode = build

[Parser]
model_type = TensorFlow
model_name = ssdlite_mobilenet_v2
detection_postprocess = SSD
model_domain = object_detection
input_model = ./model/ssdlite_mobilenet_v2.pb
input = image_tensor
input_shape = [1, 224, 224, 3]
output = detection_boxes
output_dir = ./

[AutoQuantizationTool]
quantize_method = SYMMETRIC
quant_precision = int8
ops_per_channel = DepthwiseConv
reverse_rgb = False
label_id_offset =
dataset_name =
detection_postprocess = SSD
anchor_generator = MULTIPLE_GRID
log = True
calibration_data = ./dataset/dataset.npy
calibration_label = ./dataset/label.npy

[GBuilder]
outputs = aipu.bin
target = Z1_0701
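The `[AutoQuantizationTool]` settings request symmetric int8 quantization (`quantize_method = SYMMETRIC`, `quant_precision = int8`). They presumably also request per-channel scales for depthwise convolutions (`ops_per_channel = DepthwiseConv`). The general idea can be sketched in NumPy as below.

This is an illustration only, not the Compass implementation. It estimates ranges with a plain max-abs rule, whereas the AQT log below reports `calibration_method:MEAN`, so the tool's own range estimation differs. The function names are hypothetical.

```python
import numpy as np

QMAX = 127  # symmetric int8 range is [-127, 127]


def symmetric_scales(x, axis=None, qmax=QMAX):
    """Per-tensor (axis=None) or per-channel (axis=channel dim) scale from max |x|."""
    x = np.asarray(x, dtype=np.float32)
    if axis is None:
        max_abs = np.max(np.abs(x))
    else:
        reduce_axes = tuple(i for i in range(x.ndim) if i != axis % x.ndim)
        max_abs = np.max(np.abs(x), axis=reduce_axes, keepdims=True)
    # Guard against all-zero tensors/channels so we never divide by zero
    return np.where(max_abs > 0, max_abs / qmax, 1.0)


def quantize(x, scale, qmax=QMAX):
    """Divide by the scale, round to nearest, and clip to the signed int8 range."""
    q = np.round(np.asarray(x, dtype=np.float32) / scale)
    return np.clip(q, -qmax, qmax).astype(np.int8)


def dequantize(q, scale):
    """Map int8 codes back to float; inside the calibrated range the error is at most scale / 2."""
    return q.astype(np.float32) * scale
```

Per-channel scales help when channels have very different ranges. A single per-tensor scale would force small channels onto only a few int8 codes.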

run:

[Common]
mode = run

[Parser]
model_type = tflite
model_name = mobilenet_v2
detection_postprocess =
model_domain = image_classification
input_model = ./model/model.tflite
input = input
input_shape = [1, 224, 224, 3]
output = MobilenetV2/Predictions/Softmax
output_dir = ./

[AutoQuantizationTool]
quantize_method = SYMMETRIC
quant_precision = int8
ops_per_channel = DepthwiseConv
reverse_rgb = False
label_id_offset =
dataset_name =
detection_postprocess =
anchor_generator =
log = False
calibration_data = ./dataset/dataset.npy
calibration_label = ./dataset/label.npy

[GBuilder]
inputs=./model/input.bin
simulator=aipu_simulator_z1
outputs=output_ssdlite_mobilenet_v2.bin
profile= True
target=Z1_0701
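The `[Parser]` sections of the build and run cfg files above differ. For example, the build cfg has `model_type = TensorFlow` with `ssdlite_mobilenet_v2.pb`, while the run cfg has `model_type = tflite` with `model.tflite`. This is worth double-checking if both stages are meant to use the same model.

The files are INI-style, so Python's standard `configparser` can list such differences. Below is a small sketch with hypothetical helper names. Interpolation is turned off so values are read literally.

```python
import configparser


def parse_cfg(text):
    """Parse an INI-style cfg string; interpolation is off so values are taken literally."""
    parser = configparser.ConfigParser(interpolation=None)
    parser.read_string(text)
    return parser


def diff_section(a, b, section):
    """Return {key: (value_in_a, value_in_b)} for keys in `section` whose values differ."""
    sec_a = a[section] if a.has_section(section) else {}
    sec_b = b[section] if b.has_section(section) else {}
    keys = sorted(set(sec_a) | set(sec_b))
    return {k: (sec_a.get(k), sec_b.get(k)) for k in keys if sec_a.get(k) != sec_b.get(k)}
```

Usage: read each file with `open(path).read()`, pass the text to `parse_cfg`, then call `diff_section(build, run, "Parser")`. The build file is `config/ssdlite_mobilenet_v2_build.cfg`. The run cfg's file name is not given above, so supply your own path.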

Model quantization:

aipubuild config/ssdlite_mobilenet_v2_build.cfg

The following operators are not supported (see Zhouyi_Compass_Operators_Specification_Application_Note_61010017_001_en.pdf):

[I] [Parser]: Parser done!
[I] Parse model complete
[I] Quantizing model....
[I] AQT start: model_name:ssdlite_mobilenet_v2, calibration_method:MEAN, batch_size:1
[I] ==== read ir ================
[I] float32 ir txt: /tmp/AIPUBuilder_1626345645.0744958/ssdlite_mobilenet_v2.txt
[I] float32 ir bin2: /tmp/AIPUBuilder_1626345645.0744958/ssdlite_mobilenet_v2.bin
[E] unsupported op: Merge
[E] unsupported op: Exit
[E] unsupported op: TensorArraySizeV3
[E] unsupported op: Range
[E] unsupported op: TensorArrayGatherV3

4. squeezenet

4.0 Model

4.1 Quantization calibration data

4.2 config

Simulation results

(r329) mod@archlinux suqeezenet git:[squeezenet] $ python3 quant_predict.py
predict first 5 label:
index 1000, prob 0, name: ear
index 328, prob 0, name: lycaenid
index 341, prob 0, name: sorrel
index 340, prob 0, name: guinea pig
index 339, prob 0, name: beaver
true first 5 label:
index 232, prob 83, name: Shetland sheepdog
index 231, prob 83, name: Old English sheepdog
index 158, prob 41, name: Blenheim spaniel
index 170, prob 40, name: redbone
index 161, prob 39, name: Rhodesian ridgeback
Detect picture save to result.jpeg

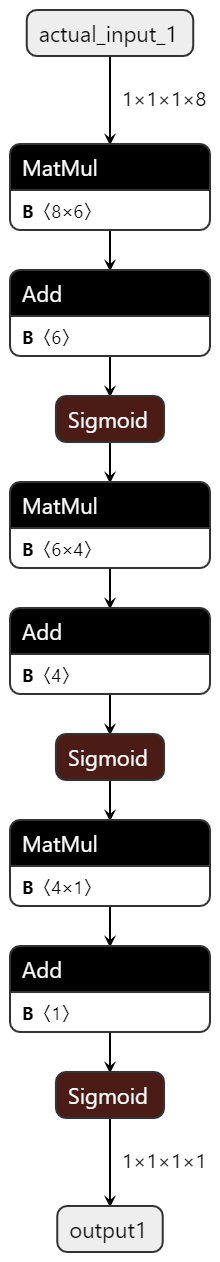
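`quant_predict.py` prints the five highest entries of the predicted and reference outputs. Its source is not shown. The sketch below is a hypothetical NumPy version of the same kind of top-5 readout. It assumes the output has already been loaded as a flat array of class scores.

Scores are cast to float64 before negation, so int8 outputs such as -128 cannot overflow. The extra `all_equal` check flags a degenerate result such as the `predict` list above, where every listed prob is 0.

```python
import numpy as np


def top_k(scores, k=5):
    """Return the k highest-scoring (index, score) pairs, best first; ties keep index order."""
    scores = np.asarray(scores).ravel()
    order = np.argsort(-scores.astype(np.float64), kind="stable")[:k]
    return [(int(i), scores[i].item()) for i in order]


def all_equal(scores):
    """True when every score is identical, e.g. an all-zero output buffer."""
    scores = np.asarray(scores).ravel()
    return bool(np.all(scores == scores[0]))
```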

5. Nonlinear data fitting (sigmoid)

Reference: AI610-SDK-1003-r0p0-eac0\customized-op-example

The test model is as follows:
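Written out, the network in the code below has three fully connected layers, each followed by a sigmoid. It is trained with mean binary cross-entropy over the $N$ rows of the dataset. `torch.nn.Linear(in, out)` stores a weight of shape out × in, which gives the matrix sizes shown.

$$
\begin{aligned}
h_1 &= \sigma(W_1 x + b_1), && W_1 \in \mathbb{R}^{6\times 8},\ b_1 \in \mathbb{R}^{6} \\
h_2 &= \sigma(W_2 h_1 + b_2), && W_2 \in \mathbb{R}^{4\times 6},\ b_2 \in \mathbb{R}^{4} \\
\hat{y} &= \sigma(W_3 h_2 + b_3), && W_3 \in \mathbb{R}^{1\times 4},\ b_3 \in \mathbb{R} \\
\sigma(z) &= \frac{1}{1 + e^{-z}} \\
\mathcal{L} &= -\frac{1}{N} \sum_{i=1}^{N} \left[ y_i \log \hat{y}_i + (1 - y_i) \log (1 - \hat{y}_i) \right]
\end{aligned}
$$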

Code that trains the model and exports it to ONNX:

import numpy as np
import torch

# Each row of diabetes.csv: 8 feature columns followed by a 0/1 label
xy = np.loadtxt('./diabetes.csv', delimiter=',', dtype=np.float32)

# x_data: every column except the last (features); y_data: the last column (label)
x_data = torch.from_numpy(xy[:, 0:-1])
y_data = torch.from_numpy(xy[:, [-1]])

print(xy.shape)
print(x_data.shape)
print(y_data.shape)


class Model(torch.nn.Module):
    def __init__(self):
        super(Model, self).__init__()
        self.l1 = torch.nn.Linear(8, 6)
        self.l2 = torch.nn.Linear(6, 4)
        self.l3 = torch.nn.Linear(4, 1)
        self.sigmoid = torch.nn.Sigmoid()

    def forward(self, x):
        x = self.sigmoid(self.l1(x))
        x = self.sigmoid(self.l2(x))
        y_pred = self.sigmoid(self.l3(x))
        return y_pred


# our model
model = Model()
# print(model)

criterion = torch.nn.BCELoss(reduction='mean')
optimizer = torch.optim.SGD(model.parameters(), lr=0.01)

# Train: full-batch gradient descent over the whole dataset
for epoch in range(100000):
    y_pred = model(x_data)
    loss = criterion(y_pred, y_data)
    optimizer.zero_grad()
    loss.backward()
    optimizer.step()
    if epoch % 5000 == 0:
        print("loss ", epoch, loss.item())

# Test the model on two rows of the dataset (the trailing value in each comment is the true label)
with torch.no_grad():
    # -0.294118,0.487437,0.180328,-0.292929,0,0.00149028,-0.53117,-0.0333333, 0
    h_our = torch.tensor([[-0.294118, 0.487437, 0.180328, -0.292929, 0, 0.00149028, -0.53117, -0.0333333]])
    print("predict : ", h_our[0], model(h_our)[0][0])

    # -0.882353,-0.145729,0.0819672,-0.414141,0,-0.207153,-0.766866,-0.666667, 1
    h_our = torch.tensor([[-0.882353, -0.145729, 0.0819672, -0.414141, 0, -0.207153, -0.766866, -0.666667]])
    print("predict : ", h_our[0], model(h_our)[0][0])

# Export the network to ONNX. Linear acts on the last dimension, so a 4-D dummy input of shape (1, 1, 1, 8) works.
dummy_input = torch.randn(1, 1, 1, 8)
input_names = ["actual_input_1"]
output_names = ["output1"]
torch.onnx.export(model, dummy_input, "logistic.onnx", verbose=True,
                  input_names=input_names, output_names=output_names)

# Output of the two test predictions:
# predict : tensor([-0.2941, 0.4874, 0.1803, -0.2929, 0.0000, 0.0015, -0.5312, -0.0333]) tensor(0.3109)
# predict : tensor([-0.8824, -0.1457, 0.0820, -0.4141, 0.0000, -0.2072, -0.7669, -0.6667]) tensor(0.9757)
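For a model this small, a NumPy re-implementation of `Model.forward` gives an independent float reference to compare with simulator output. The sketch below assumes the weights are taken from `model.state_dict()` as a dict of arrays keyed `l1.weight`, `l1.bias` through `l3.bias`; the dict format is this sketch's own choice. Each layer computes `x @ W.T + b`, which is what `torch.nn.Linear` does.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def forward(x, params):
    """Reproduce Model.forward: three Linear layers (x @ W.T + b), each followed by a sigmoid."""
    h = np.asarray(x, dtype=np.float64)
    for name in ("l1", "l2", "l3"):
        w = np.asarray(params[name + ".weight"], dtype=np.float64)
        b = np.asarray(params[name + ".bias"], dtype=np.float64)
        h = sigmoid(h @ w.T + b)
    return h
```

Usage: build the weights with `params = {k: v.cpu().numpy() for k, v in model.state_dict().items()}`. Then `forward(h_our.numpy(), params)` should match the PyTorch predictions up to floating-point rounding. The same call works on the 4-D `(1, 1, 1, 8)` input shape used for export.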