Running an MNIST neural network on the XR806 with ncnn

0x0 Introducing the XR806 and ncnn

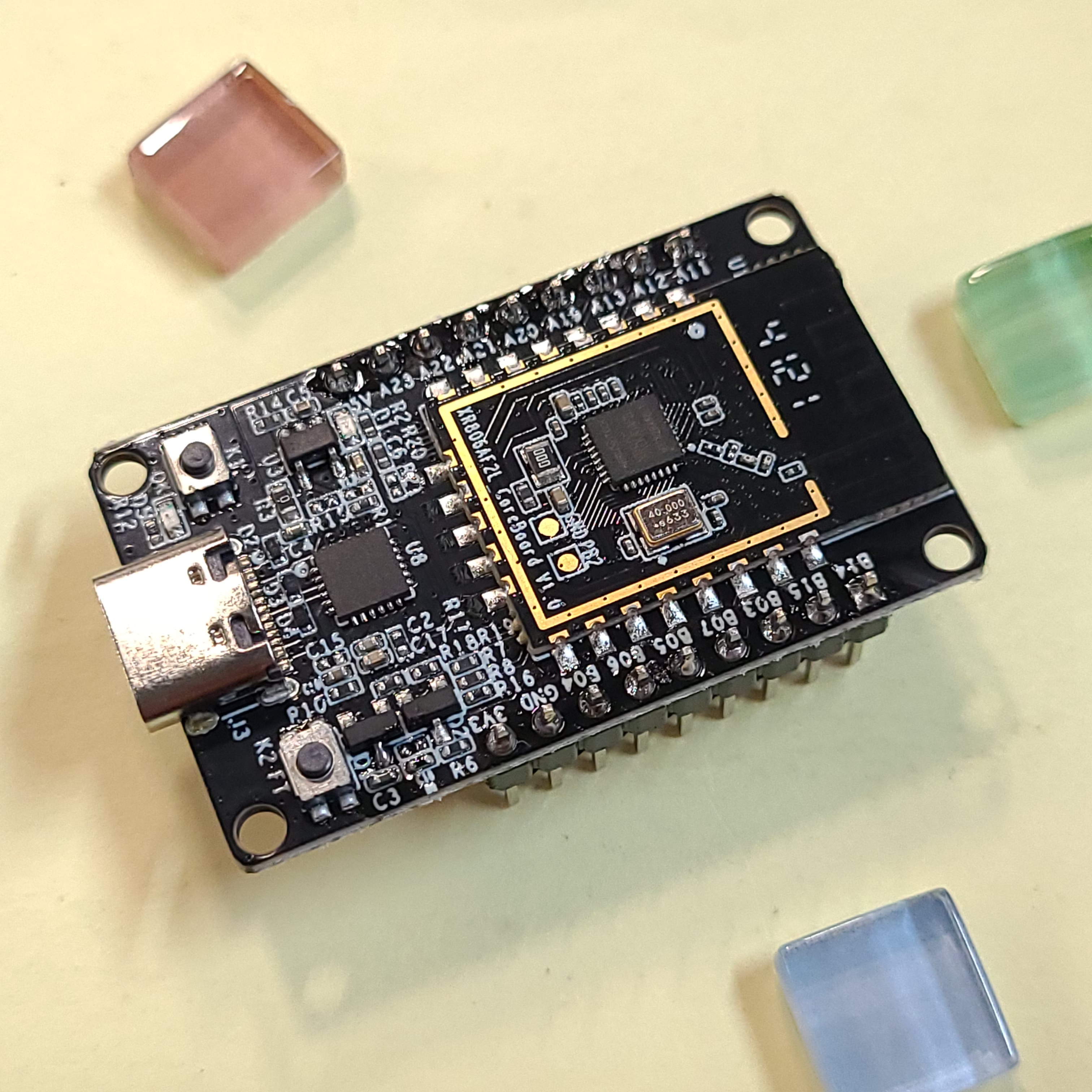

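The XR806 is a highly integrated wireless MCU with WiFi and BLE support, designed by Xradio (广州芯之联), a subsidiary of Allwinner Technology. It supports HarmonyOS (鸿蒙) L0 systems.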

https://github.com/Tencent/ncnn

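ncnn is Tencent's open-source, high-performance neural network inference framework. It has no third-party dependencies, is cross-platform and highly portable, and can load models with zero copy to save memory.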

https://aijishu.com/e/1120000...

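Another free dev board to play with, courtesy of the 极术社区 (aijishu) community!

This time the goal is to port ncnn to this MCU.

A free board earns a port, just like the esp32c3 that Espressif sent over ^^:D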

0x1 Environment setup, build, and flashing

Set up a proxy

export http_proxy="http://127.0.0.1:4567"

export https_proxy="http://127.0.0.1:4567"

Download the repo tool and add it to PATH

mkdir -p bin

curl https://storage.googleapis.com/git-repo-downloads/repo > bin/repo

chmod a+rx bin/repo

PATH="`pwd`/bin:$PATH"

Download the hb tool and add it to PATH

pip install --user ohos-build

PATH="$HOME/.local/bin:$PATH"

Download the OpenHarmony source

Occasionally this fails with error.GitError: manifest rev-list ('^e263a2d3e4557381c32fa31ce24384048d07d682', 'HEAD', '--'): fatal: bad object HEAD. Retrying a few times gets past it.

repo init -u https://gitee.com/openharmony-sig/manifest.git -b OpenHarmony_1.0.1_release --no-repo-verify -m devboard_xr806.xml

repo sync -c

repo forall -c 'git lfs pull'

Download the ARM toolchain

wget -c https://developer.arm.com/-/media/Files/downloads/gnu-rm/10-2020q4/gcc-arm-none-eabi-10-2020-q4-major-x86_64-linux.tar.bz2

tar -xf gcc-arm-none-eabi-10-2020-q4-major-x86_64-linux.tar.bz2

Update the toolchain paths

Do not use the latest toolchain release; the build fails with it.

sed -i "s@~/tools/gcc-arm-none-eabi-10-2020-q4-major/bin/arm-none-eabi-@`pwd`/gcc-arm-none-eabi-10-2020-q4-major/bin/arm-none-eabi-@g" device/xradio/xr806/liteos_m/config.gni

sed -i "s@~/tools/gcc-arm-none-eabi-10-2020-q4-major/bin@`pwd`/gcc-arm-none-eabi-10-2020-q4-major/bin@g" device/xradio/xr806/xr_skylark/gcc.mk

Fix SDKconfig.gni generation and the copy script

sed -i "s@open('\.{0}/@open('{0}/@g" device/xradio/xr806/xr_skylark/config.py

sed -i "s@open('\.{0}/@open('{0}/@g" device/xradio/xr806/libcopy.py

Build the default firmware

In menuconfig, just choose EXIT; wifi_skylark is selected in a later step.

cd device/xradio/xr806/xr_skylark

cp project/demo/audio_demo/gcc/defconfig .config

make build_clean

make menuconfig

make lib -j

cd -

hb set

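hb build -f

Flash the firmware

Connect the XR806 board over USB. A /dev/ttyUSB0 device appears (the number may differ if several devices are attached).

Open device/xradio/xr806/xr_skylark/tools/settings.ini and set the firmware path; the default is strImagePath = ../out/xr_system.img. Leave iBaud at its default, iBaud = 921600.

Run the phoenixMC tool as root to start flashing.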

cd device/xradio/xr806/xr_skylark/tools

su

./phoenixMC

View the serial output

Run tio as root, adding the -m INLCRNL option so that \n is mapped to \r\n and the output doesn't get garbled.

su

tio -m INLCRNL /dev/ttyUSB0

Press the reset button on the XR806 (the button to the right of the USB port); tio then prints the board's output.
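The -m INLCRNL mapping mentioned above amounts to inserting a carriage return before every newline the board sends. A minimal sketch of that transformation, where `map_nl_to_crnl` is a hypothetical helper written for illustration and not part of tio:

```cpp
#include <string>

// Sketch of an NL -> CR NL mapping: every '\n' in the input becomes "\r\n".
// `map_nl_to_crnl` is a made-up name used only for this illustration.
static std::string map_nl_to_crnl(const std::string& in)
{
    std::string out;
    out.reserve(in.size());
    for (char c : in)
    {
        if (c == '\n')
            out += '\r'; // return the cursor to column 0 before the line feed
        out += c;
    }
    return out;
}
```

Without the carriage return, each new line would start at the column where the previous one ended, which is the staircase effect the option avoids.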

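0x2 Building hello world

Open device/xradio/xr806/BUILD.gn and enable deps += "ohosdemo:ohosdemo"

Open device/xradio/xr806/ohosdemo/BUILD.gn and enable deps = "hello_demo:app_hello"

Repeat the default-firmware build from the previous section; the serial port then prints hello world once per second.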

0x3 Trimming and building ncnn

Configure the cmake toolchain

Download the latest ncnn source

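git clone https://github.com/Tencent/ncnn.git

Create xr806.toolchain.cmake in the toolchains directory and set the toolchain directory and compiler paths in it.

To keep architecture-specific code out of the build, the processor is set to xtensa, even though the compile flags target a Cortex-M33.

From the device/xradio/xr806/liteos_m/SDKconfig.gni generated earlier, copy the useful target compile flags and make them the default flags.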

set(CMAKE_SYSTEM_NAME Generic)

set(CMAKE_SYSTEM_PROCESSOR xtensa)

set(CMAKE_C_COMPILER "/home/nihui/osd/xr806/gcc-arm-none-eabi-10-2020-q4-major/bin/arm-none-eabi-gcc")

set(CMAKE_CXX_COMPILER "/home/nihui/osd/xr806/gcc-arm-none-eabi-10-2020-q4-major/bin/arm-none-eabi-g++")

set(CMAKE_FIND_ROOT_PATH "/home/nihui/osd/xr806/gcc-arm-none-eabi-10-2020-q4-major/arm-none-eabi")

set(CMAKE_TRY_COMPILE_TARGET_TYPE STATIC_LIBRARY)

set(CMAKE_FIND_ROOT_PATH_MODE_PROGRAM NEVER)

set(CMAKE_FIND_ROOT_PATH_MODE_LIBRARY ONLY)

set(CMAKE_FIND_ROOT_PATH_MODE_INCLUDE ONLY)

set(CMAKE_FIND_ROOT_PATH_MODE_PACKAGE ONLY)

set(CMAKE_C_FLAGS "-mcpu=cortex-m33 -mtune=cortex-m33 -march=armv8-m.main+dsp -mfpu=fpv5-sp-d16 -mfloat-abi=softfp -mcmse -mthumb -fno-common")

set(CMAKE_CXX_FLAGS "-mcpu=cortex-m33 -mtune=cortex-m33 -march=armv8-m.main+dsp -mfpu=fpv5-sp-d16 -mfloat-abi=softfp -mcmse -mthumb -fno-common")

# cache flags

set(CMAKE_C_FLAGS "${CMAKE_C_FLAGS}" CACHE STRING "c flags")

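set(CMAKE_CXX_FLAGS "${CMAKE_CXX_FLAGS}" CACHE STRING "c++ flags")

Trim ncnn features to shrink the binary

Following the documentation at https://github.com/Tencent/nc..., append the ncnn cmake build options to xr806.toolchain.cmake.

Remove bf16, int8, image processing, loading models from files, multithreading, platform-specific features, the C API, and C++ RTTI and exceptions.

Remove the operators that the mnist model doesn't use.

Enable NCNN_SIMPLESTL so that C++ STL features can be used on the XR806, which has no C++ STL.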

option(NCNN_INSTALL_SDK "" ON)

option(NCNN_PIXEL_ROTATE "" OFF)

option(NCNN_PIXEL_AFFINE "" OFF)

option(NCNN_PIXEL_DRAWING "" OFF)

option(NCNN_BUILD_BENCHMARK "" OFF)

option(NCNN_BUILD_TESTS "" OFF)

option(NCNN_BUILD_TOOLS "" OFF)

option(NCNN_BUILD_EXAMPLES "" OFF)

option(NCNN_DISABLE_RTTI "" ON)

option(NCNN_DISABLE_EXCEPTION "" ON)

option(NCNN_BF16 "" OFF)

option(NCNN_INT8 "" OFF)

option(NCNN_THREADS "" OFF)

option(NCNN_OPENMP "" OFF)

option(NCNN_STDIO "" OFF)

option(NCNN_STRING "" OFF)

option(NCNN_C_API "" OFF)

option(NCNN_PLATFORM_API "" OFF)

option(NCNN_RUNTIME_CPU "" OFF)

option(NCNN_SIMPLESTL "" ON)

option(WITH_LAYER_absval "" OFF)

option(WITH_LAYER_argmax "" OFF)

option(WITH_LAYER_batchnorm "" OFF)

option(WITH_LAYER_bias "" OFF)

option(WITH_LAYER_bnll "" OFF)

option(WITH_LAYER_concat "" OFF)

option(WITH_LAYER_convolution "" ON)

option(WITH_LAYER_crop "" OFF)

option(WITH_LAYER_deconvolution "" OFF)

option(WITH_LAYER_dropout "" OFF)

option(WITH_LAYER_eltwise "" OFF)

option(WITH_LAYER_elu "" OFF)

option(WITH_LAYER_embed "" OFF)

option(WITH_LAYER_exp "" OFF)

option(WITH_LAYER_flatten "" ON)

option(WITH_LAYER_innerproduct "" ON)

option(WITH_LAYER_input "" ON)

option(WITH_LAYER_log "" OFF)

option(WITH_LAYER_lrn "" OFF)

option(WITH_LAYER_memorydata "" ON)

option(WITH_LAYER_mvn "" OFF)

option(WITH_LAYER_pooling "" ON)

option(WITH_LAYER_power "" OFF)

option(WITH_LAYER_prelu "" OFF)

option(WITH_LAYER_proposal "" OFF)

option(WITH_LAYER_reduction "" OFF)

option(WITH_LAYER_relu "" ON)

option(WITH_LAYER_reshape "" ON)

option(WITH_LAYER_roipooling "" OFF)

option(WITH_LAYER_scale "" OFF)

option(WITH_LAYER_sigmoid "" OFF)

option(WITH_LAYER_slice "" OFF)

option(WITH_LAYER_softmax "" OFF)

option(WITH_LAYER_split "" ON)

option(WITH_LAYER_spp "" OFF)

option(WITH_LAYER_tanh "" OFF)

option(WITH_LAYER_threshold "" OFF)

option(WITH_LAYER_tile "" OFF)

option(WITH_LAYER_rnn "" OFF)

option(WITH_LAYER_lstm "" OFF)

option(WITH_LAYER_binaryop "" ON)

option(WITH_LAYER_unaryop "" OFF)

option(WITH_LAYER_convolutiondepthwise "" OFF)

option(WITH_LAYER_padding "" ON)

option(WITH_LAYER_squeeze "" OFF)

option(WITH_LAYER_expanddims "" OFF)

option(WITH_LAYER_normalize "" OFF)

option(WITH_LAYER_permute "" OFF)

option(WITH_LAYER_priorbox "" OFF)

option(WITH_LAYER_detectionoutput "" OFF)

option(WITH_LAYER_interp "" OFF)

option(WITH_LAYER_deconvolutiondepthwise "" OFF)

option(WITH_LAYER_shufflechannel "" OFF)

option(WITH_LAYER_instancenorm "" OFF)

option(WITH_LAYER_clip "" OFF)

option(WITH_LAYER_reorg "" OFF)

option(WITH_LAYER_yolodetectionoutput "" OFF)

option(WITH_LAYER_quantize "" OFF)

option(WITH_LAYER_dequantize "" OFF)

option(WITH_LAYER_yolov3detectionoutput "" OFF)

option(WITH_LAYER_psroipooling "" OFF)

option(WITH_LAYER_roialign "" OFF)

option(WITH_LAYER_packing "" ON)

option(WITH_LAYER_requantize "" OFF)

option(WITH_LAYER_cast "" ON)

option(WITH_LAYER_hardsigmoid "" OFF)

option(WITH_LAYER_selu "" OFF)

option(WITH_LAYER_hardswish "" OFF)

option(WITH_LAYER_noop "" OFF)

option(WITH_LAYER_pixelshuffle "" OFF)

option(WITH_LAYER_deepcopy "" OFF)

option(WITH_LAYER_mish "" OFF)

option(WITH_LAYER_statisticspooling "" OFF)

option(WITH_LAYER_swish "" OFF)

option(WITH_LAYER_gemm "" ON)

option(WITH_LAYER_groupnorm "" OFF)

option(WITH_LAYER_layernorm "" OFF)

option(WITH_LAYER_softplus "" OFF)

option(WITH_LAYER_gru "" OFF)

option(WITH_LAYER_multiheadattention "" OFF)

option(WITH_LAYER_gelu "" OFF)

option(WITH_LAYER_convolution1d "" OFF)

option(WITH_LAYER_pooling1d "" OFF)

option(WITH_LAYER_convolutiondepthwise1d "" OFF)

option(WITH_LAYER_convolution3d "" OFF)

option(WITH_LAYER_convolutiondepthwise3d "" OFF)

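option(WITH_LAYER_pooling3d "" OFF)

Trim the ncnn code further to shrink the binary

Open src/layer/fused_activation.h and delete every activation implementation except relu. This avoids calls to math functions such as exp and log, which are not available on the XR806.

Open src/layer/binaryop.cpp and delete every binary operation in forward except ADD, which shrinks the binaryop operator.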
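To make the effect of these two deletions concrete, here is a rough sketch of what is left once only relu and ADD survive. The function names and the "1 means relu" convention are assumptions made for this illustration; this is not ncnn's actual source.

```cpp
#include <cstddef>

// Hypothetical stand-in for a trimmed activation helper: only ReLU remains,
// so no call to exp(), log() or tanh() is ever emitted and libm is not needed.
// Assumption for this sketch: activation_type == 1 selects ReLU, anything else is a no-op.
static inline float activation_relu_only(float v, int activation_type)
{
    if (activation_type == 1 && v < 0.f)
        v = 0.f;
    return v;
}

// Hypothetical stand-in for a binary-op forward pass reduced to element-wise ADD.
static void binary_add(const float* a, const float* b, float* out, std::size_t n)
{
    for (std::size_t i = 0; i < n; i++)
        out[i] = a[i] + b[i];
}
```

Every code path removed this way is one less function for the linker to keep, and the math-free relu branch is what lets the library link on a target without exp or log.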

Build ncnn for the XR806

mkdir build-xr806

cd build-xr806

cmake -DCMAKE_TOOLCHAIN_FILE=../toolchains/xr806.toolchain.cmake -DCMAKE_BUILD_TYPE=Release ..

make -j4

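make install

The built ncnn library and headers land in build-xr806/install; libncnn.a is about 234KB.

0x4 Implementing MNIST handwritten digit recognition

Set up the C++ project

Modeled on the hello world demo above, create a new ncnn demo project and rename main.c to main.cpp.

Copy the build-xr806/install directory built earlier into ncnn_demo (the paths used later place it at src/ncnn).

In BUILD.gn, the newly added cflags_cc holds the C++ compile flags, and include_dirs gains the ncnn header directory.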

import("//device/xradio/xr806/liteos_m/config.gni")

static_library("app_ncnn") {

configs = []

sources = [

"src/main.cpp",

]

cflags = board_cflags

cflags_cc = board_cflags

include_dirs = board_include_dirs

include_dirs += [

"//kernel/liteos_m/kernel/arch/include",

"src/ncnn/include",

]

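}

Implement ncnn mnist

Following the tutorials at https://github.com/Tencent/ncnn, convert the trained model into an ncnn model.

Use ncnnoptimize to optimize the model structure, then ncnn2mem to convert the model into static arrays that can be embedded in the program.

Use the fp32 type so that the model can later be loaded by direct reference.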

ncnnoptimize mnist_1.param mnist_1.bin mnist_1_opt.param mnist_1_opt.bin 0

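ncnn2mem mnist_1_opt.param mnist_1_opt.bin mnist_1.id.h mnist_1_bin.h

Load the model by reference, using the program's rodata directly. Loading then allocates no additional heap memory, which suits a device with as little RAM as the XR806.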

#include "ncnn/benchmark.h"

#include "ncnn/cpu.h"

#include "ncnn/net.h"

#include "ncnn/mat.h"

#include "mnist_1_bin.h"

ncnn::Net net;

net.load_param(mnist_1_opt_param_bin);

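net.load_model(mnist_1_opt_bin);

Read the pixel data of a 28x28 grayscale image ahead of time and store it in a const unsigned char IN_IMG[] array.

Call the ncnn::get_current_time API before and after inference to get the current timestamp in ms and compute the inference time.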
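For intuition about the input step: building a Mat from PIXEL_GRAY data can be thought of as widening each 8-bit pixel to a float without rescaling, so the values stay in the 0..255 range. A minimal sketch of that idea, where `gray_to_float` is a hypothetical helper and ncnn's real memory layout is ignored:

```cpp
#include <cstddef>

// Conceptual sketch: widen each 8-bit gray pixel to a float, keeping the 0..255 range.
// `gray_to_float` is a made-up name for illustration, not an ncnn function.
static void gray_to_float(const unsigned char* pixels, float* out, std::size_t count)
{
    for (std::size_t i = 0; i < count; i++)
        out[i] = static_cast<float>(pixels[i]);
}
```

For a 28x28 image, count would be 784. The article's own inference code follows.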

ncnn::Mat in = ncnn::Mat::from_pixels(IN_IMG, ncnn::Mat::PIXEL_GRAY, 28, 28);

ncnn::Mat out;

double start = ncnn::get_current_time();

ncnn::Extractor ex = net.create_extractor();

ex.input(0, in);

ex.extract(17, out);

double end = ncnn::get_current_time();

float time_ms = end - start;

Print the 10-class output and pick the class with the highest score

int gussed = -1;

float guss_exp = -10000000;

for (int i = 0; i < 10; i++)

{

printf("%d: %.2f\n", i, out[i]);

if (out[i] > guss_exp)

{

gussed = i;

guss_exp = out[i];

}

}

printf("I think it is number %d!\n", gussed);

Wrap the code above in a function and call it from MainThread in main.cpp.
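One way the class-selection part of such a wrapper could look, as a sketch. `argmax_scores` is a hypothetical name, and unlike the loop above it seeds the running maximum from the first element instead of a fixed sentinel such as -10000000, so it also works when every score is very negative.

```cpp
// Hypothetical helper: returns the index of the largest of n scores,
// or -1 when n <= 0. On ties the first (lowest) index wins.
static int argmax_scores(const float* scores, int n)
{
    int best = -1;
    float best_score = 0.f;
    for (int i = 0; i < n; i++)
    {
        // The first element always becomes the initial maximum.
        if (best < 0 || scores[i] > best_score)
        {
            best = i;
            best_score = scores[i];
        }
    }
    return best;
}
```

With the ten output values copied into a plain float array, a call like argmax_scores(values, 10) returns the guessed digit.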

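0x5 Building firmware with ncnn mnist: new problems and workarounds

Link failure: operator new and other low-level C++ runtime pieces not found

The C++ support option in menuconfig doesn't actually help. Enabling the NCNN_SIMPLESTL option when building ncnn is all that is needed.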
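The missing symbols are the global allocation functions that every new and delete expression calls. As a rough sketch of what has to exist somewhere in the link, here they are forwarded to the C allocator. This assumes a build without exceptions and is an illustration, not ncnn's actual code.

```cpp
#include <cstddef>
#include <cstdlib>

// Sketch: global allocation functions forwarded to malloc/free.
// Assumption: exceptions are disabled, so a failed allocation yields a null
// pointer instead of throwing std::bad_alloc.
void* operator new(std::size_t size)
{
    if (size == 0)
        size = 1; // a zero-sized request must still return a unique pointer
    return std::malloc(size);
}

void* operator new[](std::size_t size)
{
    if (size == 0)
        size = 1;
    return std::malloc(size);
}

void operator delete(void* ptr) noexcept
{
    std::free(ptr);
}

void operator delete[](void* ptr) noexcept
{
    std::free(ptr);
}
```

Once definitions like these are linked in, from whatever source, expressions such as new int(42) resolve without a full C++ runtime library.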

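Link failure: math functions such as exp, pow, and log not found

There seems to be no libm, so simply delete the ncnn code that uses these functions. That is mainly sigmoid and the like in src/layer/fused_activation.h, which aren't needed because the model doesn't use them.

Link failure: ncnn symbols not found

libncnn.a isn't being linked. BUILD.gn is probably not written correctly, but I don't know how to write it properly, so I modified device/xradio/xr806/libcopy.py directly.

After the line APPlib = filenames_filter(FnList,'libapp_','.a'), add: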

os.system("cp /home/nihui/osd/xr806/device/xradio/xr806/ohosdemo/ncnn_demo/src/ncnn/lib/libncnn.a /home/nihui/osd/xr806/device/xradio/xr806/xr_skylark/lib/ohos/")

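APPlib.append("libncnn.a")

Fix the image map

When repeating the earlier build, bin0 and bin1 turn out to overlap. Open device/xradio/xr806/xr_skylark/project/demo/wlan_ble_demo/image/xr806/image_wlan_ble.cfg and overwrite its contents with those of the auto-generated device/xradio/xr806/xr_skylark/project/demo/wlan_ble_demo/image/xr806/image_auto_cal.cfg, then rebuild to resolve the problem.

Flashing fails: reviving the XR806

After flashing the firmware containing ncnn mnist, loading the mnist model hit a malloc failure, followed by cpu exception 6 and a pile of corrupted pointers. The board hung and could no longer be flashed with new firmware.

Following the last method described at https://xr806.docs.aw-ol.com/..., bridge GNB and PN2 with tweezers while pressing the reset button; flashing then succeeds.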

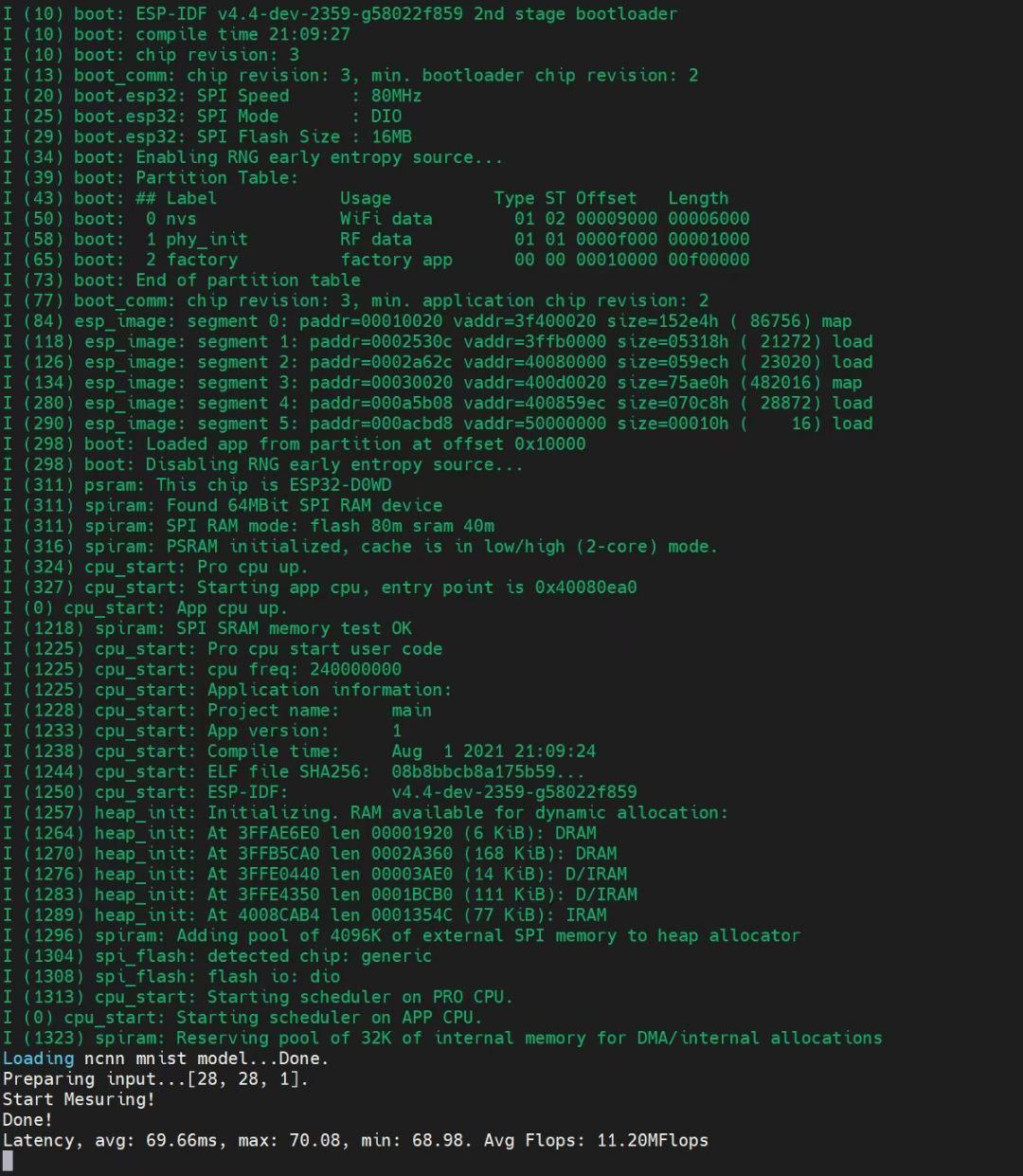

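Remove the WiFi and Bluetooth modules

Resources are really tight, so WiFi and Bluetooth have to go.

Being unfamiliar with OpenHarmony, I brute-force deleted the device/xradio/xr806/adapter/hals/communication folder.

The build now fails. Locate build/lite/config/component/lite_component.gni

and modify it so that communication/wifi_lite is no longer added: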

# add component features

foreach(feature_label, features) {

print(feature_label)

if (feature_label != "//device/xradio/xr806/adapter/hals/communication/wifi_lite/wifiservice:wifiservice") {

deps += [ feature_label ]

}

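}

In menuconfig, disable the WiFi and Bluetooth features.

Rebuild to resolve the problem. After flashing the firmware, malloc no longer fails and ncnn mnist inference runs correctly.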

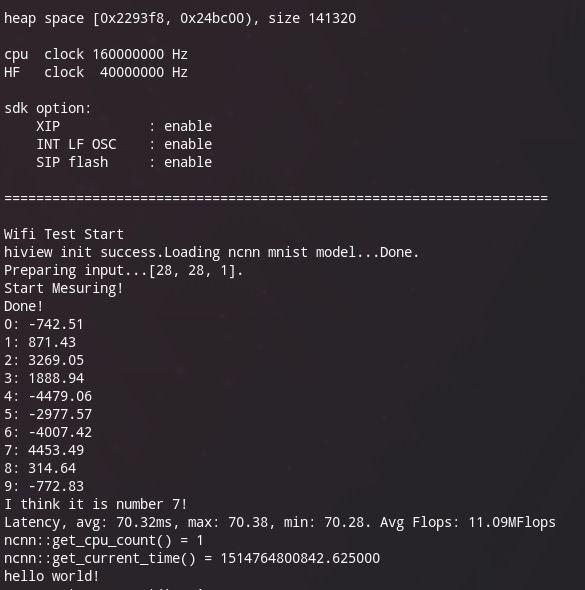

0x6 Measured ncnn mnist inference on the XR806

The cleaned-up projects

https://github.com/nihui/ncnn...

https://github.com/nihui/ncnn...

Inference time is very stable, and the measured compute performance is nearly on par with the esp32c3.