15.3 UE XR

15.3.1 UE XR Overview

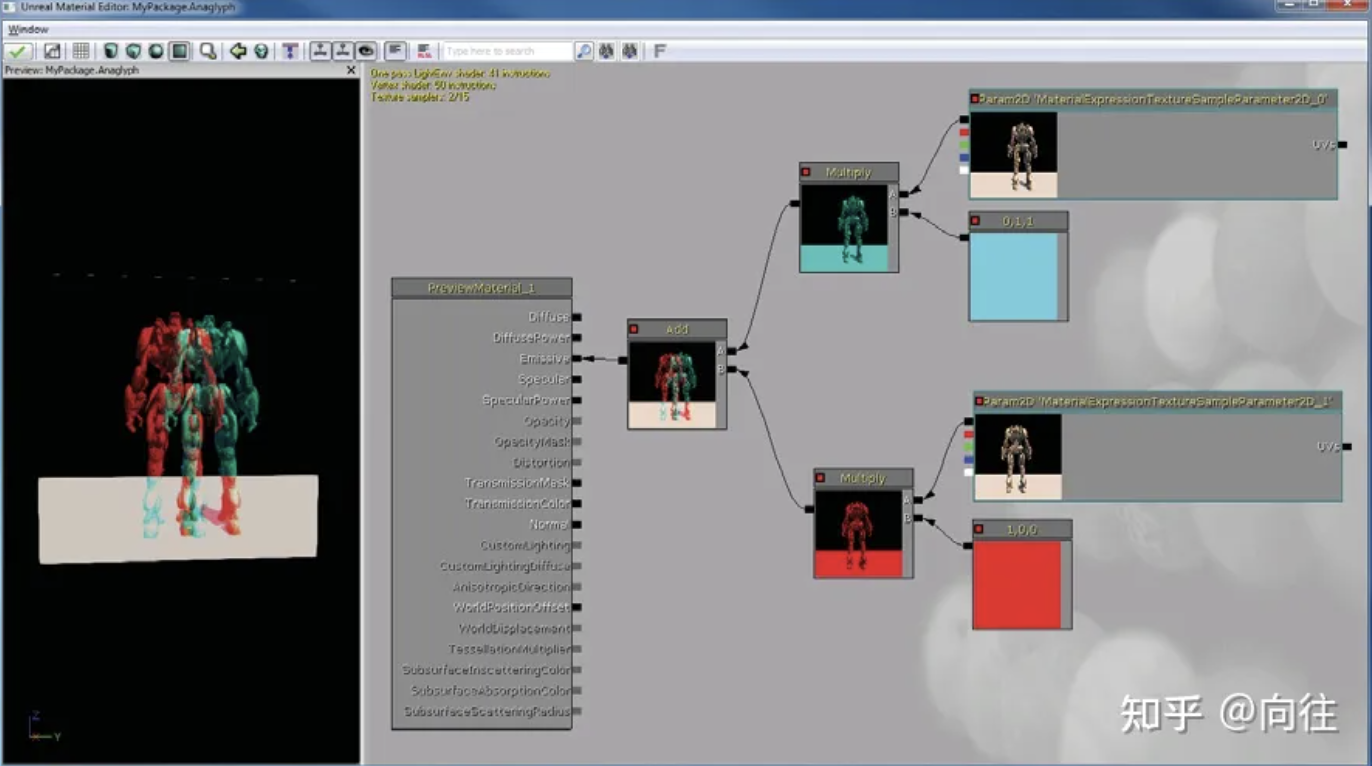

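As early as the UE3 era, VR rendering was already supported through node graphs, in which the components of the rendering pipeline can be rearranged, modified, and reconnected in multiple configurations. Depending on the node types supported, the nodes can sometimes be configured to produce a specific VR effect. The figure below shows an example: the Unreal Engine material editor configured to render a red-cyan anaglyph stereo image as a post-process effect.

Configuring Unreal Engine for red-cyan stereo with the UE3 Material Editor. Other stereo encodings can be supported in the same way, for example row-interleaved images for polarized stereo displays.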

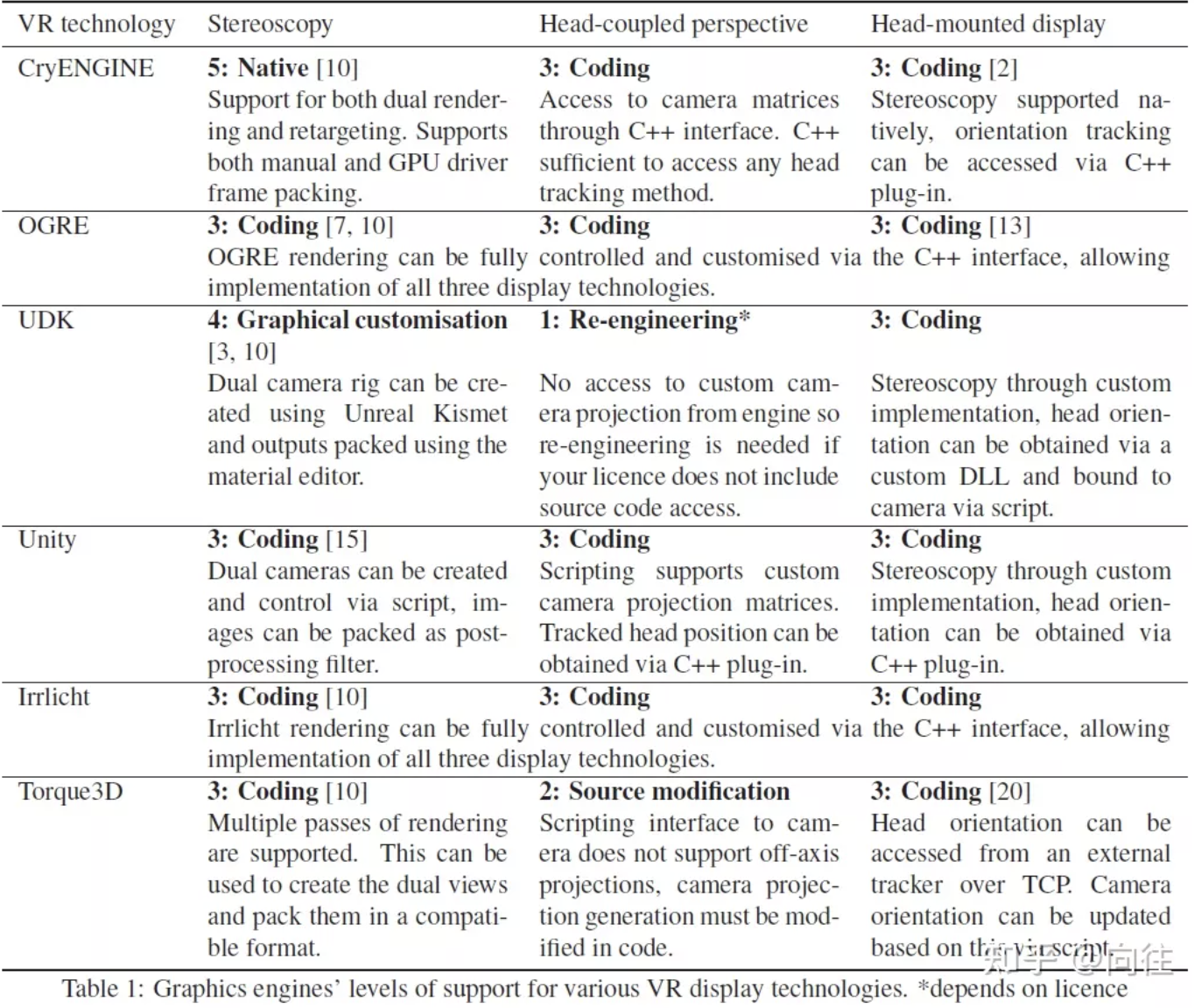

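The table below shows VR support in game engines around 2013:

Today, UE 4.27 and later versions support AR, VR, and MR, covering the many XR platforms from Google, Apple, Microsoft, Magic Leap, Oculus, SteamVR, Samsung, and others, as well as standard interfaces such as OpenXR.

15.3.2 UE XR Source Code Analysis

Analyzing the Unreal Rendering System (12): Mobile Special, Part 1 (UE mobile rendering analysis) already dissected UE's mobile source code in detail and touched on some XR rendering techniques along the way. This section examines selected XR rendering topics, using UE 4.27.2 as the reference version.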

15.3.2.1 Multi-View

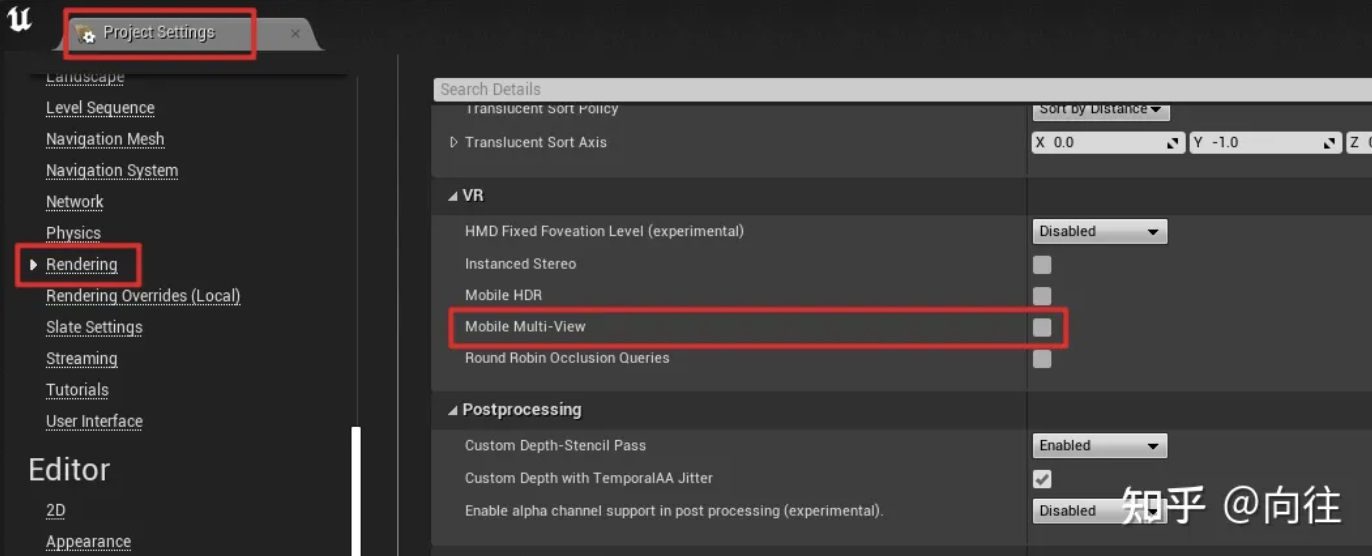

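UE's Multi-View can be turned on or off from the following settings page:

In code, the console variable vr.MobileMultiView holds this setting. The main code that touches it is as follows: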

// MobileShadingRenderer.cpp

FRHITexture* FMobileSceneRenderer::RenderForward(FRHICommandListImmediate& RHICmdList, const TArrayView<const FViewInfo*> ViewList)

{

(...)

// Fetch the console variable

static const auto CVarMobileMultiView = IConsoleManager::Get().FindTConsoleVariableDataInt(TEXT("vr.MobileMultiView"));

const bool bIsMultiViewApplication = (CVarMobileMultiView && CVarMobileMultiView->GetValueOnAnyThread() != 0);

(...)

// If the scene color is not multi-view but the application is, it must be rendered as a single-view multi-view pass because of the shaders.

SceneColorRenderPassInfo.MultiViewCount = View.bIsMobileMultiViewEnabled ? 2 : (bIsMultiViewApplication ? 1 : 0);

(...)

}

// VulkanRenderTarget.cpp

FVulkanRenderTargetLayout::FVulkanRenderTargetLayout(const FGraphicsPipelineStateInitializer& Initializer)

{

(...)

FRenderPassCompatibleHashableStruct CompatibleHashInfo;

(...)

MultiViewCount = Initializer.MultiViewCount;

(...)

CompatibleHashInfo.MultiViewCount = MultiViewCount;

(...)

}

// VulkanRHI.cpp

static VkRenderPass CreateRenderPass(FVulkanDevice& InDevice, const FVulkanRenderTargetLayout& RTLayout)

{

(...)

// 0b11 for 2, 0b1111 for 4, and so on

uint32 MultiviewMask = ( 0b1 << RTLayout.GetMultiViewCount() ) - 1;

(...)

const uint32_t ViewMask[2] = { MultiviewMask, MultiviewMask };

const uint32_t CorrelationMask = MultiviewMask;

VkRenderPassMultiviewCreateInfo MultiviewInfo;

if (RTLayout.GetIsMultiView())

{

FMemory::Memzero(MultiviewInfo);

MultiviewInfo.sType = VK_STRUCTURE_TYPE_RENDER_PASS_MULTIVIEW_CREATE_INFO;

MultiviewInfo.pNext = nullptr;

MultiviewInfo.subpassCount = NumSubpasses;

MultiviewInfo.pViewMasks = ViewMask;

MultiviewInfo.dependencyCount = 0;

MultiviewInfo.pViewOffsets = nullptr;

MultiviewInfo.correlationMaskCount = 1;

MultiviewInfo.pCorrelationMasks = &CorrelationMask;

CreateInfo.pNext = &MultiviewInfo;

}

(...)

}

The above covers the Vulkan graphics API. For OpenGL ES, see the tutorial Using multiview rendering; UE handles it with separate code:
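As a short aside on the Vulkan path above, the view-count selection in RenderForward and the mask arithmetic in CreateRenderPass can be checked in isolation. The following is a minimal sketch, not engine code: the function names SelectMultiViewCount and MakeMultiviewMask are hypothetical, and only the two expressions themselves are taken from the listings.

```cpp
#include <cstdint>

// Hypothetical helper mirroring the expression in RenderForward:
// 2 views when mobile multi-view is enabled for the view,
// 1 when only the application is multi-view, otherwise 0.
constexpr uint32_t SelectMultiViewCount(bool bViewMultiViewEnabled, bool bIsMultiViewApplication)
{
    return bViewMultiViewEnabled ? 2u : (bIsMultiViewApplication ? 1u : 0u);
}

// Hypothetical helper mirroring the expression in CreateRenderPass:
// sets the low ViewCount bits. Only valid for ViewCount < 32.
constexpr uint32_t MakeMultiviewMask(uint32_t ViewCount)
{
    return (1u << ViewCount) - 1u;
}

// Two views give binary 11, four views give binary 1111.
static_assert(MakeMultiviewMask(2) == 0x3u, "two views set the two lowest bits");
static_assert(MakeMultiviewMask(4) == 0xFu, "four views set the four lowest bits");
static_assert(MakeMultiviewMask(SelectMultiViewCount(false, true)) == 0x1u,
              "a single-view multi-view pass still uses a one-bit mask");
```

In the listing, the same mask is used both as the per-subpass view mask and as the correlation mask. The OpenGL ES code referred to before this aside follows.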

// OpenGLES.cpp

void FOpenGLES::ProcessExtensions(const FString& ExtensionsString)

{

(...)

// Check whether the multi-view extensions are supported

const bool bMultiViewSupport = ExtensionsString.Contains(TEXT("GL_OVR_multiview"));

const bool bMultiView2Support = ExtensionsString.Contains(TEXT("GL_OVR_multiview2"));

const bool bMultiViewMultiSampleSupport = ExtensionsString.Contains(TEXT("GL_OVR_multiview_multisampled_render_to_texture"));

if (bMultiViewSupport && bMultiView2Support && bMultiViewMultiSampleSupport)

{

glFramebufferTextureMultiviewOVR = (PFNGLFRAMEBUFFERTEXTUREMULTIVIEWOVRPROC)((void*)eglGetProcAddress("glFramebufferTextureMultiviewOVR"));

glFramebufferTextureMultisampleMultiviewOVR = (PFNGLFRAMEBUFFERTEXTUREMULTISAMPLEMULTIVIEWOVRPROC)((void*)eglGetProcAddress("glFramebufferTextureMultisampleMultiviewOVR"));

bSupportsMobileMultiView = (glFramebufferTextureMultiviewOVR != NULL) && (glFramebufferTextureMultisampleMultiviewOVR != NULL);

}

(...)

}

// OpenGLES.h

struct FOpenGLES : public FOpenGLBase

{

(...)

static FORCEINLINE bool SupportsMobileMultiView() { return bSupportsMobileMultiView; }

(...)

}

// OpenGLDevice.cpp

static void InitRHICapabilitiesForGL()

{

(...)

GSupportsMobileMultiView = FOpenGL::SupportsMobileMultiView();

(...)

}

// OpenGLRenderTarget.cpp

GLuint FOpenGLDynamicRHI::GetOpenGLFramebuffer(uint32 NumSimultaneousRenderTargets, FOpenGLTextureBase** RenderTargets, const uint32* ArrayIndices, const uint32* MipmapLevels, FOpenGLTextureBase* DepthStencilTarget)

{

(...)

#if PLATFORM_ANDROID && !PLATFORM_LUMINGL4

static const auto CVarMobileMultiView = IConsoleManager::Get().FindTConsoleVariableDataInt(TEXT("vr.MobileMultiView"));

// Allocate the mobile multi-view framebuffer if enabled and supported.

// Multi-view does not support read buffers, so explicitly disable them and bind only GL_DRAW_FRAMEBUFFER.

const bool bRenderTargetsDefined = (RenderTargets != nullptr) && RenderTargets[0];

const bool bValidMultiViewDepthTarget = !DepthStencilTarget || DepthStencilTarget->Target == GL_TEXTURE_2D_ARRAY;

const bool bUsingArrayTextures = (bRenderTargetsDefined) ? (RenderTargets[0]->Target == GL_TEXTURE_2D_ARRAY && bValidMultiViewDepthTarget) : false;

const bool bMultiViewCVar = CVarMobileMultiView && CVarMobileMultiView->GetValueOnAnyThread() != 0;

if (bUsingArrayTextures && FOpenGL::SupportsMobileMultiView() && bMultiViewCVar)

{

FOpenGLTextureBase* const RenderTarget = RenderTargets[0];

glBindFramebuffer(GL_FRAMEBUFFER, 0);

glBindFramebuffer(GL_DRAW_FRAMEBUFFER, Framebuffer);

FOpenGLTexture2D* RenderTarget2D = (FOpenGLTexture2D*)RenderTarget;

const uint32 NumSamplesTileMem = RenderTarget2D->GetNumSamplesTileMem();

if (NumSamplesTileMem > 1)

{

glFramebufferTextureMultisampleMultiviewOVR(GL_DRAW_FRAMEBUFFER, GL_COLOR_ATTACHMENT0, RenderTarget->GetResource(), 0, NumSamplesTileMem, 0, 2);

VERIFY_GL(glFramebufferTextureMultisampleMultiviewOVR);

if (DepthStencilTarget)

{

glFramebufferTextureMultisampleMultiviewOVR(GL_DRAW_FRAMEBUFFER, GL_DEPTH_ATTACHMENT, DepthStencilTarget->GetResource(), 0, NumSamplesTileMem, 0, 2);

VERIFY_GL(glFramebufferTextureMultisampleMultiviewOVR);

}

}

else

{

glFramebufferTextureMultiviewOVR(GL_DRAW_FRAMEBUFFER, GL_COLOR_ATTACHMENT0, RenderTarget->GetResource(), 0, 0, 2);

VERIFY_GL(glFramebufferTextureMultiviewOVR);

if (DepthStencilTarget)

{

glFramebufferTextureMultiviewOVR(GL_DRAW_FRAMEBUFFER, GL_DEPTH_ATTACHMENT, DepthStencilTarget->GetResource(), 0, 0, 2);

VERIFY_GL(glFramebufferTextureMultiviewOVR);

}

}

FOpenGL::CheckFrameBuffer();

FOpenGL::ReadBuffer(GL_NONE);

FOpenGL::DrawBuffer(GL_COLOR_ATTACHMENT0);

GetOpenGLFramebufferCache().Add(FOpenGLFramebufferKey(NumSimultaneousRenderTargets, RenderTargets, ArrayIndices, MipmapLevels, DepthStencilTarget, PlatformOpenGLCurrentContext(PlatformDevice)), Framebuffer + 1);

return Framebuffer;

}

#endif

(...)

}

The corresponding shader code needs the matching keywords or statements added:

// OpenGLShaders.cpp

void OPENGLDRV_API GLSLToDeviceCompatibleGLSL(...)

{

// Whether we need to emit mobile multi-view code or not.

const bool bEmitMobileMultiView = (FCStringAnsi::Strstr(GlslCodeOriginal.GetData(), "gl_ViewID_OVR") != nullptr);

(...)

if (bEmitMobileMultiView)

{

MoveHashLines(GlslCode, GlslCodeOriginal);

if (GSupportsMobileMultiView)

{

AppendCString(GlslCode, "\n\n");

AppendCString(GlslCode, "#extension GL_OVR_multiview2 : enable\n");

AppendCString(GlslCode, "\n\n");

}

else

{

// Strip out multi-view for devices that don't support it.

AppendCString(GlslCode, "#define gl_ViewID_OVR 0\n");

}

}

(...)

}

void OPENGLDRV_API GLSLToDeviceCompatibleGLSL(...)

{

(...)

if (bEmitMobileMultiView && GSupportsMobileMultiView && TypeEnum == GL_VERTEX_SHADER)

{

AppendCString(GlslCode, "\n\n");

AppendCString(GlslCode, "layout(num_views = 2) in;\n");

AppendCString(GlslCode, "\n\n");

}

(...)

}

In shader code, MOBILE_MULTI_VIEW indicates whether mobile multi-view is enabled:

// MobileBasePassVertexShader.usf

void Main(

FVertexFactoryInput Input

, out FMobileShadingBasePassVSOutput Output

#if INSTANCED_STEREO

, uint InstanceId : SV_InstanceID

, out uint LayerIndex : SV_RenderTargetArrayIndex

#elif MOBILE_MULTI_VIEW

// The view index for mobile multi-view.

, in uint ViewId : SV_ViewID

#endif

)

{

(...)

#elif MOBILE_MULTI_VIEW

// Resolve the view from ViewId and store the resolved result.

#if COMPILER_GLSL_ES3_1

const int MultiViewId = int(ViewId);

ResolvedView = ResolveView(uint(MultiViewId));

Output.BasePassInterpolants.MultiViewId = float(MultiViewId);

#else

ResolvedView = ResolveView(ViewId);

Output.BasePassInterpolants.MultiViewId = float(ViewId);

#endif

#else

(...)

}

// InstancedStereo.ush

ViewState ResolveView(uint ViewIndex)

{

if (ViewIndex == 0)

{

return GetPrimaryView();

}

else

{

return GetInstancedView();

}

}

// ShaderCompiler.cpp

ENGINE_API void GenerateInstancedStereoCode(FString& Result, EShaderPlatform ShaderPlatform)

{

(...)

// Define ViewState

Result = "struct ViewState\r\n";

Result += "{\r\n";

for (int32 MemberIndex = 0; MemberIndex < StructMembers.Num(); ++MemberIndex)

{

const FShaderParametersMetadata::FMember& Member = StructMembers[MemberIndex];

FString MemberDecl;

GenerateUniformBufferStructMember(MemberDecl, StructMembers[MemberIndex], ShaderPlatform);

Result += FString::Printf(TEXT("\t%s;\r\n"), *MemberDecl);

}

Result += "};\r\n";

// Define GetPrimaryView

Result += "ViewState GetPrimaryView()\r\n";

Result += "{\r\n";

Result += "\tViewState Result;\r\n";

for (int32 MemberIndex = 0; MemberIndex < StructMembers.Num(); ++MemberIndex)

{

const FShaderParametersMetadata::FMember& Member = StructMembers[MemberIndex];

Result += FString::Printf(TEXT("\tResult.%s = View.%s;\r\n"), Member.GetName(), Member.GetName());

}

Result += "\treturn Result;\r\n";

Result += "}\r\n";

// Define GetInstancedView

Result += "ViewState GetInstancedView()\r\n";

Result += "{\r\n";

Result += "\tViewState Result;\r\n";

for (int32 MemberIndex = 0; MemberIndex < StructMembers.Num(); ++MemberIndex)

{

const FShaderParametersMetadata::FMember& Member = StructMembers[MemberIndex];

Result += FString::Printf(TEXT("\tResult.%s = InstancedView.%s;\r\n"), Member.GetName(), Member.GetName());

}

Result += "\treturn Result;\r\n";

Result += "}\r\n";

(...)

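}

15.3.2.2 Fixed Foveation

Fixed foveated rendering can also be enabled on the VR page of UE's project settings; the corresponding console variable is vr.VRS.HMDFixedFoveationLevel. The relevant UE code is as follows: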

// VariableRateShadingImageManager.cpp

FRDGTextureRef FVariableRateShadingImageManager::GetVariableRateShadingImage(FRDGBuilder& GraphBuilder, const FSceneViewFamily& ViewFamily, const TArray<TRefCountPtr<IPooledRenderTarget>>* ExternalVRSSources, EVRSType VRSTypesToExclude)

{

// If the RHI does not support VRS, return immediately.

if (!GRHISupportsAttachmentVariableRateShading || !GRHIVariableRateShadingEnabled || !GRHIAttachmentVariableRateShadingEnabled)

{

return nullptr;

}

// Always tick every frame, even when no VRS image will be generated.

Tick();

if (EnumHasAllFlags(VRSTypesToExclude, EVRSType::All))

{

return nullptr;

}

FVRSImageGenerationParameters VRSImageParams;

const bool bIsStereo = IStereoRendering::IsStereoEyeView(*ViewFamily.Views[0]) && GEngine->XRSystem.IsValid();

VRSImageParams.bInstancedStereo |= ViewFamily.Views[0]->IsInstancedStereoPass();

VRSImageParams.Size = FIntPoint(ViewFamily.RenderTarget->GetSizeXY());

UpdateFixedFoveationParameters(VRSImageParams);

UpdateEyeTrackedFoveationParameters(VRSImageParams, ViewFamily);

EVRSGenerationFlags GenFlags = EVRSGenerationFlags::None;

// Set the generation flags for XR foveation VRS.

if (bIsStereo && !EnumHasAnyFlags(VRSTypesToExclude, EVRSType::XRFoveation) && !EnumHasAnyFlags(VRSTypesToExclude, EVRSType::EyeTrackedFoveation))

{

EnumAddFlags(GenFlags, EVRSGenerationFlags::StereoRendering);

if (!EnumHasAnyFlags(VRSTypesToExclude, EVRSType::FixedFoveation) && VRSImageParams.bGenerateFixedFoveation)

{

EnumAddFlags(GenFlags, EVRSGenerationFlags::HMDFixedFoveation);

}

if (!EnumHasAllFlags(VRSTypesToExclude, EVRSType::EyeTrackedFoveation) && VRSImageParams.bGenerateEyeTrackedFoveation)

{

EnumAddFlags(GenFlags, EVRSGenerationFlags::HMDEyeTrackedFoveation);

}

if (VRSImageParams.bInstancedStereo)

{

EnumAddFlags(GenFlags, EVRSGenerationFlags::SideBySideStereo);

}

}

if (GenFlags == EVRSGenerationFlags::None)

{

if (ExternalVRSSources == nullptr || ExternalVRSSources->Num() == 0)

{

// Nothing to generate.

return nullptr;

}

else

{

// If there's one external VRS image, just return that since we're not building anything here.

if (ExternalVRSSources->Num() == 1)

{

const FIntVector& ExtSize = (*ExternalVRSSources)[0]->GetDesc().GetSize();

check(ExtSize.X == VRSImageParams.Size.X / GRHIVariableRateShadingImageTileMinWidth && ExtSize.Y == VRSImageParams.Size.Y / GRHIVariableRateShadingImageTileMinHeight);

return GraphBuilder.RegisterExternalTexture((*ExternalVRSSources)[0]);

}

// If there is more than one external image, we'll generate a final one by combining, so fall through.

}

}

// Get the FOV

IHeadMountedDisplay* HMDDevice = (GEngine->XRSystem == nullptr) ? nullptr : GEngine->XRSystem->GetHMDDevice();

if (HMDDevice != nullptr)

{

HMDDevice->GetFieldOfView(VRSImageParams.HMDFieldOfView.X, VRSImageParams.HMDFieldOfView.Y);

}

const uint64 Key = CalculateVRSImageHash(VRSImageParams, GenFlags);

FActiveTarget* ActiveTarget = ActiveVRSImages.Find(Key);

if (ActiveTarget == nullptr)

{

// Render the VRS image

return GraphBuilder.RegisterExternalTexture(RenderShadingRateImage(GraphBuilder, Key, VRSImageParams, GenFlags));

}

ActiveTarget->LastUsedFrame = GFrameNumber;

return GraphBuilder.RegisterExternalTexture(ActiveTarget->Target);

}

// Render the VRS image on PC

TRefCountPtr<IPooledRenderTarget> FVariableRateShadingImageManager::RenderShadingRateImage(...)

{

(...)

}

// Obtain the VRS image on mobile

TRefCountPtr<IPooledRenderTarget> FVariableRateShadingImageManager::GetMobileVariableRateShadingImage(const FSceneViewFamily& ViewFamily)

{

if (!(IStereoRendering::IsStereoEyeView(*ViewFamily.Views[0]) && GEngine->XRSystem.IsValid()))

{

return TRefCountPtr<IPooledRenderTarget>();

}

FIntPoint Size(ViewFamily.RenderTarget->GetSizeXY());

const bool bStereo = GEngine->StereoRenderingDevice.IsValid() && GEngine->StereoRenderingDevice->IsStereoEnabled();

IStereoRenderTargetManager* const StereoRenderTargetManager = bStereo ? GEngine->StereoRenderingDevice->GetRenderTargetManager() : nullptr;

FTexture2DRHIRef Texture;

FIntPoint TextureSize(0, 0);

// If supported, allocate a variable-resolution texture for VR foveation.

if (StereoRenderTargetManager && StereoRenderTargetManager->NeedReAllocateShadingRateTexture(MobileHMDFixedFoveationOverrideImage))

{

bool bAllocatedShadingRateTexture = StereoRenderTargetManager->AllocateShadingRateTexture(0, Size.X, Size.Y, GRHIVariableRateShadingImageFormat, 0, TexCreate_None, TexCreate_None, Texture, TextureSize);

if (bAllocatedShadingRateTexture)

{

MobileHMDFixedFoveationOverrideImage = CreateRenderTarget(Texture, TEXT("ShadingRate"));

}

}

return MobileHMDFixedFoveationOverrideImage;

}

The shader code is as follows:

// VariableRateShading.usf

(...)

uint GetFoveationShadingRate(float FractionalOffset, float FullCutoffSquared, float HalfCutoffSquared)

{

if (FractionalOffset > HalfCutoffSquared)

{

return SHADING_RATE_4x4;

}

if (FractionalOffset > FullCutoffSquared)

{

return SHADING_RATE_2x2;

}

return SHADING_RATE_1x1;

}

uint GetFixedFoveationRate(uint2 PixelPositionIn)

{

const float2 PixelPosition = float2((float)PixelPositionIn.x, (float)PixelPositionIn.y);

const float FractionalOffset = GetFractionalOffsetFromEyeOrigin(PixelPosition);

return GetFoveationShadingRate(FractionalOffset, FixedFoveationFullRateCutoffSquared, FixedFoveationHalfRateCutoffSquared);

}

uint GetEyetrackedFoveationRate(uint2 PixelPositionIn)

{

return SHADING_RATE_1x1;

}

////////////////////////////////////////////////////////////////////////////////////////////////////

// Return the ideal combination of two specified shading rate values.

////////////////////////////////////////////////////////////////////////////////////////////////////

// Combine two shading rates, which simply means taking the larger one.

uint CombineShadingRates(uint Rate1, uint Rate2)

{

return max(Rate1, Rate2);

}

// Generate the shading rate texture

[numthreads(THREADGROUP_SIZEX, THREADGROUP_SIZEY, 1)]

void GenerateShadingRateTexture(uint3 DispatchThreadId : SV_DispatchThreadID)

{

const uint2 TexelCoord = DispatchThreadId.xy;

uint ShadingRateOut = 0;

if ((ShadingRateAttachmentGenerationFlags & HMD_FIXED_FOVEATION) != 0)

{

ShadingRateOut = CombineShadingRates(ShadingRateOut, GetFixedFoveationRate(TexelCoord));

}

if ((ShadingRateAttachmentGenerationFlags & HMD_EYETRACKED_FOVEATION) != 0)

{

ShadingRateOut = CombineShadingRates(ShadingRateOut, GetEyetrackedFoveationRate(TexelCoord));

}

// Conservative combination, just return the max of the two.

RWOutputTexture[TexelCoord] = ShadingRateOut;

}

As this shows, fixed foveation is built on top of the VRS (variable rate shading) feature.
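The rate selection in the VariableRateShading.usf listing can be reproduced on the CPU to see which regions receive which shading rate. The sketch below ports GetFoveationShadingRate and CombineShadingRates directly. Two parts are assumptions: the SHADING_RATE_* values follow the D3D12_SHADING_RATE encoding (the shader's own macros are platform-defined), and FractionalOffsetSquared is a hypothetical stand-in for GetFractionalOffsetFromEyeOrigin, whose body the listing elides.

```cpp
#include <cstdint>

// Assumed encodings, following D3D12_SHADING_RATE (1X1 = 0x0, 2X2 = 0x5, 4X4 = 0xA).
constexpr uint32_t SHADING_RATE_1x1 = 0x0;
constexpr uint32_t SHADING_RATE_2x2 = 0x5;
constexpr uint32_t SHADING_RATE_4x4 = 0xA;

// Port of GetFoveationShadingRate: beyond the half-rate cutoff use 4x4,
// beyond the full-rate cutoff use 2x2, otherwise shade at full rate.
constexpr uint32_t GetFoveationShadingRate(float FractionalOffset, float FullCutoffSquared, float HalfCutoffSquared)
{
    return FractionalOffset > HalfCutoffSquared ? SHADING_RATE_4x4
         : FractionalOffset > FullCutoffSquared ? SHADING_RATE_2x2
         : SHADING_RATE_1x1;
}

// Port of CombineShadingRates: the numerically larger rate wins.
constexpr uint32_t CombineShadingRates(uint32_t Rate1, uint32_t Rate2)
{
    return Rate1 > Rate2 ? Rate1 : Rate2;
}

constexpr float Square(float V) { return V * V; }

// Hypothetical stand-in for GetFractionalOffsetFromEyeOrigin: squared distance
// from the image centre, scaled so the centre is 0.0 and a corner is 1.0.
constexpr float FractionalOffsetSquared(float X, float Y, float Width, float Height)
{
    return 0.5f * (Square((X - 0.5f * Width) / (0.5f * Width)) +
                   Square((Y - 0.5f * Height) / (0.5f * Height)));
}
```

With assumed cutoffs of 0.25 (full rate) and 0.64 (half rate) on a 100x100 image, the centre stays at 1x1, the middle of an edge drops to 2x2, and a corner drops to 4x4. Because the combine step takes the maximum, any additional rate source can only make a tile coarser, never finer.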

15.3.2.3 OpenXR

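OpenXR is a built-in UE plugin that can be searched for and enabled in the plugin browser:

The OpenXR plugin code lives in Engine\Plugins\Runtime\OpenXR. The standard OpenXR entry points used are as follows: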

// OpenXRCore.h

/** List all OpenXR global entry points used by Unreal. */

#define ENUM_XR_ENTRYPOINTS_GLOBAL(EnumMacro) \

EnumMacro(PFN_xrEnumerateApiLayerProperties,xrEnumerateApiLayerProperties) \

EnumMacro(PFN_xrEnumerateInstanceExtensionProperties,xrEnumerateInstanceExtensionProperties) \

EnumMacro(PFN_xrCreateInstance,xrCreateInstance)

/** List all OpenXR instance entry points used by Unreal. */

#define ENUM_XR_ENTRYPOINTS(EnumMacro) \

EnumMacro(PFN_xrDestroyInstance,xrDestroyInstance) \

EnumMacro(PFN_xrGetInstanceProperties,xrGetInstanceProperties) \

EnumMacro(PFN_xrPollEvent,xrPollEvent) \

EnumMacro(PFN_xrResultToString,xrResultToString) \

EnumMacro(PFN_xrStructureTypeToString,xrStructureTypeToString) \

EnumMacro(PFN_xrGetSystem,xrGetSystem) \

EnumMacro(PFN_xrGetSystemProperties,xrGetSystemProperties) \

EnumMacro(PFN_xrEnumerateEnvironmentBlendModes,xrEnumerateEnvironmentBlendModes) \

EnumMacro(PFN_xrCreateSession,xrCreateSession) \

EnumMacro(PFN_xrDestroySession,xrDestroySession) \

EnumMacro(PFN_xrEnumerateReferenceSpaces,xrEnumerateReferenceSpaces) \

EnumMacro(PFN_xrCreateReferenceSpace,xrCreateReferenceSpace) \

EnumMacro(PFN_xrGetReferenceSpaceBoundsRect,xrGetReferenceSpaceBoundsRect) \

EnumMacro(PFN_xrCreateActionSpace,xrCreateActionSpace) \

EnumMacro(PFN_xrLocateSpace,xrLocateSpace) \

EnumMacro(PFN_xrDestroySpace,xrDestroySpace) \

EnumMacro(PFN_xrEnumerateViewConfigurations,xrEnumerateViewConfigurations) \

EnumMacro(PFN_xrGetViewConfigurationProperties,xrGetViewConfigurationProperties) \

EnumMacro(PFN_xrEnumerateViewConfigurationViews,xrEnumerateViewConfigurationViews) \

EnumMacro(PFN_xrEnumerateSwapchainFormats,xrEnumerateSwapchainFormats) \

EnumMacro(PFN_xrCreateSwapchain,xrCreateSwapchain) \

EnumMacro(PFN_xrDestroySwapchain,xrDestroySwapchain) \

EnumMacro(PFN_xrEnumerateSwapchainImages,xrEnumerateSwapchainImages) \

EnumMacro(PFN_xrAcquireSwapchainImage,xrAcquireSwapchainImage) \

EnumMacro(PFN_xrWaitSwapchainImage,xrWaitSwapchainImage) \

EnumMacro(PFN_xrReleaseSwapchainImage,xrReleaseSwapchainImage) \

EnumMacro(PFN_xrBeginSession,xrBeginSession) \

EnumMacro(PFN_xrEndSession,xrEndSession) \

EnumMacro(PFN_xrRequestExitSession,xrRequestExitSession) \

EnumMacro(PFN_xrWaitFrame,xrWaitFrame) \

EnumMacro(PFN_xrBeginFrame,xrBeginFrame) \

EnumMacro(PFN_xrEndFrame,xrEndFrame) \

EnumMacro(PFN_xrLocateViews,xrLocateViews) \

EnumMacro(PFN_xrStringToPath,xrStringToPath) \

EnumMacro(PFN_xrPathToString,xrPathToString) \

EnumMacro(PFN_xrCreateActionSet,xrCreateActionSet) \

EnumMacro(PFN_xrDestroyActionSet,xrDestroyActionSet) \

EnumMacro(PFN_xrCreateAction,xrCreateAction) \

EnumMacro(PFN_xrDestroyAction,xrDestroyAction) \

EnumMacro(PFN_xrSuggestInteractionProfileBindings,xrSuggestInteractionProfileBindings) \

EnumMacro(PFN_xrAttachSessionActionSets,xrAttachSessionActionSets) \

EnumMacro(PFN_xrGetCurrentInteractionProfile,xrGetCurrentInteractionProfile) \

EnumMacro(PFN_xrGetActionStateBoolean,xrGetActionStateBoolean) \

EnumMacro(PFN_xrGetActionStateFloat,xrGetActionStateFloat) \

EnumMacro(PFN_xrGetActionStateVector2f,xrGetActionStateVector2f) \

EnumMacro(PFN_xrGetActionStatePose,xrGetActionStatePose) \

EnumMacro(PFN_xrSyncActions,xrSyncActions) \

EnumMacro(PFN_xrEnumerateBoundSourcesForAction,xrEnumerateBoundSourcesForAction) \

EnumMacro(PFN_xrGetInputSourceLocalizedName,xrGetInputSourceLocalizedName) \

EnumMacro(PFN_xrApplyHapticFeedback,xrApplyHapticFeedback) \

EnumMacro(PFN_xrStopHapticFeedback,xrStopHapticFeedback)

For the complete OpenXR interface, see the XR Spec. The important types and interfaces involved in UE are as follows:
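As a brief aside before those listings: the two ENUM_XR_ENTRYPOINTS lists in OpenXRCore.h use what is commonly called the X-macro pattern, where one list of (pointer type, name) rows is expanded several times with different EnumMacro arguments. The sketch below illustrates the pattern only. Every name in it (the PFN_Sketch* types, ENUM_SKETCH_ENTRYPOINTS, FSketchEntryPoints) is hypothetical, and it does not reproduce how the plugin itself expands its lists.

```cpp
#include <cstddef>

// Hypothetical function-pointer types standing in for the PFN_xr* types.
using PFN_SketchCreate  = int (*)();
using PFN_SketchDestroy = int (*)();
using PFN_SketchPoll    = int (*)();

// One list of rows, in the style of ENUM_XR_ENTRYPOINTS.
#define ENUM_SKETCH_ENTRYPOINTS(EnumMacro) \
    EnumMacro(PFN_SketchCreate, SketchCreate) \
    EnumMacro(PFN_SketchDestroy, SketchDestroy) \
    EnumMacro(PFN_SketchPoll, SketchPoll)

// Expansion 1: one null-initialised function-pointer member per row.
struct FSketchEntryPoints
{
#define DECLARE_SKETCH_ENTRYPOINT(Type, Func) Type Func = nullptr;
    ENUM_SKETCH_ENTRYPOINTS(DECLARE_SKETCH_ENTRYPOINT)
#undef DECLARE_SKETCH_ENTRYPOINT
};

// Expansion 2: the row names as strings, e.g. for a loader lookup by name.
#define NAME_SKETCH_ENTRYPOINT(Type, Func) #Func,
constexpr const char* SketchEntryPointNames[] = { ENUM_SKETCH_ENTRYPOINTS(NAME_SKETCH_ENTRYPOINT) };
#undef NAME_SKETCH_ENTRYPOINT

// Expansion 3: the number of rows, computed as 0 + 1 + 1 + 1.
#define COUNT_SKETCH_ENTRYPOINT(Type, Func) + 1
constexpr std::size_t NumSketchEntryPoints = 0 ENUM_SKETCH_ENTRYPOINTS(COUNT_SKETCH_ENTRYPOINT);
#undef COUNT_SKETCH_ENTRYPOINT
```

The benefit of the pattern is that adding an entry point means adding one row, and every expansion picks it up automatically. The UE type listings introduced before this aside follow.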

// OpenXRAR.h

// OpenXR AR system

class FOpenXRARSystem :

public FARSystemSupportBase,

public IOpenXRARTrackedMeshHolder,

public IOpenXRARTrackedGeometryHolder,

public FGCObject,

public TSharedFromThis<FOpenXRARSystem, ESPMode::ThreadSafe>

{

public:

FOpenXRARSystem();

virtual ~FOpenXRARSystem();

void SetTrackingSystem(TSharedPtr<FXRTrackingSystemBase, ESPMode::ThreadSafe> InTrackingSystem);

virtual void OnARSystemInitialized();

virtual bool OnStartARGameFrame(FWorldContext& WorldContext);

virtual void OnStartARSession(UARSessionConfig* SessionConfig);

virtual void OnPauseARSession();

virtual void OnStopARSession();

virtual FARSessionStatus OnGetARSessionStatus() const;

virtual bool OnIsSessionTrackingFeatureSupported(EARSessionType SessionType, EARSessionTrackingFeature SessionTrackingFeature) const;

(...)

private:

// The FOpenXRHMD instance

FOpenXRHMD* TrackingSystem;

class IOpenXRCustomAnchorSupport* CustomAnchorSupport = nullptr;

FARSessionStatus SessionStatus;

class IOpenXRCustomCaptureSupport* QRCapture = nullptr;

class IOpenXRCustomCaptureSupport* CamCapture = nullptr;

class IOpenXRCustomCaptureSupport* SpatialMappingCapture = nullptr;

class IOpenXRCustomCaptureSupport* SceneUnderstandingCapture = nullptr;

class IOpenXRCustomCaptureSupport* HandMeshCapture = nullptr;

TArray<IOpenXRCustomCaptureSupport*> CustomCaptureSupports;

(...)

};

// IHeadMountedDisplayModule.h

// Public interface of the head-mounted display module.

class IHeadMountedDisplayModule : public IModuleInterface, public IModularFeature

{

public:

static FName GetModularFeatureName();

virtual FString GetModuleKeyName() const = 0;

virtual void GetModuleAliases(TArray<FString>& AliasesOut) const;

float GetModulePriority() const;

static inline IHeadMountedDisplayModule& Get();

static inline bool IsAvailable();

virtual void StartupModule() override;

virtual bool PreInit();

virtual bool IsHMDConnected();

virtual uint64 GetGraphicsAdapterLuid();

virtual FString GetAudioInputDevice();

virtual FString GetAudioOutputDevice();

virtual TSharedPtr< class IXRTrackingSystem, ESPMode::ThreadSafe > CreateTrackingSystem() = 0;

virtual TSharedPtr< IHeadMountedDisplayVulkanExtensions, ESPMode::ThreadSafe > GetVulkanExtensions();

virtual bool IsStandaloneStereoOnlyDevice();

};

// IOpenXRHMDPlugin.h

// Public interface of this module. In most cases it is exposed only to sibling modules within this plugin.

class OPENXRHMD_API IOpenXRHMDPlugin : public IHeadMountedDisplayModule

{

public:

static inline IOpenXRHMDPlugin& Get()

{

return FModuleManager::LoadModuleChecked< IOpenXRHMDPlugin >( "OpenXRHMD" );

}

static inline bool IsAvailable();

virtual bool IsExtensionAvailable(const FString& Name) const = 0;

virtual bool IsExtensionEnabled(const FString& Name) const = 0;

virtual bool IsLayerAvailable(const FString& Name) const = 0;

virtual bool IsLayerEnabled(const FString& Name) const = 0;

};

// OpenXRHMD.cpp

class FOpenXRHMDPlugin : public IOpenXRHMDPlugin

{

public:

FOpenXRHMDPlugin();

~FOpenXRHMDPlugin();

// Create the tracking system (an FOpenXRHMD instance)

virtual TSharedPtr< class IXRTrackingSystem, ESPMode::ThreadSafe > CreateTrackingSystem() override

{

if (!RenderBridge)

{

if (!InitRenderBridge())

{

return nullptr;

}

}

// Load IOpenXRARModule.

auto ARModule = FModuleManager::LoadModulePtr<IOpenXRARModule>("OpenXRAR");

// Create the AR system.

auto ARSystem = ARModule->CreateARSystem();

// Create the FOpenXRHMD instance.

auto OpenXRHMD = FSceneViewExtensions::NewExtension<FOpenXRHMD>(Instance, System, RenderBridge, EnabledExtensions, ExtensionPlugins, ARSystem);

if (OpenXRHMD->IsInitialized())

{

// Initialize the AR system.

ARModule->SetTrackingSystem(OpenXRHMD);

OpenXRHMD->GetARCompositionComponent()->InitializeARSystem();

return OpenXRHMD;

}

return nullptr;

}

(...)

private:

void *LoaderHandle;

// XR system handles

XrInstance Instance;

XrSystemId System;

TSet<FString> AvailableExtensions;

TSet<FString> AvailableLayers;

TArray<const char*> EnabledExtensions;

TArray<const char*> EnabledLayers;

// IOpenXRHMDPlugin

TArray<IOpenXRExtensionPlugin*> ExtensionPlugins;

TRefCountPtr<FOpenXRRenderBridge> RenderBridge;

TSharedPtr< IHeadMountedDisplayVulkanExtensions, ESPMode::ThreadSafe > VulkanExtensions;

// Initialize the various interfaces of the system

bool InitRenderBridge();

bool InitInstanceAndSystem();

bool InitInstance();

bool InitSystem();

(...)

};

// XRTrackingSystemBase.h

class HEADMOUNTEDDISPLAY_API FXRTrackingSystemBase : public IXRTrackingSystem

{

public:

FXRTrackingSystemBase(IARSystemSupport* InARImplementation);

virtual ~FXRTrackingSystemBase();

virtual bool DoesSupportPositionalTracking() const override { return false; }

virtual bool HasValidTrackingPosition() override { return DoesSupportPositionalTracking(); }

virtual uint32 CountTrackedDevices(EXRTrackedDeviceType Type = EXRTrackedDeviceType::Any) override;

virtual bool IsTracking(int32 DeviceId) override;

virtual bool GetTrackingSensorProperties(int32 DeviceId, FQuat& OutOrientation, FVector& OutPosition, FXRSensorProperties& OutSensorProperties) override;

virtual EXRTrackedDeviceType GetTrackedDeviceType(int32 DeviceId) const override;

virtual TSharedPtr< class IXRCamera, ESPMode::ThreadSafe > GetXRCamera(int32 DeviceId = HMDDeviceId) override;

virtual bool GetRelativeEyePose(int32 DeviceId, EStereoscopicPass Eye, FQuat& OutOrientation, FVector& OutPosition) override;

virtual void SetTrackingOrigin(EHMDTrackingOrigin::Type NewOrigin) override;

virtual EHMDTrackingOrigin::Type GetTrackingOrigin() const override;

virtual FTransform GetTrackingToWorldTransform() const override;

virtual bool GetFloorToEyeTrackingTransform(FTransform& OutFloorToEye) const override;

virtual void UpdateTrackingToWorldTransform(const FTransform& TrackingToWorldOverride) override;

virtual void CalibrateExternalTrackingSource(const FTransform& ExternalTrackingTransform) override;

virtual void UpdateExternalTrackingPosition(const FTransform& ExternalTrackingTransform) override;

virtual class IXRLoadingScreen* GetLoadingScreen() override final;

virtual void GetMotionControllerData(UObject* WorldContext, const EControllerHand Hand, FXRMotionControllerData& MotionControllerData) override;

(...)

protected:

TSharedPtr< class FDefaultXRCamera, ESPMode::ThreadSafe > XRCamera;

FTransform CachedTrackingToWorld;

FTransform CalibratedOffset;

mutable class IXRLoadingScreen* LoadingScreen;

(...)

};

// HeadMountedDisplayBase.h

class HEADMOUNTEDDISPLAY_API FHeadMountedDisplayBase : public FXRTrackingSystemBase, public IHeadMountedDisplay, public IStereoRendering

{

public:

FHeadMountedDisplayBase(IARSystemSupport* InARImplementation);

virtual ~FHeadMountedDisplayBase();

virtual IStereoLayers* GetStereoLayers() override;

virtual bool GetHMDDistortionEnabled(EShadingPath ShadingPath) const override;

virtual void OnLateUpdateApplied_RenderThread(FRHICommandListImmediate& RHICmdList, const FTransform& NewRelativeTransform) override;

virtual void CalculateStereoViewOffset(const enum EStereoscopicPass StereoPassType, FRotator& ViewRotation, const float WorldToMeters, FVector& ViewLocation) override;

virtual void InitCanvasFromView(FSceneView* InView, UCanvas* Canvas) override;

virtual bool IsSpectatorScreenActive() const override;

virtual class ISpectatorScreenController* GetSpectatorScreenController() override;

virtual class ISpectatorScreenController const* GetSpectatorScreenController() const override;

virtual FVector2D GetEyeCenterPoint_RenderThread(EStereoscopicPass Eye) const;

virtual FIntRect GetFullFlatEyeRect_RenderThread(FTexture2DRHIRef EyeTexture) const { return FIntRect(0, 0, 1, 1); }

virtual void CopyTexture_RenderThread(FRHICommandListImmediate& RHICmdList, FRHITexture2D* SrcTexture, FIntRect SrcRect, FRHITexture2D* DstTexture, FIntRect DstRect, bool bClearBlack, bool bNoAlpha) const {}

(...)

protected:

mutable TSharedPtr<class FDefaultStereoLayers, ESPMode::ThreadSafe> DefaultStereoLayers;

TUniquePtr<FDefaultSpectatorScreenController> SpectatorScreenController;

(...)

};

// OpenXRHMD.h

// OpenXR HMD interface.

class FOpenXRHMD

: public FHeadMountedDisplayBase

, public FXRRenderTargetManager

, public FSceneViewExtensionBase

, public FOpenXRAssetManager

, public TStereoLayerManager<FOpenXRLayer>

{

public:

virtual bool EnumerateTrackedDevices(TArray<int32>& OutDevices, EXRTrackedDeviceType Type = EXRTrackedDeviceType::Any) override;

virtual bool GetRelativeEyePose(int32 InDeviceId, EStereoscopicPass InEye, FQuat& OutOrientation, FVector& OutPosition) override;

virtual bool GetIsTracked(int32 DeviceId);

// Get the current pose of the HMD.

virtual bool GetCurrentPose(int32 DeviceId, FQuat& CurrentOrientation, FVector& CurrentPosition) override;

virtual bool GetPoseForTime(int32 DeviceId, FTimespan Timespan, FQuat& CurrentOrientation, FVector& CurrentPosition, bool& bProvidedLinearVelocity, FVector& LinearVelocity, bool& bProvidedAngularVelocity, FVector& AngularVelocityRadPerSec);

virtual void SetBaseRotation(const FRotator& BaseRot) override;

virtual FRotator GetBaseRotation() const override;

virtual void SetBaseOrientation(const FQuat& BaseOrient) override;

virtual FQuat GetBaseOrientation() const override;

virtual void SetTrackingOrigin(EHMDTrackingOrigin::Type NewOrigin) override;

virtual EHMDTrackingOrigin::Type GetTrackingOrigin() const override;

(...)

public:

FOpenXRHMD(const FAutoRegister&, XrInstance InInstance, XrSystemId InSystem, TRefCountPtr<FOpenXRRenderBridge>& InRenderBridge, TArray<const char*> InEnabledExtensions, TArray<class IOpenXRExtensionPlugin*> InExtensionPlugins, IARSystemSupport* ARSystemSupport);

virtual ~FOpenXRHMD();

// Begin rendering on the RHI thread.

void OnBeginRendering_RHIThread(const FPipelinedFrameState& InFrameState, FXRSwapChainPtr ColorSwapchain, FXRSwapChainPtr DepthSwapchain);

// Finish rendering on the RHI thread.

void OnFinishRendering_RHIThread();

(...)

private:

TArray<const char*> EnabledExtensions;

TArray<class IOpenXRExtensionPlugin*> ExtensionPlugins;

XrInstance Instance;

XrSystemId System;

// Render bridge

TRefCountPtr<FOpenXRRenderBridge> RenderBridge;

// Renderer module

IRendererModule* RendererModule;

TArray<FHMDViewMesh> HiddenAreaMeshes;

TArray<FHMDViewMesh> VisibleAreaMeshes;

(...)

};

// OpenXRHMD_RenderBridge.h

// OpenXR render bridge

class FOpenXRRenderBridge : public FXRRenderBridge

{

public:

virtual void* GetGraphicsBinding() = 0;

// Create the swapchain.

virtual FXRSwapChainPtr CreateSwapchain(...) = 0;

FXRSwapChainPtr CreateSwapchain(...);

// Present the rendered image.

virtual bool Present(int32& InOutSyncInterval) override

{

bool bNeedsNativePresent = true;

if (OpenXRHMD)

{

OpenXRHMD->OnFinishRendering_RHIThread();

bNeedsNativePresent = !OpenXRHMD->IsStandaloneStereoOnlyDevice();

}

InOutSyncInterval = 0; // VSync off

return bNeedsNativePresent;

}

(...)

private:

FOpenXRHMD* OpenXRHMD;

};

#ifdef XR_USE_GRAPHICS_API_D3D11

FOpenXRRenderBridge* CreateRenderBridge_D3D11(XrInstance InInstance, XrSystemId InSystem);

#endif

#ifdef XR_USE_GRAPHICS_API_D3D12

FOpenXRRenderBridge* CreateRenderBridge_D3D12(XrInstance InInstance, XrSystemId InSystem);

#endif

#ifdef XR_USE_GRAPHICS_API_OPENGL

FOpenXRRenderBridge* CreateRenderBridge_OpenGL(XrInstance InInstance, XrSystemId InSystem);

#endif

#ifdef XR_USE_GRAPHICS_API_VULKAN

FOpenXRRenderBridge* CreateRenderBridge_Vulkan(XrInstance InInstance, XrSystemId InSystem);

#endif

// OpenXRHMD_RenderBridge.cpp

// Render bridge for D3D11

class FD3D11RenderBridge : public FOpenXRRenderBridge

{

public:

FD3D11RenderBridge(XrInstance InInstance, XrSystemId InSystem);

virtual FXRSwapChainPtr CreateSwapchain(...) override final;

(...)

};

// Render bridge for D3D12

class FD3D12RenderBridge : public FOpenXRRenderBridge

{

public:

FD3D12RenderBridge(XrInstance InInstance, XrSystemId InSystem);

virtual FXRSwapChainPtr CreateSwapchain(...) override final;

(...)

};

// Render bridge for OpenGL

class FOpenGLRenderBridge : public FOpenXRRenderBridge

{

public:

FOpenGLRenderBridge(XrInstance InInstance, XrSystemId InSystem);

virtual FXRSwapChainPtr CreateSwapchain(...) override final;

(...)

};

// Render bridge for Vulkan

class FVulkanRenderBridge : public FOpenXRRenderBridge

{

public:

FVulkanRenderBridge(XrInstance InInstance, XrSystemId InSystem);

virtual FXRSwapChainPtr CreateSwapchain(...) override final;

(...)

};

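As shown above, OpenXR involves quite a few types, chiefly the inheritance trees of FOpenXRARSystem, FOpenXRHMDPlugin, FOpenXRHMD, and FOpenXRRenderBridge. Their inheritance relationships can be expressed with the following UML diagram: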

classDiagram-v2

IARSystemSupport <|-- FARSystemSupportBase

FARSystemSupportBase <|-- FOpenXRARSystem

class FOpenXRARSystem{

FOpenXRHMD* TrackingSystem;

}

IHeadMountedDisplayModule <|-- IOpenXRHMDPlugin

IOpenXRHMDPlugin <|-- FOpenXRHMDPlugin

class FOpenXRHMDPlugin{

XrInstance Instance;

XrSystemId System;

IOpenXRExtensionPlugin* ExtensionPlugins;

FOpenXRRenderBridge* RenderBridge;

}

IXRTrackingSystem <|-- FXRTrackingSystemBase

FXRTrackingSystemBase <|-- FHeadMountedDisplayBase

IHeadMountedDisplay <|-- FHeadMountedDisplayBase

IStereoRendering <|-- FHeadMountedDisplayBase

FHeadMountedDisplayBase <|-- FOpenXRHMD

FXRRenderTargetManager <|-- FOpenXRHMD

FSceneViewExtensionBase <|-- FOpenXRHMD

class FOpenXRHMD{

XrInstance Instance;

XrSystemId System;

FOpenXRRenderBridge* RenderBridge;

IRendererModule* RendererModule;

}

FRHIResource <|-- FRHICustomPresent

FRHICustomPresent <|-- FXRRenderBridge

FXRRenderBridge <|-- FOpenXRRenderBridge

FOpenXRRenderBridge <|-- FD3D11RenderBridge

FOpenXRRenderBridge <|-- FD3D12RenderBridge

FOpenXRRenderBridge <|-- FOpenGLRenderBridge

FOpenXRRenderBridge <|-- FVulkanRenderBridge

Linking them together gives:

classDiagram-v2

IARSystemSupport <|-- FARSystemSupportBase

FARSystemSupportBase <|-- FOpenXRARSystem

FOpenXRARSystem *-- FOpenXRHMD

IHeadMountedDisplayModule <|-- IOpenXRHMDPlugin

IOpenXRHMDPlugin <|-- FOpenXRHMDPlugin

FOpenXRHMDPlugin ..> FOpenXRARSystem

FOpenXRHMDPlugin --> FOpenXRRenderBridge

FOpenXRHMD --> FOpenXRRenderBridge

IXRTrackingSystem <|-- FXRTrackingSystemBase

FXRTrackingSystemBase <|-- FHeadMountedDisplayBase

IHeadMountedDisplay <|-- FHeadMountedDisplayBase

IStereoRendering <|-- FHeadMountedDisplayBase

FHeadMountedDisplayBase <|-- FOpenXRHMD

FRHIResource <|-- FRHICustomPresent

FRHICustomPresent <|-- FXRRenderBridge

FXRRenderBridge <|-- FOpenXRRenderBridge

So how do these key types hook into UE's main loop? The answer lies in the code below:

// UnrealEngine.cpp

bool UEngine::InitializeHMDDevice()

{

(...)

// Get the list of HMD modules.

FName Type = IHeadMountedDisplayModule::GetModularFeatureName();

IModularFeatures& ModularFeatures = IModularFeatures::Get();

TArray<IHeadMountedDisplayModule*> HMDModules = ModularFeatures.GetModularFeatureImplementations<IHeadMountedDisplayModule>(Type);

(...)

for (auto HMDModuleIt = HMDModules.CreateIterator(); HMDModuleIt; ++HMDModuleIt)

{

IHeadMountedDisplayModule* HMDModule = *HMDModuleIt;

(...)

if(HMDModule->IsHMDConnected())

{

// Create the tracking system instance through the XR module (an IXRTrackingSystem instance; for OpenXR this is FOpenXRHMD) and store it in UEngine's XRSystem member.

XRSystem = HMDModule->CreateTrackingSystem();

if (XRSystem.IsValid())

{

HMDModuleSelected = HMDModule;

break;

}

}

(...)

}

(...)

}

The creation and initialization code above applies not only to OpenXR but also to other XR plugins (such as FAppleARKitModule, FGoogleARCoreBaseModule, FGoogleVRHMDPlugin, FOculusHMDModule, and FSteamVRPlugin).
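The selection loop in UEngine::InitializeHMDDevice can be illustrated outside the engine with a minimal sketch. The types HmdModule, TrackingSystem, and Selection and the function SelectHmdModule are hypothetical stand-ins invented for this example; they only mimic the "first connected module that yields a valid tracking system wins" behavior and are not the engine's actual interfaces.

```cpp
#include <memory>
#include <string>
#include <vector>

// Hypothetical stand-in for IXRTrackingSystem; the real interface is far larger.
struct TrackingSystem {
    std::string Name;
};

// Hypothetical stand-in for IHeadMountedDisplayModule.
struct HmdModule {
    std::string Name;
    bool bConnected;   // what IsHMDConnected() reports
    bool bCanCreate;   // whether CreateTrackingSystem() succeeds

    bool IsHMDConnected() const { return bConnected; }

    // Returns nullptr on failure, mirroring an invalid XRSystem pointer.
    std::shared_ptr<TrackingSystem> CreateTrackingSystem() const {
        if (!bCanCreate) return nullptr;
        return std::make_shared<TrackingSystem>(TrackingSystem{Name + "TrackingSystem"});
    }
};

struct Selection {
    const HmdModule* Module = nullptr;
    std::shared_ptr<TrackingSystem> System;
};

// Walk the registered modules in order and keep the first one that is
// connected and yields a valid tracking system.
Selection SelectHmdModule(const std::vector<HmdModule>& Modules) {
    Selection Result;
    for (const HmdModule& Module : Modules) {
        if (!Module.IsHMDConnected()) continue;
        std::shared_ptr<TrackingSystem> System = Module.CreateTrackingSystem();
        if (System) {
            Result.Module = &Module;
            Result.System = System;
            break;
        }
    }
    return Result;
}
```

Because the loop stops at the first success, the order in which modules are registered decides which XR backend is used when several headsets report as connected.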

15.3.2.4 Oculus VR

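The source code of Oculus's XR plugin is at https://github.com/Oculus-VR/UnrealEngine/tree/4.27. The official UE 4.27 release also ships with the Oculus plugin built in, under Engine\Plugins\Runtime\Oculus\. The plugin inherits from or implements several important UE XR types: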

// IOculusHMDModule.h

// The public interface of this module. In most cases, this interface is only exposed to sibling modules within this plugin.

class IOculusHMDModule : public IHeadMountedDisplayModule

{

public:

static inline IOculusHMDModule& Get();

static inline bool IsAvailable();

// Gets the current orientation and position of the HMD. If position tracking is unavailable, DevicePosition will be a zero vector.

virtual void GetPose(FRotator& DeviceRotation, FVector& DevicePosition, FVector& NeckPosition, bool bUseOrienationForPlayerCamera = false, bool bUsePositionForPlayerCamera = false, const FVector PositionScale = FVector::ZeroVector) = 0;

// Reports raw sensor data. Any parameter the HMD does not support is set to zero.

virtual void GetRawSensorData(FVector& AngularAcceleration, FVector& LinearAcceleration, FVector& AngularVelocity, FVector& LinearVelocity, float& TimeInSeconds) = 0;

// Returns the user profile.

virtual bool GetUserProfile(struct FHmdUserProfile& Profile)=0;

virtual void SetBaseRotationAndBaseOffsetInMeters(FRotator Rotation, FVector BaseOffsetInMeters, EOrientPositionSelector::Type Options) = 0;

virtual void GetBaseRotationAndBaseOffsetInMeters(FRotator& OutRotation, FVector& OutBaseOffsetInMeters) = 0;

virtual void SetBaseRotationAndPositionOffset(FRotator BaseRot, FVector PosOffset, EOrientPositionSelector::Type Options) = 0;

virtual void GetBaseRotationAndPositionOffset(FRotator& OutRot, FVector& OutPosOffset) = 0;

virtual class IStereoLayers* GetStereoLayers() = 0;

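};

Overall, the structure is quite similar to OpenXR's, so it is not repeated here; interested readers can study the source in the plugin directory. For more, see: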

Getting Started Developing Unreal Engine Apps for Quest

Oculus Integration for Unreal Engine Basics

Developing for Oculus

15.3.3 UE VR Optimization

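15.3.3.1 Frame Rate Optimization

Most VR applications run their own procedure to control the VR frame rate, so several general project settings in Unreal Engine 4 that interfere with VR applications need to be disabled. Follow these steps to disable Unreal Engine's general frame rate settings:

- In the editor's main menu, choose Edit > Project Settings to open the Project Settings window.

- In the Project Settings window, select General Settings under the Engine section.

- Under the Framerate section:

  - Disable Smooth Frame Rate.

  - Disable Use Fixed Frame Rate.

  - Set Custom TimeStep to None.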

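15.3.3.2 Experience Optimization

Simulation sickness is a form of motion sickness that affects users in immersive experiences. The best practices below limit the discomfort users feel in VR.

- Maintain frame rate: Low frame rates can cause simulation sickness. Optimize the project as much as possible to improve the user's experience. The target frame rate is 90 for Oculus Quest 1 and 2, HTC Vive, Valve Index, PSVR, and HoloLens 2, and 60 for ARKit and ARCore (nominal values; supported refresh rates vary by device and runtime configuration).

- User testing: Have a range of users test the application and monitor the discomfort they experience in it, so that simulation sickness can be avoided.

- Let users control the camera: Cinematic cameras and other designs that take camera movement away from the player are the main culprits of discomfort in immersive experiences. Avoid camera effects such as head bobbing and camera shake, since they can cause discomfort when the user cannot control them.

- FOV must match the device: The FOV is set through the device's SDK and internal configuration and matches the physical geometry of the headset and lenses. It therefore cannot be changed in Unreal Engine, and users must not modify it either. If the FOV is altered, the world appears to warp as you turn your head, which causes discomfort.

- Use dimmer lighting and colors, and avoid smearing: When designing VR elements, use lighting and colors that are dimmer than usual. In VR, strong, vivid lighting makes users develop simulation sickness faster. Cooler tones and dim lighting help avoid discomfort and also prevent smearing between bright and dark areas of the screen.

- Movement speed should not change: Users should start at full speed rather than gradually accelerating to it.

- Avoid post-process effects that greatly affect what the user sees: Avoid effects such as depth of field and motion blur so that users do not feel discomfort.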

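15.3.3.3 Other Optimizations

Avoid the following rendering techniques, which are problematic in VR:

- Screen space reflections (SSR): Although SSR works in VR, the reflections it produces may not match those in the real world. Instead of SSR, you can use reflection probes, which are cheaper and less prone to reflection-matching problems.

- Screen space global illumination: In an HMD, screen-space techniques can produce differences between what the two eyes see, and these differences can cause discomfort.

- Ray tracing: Ray tracing in VR applications currently cannot sustain the resolution and frame rate needed for a comfortable VR experience.

- 2D user interfaces or billboard sprites: These do not support stereo rendering well and look poor in a stereo environment; use widget components placed in the 3D world instead.

- Normal maps: When viewing normal-mapped objects in VR, you will notice they no longer produce the effect they used to, because normal maps do not account for binocular display or motion parallax. As a result, normal maps usually look flat through a VR device. This does not mean normal maps should not or need not be used; rather, evaluate more carefully whether the data baked into the normal map could be represented with geometry instead. Parallax mapping can be used as a replacement: it is an upgraded form of normal mapping that accounts for depth cues normal maps ignore. A parallax mapping shader conveys depth better and makes objects appear more detailed, because it corrects itself for whatever angle you view it from and shows the depth appropriate to your viewpoint. Parallax mapping works best for cobblestone paths and surfaces with fine detail.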

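Other VR optimizations in UE:

- Avoid dynamic lighting and shadows.

- Avoid heavy use of translucency.

- Mind instances in visible batches: if one element of an instanced group is visible, the entire group is drawn.

- Set up LODs for all content.

- Simplify material complexity and reduce the number of materials per object.

- Bake content of low importance.

- Avoid large geometry that can enclose the player.

- Use precomputed visibility volumes wherever possible.

- Enable VR instanced stereo / mobile VR multi-view.

- Disable post-processing. Because VR rendering is demanding, many advanced post-process features that are on by default need to be disabled, otherwise the project may suffer serious performance problems. Complete the setup with the following steps:

  - Add a Post Process (PP) volume to the level.

  - Select the PP volume and enable the Unbound option in the Post Process Volume section so that the volume's settings apply to the entire level.

  - Open the Settings of the Post Process Volume and, in each section, disable the enabled PP settings: click the property, then change the default value (usually 1.0) to 0 to disable the feature.

You do not need to go through every section and set all properties to 0. Start by disabling the expensive features, such as Lens Flares, Screen Space Reflections, Temporal AA, SSAO, Bloom, and anything else that may affect performance.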

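- Set sensible memory buckets per platform. You can specify how a UE4 project runs on platforms with different memory capacities by adding memory buckets and the options each one uses. To do so, open the project's Engine.ini file in a text editor (use Android/AndroidEngine.ini, IOS/IOSEngine.ini, or any PlatformNameEngine.ini file to configure a specific platform). Some defaults are already provided for convenience; the following are example settings from AndroidEngine.ini: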

[PlatformMemoryBuckets]

LargestMemoryBucket_MinGB=8

LargerMemoryBucket_MinGB=6

DefaultMemoryBucket_MinGB=4

SmallerMemoryBucket_MinGB=3

; for now, we require 3gb

SmallestMemoryBucket_MinGB=3

DeviceProfiles.ini can then specify which settings go with which memory bucket. For example, to adjust the amount of memory used by the texture streaming pool, add the following to DeviceProfiles.ini:

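[Mobile DeviceProfile]

+CVars_Default=r.Streaming.PoolSize=180

+CVars_Smaller=r.Streaming.PoolSize=150

+CVars_Smallest=r.Streaming.PoolSize=70

+CVars_Tiniest=r.Streaming.PoolSize=16

Here "Mobile" can be replaced with the name of the platform whose device profile you are adding. Memory buckets can also be used to choose rendering settings. In the example below, the TextureLODGroup for textures that use the World settings is set up so that, when UE4 detects a device in the smallest memory bucket (Tiniest in this example), MaxLODSize is reduced from 1024 to 256, lowering the memory needed by textures whose LOD group is set to World.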

[Mobile DeviceProfile]

+TextureLODGroups=(Group=TEXTUREGROUP_World, MaxLODSize=1024, OptionalMaxLODSize=1024, OptionalLODBias=1, MaxLODSize_Smaller=1024, MaxLODSize_Smallest=1024, MaxLODSize_Tiniest=256, LODBias=0, LODBias_Smaller=0, LODBias_Smallest=1, MinMagFilter=aniso, MipFilter=point)
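To make the bucket mechanism concrete, here is a minimal sketch of how a device might be mapped to a bucket and then to a streaming pool size. The thresholds and pool sizes come from the example settings above; the selection rule itself (the largest bucket whose minimum is met, with Tiniest as the fallback) is an assumption made for this illustration, and MemoryBucketConfig, PickMemoryBucket, and StreamingPoolSizeMB are hypothetical names rather than engine APIs.

```cpp
#include <cassert>
#include <string>

// Thresholds in GB, mirroring the [PlatformMemoryBuckets] example.
struct MemoryBucketConfig {
    int LargestMinGB;
    int LargerMinGB;
    int DefaultMinGB;
    int SmallerMinGB;
    int SmallestMinGB;
};

// Assumed rule: the device lands in the largest bucket whose minimum it
// meets; anything below the Smallest threshold falls into Tiniest.
std::string PickMemoryBucket(const MemoryBucketConfig& Config, int DeviceGB) {
    assert(DeviceGB >= 0);
    if (DeviceGB >= Config.LargestMinGB) return "Largest";
    if (DeviceGB >= Config.LargerMinGB) return "Larger";
    if (DeviceGB >= Config.DefaultMinGB) return "Default";
    if (DeviceGB >= Config.SmallerMinGB) return "Smaller";
    if (DeviceGB >= Config.SmallestMinGB) return "Smallest";
    return "Tiniest";
}

// Texture streaming pool size in MB per bucket, from the DeviceProfiles.ini example.
int StreamingPoolSizeMB(const std::string& Bucket) {
    if (Bucket == "Smaller") return 150;
    if (Bucket == "Smallest") return 70;
    if (Bucket == "Tiniest") return 16;
    return 180; // in this sketch, CVars_Default covers every other bucket
}
```

Under these assumptions a 4 GB device uses the Default bucket with a 180 MB pool, while a 2 GB device falls into Tiniest with a 16 MB pool. Since Smaller and Smallest share the 3 GB threshold in the example, a 3 GB device resolves to Smaller here.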

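- Choose an appropriate thread synchronization mode. UE supports the following modes:

  - r.GTSyncType 0: the game thread syncs with the render thread (legacy behavior, default).

  - r.GTSyncType 1: the game thread syncs with the RHI thread (equivalent to UE4 before parallel rendering was adopted).

  - r.GTSyncType 2: the game thread syncs with the swapchain present, plus or minus an offset in milliseconds. To synchronize in this mode, the engine tracks displayed frames through an index passed to the driver when Present() is called. The index is retrieved from the platform's frame flip statistics, which give the precise time at which each frame was flipped. The engine uses these values to predict when the next frame should flip, then starts the next game thread frame based on that time.

In addition, rhi.SyncSlackMS determines the offset applied to the predicted next VSync time. Decreasing it reduces input latency but shortens the engine pipeline, making dropped frames from hitches more likely. Conversely, increasing it lengthens the pipeline, giving the game more resilience to hitches at the cost of higher input latency. In general, games using this frame sync system should keep rhi.SyncSlackMS as small as possible while maintaining an acceptable frame rate. For example, a game updating at 30 Hz would use the following CVar settings: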

- rhi.SyncInterval 2

- r.GTSyncType 2

- r.OneFrameThreadLag 1

- r.Vsync 1

- rhi.SyncSlackMS 0

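This gives a best-case input latency of about 66 ms (two 30 Hz frames). Raising rhi.SyncSlackMS to 10 makes the best-case input latency about 76 ms. r.GTSyncType 2 also works for games updating at 60 Hz (that is, with rhi.SyncInterval set to 1), but the benefit is less noticeable because doubling the frame rate relative to 30 Hz already halves the input latency.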

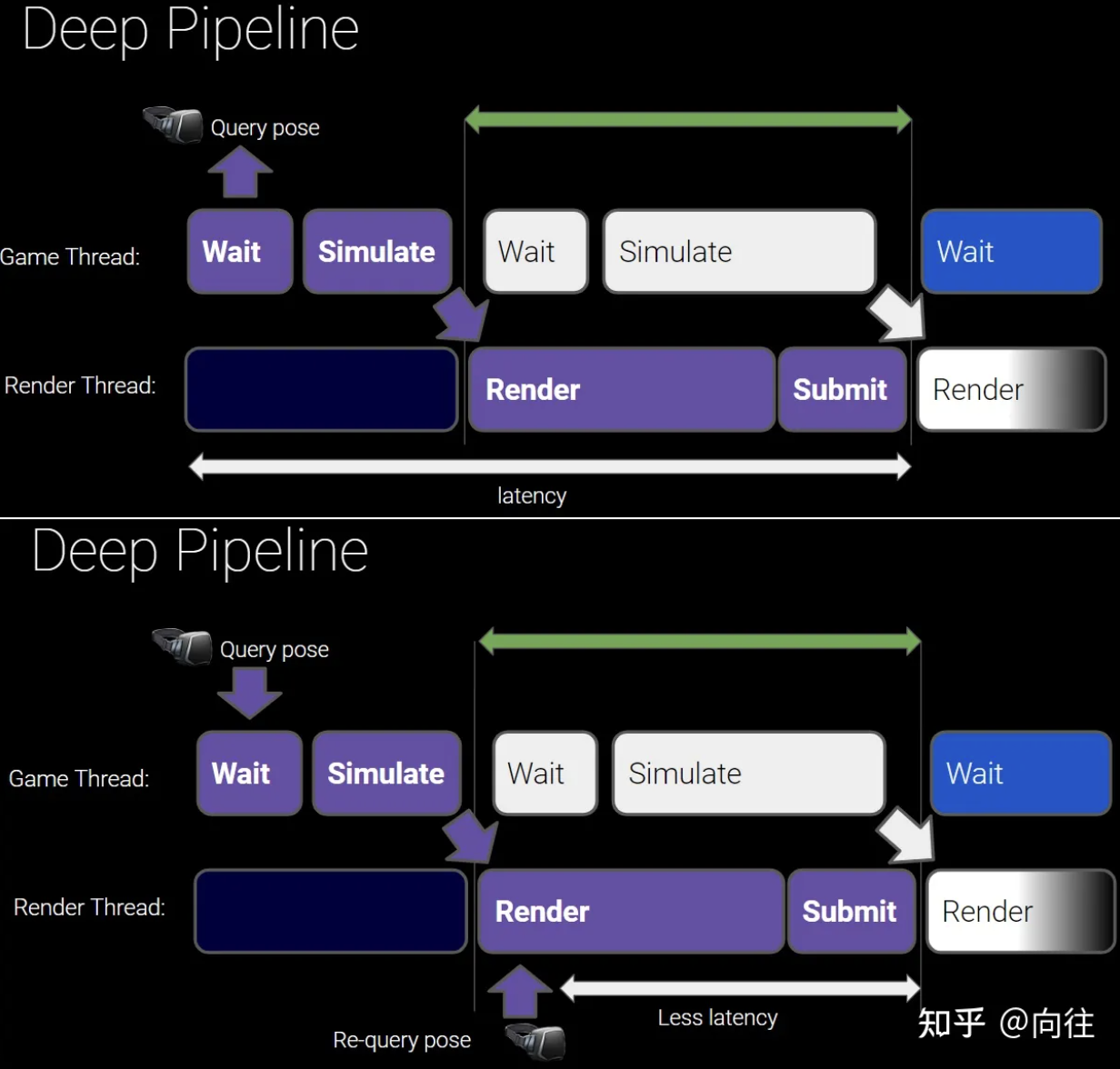

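- Re-query the HMD pose on the render thread to reduce latency.

Top: the pose is queried from the device at the start of simulation and used for rendering. The head-mounted display can feel laggy or sluggish, because up to two frames may pass between querying the device position and displaying the resulting frame. Bottom: re-querying the pose just before rendering and using the updated pose to compute the rendering transforms solves the problem.

- Other: enable VSync, enable dynamic resolution (DynRes), use the compositor correctly, and so on.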
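The benefit of re-querying the HMD pose on the render thread, described in the list above, can be estimated with a toy model. The sketch assumes a head turning at a constant yaw speed, so the angular error is simply the yaw speed multiplied by the time between sampling the pose and displaying the frame. HeadYawDeg and PoseErrorDeg are hypothetical helpers, and the 90 Hz and 100 degrees per second figures in the usage note are illustrative assumptions.

```cpp
#include <cassert>
#include <cmath>

// Head yaw in degrees at a given time, for a head turning at constant speed.
double HeadYawDeg(double YawSpeedDegPerSec, double TimeSec) {
    return YawSpeedDegPerSec * TimeSec;
}

// Angular error perceived when a frame rendered with the pose sampled at
// SampleTimeSec is shown to the user at DisplayTimeSec.
double PoseErrorDeg(double YawSpeedDegPerSec, double SampleTimeSec, double DisplayTimeSec) {
    assert(DisplayTimeSec >= SampleTimeSec);
    return std::fabs(HeadYawDeg(YawSpeedDegPerSec, DisplayTimeSec) -
                     HeadYawDeg(YawSpeedDegPerSec, SampleTimeSec));
}
```

At 90 Hz with the head turning at 100 degrees per second, a pose sampled two frames before display gives PoseErrorDeg(100.0, 0.0, 2.0 / 90.0), roughly 2.22 degrees. Re-sampling one frame before display gives PoseErrorDeg(100.0, 1.0 / 90.0, 2.0 / 90.0), roughly 1.11 degrees, so the later the pose is sampled, the smaller the mismatch the user sees.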

For more on UE XR optimization, see:

- Unreal Engine XR(4.27)

- VR Best Practices

- VR Performance And Profiling

- VR Performance Features

- VR Performance Tools

- Device Profiles

- Low Latency Frame Syncing

- Profiling Stereo Rendering

- 12.6.5 XR Optimization

- Getting Started Developing Unreal Engine Apps for Quest

- Unreal Advanced Rendering

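15.3.4 UE VR Performance Profiling

In UE4, overall in-game statistics can be obtained in the following ways.

- stat unit: shows the overall game thread, draw thread, and GPU times, plus the overall frame time. It is best for checking whether the total frame time is within the desired range and for reading the game thread time; it is not suited to collecting draw thread and GPU times.

- startfpschart / stopfpschart: run these commands to find out what percentage of time is spent at or above 90 Hz. They capture and aggregate data over the window between start and stop, then dump a file with bucketed frame rate information. Note that the game sometimes reports slightly below 90 Hz when it is actually running at 90, so it is best to check the 80+ bucket to determine the time actually spent at frame rate.

- stat gpu: provides data similar to the GPU profiler, which can be watched and monitored in-game; useful for quickly checking the cost of GPU work.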

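Real-time stats are especially useful when data needs to be collected while the game is running (for example, for charting). The live display can be used to analyze features toggled through console variables or quality settings, or to see results immediately while optimizing in the editor. Stats are declared in code as float counters, for example:

DECLARE_FLOAT_COUNTER_STAT(TEXT("Postprocessing"), Stat_GPU_Postprocessing, STATGROUP_GPU);

Blocks of render thread code can then be instrumented with the SCOPED_GPU_STAT macro, which works much like SCOPED_DRAW_EVENT, for example:

SCOPED_GPU_STAT(RHICmdList, Stat_GPU_Postprocessing);

Unlike draw events, GPU stats are cumulative: multiple entries can be added for the same stat and they are aggregated. Anything not explicitly instrumented is included in the catch-all [unaccounted] stat. If that value is high, some work is not yet covered by explicit stats, and more macros need to be added to track it.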

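In addition, both Oculus and SteamVR offer third-party tools for understanding performance. It is advisable to use them to check actual frame times and compositor cost, or to rely on third-party debugging software such as RenderDoc.

The performance profiling tool built into the Oculus HMD.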

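15.4 Summary

This article covered the various XR rendering techniques, along with the rendering flow and main algorithms of UE's XR integration, to give readers a general understanding of this module. Further technical details and principles are left for readers to dig out of the UE source code themselves. A few fairly complete, comprehensive, and in-depth XR courses and books are recommended: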

- Stanford Course: EE 267

- Berkeley Course: CS 184

- VIRTUAL REALITY (Steven M. LaValle)

References

- Unreal Engine Source

- Rendering and Graphics

- Materials

- Graphics Programming

- Unreal Engine XR(4.27)

- VR Best Practices

- VR Performance And Profiling

- VR Performance Features

- VR Performance Tools

- Device Profiles

- Low Latency Frame Syncing

- Profiling Stereo Rendering

- VR industrial applications―A singapore perspective

- HMD based VR Service Framework

- X3D

- Virtual Reality Capabilities of Graphics Engines

- Virtual Reality and Getting the Best from Your Next-Gen Engine

- Introduction to PowerVR Ray Tracing

- Diving into VR World with Oculus

- Advanced VR Rendering

- Augmented Reality 2.0: Developing experiences for Google Tango and beyond

- Audio For Cinematic VR

- 10 Tips for VR in the Retail/Trade/Event Space

- Panel: Lessons from IEEE Virtual Reality

- Architecting Archean: Simultaneously Building for Room-Scale and Mobile VR, AR, and All Input Devices

- Creating Mixed Realities with HoloLens, Tango, and Beyond

- Advanced VR Rendering Performance

- Five Years of VR: An Oral History from Oculus Rift to Quest 2

- A Year in VR: A Look Back at VR's Launch

- AAA on a VR Budget & Timeline: Tech-Centric Postmortem

- A Game Designer's Overview of the Neuroscience of VR

- 'Obduction', from 2D to VR: A Postmortem and Lessons Learned

- Conemarching in VR: Developing a Fractal experience at 90 FPS

- CocoVR - Spherical Multiprojection

- Digging for Fire: Virtual Reality Gaming 2019

- Make Your Game Run on Quest (and Look Pretty Too)

- 2020 AUGMENTED AND VIRTUAL REALITY SURVEY REPORT

- Entertainment VR/AR: Building an Expansive Star Wars World in Mobile VR

- Q-VR: System-Level Design for Future Mobile Collaborative Virtual Reality

- Virtual Reality and Virtual Reality System Components

- Towards a Better Understanding of VR Sickness

- CalVR: An Advanced Open Source Virtual Reality Software Framework

- Cloud VR Oriented Bearer Network White Paper

- xR, AR, VR, MR: What’s the Difference in Reality?

- Unity XR

- How to maximize AR and VR performance with advanced stereo rendering

- NVIDIA XR SOLUTIONS

- Qualcomm XR (VR/AR)

- Qualcomm XR-VR-AR

- Snapdragon XR2 5G Platform

- Snapdragon XR2 HMD Reference Design

- XR Today

- Google VR

- Oculus Quest

- Develop for the Quest Platform

- Getting Started Developing Unreal Engine Apps for Quest

- Unreal Advanced Rendering

- Tone Mapping in Unreal Engine

- HTC XR

- Pico VR

- VR Compositor Layers

- VR Timewarp, Spacewarp, Reprojection, And Motion Smoothing Explained

- Asynchronous TimeWarp (ATW)

- Asynchronous SpaceWarp 2.0

- Oculus VR Rendering

- EYEBALLS TO ARCHITECTURE: HOW AR AND VR ARE UPENDING COMPUTER GRAPHICS

- Effective Design of Educational Virtual Reality Applications for Medicine using Knowledge-Engineering Techniques

- Extended Reality (XR) Experience for Healthcare

- A Study of Networking Performance in a Multi-user VR Environment

- GAMEWORKS VR

- VIRTUAL REALITY

- Real-Time ray casting for virtual reality

- AUGMENTED & VIRTUAL REALITY GLOSSARY 2018

- Virtual Hands in VR: Motion Capture, Synthesis, and Perception

- The World’s Leading VR Solution Company

- Intro to Virtual Reality

- Intro to Virtual Reality 2

- Stanford Course: EE 267

- EE 267: Introduction and Overview

- The Human Visual System

- The Graphics Pipeline and OpenGL IV: Stereo Rendering, Depth of Field Rendering, Multi-pass Rendering

- Head Mounted Display Optics I

- Head Mounted Display Optics II

- THE NEW REALTY FOR MOBILE GAMING The VR/ AR Opportunity

- eXtended Reality

- OpenXR

- XR Spec

- OpenXR Ecosystem Update Bringing to Life the Dream of Portable Native XR

- QuickTime VR – An Image-Based Approach to Virtual Environment Navigation

- Capture, Reconstruction, and Representation of the Visual Real World for Virtual Reality

- High-Fidelity Facial and Speech Animation for VR HMDs

- Temporally Adaptive Shading Reuse for Real-Time Rendering and Virtual Reality

- The Mobile Future of eXtended Reality (XR)

- Virtual Reality/Augmented Reality White Paper 2017

- VR/AR Industry Research Report

- VIRTUAL REALITY by Steven M. LaValle

- Berkeley Course: CS184

- Using multiview rendering

- Oculus Integration for Unreal Engine Basics

- Developing for Oculus

Author: 向往

Source: https://zhuanlan.zhihu.com/p/561335027