Reprinted from: AI人工智能初学者

Author: ChaucerG

1 Introduction to Lightweight Networks

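The networks covered earlier keep growing larger and deeper, yet in real applications compute is limited while the business demand for these models remains strong. The usual response to efficiency problems is to compress and prune the model, reducing its parameter count and therefore its computation so that inference runs faster. Rather than post-processing an existing model, lightweight model design takes a different route.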

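Lightweight model design focuses on more efficient ways of computing inside the network, reducing the number of parameters while losing as little accuracy as possible.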

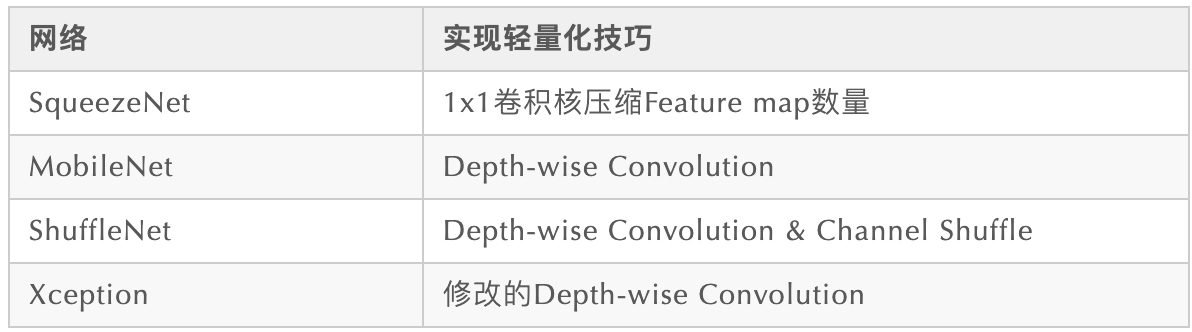

Four commonly used families of lightweight models are _SqueezeNet, MobileNet, ShuffleNet, and Xception_, all of which are widely used in practice.

1.1 SqueezeNet

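SqueezeNet is a hand-designed lightweight network that reaches AlexNet-level accuracy on ImageNet with only 1/50 of AlexNet's parameters. With model compression techniques applied on top, SqueezeNet can be shrunk further to 0.5MB, 1/510 the size of AlexNet.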

The SqueezeNet paper introduces two terms: CNN microarchitecture and CNN macroarchitecture.

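- CNN microarchitecture: the organization of individual layers or of small modules made of a few convolutional layers, such as the Inception module.

- CNN macroarchitecture: the organization of layers or modules into a complete network; here depth is an important parameter.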

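Design strategies:

- (1) Replace 3x3 filters with 1x1 filters; a 1x1 filter has 9x fewer parameters than a 3x3 filter.

- (2) Decrease the number of input channels to the 3x3 filters, which reduces parameters further; the paper does this with squeeze layers.

- (3) Downsample late in the network so that convolution layers have large activation maps; larger maps retain more information, which can improve accuracy.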

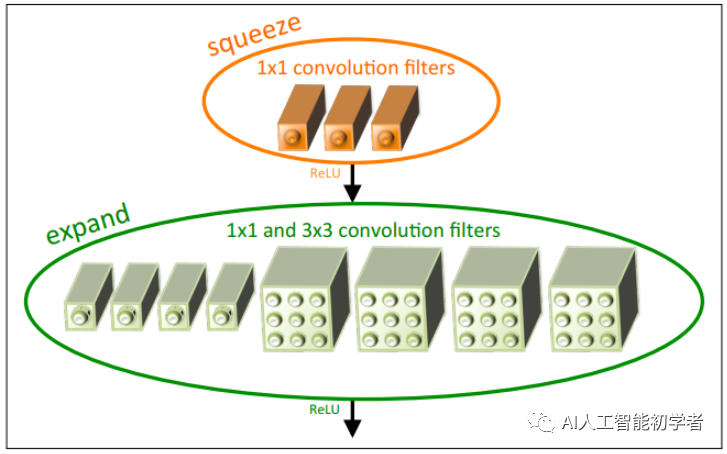

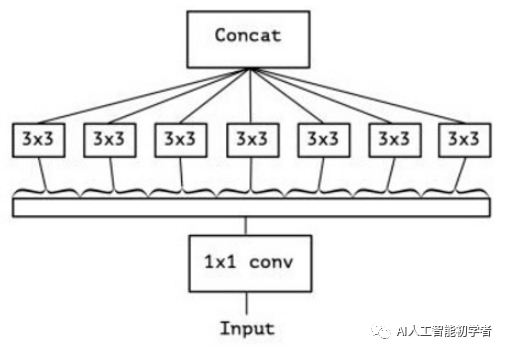

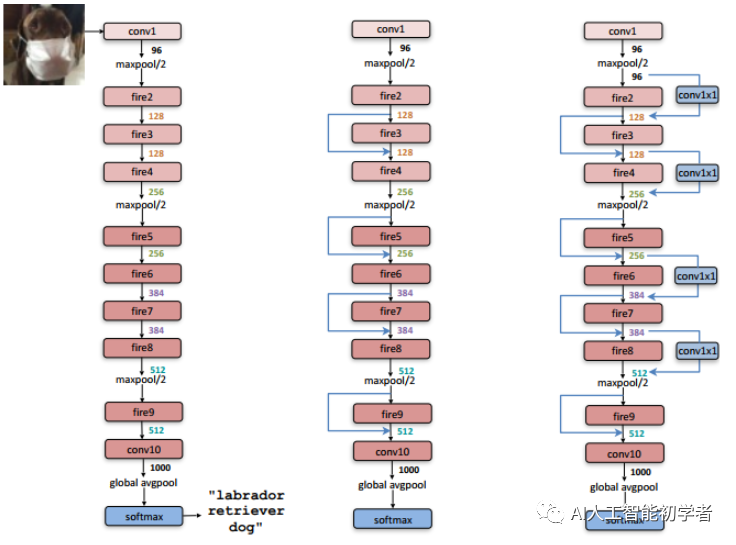

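Strategies (1) and (2) reduce the number of parameters, while strategy (3) maximizes accuracy under a limited parameter budget. As shown in the figures, the authors build the CNN from a new building block, the Fire module, which applies strategies (1) and (2); strategy (3) is applied at the macro level through the placement of the pooling layers.

A Fire module consists of a squeeze layer and an expand layer. The squeeze layer contains only 1x1 convolutions and reduces the number of input channels fed to the expand layer. The expand layer mixes 1x1 and 3x3 convolutions; because the number of squeeze filters is kept smaller than the total number of expand filters (s1x1 < e1x1 + e3x3), the expand layer widens the feature maps again, hence its name.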
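To see strategies (1) and (2) at work, the sketch below counts the weights of Fire(96, 16, 64, 64), the first Fire module of SqueezeNet v1.0, and compares them with a plain 3x3 convolution mapping the same 96 input channels to the same 128 output channels. Bias terms are ignored, and the helper names `conv_weights` and `fire_weights` are hypothetical, chosen only for this illustration.

```python
def conv_weights(c_in, c_out, k):
    """Weight count of a k x k convolution from c_in to c_out channels (bias ignored)."""
    return k * k * c_in * c_out


def fire_weights(c_in, s1x1, e1x1, e3x3):
    """Weight count of a Fire module with the given squeeze/expand widths."""
    squeeze = conv_weights(c_in, s1x1, 1)   # strategy (2): only s1x1 channels reach the 3x3 filters
    expand = conv_weights(s1x1, e1x1, 1)    # strategy (1): part of the expand layer is 1x1
    expand += conv_weights(s1x1, e3x3, 3)
    return squeeze + expand


fire = fire_weights(96, 16, 64, 64)   # Fire(96, 16, 64, 64): 96 -> 64 + 64 = 128 channels
plain = conv_weights(96, 128, 3)      # plain 3x3 conv with the same input/output widths
print(fire, plain, round(plain / fire, 1))
# → 11776 110592 9.4
```

For the same input and output widths, the Fire module needs roughly 9.4x fewer weights than the plain 3x3 convolution.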

The Fire module in PyTorch:

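```python
import torch
import torch.nn as nn


class Fire(nn.Module):
    def __init__(self, inplanes, squeeze_planes, expand1x1_planes, expand3x3_planes):
        super(Fire, self).__init__()
        self.inplanes = inplanes
        # squeeze layer: 1x1 convs that reduce the channels fed to the expand layer
        self.squeeze = nn.Conv2d(inplanes, squeeze_planes, kernel_size=1)
        self.squeeze_activation = nn.ReLU(inplace=True)
        # expand layer: a mix of 1x1 and 3x3 convs (padding=1 keeps the spatial size)
        self.expand1x1 = nn.Conv2d(squeeze_planes, expand1x1_planes, kernel_size=1)
        self.expand1x1_activation = nn.ReLU(inplace=True)
        self.expand3x3 = nn.Conv2d(squeeze_planes, expand3x3_planes, kernel_size=3, padding=1)
        self.expand3x3_activation = nn.ReLU(inplace=True)

    def forward(self, x):
        x = self.squeeze_activation(self.squeeze(x))
        # concatenate both expand branches along the channel dimension
        return torch.cat([
            self.expand1x1_activation(self.expand1x1(x)),
            self.expand3x3_activation(self.expand3x3(x))
        ], 1)
```

The overall network starts with a standalone convolution layer (conv1), followed by eight Fire modules (Fire2 to Fire9), and ends with a final convolution layer (conv10). The number of filters per Fire module increases gradually through the network. Max-pooling with stride 2 is applied after conv1, Fire4 and Fire8, and global average pooling follows conv10; this relatively late pooling follows strategy (3). The authors also compare SqueezeNet variants with bypass (skip) connections added.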
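The channel bookkeeping of the v1.0 macroarchitecture can be checked without PyTorch. The sketch below copies the Fire configurations (in_channels, squeeze, expand1x1, expand3x3) from the SqueezeNet v1.0 implementation and confirms that each module's input width equals the concatenated output of the previous one, that every squeeze layer is narrower than its input, and that 512 channels reach conv10. The variable names are illustrative only.

```python
# Fire2 ... Fire9 of SqueezeNet v1.0: (in_channels, squeeze, expand1x1, expand3x3)
fires = [
    (96, 16, 64, 64), (128, 16, 64, 64), (128, 32, 128, 128),                        # pool after Fire4
    (256, 32, 128, 128), (256, 48, 192, 192), (384, 48, 192, 192), (384, 64, 256, 256),  # pool after Fire8
    (512, 64, 256, 256),
]

channels = 96  # conv1 outputs 96 channels
for idx, (c_in, s, e1, e3) in enumerate(fires, start=2):
    assert c_in == channels, f"Fire{idx} expects {c_in} channels, got {channels}"
    assert s < c_in       # the squeeze layer always narrows its input
    channels = e1 + e3    # expand branches are concatenated along the channel axis
    print(f"Fire{idx}: {c_in} -> squeeze {s} -> {channels}")

print("conv10 input channels:", channels)
# → conv10 input channels: 512
```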

A PyTorch implementation of SqueezeNet v1.0 and v1.1 (the plain variant, without bypass connections), built from the Fire module above:

```python
import torch
import torch.nn as nn
import torch.nn.init as init

# Fire is the module defined in the previous code block.


class SqueezeNet(nn.Module):
    def __init__(self, version=1.0, num_classes=1000):
        super(SqueezeNet, self).__init__()
        if version not in [1.0, 1.1]:
            raise ValueError("Unsupported SqueezeNet version {version}: 1.0 or 1.1 expected".format(version=version))
        self.num_classes = num_classes
        if version == 1.0:
            self.features = nn.Sequential(
                nn.Conv2d(3, 96, kernel_size=7, stride=2),
                nn.ReLU(inplace=True),
                nn.MaxPool2d(kernel_size=3, stride=2, ceil_mode=True),
                Fire(96, 16, 64, 64),
                Fire(128, 16, 64, 64),
                Fire(128, 32, 128, 128),
                nn.MaxPool2d(kernel_size=3, stride=2, ceil_mode=True),
                Fire(256, 32, 128, 128),
                Fire(256, 48, 192, 192),
                Fire(384, 48, 192, 192),
                Fire(384, 64, 256, 256),
                nn.MaxPool2d(kernel_size=3, stride=2, ceil_mode=True),
                Fire(512, 64, 256, 256),
            )
        else:
            # v1.1: a 3x3 first conv with 64 filters and earlier pooling
            self.features = nn.Sequential(
                nn.Conv2d(3, 64, kernel_size=3, stride=2),
                nn.ReLU(inplace=True),
                nn.MaxPool2d(kernel_size=3, stride=2, ceil_mode=True),
                Fire(64, 16, 64, 64),
                Fire(128, 16, 64, 64),
                nn.MaxPool2d(kernel_size=3, stride=2, ceil_mode=True),
                Fire(128, 32, 128, 128),
                Fire(256, 32, 128, 128),
                nn.MaxPool2d(kernel_size=3, stride=2, ceil_mode=True),
                Fire(256, 48, 192, 192),
                Fire(384, 48, 192, 192),
                Fire(384, 64, 256, 256),
                Fire(512, 64, 256, 256),
            )
        # Final convolution is initialized differently from the rest
        final_conv = nn.Conv2d(512, self.num_classes, kernel_size=1)
        self.classifier = nn.Sequential(
            nn.Dropout(p=0.5), final_conv, nn.ReLU(inplace=True),
            # global average pooling instead of fully connected layers
            nn.AdaptiveAvgPool2d((1, 1))
        )
        for m in self.modules():
            if isinstance(m, nn.Conv2d):
                if m is final_conv:
                    init.normal_(m.weight, mean=0.0, std=0.01)
                else:
                    init.kaiming_uniform_(m.weight)
                if m.bias is not None:
                    init.constant_(m.bias, 0)

    def forward(self, x):
        x = self.features(x)
        x = self.classifier(x)
        return x.view(x.size(0), self.num_classes)
```

1.2 MobileNet

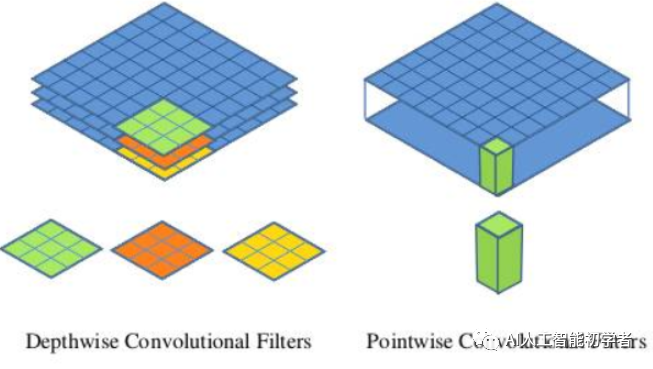

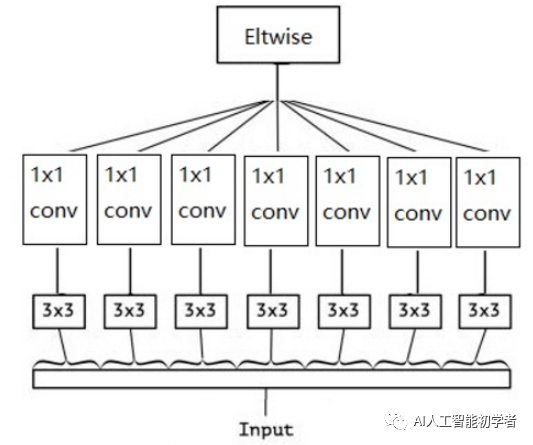

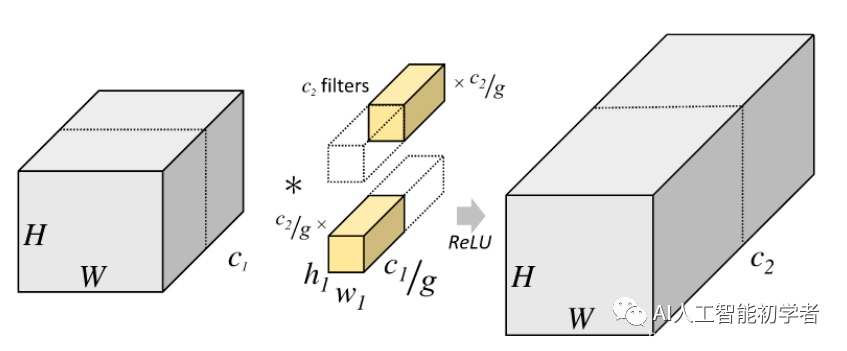

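MobileNet essentially factorizes the convolution kernel: it introduces depthwise separable convolutions, which split a standard convolution into a depthwise convolution followed by a pointwise (1x1) convolution, as illustrated in the figures.

A brief explanation of depthwise separable convolutions: suppose the input feature map has C1 channels. First, a separate 3x3 convolution is applied to each channel, producing a new feature map with C1 channels; then a 1x1 convolution over this new feature map produces the C2 output channels. The whole process therefore has 3x3xC1 + 1x1xC1xC2 parameters, compared with 3x3xC1xC2 for a standard 3x3 convolution.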
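Plugging numbers into these formulas makes the saving concrete. This is a minimal sketch that ignores bias and BatchNorm parameters; C1 = C2 = 512 is chosen to match the repeated `conv_dw(512, 512, 1)` layers in the MobileNet code, and the function names are hypothetical.

```python
def standard_conv_weights(c1, c2, k=3):
    # each of the c2 filters spans all c1 input channels
    return k * k * c1 * c2


def depthwise_separable_weights(c1, c2, k=3):
    depthwise = k * k * c1       # one k x k filter per input channel
    pointwise = 1 * 1 * c1 * c2  # 1x1 conv that mixes channels
    return depthwise + pointwise


c1 = c2 = 512
std = standard_conv_weights(c1, c2)
sep = depthwise_separable_weights(c1, c2)
print(std, sep, round(sep / std, 4))
# → 2359296 266752 0.1131
```

The ratio works out to 1/C2 + 1/9, so for wide layers a depthwise separable convolution needs roughly 8 to 9 times fewer weights than a standard 3x3 convolution.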

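A PyTorch implementation of MobileNet (v1) for 224x224 ImageNet inputs:

```python
import torch
import torch.nn as nn


class MobileNet(nn.Module):
    def __init__(self):
        super(MobileNet, self).__init__()

        def conv_bn(inp, oup, stride):
            # standard 3x3 convolution + BN + ReLU (used only for the first layer)
            return nn.Sequential(
                nn.Conv2d(inp, oup, 3, stride, 1, bias=False),
                nn.BatchNorm2d(oup),
                nn.ReLU(inplace=True)
            )

        def conv_dw(inp, oup, stride):
            # depthwise separable convolution
            return nn.Sequential(
                # 3x3 depthwise conv: groups=inp gives one filter per input channel
                nn.Conv2d(inp, inp, 3, stride, 1, groups=inp, bias=False),
                nn.BatchNorm2d(inp),
                nn.ReLU(inplace=True),
                # 1x1 pointwise conv combines channels: inp -> oup
                nn.Conv2d(inp, oup, 1, 1, 0, bias=False),
                nn.BatchNorm2d(oup),
                nn.ReLU(inplace=True),
            )

        self.model = nn.Sequential(
            conv_bn(3, 32, 2),
            conv_dw(32, 64, 1),
            conv_dw(64, 128, 2),
            conv_dw(128, 128, 1),
            conv_dw(128, 256, 2),
            conv_dw(256, 256, 1),
            conv_dw(256, 512, 2),
            conv_dw(512, 512, 1),
            conv_dw(512, 512, 1),
            conv_dw(512, 512, 1),
            conv_dw(512, 512, 1),
            conv_dw(512, 512, 1),
            conv_dw(512, 1024, 2),
            conv_dw(1024, 1024, 1),
            # a 224x224 input is downsampled 32x to 7x7, then averaged
            nn.AvgPool2d(7),
        )
        self.fc = nn.Linear(1024, 1000)

    def forward(self, x):
        x = self.model(x)
        x = x.view(-1, 1024)
        x = self.fc(x)
        return x
```

MobileNet v2 later improved on v1. The authors observed that applying ReLU to layers with few channels zeroes out many activations and loses information. They therefore introduce a ResNet-like residual connection that adds the earlier features, which have not yet been zeroed out, directly to the next layer. In addition, the layers with few channels use a linear activation instead of the nonlinear ReLU.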
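To make the v2 description concrete, here is a minimal PyTorch sketch of a MobileNetV2-style inverted residual block. It assumes the commonly used layout (1x1 expansion with ReLU6, 3x3 depthwise convolution with ReLU6, then a linear 1x1 projection with no activation) and an expansion factor of 6; the class name `InvertedResidual` and its parameters are illustrative, not taken from the code above. The shortcut is only added when the stride is 1 and the input and output widths match.

```python
import torch
import torch.nn as nn


class InvertedResidual(nn.Module):
    """Sketch of a MobileNetV2-style block: expand -> depthwise -> linear projection."""

    def __init__(self, inp, oup, stride, expand_ratio=6):
        super().__init__()
        hidden = inp * expand_ratio
        # a residual connection is only possible when input and output shapes match
        self.use_res = stride == 1 and inp == oup
        self.conv = nn.Sequential(
            # 1x1 expansion to a wider space, followed by ReLU6
            nn.Conv2d(inp, hidden, 1, 1, 0, bias=False),
            nn.BatchNorm2d(hidden),
            nn.ReLU6(inplace=True),
            # 3x3 depthwise convolution in the wide space
            nn.Conv2d(hidden, hidden, 3, stride, 1, groups=hidden, bias=False),
            nn.BatchNorm2d(hidden),
            nn.ReLU6(inplace=True),
            # 1x1 projection back to few channels with NO activation (linear bottleneck)
            nn.Conv2d(hidden, oup, 1, 1, 0, bias=False),
            nn.BatchNorm2d(oup),
        )

    def forward(self, x):
        out = self.conv(x)
        return x + out if self.use_res else out


block = InvertedResidual(32, 32, stride=1)   # uses the shortcut
down = InvertedResidual(32, 64, stride=2)    # no shortcut: stride 2 and width changes
x = torch.randn(2, 32, 56, 56)
y = block(x)
print(y.shape)        # torch.Size([2, 32, 56, 56])
print(down(x).shape)  # torch.Size([2, 64, 28, 28])
```

Keeping the narrow projection linear is the point of the design: the ReLU6 nonlinearities only act in the expanded, high-dimensional space.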

1.3 ShuffleNet

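ShuffleNet is a lightweight network released in July 2017, after MobileNet, and designed for mobile devices. Its main innovations are the use of group convolution and channel shuffle.

Group convolution

Group convolution was first used in AlexNet. Hardware was limited at the time, so AlexNet's convolutions could not all run on a single GPU during training; the authors therefore split the feature maps across multiple GPUs, processed them separately, and finally concatenated the results.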
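Beyond multi-GPU training, group convolution is attractive because it saves computation: with g groups each filter only sees C_in/g input channels, so the weights (and multiply-adds) drop by a factor of g. The helper `group_conv_weights` below is hypothetical, bias is ignored, and the width of 240 channels is borrowed from the ShuffleNetG3 configuration in the code further down.

```python
def group_conv_weights(c_in, c_out, k, groups=1):
    """Weights of a k x k convolution split into `groups` groups (bias ignored)."""
    assert c_in % groups == 0 and c_out % groups == 0
    # each group maps c_in/groups inputs to c_out/groups outputs
    return groups * k * k * (c_in // groups) * (c_out // groups)


for g in (1, 2, 3, 4, 8):
    print(g, group_conv_weights(240, 240, 1, groups=g))
# → 1 57600, 2 28800, 3 19200, 4 14400, 8 7200 (one pair per line)
```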

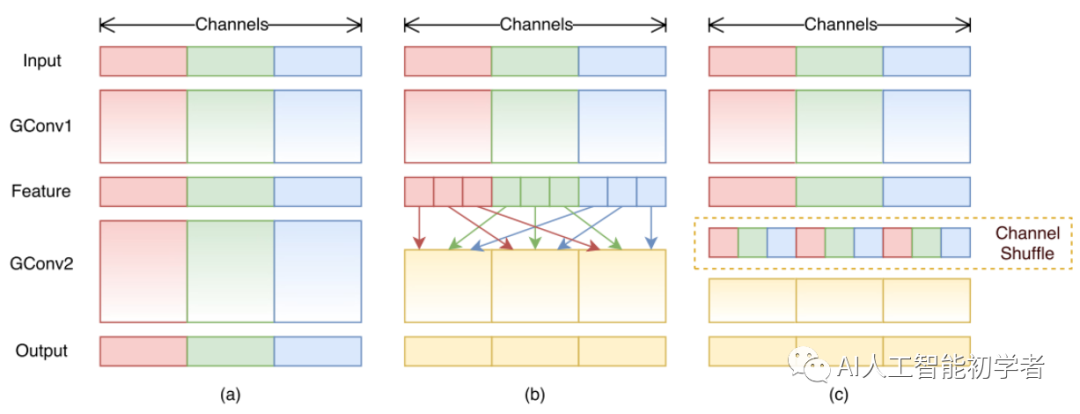

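Channel shuffle

Channel shuffle is not a random permutation; the channels are rearranged in a fixed, regular pattern.

- (a): a group convolution applied to feature maps without shuffling. Each output channel depends only on the input channels of its own group.

- (b): the channels of the feature map produced by group convolution GConv1 are shuffled as shown; most output channels of the next group convolution now depend on input channels from different groups.

- (c): an equivalent implementation of (b) that moves the channels into the right positions.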
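The shuffle is just a fixed permutation. The pure-Python sketch below (the function name `channel_shuffle` is illustrative) mirrors what `ShuffleBlock` in the code below does with `view`, `permute` and `view`: treat the channels as a (groups x C/groups) matrix, transpose it, and flatten it again. It labels 9 channels coming from 3 groups and checks that, after the shuffle, each group seen by the next group convolution contains one channel from every original group, which is situation (b).

```python
def channel_shuffle(channels, groups):
    """Pure-Python mirror of ShuffleBlock: reshape to (groups, C/groups), transpose, flatten."""
    assert len(channels) % groups == 0
    per_group = len(channels) // groups
    rows = [channels[g * per_group:(g + 1) * per_group] for g in range(groups)]
    # reading the (groups x per_group) matrix column by column == transpose + flatten
    return [rows[g][i] for i in range(per_group) for g in range(groups)]


# 9 output channels of GConv1 with 3 groups, labelled "<group>-<index>"
labels = [f"{g}-{i}" for g in range(3) for i in range(3)]
shuffled = channel_shuffle(labels, groups=3)
print(shuffled)
# → ['0-0', '1-0', '2-0', '0-1', '1-1', '2-1', '0-2', '1-2', '2-2']

# GConv2 splits its input into 3 consecutive groups again; after the shuffle,
# each of those groups receives one channel from every GConv1 group.
for g in range(3):
    group = shuffled[g * 3:(g + 1) * 3]
    print(g, sorted({label.split("-")[0] for label in group}))
# → 0 ['0', '1', '2'], then the same for groups 1 and 2
```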

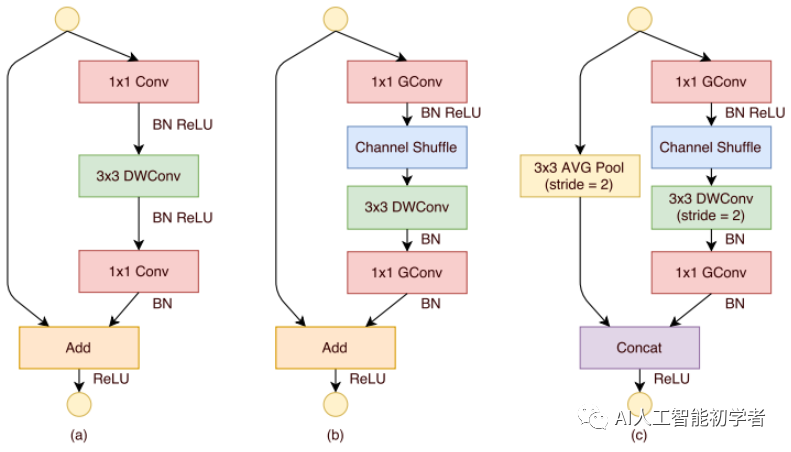

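ShuffleNet unit

- (a): the bottleneck unit from ResNet, with the 3x3 convolution replaced by a depthwise (DW) convolution.

- (b): the 1x1 convolutions become pointwise group convolutions (GConv), and a channel shuffle is added. Following the suggestion of earlier depthwise-convolution work, no nonlinearity is applied after the 3x3 DW convolution. The final pointwise group convolution restores the channel count so that the output can be added to the shortcut.

- (c): uses stride 2 to reduce the feature-map size; a 3x3 average pooling on the shortcut path produces the same spatial size, and the two paths are concatenated along the channel dimension instead of added.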
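The ShuffleNet paper quantifies the saving per unit: for an input of size c x h x w with m bottleneck channels and g groups, a ResNet bottleneck costs hw(2cm + 9m^2) FLOPs, a ResNeXt unit hw(2cm + 9m^2/g), and a ShuffleNet unit hw(2cm/g + 9m). The sketch below simply evaluates these formulas; the setting c = 240, m = c/4 = 60, h = w = 28, g = 3 is an assumption chosen for illustration, and the function names are hypothetical.

```python
def resnet_unit_flops(c, m, h, w):
    # 1x1 (c -> m) + 3x3 (m -> m) + 1x1 (m -> c)
    return h * w * (2 * c * m + 9 * m * m)


def resnext_unit_flops(c, m, h, w, g):
    # as ResNet, but the 3x3 conv is split into g groups (assumes m divisible by g)
    return h * w * (2 * c * m + 9 * m * m // g)


def shufflenet_unit_flops(c, m, h, w, g):
    # both 1x1 convs are group convs (cost / g); the 3x3 conv is depthwise (9m)
    return h * w * (2 * c * m // g + 9 * m)


c, m, h, w, g = 240, 60, 28, 28, 3  # illustrative setting (assumed)
for name, flops in [
    ("ResNet", resnet_unit_flops(c, m, h, w)),
    ("ResNeXt", resnext_unit_flops(c, m, h, w, g)),
    ("ShuffleNet", shufflenet_unit_flops(c, m, h, w, g)),
]:
    print(f"{name:<10} {flops:>12,}")
# → ResNet 47,980,800 / ResNeXt 31,046,400 / ShuffleNet 7,949,760
```

Under this setting the ShuffleNet unit costs about one sixth of the ResNet bottleneck, because both the dense 1x1 layers and the full 3x3 layer have been replaced by cheaper grouped or depthwise versions.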

Below is a PyTorch implementation of ShuffleNet, configured for CIFAR-10-sized inputs (32x32 images, 10 classes):

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class ShuffleBlock(nn.Module):
    def __init__(self, groups):
        super(ShuffleBlock, self).__init__()
        self.groups = groups

    def forward(self, x):
        '''Channel shuffle: [N,C,H,W] -> [N,g,C/g,H,W] -> [N,C/g,g,H,W] -> [N,C,H,W]'''
        N, C, H, W = x.size()
        g = self.groups
        # After permute() the tensor is no longer contiguous in memory,
        # so .contiguous() is required before view() can be called
        return x.view(N, g, C // g, H, W).permute(0, 2, 1, 3, 4).contiguous().view(N, C, H, W)


class Bottleneck(nn.Module):
    def __init__(self, in_planes, out_planes, stride, groups):
        super(Bottleneck, self).__init__()
        self.stride = stride
        # The middle (bottleneck) layer has 1/4 as many channels as the output
        mid_planes = out_planes // 4
        # The paper does not use group convolution in the first pointwise layer of
        # Stage 2, because its input has only 24 channels
        g = 1 if in_planes == 24 else groups
        self.conv1 = nn.Conv2d(in_planes, mid_planes, kernel_size=1, groups=g, bias=False)
        self.bn1 = nn.BatchNorm2d(mid_planes)
        self.shuffle1 = ShuffleBlock(groups=g)
        # 3x3 depthwise convolution (groups == number of channels)
        self.conv2 = nn.Conv2d(mid_planes, mid_planes, kernel_size=3, stride=stride, padding=1, groups=mid_planes, bias=False)
        self.bn2 = nn.BatchNorm2d(mid_planes)
        # 1x1 pointwise group convolution that restores the output width
        self.conv3 = nn.Conv2d(mid_planes, out_planes, kernel_size=1, groups=groups, bias=False)
        self.bn3 = nn.BatchNorm2d(out_planes)
        self.shortcut = nn.Sequential()
        if stride == 2:
            # stride-2 unit: 3x3 average pooling on the shortcut path
            self.shortcut = nn.Sequential(nn.AvgPool2d(3, stride=2, padding=1))

    def forward(self, x):
        out = F.relu(self.bn1(self.conv1(x)))
        out = self.shuffle1(out)
        # No ReLU after the depthwise convolution, as described for the ShuffleNet unit
        out = self.bn2(self.conv2(out))
        out = self.bn3(self.conv3(out))
        res = self.shortcut(x)
        # stride 2: concatenate along channels; stride 1: element-wise addition
        out = F.relu(torch.cat([out, res], 1)) if self.stride == 2 else F.relu(out + res)
        return out


class ShuffleNet(nn.Module):
    def __init__(self, cfg):
        super(ShuffleNet, self).__init__()
        out_planes = cfg['out_planes']
        num_blocks = cfg['num_blocks']
        groups = cfg['groups']
        # CIFAR-style stem: 1x1 conv without downsampling
        self.conv1 = nn.Conv2d(3, 24, kernel_size=1, bias=False)
        self.bn1 = nn.BatchNorm2d(24)
        self.in_planes = 24
        self.layer1 = self._make_layer(out_planes[0], num_blocks[0], groups)
        self.layer2 = self._make_layer(out_planes[1], num_blocks[1], groups)
        self.layer3 = self._make_layer(out_planes[2], num_blocks[2], groups)
        self.linear = nn.Linear(out_planes[2], 10)  # 10 CIFAR-10 classes

    def _make_layer(self, out_planes, num_blocks, groups):
        layers = []
        for i in range(num_blocks):
            if i == 0:
                # The first block concatenates its shortcut, so its branch only
                # needs to produce out_planes - in_planes channels
                layers.append(Bottleneck(self.in_planes, out_planes - self.in_planes, stride=2, groups=groups))
            else:
                layers.append(Bottleneck(self.in_planes, out_planes, stride=1, groups=groups))
            self.in_planes = out_planes
        return nn.Sequential(*layers)

    def forward(self, x):
        out = F.relu(self.bn1(self.conv1(x)))
        out = self.layer1(out)
        out = self.layer2(out)
        out = self.layer3(out)
        # 32x32 input -> 4x4 after three stride-2 stages
        out = F.avg_pool2d(out, 4)
        out = out.view(out.size(0), -1)
        out = self.linear(out)
        return out


def ShuffleNetG2():
    cfg = {
        'out_planes': [200, 400, 800],
        'num_blocks': [4, 8, 4],
        'groups': 2
    }
    return ShuffleNet(cfg)


def ShuffleNetG3():
    cfg = {
        'out_planes': [240, 480, 960],
        'num_blocks': [4, 8, 4],
        'groups': 3
    }
    return ShuffleNet(cfg)


def test():
    net = ShuffleNetG2()
    x = torch.randn(1, 3, 32, 32)
    y = net(x)
    print(y)


test()
```

1.4 Xception

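Strictly speaking, Xception is not a lightweight model, but it builds on depth-wise convolution, which is the key ingredient of the lightweight models above, so its ideas are well worth studying.

Xception is based on Inception-V3 combined with depth-wise convolution. This improves network efficiency, and with a comparable number of parameters it outperforms Inception-V3 on large-scale datasets. It also suggests another way to think about being "lightweight": given fixed hardware resources, make the network as efficient and accurate as possible, in other words make full use of the hardware.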
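For a sense of scale, the sketch below compares a regular 3x3 convolution with a depthwise separable one (depthwise 3x3 followed by pointwise 1x1, the layout of `SeparableConv2d` in the code below) at the 728-channel width of Xception's middle flow, then totals both over the 16 middle-flow blocks of three convolutions each used in the implementation below. Bias and BatchNorm parameters are ignored, and the helper names are hypothetical.

```python
def regular_conv_weights(c_in, c_out, k=3):
    # every output channel has a k x k filter over all input channels
    return k * k * c_in * c_out


def separable_conv_weights(c_in, c_out, k=3):
    depthwise = k * k * c_in   # one k x k filter per input channel
    pointwise = c_in * c_out   # 1x1 conv across channels
    return depthwise + pointwise


c = 728                # channel width of the middle flow
convs = 16 * 3         # 16 middle-flow blocks, 3 convolutions each
regular = regular_conv_weights(c, c)
separable = separable_conv_weights(c, c)
print(regular, separable, round(regular / separable, 1))
# → 4769856 536536 8.9
print(convs * regular, convs * separable)
# → 228953088 25753728
```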

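The following PyTorch implementation is an aligned Xception variant used as a segmentation backbone: it supports an output stride of 16 or 8 through dilated convolutions and also returns low-level features: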

```python
import math

import torch
import torch.nn as nn
import torch.nn.functional as F
import torch.utils.model_zoo as model_zoo

try:
    from modeling.sync_batchnorm.batchnorm import SynchronizedBatchNorm2d
except ImportError:
    # The synchronized BN module belongs to the original project; fall back to the
    # standard BatchNorm2d so that this file also runs on its own.
    SynchronizedBatchNorm2d = nn.BatchNorm2d


def fixed_padding(inputs, kernel_size, dilation):
    # effective size of a dilated kernel: k + (k - 1) * (d - 1)
    kernel_size_effective = kernel_size + (kernel_size - 1) * (dilation - 1)
    pad_total = kernel_size_effective - 1
    pad_beg = pad_total // 2
    pad_end = pad_total - pad_beg
    # pad (left, right, top, bottom) so that a stride-1 conv keeps the spatial size
    padded_inputs = F.pad(inputs, (pad_beg, pad_end, pad_beg, pad_end))
    return padded_inputs


class SeparableConv2d(nn.Module):
    def __init__(self, inplanes, planes, kernel_size=3, stride=1, dilation=1, bias=False, BatchNorm=None):
        super(SeparableConv2d, self).__init__()
        # depthwise conv (groups=inplanes); padding is applied by fixed_padding in forward()
        self.conv1 = nn.Conv2d(inplanes, inplanes, kernel_size, stride, 0, dilation, groups=inplanes, bias=bias)
        self.bn = BatchNorm(inplanes)
        # pointwise 1x1 conv that mixes channels
        self.pointwise = nn.Conv2d(inplanes, planes, 1, 1, 0, 1, 1, bias=bias)

    def forward(self, x):
        x = fixed_padding(x, self.conv1.kernel_size[0], dilation=self.conv1.dilation[0])
        x = self.conv1(x)
        x = self.bn(x)
        x = self.pointwise(x)
        return x


class Block(nn.Module):
    def __init__(self, inplanes, planes, reps, stride=1, dilation=1, BatchNorm=None,
                 start_with_relu=True, grow_first=True, is_last=False):
        super(Block, self).__init__()
        # 1x1 projection on the skip path when the shape changes
        if planes != inplanes or stride != 1:
            self.skip = nn.Conv2d(inplanes, planes, 1, stride=stride, bias=False)
            self.skipbn = BatchNorm(planes)
        else:
            self.skip = None
        self.relu = nn.ReLU(inplace=True)
        rep = []
        filters = inplanes
        if grow_first:
            rep.append(self.relu)
            rep.append(SeparableConv2d(inplanes, planes, 3, 1, dilation, BatchNorm=BatchNorm))
            rep.append(BatchNorm(planes))
            filters = planes
        for i in range(reps - 1):
            rep.append(self.relu)
            rep.append(SeparableConv2d(filters, filters, 3, 1, dilation, BatchNorm=BatchNorm))
            rep.append(BatchNorm(filters))
        if not grow_first:
            rep.append(self.relu)
            rep.append(SeparableConv2d(inplanes, planes, 3, 1, dilation, BatchNorm=BatchNorm))
            rep.append(BatchNorm(planes))
        if stride != 1:
            rep.append(self.relu)
            rep.append(SeparableConv2d(planes, planes, 3, 2, BatchNorm=BatchNorm))
            rep.append(BatchNorm(planes))
        if stride == 1 and is_last:
            rep.append(self.relu)
            rep.append(SeparableConv2d(planes, planes, 3, 1, BatchNorm=BatchNorm))
            rep.append(BatchNorm(planes))
        if not start_with_relu:
            rep = rep[1:]
        self.rep = nn.Sequential(*rep)

    def forward(self, inp):
        x = self.rep(inp)
        if self.skip is not None:
            skip = self.skip(inp)
            skip = self.skipbn(skip)
        else:
            skip = inp
        x = x + skip
        return x


class AlignedXception(nn.Module):
    def __init__(self, output_stride, BatchNorm, pretrained=True):
        super(AlignedXception, self).__init__()
        # output_stride = input size / output feature-map size; dilation replaces striding
        if output_stride == 16:
            entry_block3_stride = 2
            middle_block_dilation = 1
            exit_block_dilations = (1, 2)
        elif output_stride == 8:
            entry_block3_stride = 1
            middle_block_dilation = 2
            exit_block_dilations = (2, 4)
        else:
            raise NotImplementedError

        # Entry flow
        self.conv1 = nn.Conv2d(3, 32, 3, stride=2, padding=1, bias=False)
        self.bn1 = BatchNorm(32)
        self.relu = nn.ReLU(inplace=True)
        self.conv2 = nn.Conv2d(32, 64, 3, stride=1, padding=1, bias=False)
        self.bn2 = BatchNorm(64)
        self.block1 = Block(64, 128, reps=2, stride=2, BatchNorm=BatchNorm, start_with_relu=False)
        self.block2 = Block(128, 256, reps=2, stride=2, BatchNorm=BatchNorm, start_with_relu=False, grow_first=True)
        self.block3 = Block(256, 728, reps=2, stride=entry_block3_stride, BatchNorm=BatchNorm, start_with_relu=True, grow_first=True, is_last=True)

        # Middle flow: 16 identical blocks (block4 ... block19)
        self.block4 = Block(728, 728, reps=3, stride=1, dilation=middle_block_dilation,
                            BatchNorm=BatchNorm, start_with_relu=True, grow_first=True)
        self.block5 = Block(728, 728, reps=3, stride=1, dilation=middle_block_dilation,
                            BatchNorm=BatchNorm, start_with_relu=True, grow_first=True)
        self.block6 = Block(728, 728, reps=3, stride=1, dilation=middle_block_dilation,
                            BatchNorm=BatchNorm, start_with_relu=True, grow_first=True)
        self.block7 = Block(728, 728, reps=3, stride=1, dilation=middle_block_dilation,
                            BatchNorm=BatchNorm, start_with_relu=True, grow_first=True)
        self.block8 = Block(728, 728, reps=3, stride=1, dilation=middle_block_dilation,
                            BatchNorm=BatchNorm, start_with_relu=True, grow_first=True)
        self.block9 = Block(728, 728, reps=3, stride=1, dilation=middle_block_dilation,
                            BatchNorm=BatchNorm, start_with_relu=True, grow_first=True)
        self.block10 = Block(728, 728, reps=3, stride=1, dilation=middle_block_dilation,
                             BatchNorm=BatchNorm, start_with_relu=True, grow_first=True)
        self.block11 = Block(728, 728, reps=3, stride=1, dilation=middle_block_dilation,
                             BatchNorm=BatchNorm, start_with_relu=True, grow_first=True)
        self.block12 = Block(728, 728, reps=3, stride=1, dilation=middle_block_dilation,
                             BatchNorm=BatchNorm, start_with_relu=True, grow_first=True)
        self.block13 = Block(728, 728, reps=3, stride=1, dilation=middle_block_dilation,
                             BatchNorm=BatchNorm, start_with_relu=True, grow_first=True)
        self.block14 = Block(728, 728, reps=3, stride=1, dilation=middle_block_dilation,
                             BatchNorm=BatchNorm, start_with_relu=True, grow_first=True)
        self.block15 = Block(728, 728, reps=3, stride=1, dilation=middle_block_dilation,
                             BatchNorm=BatchNorm, start_with_relu=True, grow_first=True)
        self.block16 = Block(728, 728, reps=3, stride=1, dilation=middle_block_dilation,
                             BatchNorm=BatchNorm, start_with_relu=True, grow_first=True)
        self.block17 = Block(728, 728, reps=3, stride=1, dilation=middle_block_dilation,
                             BatchNorm=BatchNorm, start_with_relu=True, grow_first=True)
        self.block18 = Block(728, 728, reps=3, stride=1, dilation=middle_block_dilation,
                             BatchNorm=BatchNorm, start_with_relu=True, grow_first=True)
        self.block19 = Block(728, 728, reps=3, stride=1, dilation=middle_block_dilation,
                             BatchNorm=BatchNorm, start_with_relu=True, grow_first=True)

        # Exit flow
        self.block20 = Block(728, 1024, reps=2, stride=1, dilation=exit_block_dilations[0], BatchNorm=BatchNorm, start_with_relu=True, grow_first=False, is_last=True)
        self.conv3 = SeparableConv2d(1024, 1536, 3, stride=1, dilation=exit_block_dilations[1], BatchNorm=BatchNorm)
        self.bn3 = BatchNorm(1536)
        self.conv4 = SeparableConv2d(1536, 1536, 3, stride=1, dilation=exit_block_dilations[1], BatchNorm=BatchNorm)
        self.bn4 = BatchNorm(1536)
        self.conv5 = SeparableConv2d(1536, 2048, 3, stride=1, dilation=exit_block_dilations[1], BatchNorm=BatchNorm)
        self.bn5 = BatchNorm(2048)

        # Init weights
        self._init_weight()

        # Load pretrained model
        if pretrained:
            self._load_pretrained_model()

    def forward(self, x):
        # Entry flow
        x = self.conv1(x)
        x = self.bn1(x)
        x = self.relu(x)
        x = self.conv2(x)
        x = self.bn2(x)
        x = self.relu(x)
        x = self.block1(x)
        # add relu here
        x = self.relu(x)
        low_level_feat = x
        x = self.block2(x)
        x = self.block3(x)

        # Middle flow
        x = self.block4(x)
        x = self.block5(x)
        x = self.block6(x)
        x = self.block7(x)
        x = self.block8(x)
        x = self.block9(x)
        x = self.block10(x)
        x = self.block11(x)
        x = self.block12(x)
        x = self.block13(x)
        x = self.block14(x)
        x = self.block15(x)
        x = self.block16(x)
        x = self.block17(x)
        x = self.block18(x)
        x = self.block19(x)

        # Exit flow
        x = self.block20(x)
        x = self.relu(x)
        x = self.conv3(x)
        x = self.bn3(x)
        x = self.relu(x)
        x = self.conv4(x)
        x = self.bn4(x)
        x = self.relu(x)
        x = self.conv5(x)
        x = self.bn5(x)
        x = self.relu(x)
        return x, low_level_feat

    def _init_weight(self):
        for m in self.modules():
            if isinstance(m, nn.Conv2d):
                # He-style normal initialization based on fan-out
                n = m.kernel_size[0] * m.kernel_size[1] * m.out_channels
                m.weight.data.normal_(0, math.sqrt(2. / n))
            elif isinstance(m, SynchronizedBatchNorm2d):
                m.weight.data.fill_(1)
                m.bias.data.zero_()
            elif isinstance(m, nn.BatchNorm2d):
                m.weight.data.fill_(1)
                m.bias.data.zero_()

    def _load_pretrained_model(self):
        pretrain_dict = model_zoo.load_url('http://data.lip6.fr/cadene/pretrainedmodels/xception-b5690688.pth')
        model_dict = {}
        state_dict = self.state_dict()
        for k, v in pretrain_dict.items():
            if k in state_dict:
                # pointwise weights in this checkpoint are 2-D; add the two 1x1 spatial dims
                if 'pointwise' in k:
                    v = v.unsqueeze(-1).unsqueeze(-1)
                if k.startswith('block11'):
                    # reuse the last original middle-flow block for the extra blocks 12-19
                    model_dict[k] = v
                    model_dict[k.replace('block11', 'block12')] = v
                    model_dict[k.replace('block11', 'block13')] = v
                    model_dict[k.replace('block11', 'block14')] = v
                    model_dict[k.replace('block11', 'block15')] = v
                    model_dict[k.replace('block11', 'block16')] = v
                    model_dict[k.replace('block11', 'block17')] = v
                    model_dict[k.replace('block11', 'block18')] = v
                    model_dict[k.replace('block11', 'block19')] = v
                elif k.startswith('block12'):
                    # the checkpoint's exit block maps to block20 here
                    model_dict[k.replace('block12', 'block20')] = v
                elif k.startswith('bn3'):
                    model_dict[k] = v
                    model_dict[k.replace('bn3', 'bn4')] = v
                elif k.startswith('conv4'):
                    model_dict[k.replace('conv4', 'conv5')] = v
                elif k.startswith('bn4'):
                    model_dict[k.replace('bn4', 'bn5')] = v
                else:
                    model_dict[k] = v
        state_dict.update(model_dict)
        self.load_state_dict(state_dict)


if __name__ == "__main__":
    import torch
    # pretrained=True downloads the ImageNet checkpoint from the URL above
    model = AlignedXception(BatchNorm=nn.BatchNorm2d, pretrained=True, output_stride=16)
    input = torch.rand(1, 3, 512, 512)
    output, low_level_feat = model(input)
    print(output.size())
    print(low_level_feat.size())
```

Summary of Lightweight Models

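Much of the efficiency of lightweight models comes from depth-wise convolution, so it is a natural building block for designing your own lightweight network. Watch out for poor information flow, though: each depth-wise (or group) filter sees only part of the channels, so information does not mix across channels on its own.

Ways to fix the poor information flow:

- MobileNet uses point-wise (1x1) convolution

- ShuffleNet uses channel shuffle

Compared with ShuffleNet, MobileNet uses more dense convolutions, which is a disadvantage in computation and parameter count, but it adds more nonlinear layers, so in theory its features can be more abstract and higher-level. ShuffleNet instead replaces the dense point-wise convolutions with point-wise group convolutions plus channel shuffle; the shuffle itself is a parameter-free permutation, which keeps the design simple and cuts the parameter count.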

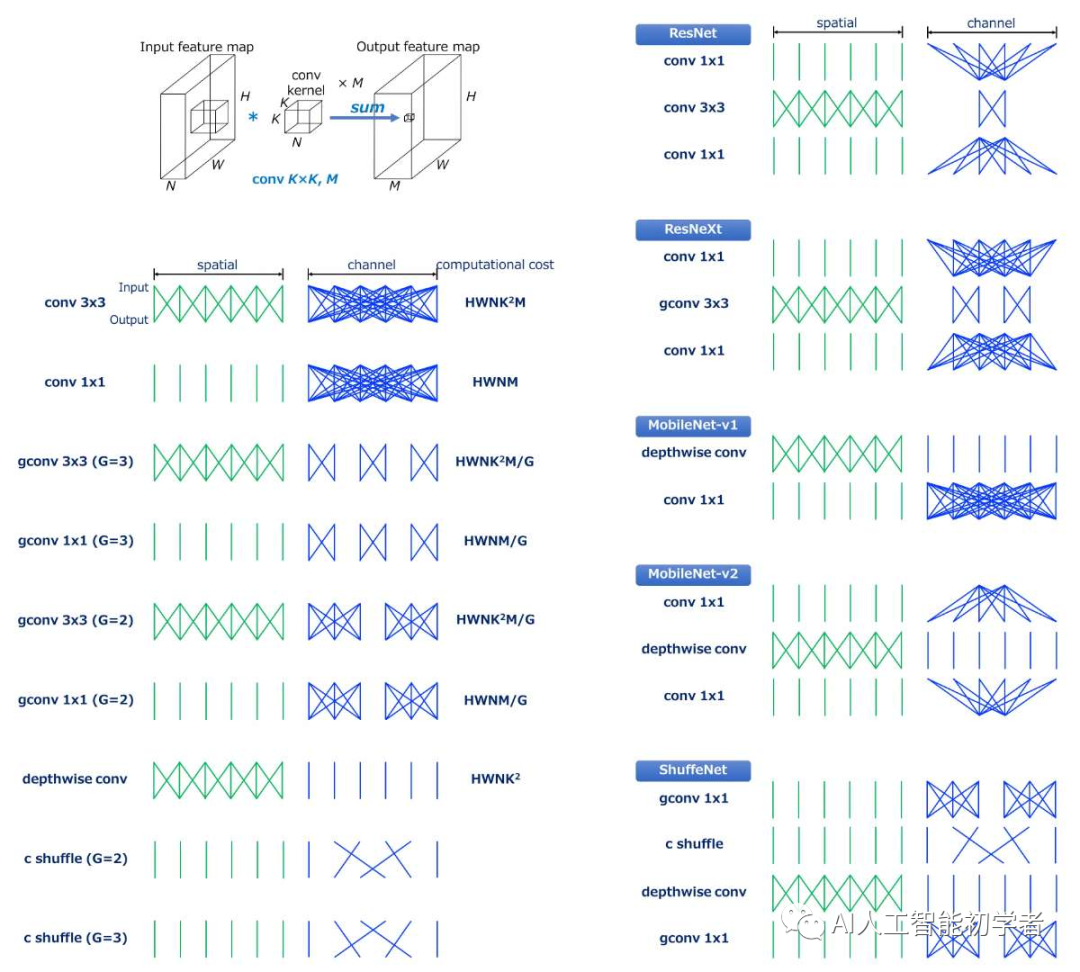

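The figure below summarizes this article together with the classic convolutional neural networks from the previous note; it is quite comprehensive.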
