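I'm A-chai, an AI algorithm engineer from the mountains: down-to-earth, honest, and not the kind who steals e-bike batteries. I share knowledge about artificial intelligence, autonomous driving, robotics and 3D perception.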

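This post is A-chai's write-up based on the TensorFlow documentation and his own projects. TensorFlow is Google's deep learning framework. Although many people now use PyTorch, A-chai still uses TensorFlow and likes it a lot: going from TF1's static graphs to TF2's high-level APIs has been a real pleasure. Below, three small case studies introduce TensorFlow.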

The examples cover three areas: computer vision (CV), natural language processing (NLP) and speech.

SegNet

1. Algorithm overview

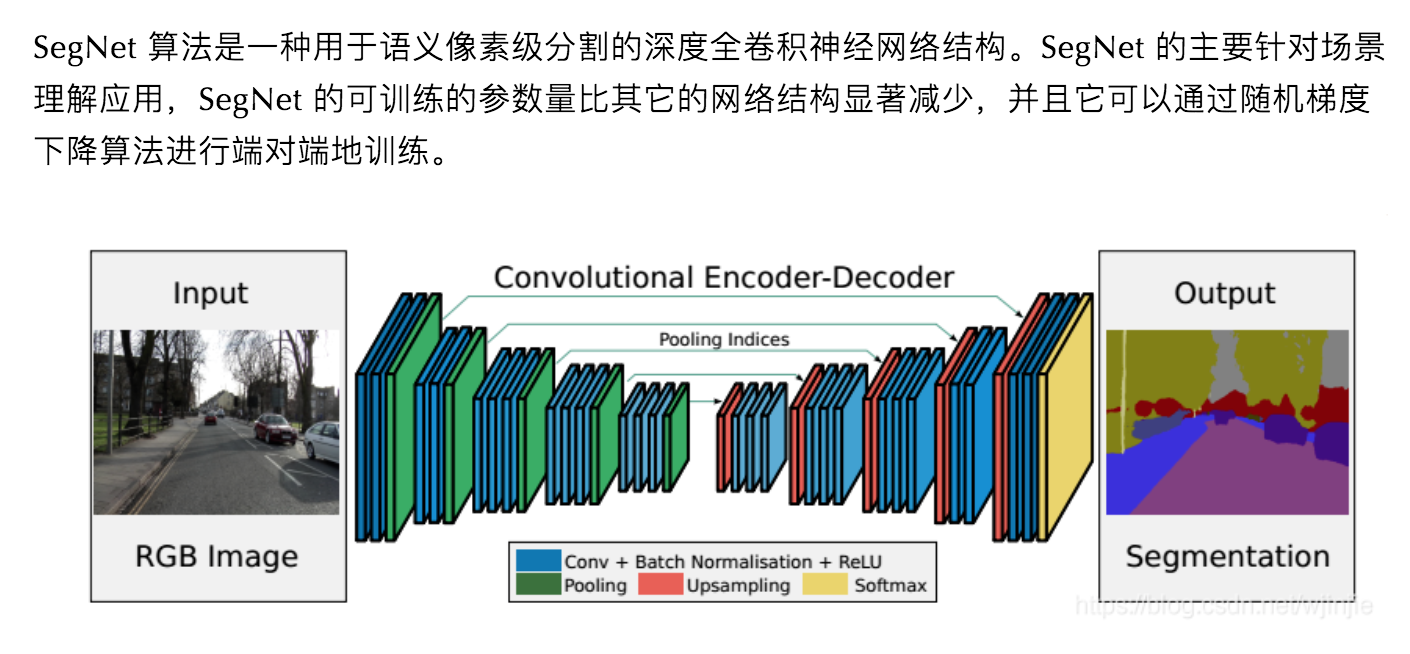

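SegNet is a deep fully convolutional encoder-decoder network for pixel-wise semantic segmentation, designed mainly for scene-understanding applications. Its encoder follows the 13 convolutional layers of VGG16, and in the original design each decoder stage upsamples using the max-pooling indices saved by the matching encoder stage, so no upsampling weights have to be learned. As a result, SegNet has significantly fewer trainable parameters than many other segmentation architectures, and it can be trained end to end with stochastic gradient descent. (The TensorFlow 2 code in this post simplifies the decoder by using plain `UpSampling2D` layers instead of pooling indices.)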
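To make the pooling-indices idea concrete, here is a minimal NumPy sketch. The helper names `max_pool_with_indices` and `max_unpool` are hypothetical, and the sketch assumes a single-channel 2-D map with even height and width and 2×2, stride-2 pooling.

```python
import numpy as np


def max_pool_with_indices(x):
    """2x2, stride-2 max pooling on a 2-D array; also returns the flat index of each window's max."""
    h, w = x.shape
    # Rearrange into (h/2, w/2, 4) so that the last axis holds one 2x2 window
    windows = x.reshape(h // 2, 2, w // 2, 2).transpose(0, 2, 1, 3).reshape(h // 2, w // 2, 4)
    local = windows.argmax(axis=-1)          # position of the max inside its window (0..3)
    pooled = windows.max(axis=-1)
    # Convert the window-local position into a flat index into the original array
    rows = np.arange(h // 2)[:, None] * 2 + local // 2
    cols = np.arange(w // 2)[None, :] * 2 + local % 2
    return pooled, rows * w + cols


def max_unpool(pooled, indices, out_shape):
    """SegNet-style unpooling: put each value back at its recorded position, zeros elsewhere."""
    out = np.zeros(out_shape[0] * out_shape[1], dtype=pooled.dtype)
    out[indices.ravel()] = pooled.ravel()
    return out.reshape(out_shape)


x = np.array([[1, 5, 2, 0],
              [3, 4, 8, 1],
              [0, 2, 6, 7],
              [9, 1, 3, 2]], dtype=float)
pooled, idx = max_pool_with_indices(x)
print(pooled)
print(max_unpool(pooled, idx, x.shape))
```

The unpooled map is sparse: only the positions that won the max-pool are non-zero, and in SegNet the following convolutions densify it.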

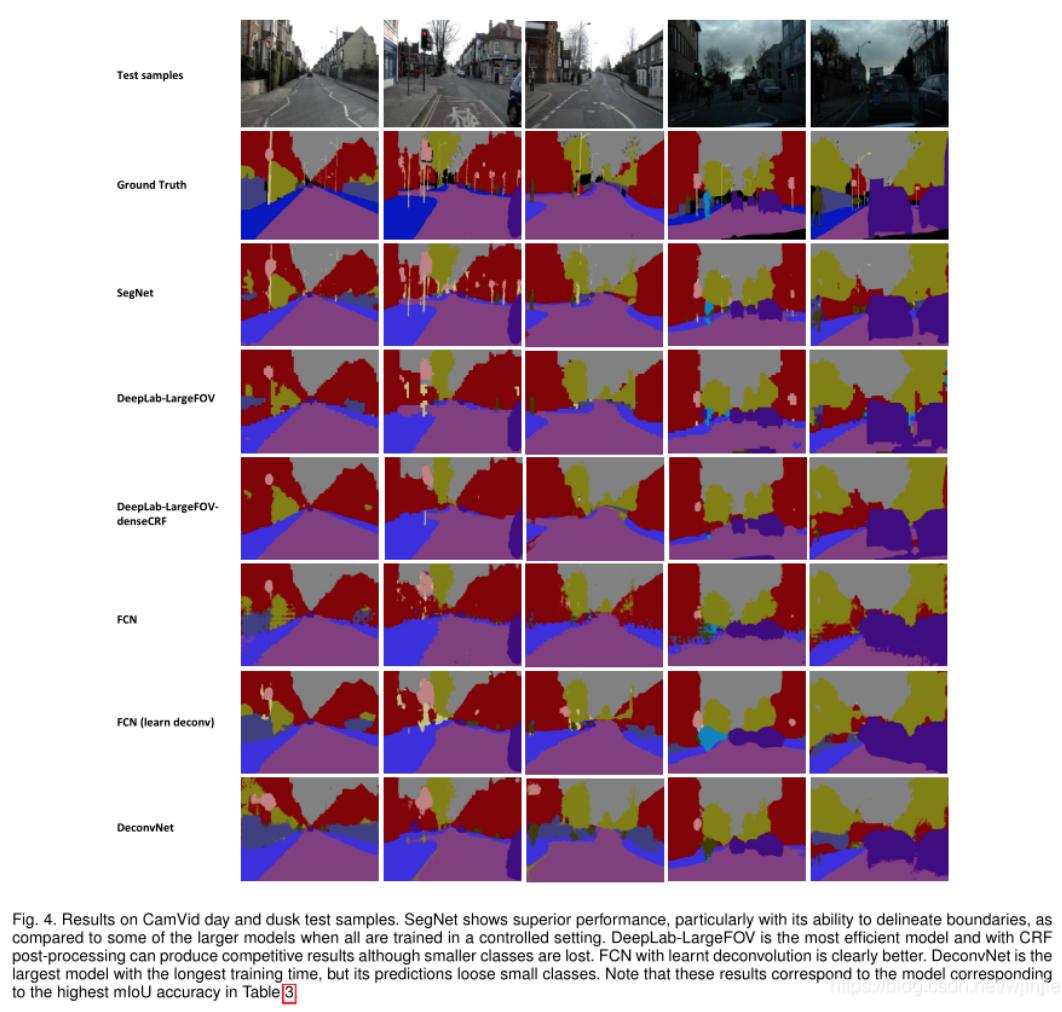

The figure shows test results on CamVid samples captured by day and at night. SegNet's predictions are clearly closer to the ground truth, so SegNet performs better on this dataset.

2. Building SegNet with TensorFlow 2

a. Data preparation

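```python
import os

imgs_path = '/home/fmc/WX/Segmentation/SegNet-tf2/dataset/jpg'  # directory holding the input images

# Write one "image.jpg;label.png" pair per line; each label shares its image's base name.
# Opening the file once in "w" mode avoids duplicated lines when the script is re-run.
with open("train.txt", "w") as f:
    for file_name in sorted(os.listdir(imgs_path)):
        print(file_name)
        stem, _ = os.path.splitext(file_name)
        f.write(file_name + ';' + stem + '.png' + '\n')
```

b. Building the encoder network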
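A quick way to sanity-check the shape comments in the encoder: with `padding='same'` the 3×3 convolutions keep the spatial size, and each 2×2, stride-2 max pool halves it. The pure-Python helper below (a hypothetical name, not part of the project) lists the output shape of each of the five VGG blocks for a given input size.

```python
def vgg_block_shapes(height=416, width=416, channels=(64, 128, 256, 512, 512)):
    """Shape after each VGG block: 'same' 3x3 convs keep H and W, each 2x2/stride-2 pool halves them."""
    shapes = []
    for c in channels:
        height, width = height // 2, width // 2
        shapes.append((height, width, c))
    return shapes


for name, shape in zip(['f1', 'f2', 'f3', 'f4', 'f5'], vgg_block_shapes()):
    print(name, shape)
```

This also shows why 416 is a convenient input size: it is divisible by 2^5 = 32, so every pooling step divides evenly down to 13×13.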

```python
import tensorflow as tf
from tensorflow.keras import layers


def vggnet_encoder(input_height=416, input_width=416, pretrained='imagenet'):
    # VGG16-style encoder; `pretrained` is accepted but not used in this version
    img_input = tf.keras.Input(shape=(input_height, input_width, 3))

    # Block 1: 416,416,3 -> 208,208,64
    x = layers.Conv2D(64, (3, 3), activation='relu', padding='same', name='block1_conv1')(img_input)
    x = layers.Conv2D(64, (3, 3), activation='relu', padding='same', name='block1_conv2')(x)
    x = layers.MaxPooling2D((2, 2), strides=(2, 2), name='block1_pool')(x)
    f1 = x

    # Block 2: 208,208,64 -> 104,104,128
    x = layers.Conv2D(128, (3, 3), activation='relu', padding='same', name='block2_conv1')(x)
    x = layers.Conv2D(128, (3, 3), activation='relu', padding='same', name='block2_conv2')(x)
    x = layers.MaxPooling2D((2, 2), strides=(2, 2), name='block2_pool')(x)
    f2 = x

    # Block 3: 104,104,128 -> 52,52,256
    x = layers.Conv2D(256, (3, 3), activation='relu', padding='same', name='block3_conv1')(x)
    x = layers.Conv2D(256, (3, 3), activation='relu', padding='same', name='block3_conv2')(x)
    x = layers.Conv2D(256, (3, 3), activation='relu', padding='same', name='block3_conv3')(x)
    x = layers.MaxPooling2D((2, 2), strides=(2, 2), name='block3_pool')(x)
    f3 = x

    # Block 4: 52,52,256 -> 26,26,512
    x = layers.Conv2D(512, (3, 3), activation='relu', padding='same', name='block4_conv1')(x)
    x = layers.Conv2D(512, (3, 3), activation='relu', padding='same', name='block4_conv2')(x)
    x = layers.Conv2D(512, (3, 3), activation='relu', padding='same', name='block4_conv3')(x)
    x = layers.MaxPooling2D((2, 2), strides=(2, 2), name='block4_pool')(x)
    f4 = x

    # Block 5: 26,26,512 -> 13,13,512
    x = layers.Conv2D(512, (3, 3), activation='relu', padding='same', name='block5_conv1')(x)
    x = layers.Conv2D(512, (3, 3), activation='relu', padding='same', name='block5_conv2')(x)
    x = layers.Conv2D(512, (3, 3), activation='relu', padding='same', name='block5_conv3')(x)
    x = layers.MaxPooling2D((2, 2), strides=(2, 2), name='block5_pool')(x)
    f5 = x

    return img_input, [f1, f2, f3, f4, f5]
```

c. Building the decoder and the pixel-wise classification layer
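The decoder reverses this. Padding by 1 and then applying a 3×3 `'valid'` convolution leaves the spatial size unchanged, so only the `UpSampling2D` layers change the resolution, and the decoder code applies exactly `n_upSample` of them (one, then `n_upSample - 2` in the loop, then one more). A small pure-Python check of the resulting output size; both helper names are hypothetical:

```python
def conv_valid_size(size, kernel=3, pad=1):
    """Output size of a stride-1 'valid' convolution after zero-padding `pad` pixels on each side."""
    return size + 2 * pad - kernel + 1


def decoder_output_size(feature_size, n_upSample):
    size = conv_valid_size(feature_size)      # first pad + conv block, no upsampling
    size = conv_valid_size(size * 2)          # first UpSampling2D block
    for _ in range(n_upSample - 2):           # middle UpSampling2D blocks
        size = conv_valid_size(size * 2)
    size = conv_valid_size(size * 2)          # last UpSampling2D block
    return size


print(decoder_output_size(26, 3))  # block-4 features of a 416x416 input -> 208
```

With `encoder_level=3` (the 26×26 block-4 features) and `n_upSample=3`, the output is 208×208, i.e. half the input resolution, which is why the model output and the labels live at H/2 × W/2.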

```python
from tensorflow.keras import layers

IMAGE_ORDERING = 'channels_last'  # tensors are laid out as (batch, height, width, channels)


# Decoder
def decoder(feature_input, n_classes, n_upSample):
    # feature_input is the output of the 4th VGG block: 26,26,512
    output = layers.ZeroPadding2D((1, 1), data_format=IMAGE_ORDERING)(feature_input)
    output = layers.Conv2D(512, (3, 3), padding='valid', data_format=IMAGE_ORDERING)(output)
    output = layers.BatchNormalization()(output)

    # One UpSampling2D: height/width become 1/8 of the input -> 52,52,256
    output = layers.UpSampling2D((2, 2), data_format=IMAGE_ORDERING)(output)
    output = layers.ZeroPadding2D((1, 1), data_format=IMAGE_ORDERING)(output)
    output = layers.Conv2D(256, (3, 3), padding='valid', data_format=IMAGE_ORDERING)(output)
    output = layers.BatchNormalization()(output)

    # (n_upSample - 2) more UpSampling2D blocks: 1/4 of the input -> 104,104,128
    for _ in range(n_upSample - 2):
        output = layers.UpSampling2D((2, 2), data_format=IMAGE_ORDERING)(output)
        output = layers.ZeroPadding2D((1, 1), data_format=IMAGE_ORDERING)(output)
        output = layers.Conv2D(128, (3, 3), padding='valid', data_format=IMAGE_ORDERING)(output)
        output = layers.BatchNormalization()(output)

    # Last UpSampling2D: 1/2 of the input -> 208,208,64
    output = layers.UpSampling2D((2, 2), data_format=IMAGE_ORDERING)(output)
    output = layers.ZeroPadding2D((1, 1), data_format=IMAGE_ORDERING)(output)
    output = layers.Conv2D(64, (3, 3), padding='valid', data_format=IMAGE_ORDERING)(output)
    output = layers.BatchNormalization()(output)

    # Pixel-wise classification layer: output is (h_input/2, w_input/2, n_classes), e.g. 208,208,2
    output = layers.Conv2D(n_classes, (3, 3), padding='same', data_format=IMAGE_ORDERING)(output)
    return output
```

d. The SegNet network
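The `SegNet` function flattens the decoder output from (H/2, W/2, n_classes) to (H/2 · W/2, n_classes), so the softmax (and later the loss) treats every pixel as an independent classification. A NumPy sketch of that reshape-then-softmax step, and of undoing it at prediction time, using small made-up sizes:

```python
import numpy as np


def per_pixel_softmax(logits):
    """logits: (h, w, n_classes) -> probabilities of shape (h * w, n_classes), one row per pixel."""
    h, w, n_classes = logits.shape
    flat = logits.reshape(h * w, n_classes)
    flat = flat - flat.max(axis=-1, keepdims=True)   # subtract the row max for numerical stability
    exp = np.exp(flat)
    return exp / exp.sum(axis=-1, keepdims=True)


rng = np.random.default_rng(0)
logits = rng.normal(size=(4, 5, 2))       # e.g. a 4x5 output map with 2 classes
probs = per_pixel_softmax(logits)
print(probs.shape)                         # (20, 2)
label_map = probs.reshape(4, 5, 2).argmax(axis=-1)   # back to a 4x5 map of class indices
print(label_map.shape)                     # (4, 5)
```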

```python
import tensorflow as tf
from tensorflow.keras import layers


# Semantic segmentation network SegNet
def SegNet(input_height=416, input_width=416, n_classes=2, n_upSample=3, encoder_level=3):
    img_input, features = vggnet_encoder(input_height=input_height, input_width=input_width)
    feature = features[encoder_level]  # block-4 output: (26,26,512)
    output = decoder(feature, n_classes, n_upSample)
    # Flatten the spatial dimensions: (batch, H/2 * W/2, n_classes)
    output = layers.Reshape((int(input_height / 2) * int(input_width / 2), n_classes))(output)
    output = layers.Softmax()(output)
    model = tf.keras.Model(img_input, output)
    return model
```

3. Training and testing
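The training code relies on helpers from the author's project that this post does not show (`generate_arrays_from_file`, `lines`, `num_train`, `num_val`, `loss_function`). As a hedged illustration of what they need to produce, here is a NumPy sketch that splits the `train.txt` lines into training and validation sets and turns an integer label mask into a one-hot target with the model's (H/2 · W/2, n_classes) layout. The helper names, the 10% validation ratio and the fixed shuffle seed are assumptions, and the mask is assumed to be already resized to H/2 × W/2.

```python
import random

import numpy as np


def split_lines(lines, val_ratio=0.1, seed=0):
    """Shuffle the 'image.jpg;label.png' lines and split them (val_ratio and seed are arbitrary choices)."""
    lines = list(lines)
    random.Random(seed).shuffle(lines)
    num_val = int(len(lines) * val_ratio)
    num_train = len(lines) - num_val
    return lines, num_train, num_val


def mask_to_target(mask, n_classes):
    """Integer class mask (h, w) -> one-hot target (h * w, n_classes), matching the model output layout."""
    return np.eye(n_classes, dtype=np.float32)[mask.reshape(-1)]


lines, num_train, num_val = split_lines([f"{i}.jpg;{i}.png" for i in range(20)])
print(num_train, num_val)   # 18 2
print(mask_to_target(np.array([[0, 1], [1, 0]]), 2))
```

With this layout, `lines[:num_train]` and `lines[num_train:]` give the training and validation lists used in `model.fit`.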

a. Training

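```python
from tensorflow.keras import optimizers

# loss_function, generate_arrays_from_file, lines, num_train, num_val, batch_size and the callbacks
# (checkpoint_period, reduce_lr, early_stopping) come from the author's project and are not shown here.
model.compile(loss=loss_function,                              # cross-entropy loss
              optimizer=optimizers.Adam(learning_rate=1e-3),   # optimizer
              metrics=['accuracy'])                            # evaluation metric

# Training (in TF2, Model.fit accepts Python generators; fit_generator is deprecated)
model.fit(generate_arrays_from_file(lines[:num_train], batch_size),                # training set
          steps_per_epoch=max(1, num_train // batch_size),                         # steps per epoch
          validation_data=generate_arrays_from_file(lines[num_train:], batch_size),  # validation set
          validation_steps=max(1, num_val // batch_size),
          epochs=50,
          initial_epoch=0,
          callbacks=[checkpoint_period, reduce_lr, early_stopping])                # callbacks
```

b. Testing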

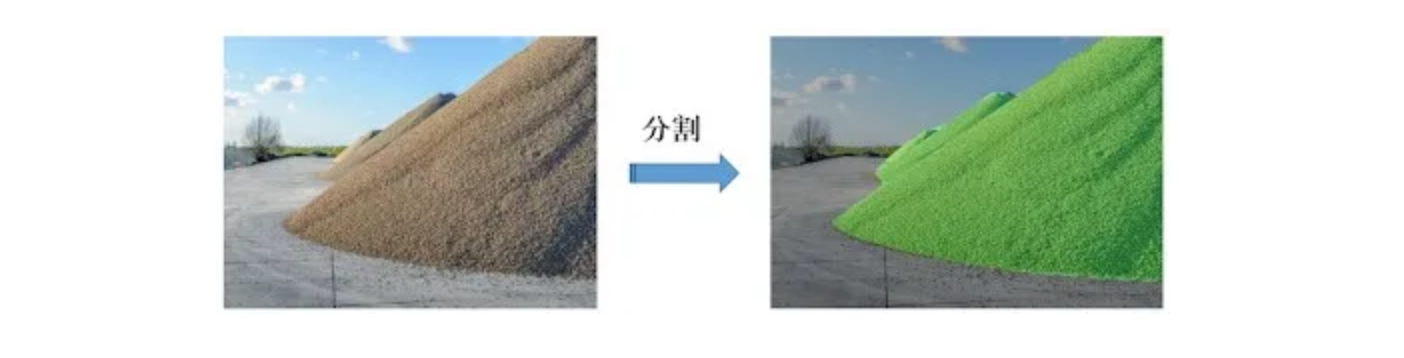

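```python
import copy
import os

import numpy as np
from PIL import Image

# HEIGHT, WIDTH and NCLASSES must match the values the model was built with (416, 416, 2 by default);
# class_colors (one RGB tuple per class) and the trained `model` come from the author's project.
imgs = os.listdir("./img_test/")
for jpg in imgs:
    img = Image.open("./img_test/" + jpg).convert("RGB")
    old_img = copy.deepcopy(img)
    original_h = np.array(img).shape[0]
    original_w = np.array(img).shape[1]

    # Resize, scale to [0, 1] and add a batch dimension
    img = img.resize((WIDTH, HEIGHT))
    img = np.array(img) / 255
    img = img.reshape(-1, HEIGHT, WIDTH, 3)

    # Per-pixel class probabilities -> class index map at half resolution
    pr = model.predict(img)[0]
    pr = pr.reshape((int(HEIGHT / 2), int(WIDTH / 2), NCLASSES)).argmax(axis=-1)

    # Paint every class with its color on all three RGB channels
    seg_img = np.zeros((int(HEIGHT / 2), int(WIDTH / 2), 3))
    colors = class_colors
    for c in range(NCLASSES):
        for ch in range(3):
            seg_img[:, :, ch] += ((pr == c) * colors[c][ch]).astype('uint8')

    # Image.fromarray converts the array to a PIL image; resize it back to the original size
    seg_img = Image.fromarray(np.uint8(seg_img)).resize((original_w, original_h))
    # Blend the two images into one
    image = Image.blend(old_img, seg_img, 0.3)
    image.save("./img_out/" + jpg)
```

The blended results look like the example figure in this section; your own results may differ.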
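As an aside, the per-class, per-channel loop in the test script can be replaced by NumPy fancy indexing: index an (NCLASSES, 3) color table with the class map. A minimal sketch with a made-up two-class palette (the real `class_colors` comes from the author's project, and `colorize` is a hypothetical helper):

```python
import numpy as np


def colorize(class_map, palette):
    """class_map: (h, w) integer class indices; palette: (n_classes, 3) RGB -> (h, w, 3) uint8 image."""
    palette = np.asarray(palette, dtype=np.uint8)
    return palette[class_map]


palette = [(0, 0, 0), (0, 255, 0)]   # hypothetical colors: background black, class 1 green
pr = np.array([[0, 1], [1, 1]])
seg = colorize(pr, palette)
print(seg.shape)                      # (2, 2, 3)
```

The result can be passed straight to `Image.fromarray(...)` before blending.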

BERT

1. Algorithm overview

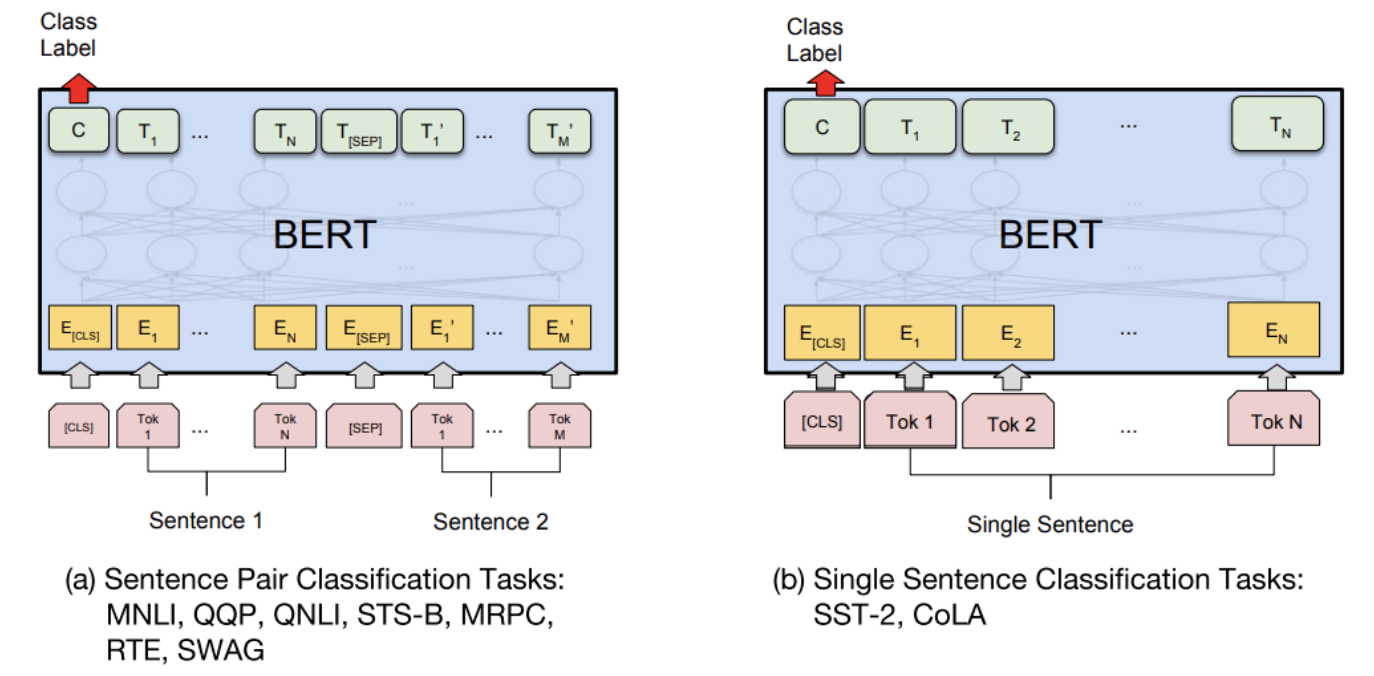

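BERT's architecture is built on the Transformer (its encoder part). Its defining feature is that it drops the traditional RNN and CNN layers: self-attention connects any two words directly, so the path between them has length 1 no matter how far apart they are in the sentence. This effectively addresses the long-standing problem of long-range dependencies in NLP.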
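The core operation is scaled dot-product attention, softmax(QKᵀ/√d_k)·V: every position computes a weighted average over all positions in a single step. A single-head NumPy sketch, handling the padding mask the same way as the TensorFlow code below (1 = real token, 0 = padding, turned into a large negative bias before the softmax):

```python
import numpy as np


def scaled_dot_product_attention(q, k, v, mask=None):
    """q, k, v: (seq_len, d_k). mask: (seq_len,) with 1 for real tokens and 0 for padding."""
    d_k = q.shape[-1]
    scores = q @ k.T / np.sqrt(d_k)                          # (seq_len, seq_len) pairwise similarities
    if mask is not None:
        scores = scores + (1.0 - mask)[None, :] * -10000.0   # padded keys get a large negative bias
    scores = scores - scores.max(axis=-1, keepdims=True)     # numerical stability
    weights = np.exp(scores)
    weights = weights / weights.sum(axis=-1, keepdims=True)  # softmax over the keys
    return weights @ v, weights


rng = np.random.default_rng(0)
q = k = v = rng.normal(size=(4, 8))
mask = np.array([1.0, 1.0, 1.0, 0.0])    # the last position is padding
out, w = scaled_dot_product_attention(q, k, v, mask)
print(out.shape, w[:, 3].max())
```

Each row of `w` is one token's attention distribution, and the padded position receives essentially zero weight.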

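BERT and other Transformer encoder architectures have been highly successful at computing vector-space representations of text in NLP. They have not only advanced the state of the art on academic benchmarks but are also widely used in large-scale applications such as Google Search.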
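Comparing two such text vectors usually comes down to cosine similarity, which is what the similarity heatmap later in this post computes with scikit-learn's `pairwise.cosine_similarity`. An equivalent NumPy sketch on toy 3-D vectors (BERT's pooled outputs are 768-dimensional); `cosine_similarity_matrix` is a hypothetical helper name:

```python
import numpy as np


def cosine_similarity_matrix(features):
    """features: (n, d) -> (n, n) matrix of pairwise cosine similarities."""
    normed = features / np.linalg.norm(features, axis=1, keepdims=True)
    return normed @ normed.T


emb = np.array([[1.0, 0.0, 0.0],
                [0.9, 0.1, 0.0],
                [0.0, 1.0, 0.0]])
sim = cosine_similarity_matrix(emb)
print(np.round(sim, 3))
```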

2. Building BERT with TensorFlow

a. Model configuration

```python
class BertConfig(object):
    def __init__(self, **kwargs):
        super().__init__()
        self.vocab_size = kwargs.pop('vocab_size', 21128)  # vocab size of pretrained model `bert-base-chinese`
        self.type_vocab_size = kwargs.pop('type_vocab_size', 2)
        self.hidden_size = kwargs.pop('hidden_size', 768)
        self.num_hidden_layers = kwargs.pop('num_hidden_layers', 12)
        self.num_attention_heads = kwargs.pop('num_attention_heads', 12)
        self.intermediate_size = kwargs.pop('intermediate_size', 3072)
        self.hidden_activation = kwargs.pop('hidden_activation', 'gelu')
        self.hidden_dropout_rate = kwargs.pop('hidden_dropout_rate', 0.1)
        self.attention_dropout_rate = kwargs.pop('attention_dropout_rate', 0.1)
        self.max_position_embeddings = kwargs.pop('max_position_embeddings', 512)
        self.max_sequence_length = kwargs.pop('max_sequence_length', 512)
```

b. Embedding
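`BertEmbedding` adds three lookups (token, segment/token-type and position embeddings) and then applies LayerNorm. The shape bookkeeping in a NumPy sketch with tiny random tables; the sizes and token ids are illustrative, not the `bert-base-chinese` values, and this LayerNorm omits the learned scale and bias:

```python
import numpy as np

rng = np.random.default_rng(0)
vocab_size, type_vocab_size, max_positions, hidden = 50, 2, 16, 8   # toy sizes

token_table = rng.normal(scale=0.02, size=(vocab_size, hidden))
type_table = rng.normal(scale=0.02, size=(type_vocab_size, hidden))
position_table = rng.normal(scale=0.02, size=(max_positions, hidden))

input_ids = np.array([[2, 7, 9, 3, 0, 0]])        # (batch=1, seq_len=6), hypothetical ids
token_type_ids = np.zeros_like(input_ids)         # single-sentence input: all segment 0
position_ids = np.arange(input_ids.shape[1])[None, :]

# Each lookup is plain row indexing; the three results are summed element-wise
embeddings = token_table[input_ids] + type_table[token_type_ids] + position_table[position_ids]


def layer_norm(x, eps=1e-12):
    """LayerNorm over the hidden axis, without the learned scale and bias."""
    mean = x.mean(axis=-1, keepdims=True)
    var = x.var(axis=-1, keepdims=True)
    return (x - mean) / np.sqrt(var + eps)


embeddings = layer_norm(embeddings)
print(embeddings.shape)  # (1, 6, 8)
```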

```python
import tensorflow as tf


class BertEmbedding(tf.keras.layers.Layer):
    def __init__(self, config, **kwargs):
        super().__init__(name='BertEmbedding')
        self.vocab_size = config.vocab_size
        self.hidden_size = config.hidden_size
        self.type_vocab_size = config.type_vocab_size
        self.position_embedding = tf.keras.layers.Embedding(
            config.max_position_embeddings,
            config.hidden_size,
            embeddings_initializer=tf.keras.initializers.TruncatedNormal(stddev=0.02),
            name='position_embedding'
        )
        self.token_type_embedding = tf.keras.layers.Embedding(
            config.type_vocab_size,
            config.hidden_size,
            embeddings_initializer=tf.keras.initializers.TruncatedNormal(stddev=0.02),
            name='token_type_embedding'
        )
        self.layer_norm = tf.keras.layers.LayerNormalization(epsilon=1e-12, name='LayerNorm')
        self.dropout = tf.keras.layers.Dropout(config.hidden_dropout_rate)

    def build(self, input_shape):
        with tf.name_scope('bert_embeddings'):
            # Token embedding matrix; also reused (transposed) as the MLM output projection
            self.token_embedding = self.add_weight(
                name='weight',
                shape=[self.vocab_size, self.hidden_size],
                initializer=tf.keras.initializers.TruncatedNormal(stddev=0.02)
            )
        super().build(input_shape)

    def call(self, inputs, training=False, mode='embedding'):
        # used for masked lm: project hidden states onto the vocabulary
        if mode == 'linear':
            return tf.matmul(inputs, self.token_embedding, transpose_b=True)

        input_ids, token_type_ids = inputs
        position_ids = tf.range(tf.shape(input_ids)[1], dtype=tf.int32)[tf.newaxis, :]
        if token_type_ids is None:
            token_type_ids = tf.zeros_like(input_ids)

        position_embeddings = self.position_embedding(position_ids)
        token_type_embeddings = self.token_type_embedding(token_type_ids)
        token_embeddings = tf.gather(self.token_embedding, input_ids)

        # Sum of token, segment and position embeddings, then LayerNorm and dropout
        embeddings = token_embeddings + token_type_embeddings + position_embeddings
        embeddings = self.layer_norm(embeddings)
        embeddings = self.dropout(embeddings, training=training)
        return embeddings
```

c. Multi-head attention
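Multi-head attention runs several attention heads in parallel on slices of the hidden vector. The `_split_heads` helper inside `BertAttention` does this with a reshape followed by a transpose, and the end of `call` undoes it. The same round trip in NumPy with toy sizes (BERT-base uses hidden_size=768 and 12 heads, i.e. 64 dimensions per head); the function names are hypothetical:

```python
import numpy as np


def split_heads(x, num_heads):
    """(batch, seq, hidden) -> (batch, heads, seq, head_size)."""
    batch, seq, hidden = x.shape
    x = x.reshape(batch, seq, num_heads, hidden // num_heads)
    return x.transpose(0, 2, 1, 3)


def merge_heads(x):
    """(batch, heads, seq, head_size) -> (batch, seq, hidden)."""
    batch, heads, seq, head_size = x.shape
    return x.transpose(0, 2, 1, 3).reshape(batch, seq, heads * head_size)


x = np.arange(2 * 3 * 8).reshape(2, 3, 8)   # batch=2, seq=3, hidden=8
heads = split_heads(x, num_heads=4)
print(heads.shape)                          # (2, 4, 3, 2)
```

Because each head works on head_size dimensions, the attention scores are scaled by √head_size (√64 in BERT-base), not by √hidden_size.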

```python
import tensorflow as tf


class BertAttention(tf.keras.layers.Layer):
    """Multi-head self-attention mechanism from transformer."""

    def __init__(self, config, **kwargs):
        super().__init__(name='BertAttention')
        self.num_attention_heads = config.num_attention_heads
        self.hidden_size = config.hidden_size
        assert self.hidden_size % self.num_attention_heads == 0
        self.attention_head_size = self.hidden_size // self.num_attention_heads
        self.wq = tf.keras.layers.Dense(
            self.hidden_size, kernel_initializer=tf.keras.initializers.TruncatedNormal(stddev=0.02), name='query'
        )
        self.wk = tf.keras.layers.Dense(
            self.hidden_size, kernel_initializer=tf.keras.initializers.TruncatedNormal(stddev=0.02), name='key'
        )
        self.wv = tf.keras.layers.Dense(
            self.hidden_size, kernel_initializer=tf.keras.initializers.TruncatedNormal(stddev=0.02), name='value'
        )
        self.attention_dropout = tf.keras.layers.Dropout(config.attention_dropout_rate)
        self.dense = tf.keras.layers.Dense(
            self.hidden_size, kernel_initializer=tf.keras.initializers.TruncatedNormal(stddev=0.02), name='dense'
        )
        self.layer_norm = tf.keras.layers.LayerNormalization(epsilon=1e-12, name='LayerNorm')
        self.hidden_dropout = tf.keras.layers.Dropout(config.hidden_dropout_rate)

    def call(self, inputs, training=False):
        hidden_states, attention_mask = inputs
        batch_size = tf.shape(hidden_states)[0]

        query = self.wq(hidden_states)
        key = self.wk(hidden_states)
        value = self.wv(hidden_states)

        def _split_heads(x):
            # (batch, seq, hidden) -> (batch, heads, seq, head_size)
            x = tf.reshape(x, shape=[batch_size, -1, self.num_attention_heads, self.attention_head_size])
            return tf.transpose(x, perm=[0, 2, 1, 3])

        query = _split_heads(query)
        key = _split_heads(key)
        value = _split_heads(value)

        # Scaled dot-product attention, scaled by sqrt of the per-head size d_k
        attention_score = tf.matmul(query, key, transpose_b=True)
        dk = tf.cast(self.attention_head_size, tf.float32)
        attention_score = attention_score / tf.math.sqrt(dk)

        if attention_mask is not None:
            # mask: 1 for real tokens, 0 for padding -> large negative bias on padded positions
            attention_mask = tf.cast(attention_mask[:, tf.newaxis, tf.newaxis, :], dtype=tf.float32)
            attention_mask = (1.0 - attention_mask) * -10000.0
            attention_score = attention_score + attention_mask

        attention_score = tf.nn.softmax(attention_score)
        attention_score = self.attention_dropout(attention_score, training=training)

        context = tf.matmul(attention_score, value)
        # (batch, heads, seq, head_size) -> (batch, seq, hidden)
        context = tf.transpose(context, perm=[0, 2, 1, 3])
        context = tf.reshape(context, (batch_size, -1, self.hidden_size))

        # Output projection, dropout, then residual connection + layer norm
        _hidden_states = self.dense(context)
        _hidden_states = self.hidden_dropout(_hidden_states, training=training)
        _hidden_states = self.layer_norm(hidden_states + _hidden_states)
        return _hidden_states, attention_score
```

d. Encoder
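The encoder's feed-forward block uses GELU, GELU(x) = x·Φ(x) with Φ the standard normal CDF: `gelu` computes it exactly with `erf`, while `gelu_new` uses the tanh approximation. A quick NumPy comparison of the two on a grid of inputs (the 1e-3 bound checked here is a loose tolerance chosen for this sketch, not a published figure):

```python
import math

import numpy as np

erf = np.vectorize(math.erf)


def gelu_exact(x):
    return x * 0.5 * (1.0 + erf(x / math.sqrt(2.0)))


def gelu_tanh(x):
    return x * 0.5 * (1.0 + np.tanh(math.sqrt(2.0 / math.pi) * (x + 0.044715 * x ** 3)))


x = np.linspace(-5.0, 5.0, 1001)
max_diff = np.max(np.abs(gelu_exact(x) - gelu_tanh(x)))
print(f"max |exact - tanh approx| on [-5, 5]: {max_diff:.2e}")
```

The two curves are practically interchangeable, which is why implementations differ in which one they use.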

```python
import numpy as np
import tensorflow as tf


def gelu(x):
    # exact GELU: x * Phi(x)
    cdf = 0.5 * (1.0 + tf.math.erf(x / tf.math.sqrt(2.0)))
    return x * cdf


def gelu_new(x):
    # tanh approximation of GELU
    cdf = 0.5 * (1.0 + tf.tanh((np.sqrt(2 / np.pi) * (x + 0.044715 * tf.pow(x, 3)))))
    return x * cdf


def swish(x):
    return x * tf.sigmoid(x)


ACT2FN = {
    "gelu": tf.keras.layers.Activation(gelu),
    "relu": tf.keras.activations.relu,
    "swish": tf.keras.layers.Activation(swish),
    "gelu_new": tf.keras.layers.Activation(gelu_new),
}


class BertIntermediate(tf.keras.layers.Layer):
    def __init__(self, config, **kwargs):
        super().__init__(**kwargs)
        self.dense = tf.keras.layers.Dense(
            config.intermediate_size,
            kernel_initializer=tf.keras.initializers.TruncatedNormal(stddev=0.02)
        )
        if isinstance(config.hidden_activation, tf.keras.layers.Activation):
            self.activation = config.hidden_activation
        elif isinstance(config.hidden_activation, str):
            self.activation = ACT2FN[config.hidden_activation]
        else:
            self.activation = ACT2FN['gelu']

    def call(self, inputs, training=False):
        hidden_states = inputs
        hidden_states = self.dense(hidden_states)
        hidden_states = self.activation(hidden_states)
        return hidden_states


class BertEncoderLayer(tf.keras.layers.Layer):
    def __init__(self, config, **kwargs):
        super().__init__(name='BertEncoderLayer')
        self.attention = BertAttention(config)
        self.intermediate = BertIntermediate(config)
        self.dense = tf.keras.layers.Dense(
            config.hidden_size,
            kernel_initializer=tf.keras.initializers.TruncatedNormal(stddev=0.02)
        )
        self.dropout = tf.keras.layers.Dropout(config.hidden_dropout_rate)
        self.layer_norm = tf.keras.layers.LayerNormalization(epsilon=1e-12, name='LayerNorm')

    def call(self, inputs, training=False):
        hidden_states, attention_mask = inputs
        # Self-attention sub-layer
        _hidden_states, attention_score = self.attention(inputs=[hidden_states, attention_mask], training=training)
        # Feed-forward sub-layer: dense -> activation -> dense, then residual + layer norm
        outputs = self.intermediate(inputs=_hidden_states)
        outputs = self.dense(outputs)
        outputs = self.dropout(outputs, training=training)
        outputs = self.layer_norm(_hidden_states + outputs)
        return outputs, attention_score


class BertEncoder(tf.keras.layers.Layer):
    def __init__(self, config, **kwargs):
        super().__init__(name='BertEncoder')
        self.encoder_layers = [BertEncoderLayer(config) for _ in range(config.num_hidden_layers)]

    def call(self, inputs, training=False):
        hidden_states, attention_mask = inputs
        all_hidden_states = []
        all_attention_scores = []
        for encoder in self.encoder_layers:
            hidden_states, attention_score = encoder(inputs=[hidden_states, attention_mask], training=training)
            all_hidden_states.append(hidden_states)
            all_attention_scores.append(attention_score)
        return all_hidden_states, all_attention_scores
```

e. Masked Language Model
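For context on what the MLM head is trained to predict: in the BERT paper, 15% of the input tokens are selected; of those, 80% are replaced by `[MASK]`, 10% by a random token and 10% are left unchanged, and the model must recover the original tokens at the selected positions. A NumPy sketch of that masking step; `mask_tokens`, the `mask_id` value and the use of -1 to mark positions without a label are assumptions for illustration, while the vocabulary size matches the `BertConfig` default above:

```python
import numpy as np


def mask_tokens(input_ids, mask_id, vocab_size, rng, mask_prob=0.15):
    """BERT-style masking. Returns (masked_inputs, labels); labels are -1 where nothing is predicted."""
    input_ids = np.asarray(input_ids)
    labels = np.full_like(input_ids, -1)
    selected = rng.random(input_ids.shape) < mask_prob         # ~15% of positions become targets
    labels[selected] = input_ids[selected]

    masked = input_ids.copy()
    action = rng.random(input_ids.shape)
    to_mask = selected & (action < 0.8)                         # 80% of targets -> [MASK]
    to_random = selected & (action >= 0.8) & (action < 0.9)     # 10% of targets -> random token
    masked[to_mask] = mask_id
    masked[to_random] = rng.integers(0, vocab_size, size=int(to_random.sum()))
    # The remaining 10% of targets keep their original token
    return masked, labels


rng = np.random.default_rng(0)
ids = rng.integers(1000, 2000, size=(4, 32))
masked, labels = mask_tokens(ids, mask_id=103, vocab_size=21128, rng=rng)   # 103: assumed [MASK] id
print((labels != -1).mean())
```

The MLM head's logits are then compared with `labels` only at the positions where the label is not -1.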

```python
import tensorflow as tf


class BertMLMHead(tf.keras.layers.Layer):
    """Masked language model for BERT pre-training."""

    def __init__(self, config, embedding, **kwargs):
        super().__init__(**kwargs)
        self.vocab_size = config.vocab_size
        self.embedding = embedding
        self.dense = tf.keras.layers.Dense(
            config.hidden_size,
            kernel_initializer=tf.keras.initializers.TruncatedNormal(stddev=0.02),
            name='dense'
        )
        if isinstance(config.hidden_activation, tf.keras.layers.Activation):
            self.activation = config.hidden_activation
        elif isinstance(config.hidden_activation, str):
            self.activation = ACT2FN[config.hidden_activation]
        else:
            self.activation = ACT2FN['gelu']
        self.layer_norm = tf.keras.layers.LayerNormalization(epsilon=1e-12)

    def build(self, input_shape):
        self.bias = self.add_weight(shape=(self.vocab_size,), initializer='zeros', trainable=True, name='bias')
        super().build(input_shape)

    def call(self, inputs, training=False):
        hidden_states = inputs
        hidden_states = self.dense(hidden_states)
        hidden_states = self.activation(hidden_states)
        hidden_states = self.layer_norm(hidden_states)
        # Reuse the token embedding matrix (weight tying) to produce vocabulary logits
        hidden_states = self.embedding(inputs=hidden_states, mode='linear')
        hidden_states = hidden_states + self.bias
        return hidden_states
```

Finally, the pooler and the next-sentence-prediction (NSP) head:

```python
import tensorflow as tf


class BertPooler(tf.keras.layers.Layer):
    def __init__(self, config, **kwargs):
        super().__init__(**kwargs)
        self.dense = tf.keras.layers.Dense(
            config.hidden_size,
            kernel_initializer=tf.keras.initializers.TruncatedNormal(stddev=0.02),
            activation='tanh',
            name='pooler'
        )

    def call(self, inputs, training=False):
        hidden_states = inputs
        # pool the first token: [CLS]
        outputs = self.dense(hidden_states[:, 0])
        return outputs


class BertNSPHead(tf.keras.layers.Layer):
    """Next sentence prediction for BERT pre-training."""

    def __init__(self, config, **kwargs):
        super().__init__(**kwargs)
        self.classifier = tf.keras.layers.Dense(
            2,
            kernel_initializer=tf.keras.initializers.TruncatedNormal(stddev=0.02),
            name='sequence_relationip'
        )

    def call(self, inputs, training=False):
        pooled_output = inputs
        relation = self.classifier(pooled_output)
        return relation
```

3. Calling BERT from TensorFlow Hub
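Instead of building the model by hand, a pretrained encoder can be loaded from TensorFlow Hub together with a matching preprocessing model. The preprocessing model turns raw strings into the three int32 tensors shown in the output below (`input_word_ids`, `input_mask`, `input_type_ids`), each padded to length 128. A pure-Python sketch of that packing for one already-tokenized sentence: ids 101 and 102 are `[CLS]` and `[SEP]` in the uncased BERT vocabulary (101 is the first column of the printed `input_word_ids`), while the helper name and the example word ids are hypothetical.

```python
def pack_single_sentence(word_ids, seq_length=128, cls_id=101, sep_id=102):
    """Build input_word_ids / input_mask / input_type_ids for one sentence: [CLS] tokens [SEP] + padding."""
    ids = [cls_id] + list(word_ids)[: seq_length - 2] + [sep_id]   # truncate to leave room for [CLS]/[SEP]
    pad = seq_length - len(ids)
    input_word_ids = ids + [0] * pad
    input_mask = [1] * len(ids) + [0] * pad      # 1 = real token, 0 = padding
    input_type_ids = [0] * seq_length            # single segment -> all zeros
    return input_word_ids, input_mask, input_type_ids


word_ids, mask, type_ids = pack_single_sentence([2182, 2057, 2175], seq_length=8)
print(word_ids)   # [101, 2182, 2057, 2175, 102, 0, 0, 0]
print(mask)       # [1, 1, 1, 1, 1, 0, 0, 0]
```

This is why every row of `input_mask` in the printed output starts with 1s and ends with 0s, and `input_type_ids` is all zeros for single sentences.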

a. Configure the model

```python
import seaborn as sns
from sklearn.metrics import pairwise

import tensorflow as tf
import tensorflow_hub as hub
import tensorflow_text as text  # registers the TF ops used by the preprocessing model

BERT_MODEL = "https://hub.tensorflow.google.cn/google/experts/bert/wiki_books/2"
PREPROCESS_MODEL = "https://hub.tensorflow.google.cn/tensorflow/bert_en_uncased_preprocess/1"
```

b. Get some sentences from Wikipedia to run through the model

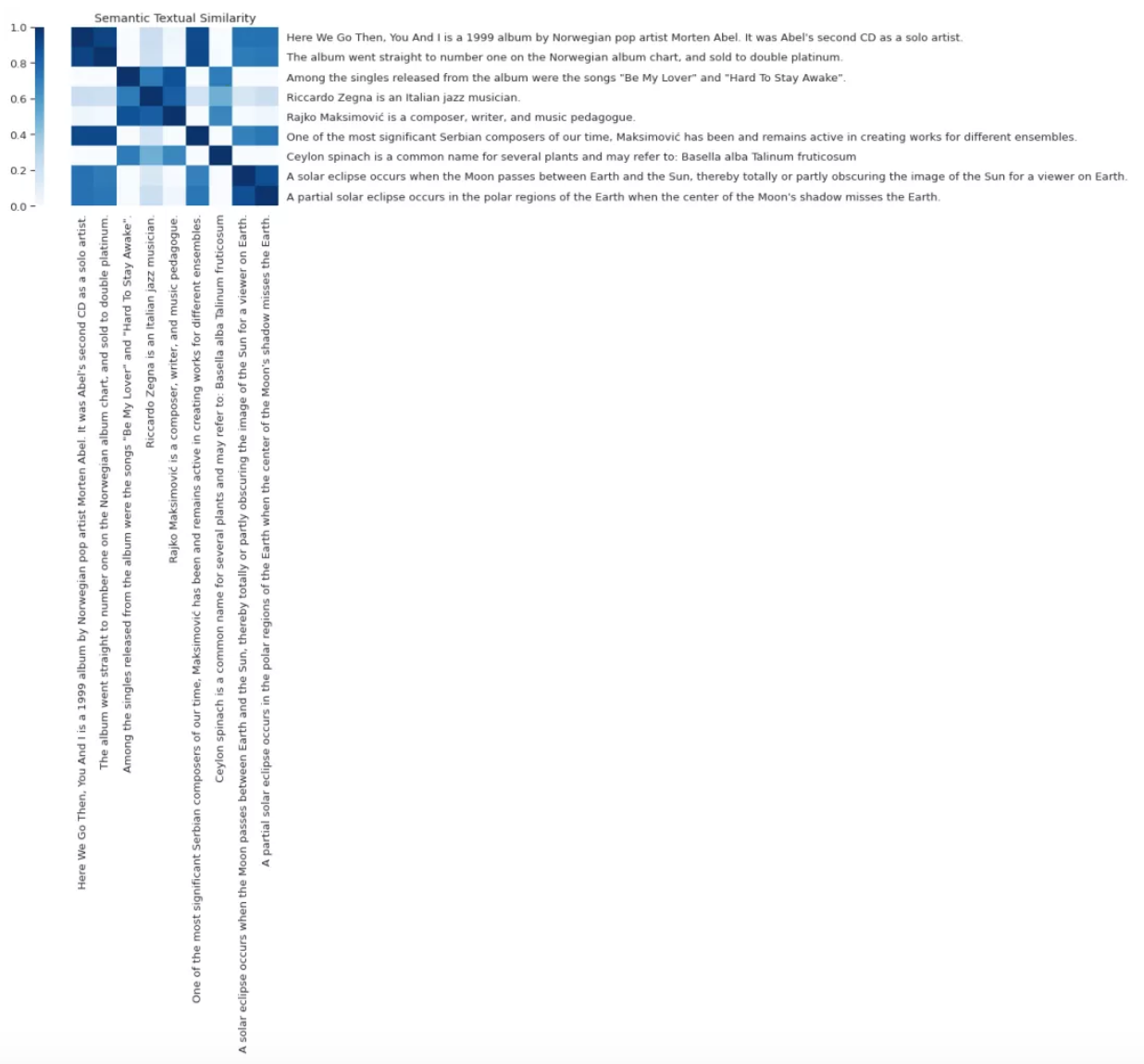

```python
sentences = [
    "Here We Go Then, You And I is a 1999 album by Norwegian pop artist Morten Abel. It was Abel's second CD as a solo artist.",
    "The album went straight to number one on the Norwegian album chart, and sold to double platinum.",
    "Among the singles released from the album were the songs \"Be My Lover\" and \"Hard To Stay Awake\".",
    "Riccardo Zegna is an Italian jazz musician.",
    "Rajko Maksimović is a composer, writer, and music pedagogue.",
    "One of the most significant Serbian composers of our time, Maksimović has been and remains active in creating works for different ensembles.",
    "Ceylon spinach is a common name for several plants and may refer to: Basella alba Talinum fruticosum",
    "A solar eclipse occurs when the Moon passes between Earth and the Sun, thereby totally or partly obscuring the image of the Sun for a viewer on Earth.",
    "A partial solar eclipse occurs in the polar regions of the Earth when the center of the Moon's shadow misses the Earth.",
]
```

c. Run the demo

```python
preprocess = hub.load(PREPROCESS_MODEL)
bert = hub.load(BERT_MODEL)
inputs = preprocess(sentences)
outputs = bert(inputs)

print("Sentences:")
print(sentences)

print("\nBERT inputs:")
print(inputs)

print("\nPooled embeddings:")
print(outputs["pooled_output"])

print("\nPer token embeddings:")
print(outputs["sequence_output"])
```

The output is as follows:

```
Sentences:
["Here We Go Then, You And I is a 1999 album by Norwegian pop artist Morten Abel. It was Abel's second CD as a solo artist.", 'The album went straight to number one on the Norwegian album chart, and sold to double platinum.', 'Among the singles released from the album were the songs "Be My Lover" and "Hard To Stay Awake".', 'Riccardo Zegna is an Italian jazz musician.', 'Rajko Maksimović is a composer, writer, and music pedagogue.', 'One of the most significant Serbian composers of our time, Maksimović has been and remains active in creating works for different ensembles.', 'Ceylon spinach is a common name for several plants and may refer to: Basella alba Talinum fruticosum', 'A solar eclipse occurs when the Moon passes between Earth and the Sun, thereby totally or partly obscuring the image of the Sun for a viewer on Earth.', "A partial solar eclipse occurs in the polar regions of the Earth when the center of the Moon's shadow misses the Earth."]

BERT inputs:
{'input_type_ids': <tf.Tensor: shape=(9, 128), dtype=int32, numpy=
array([[0, 0, 0, ..., 0, 0, 0],
       [0, 0, 0, ..., 0, 0, 0],
       [0, 0, 0, ..., 0, 0, 0],
       ...,
       [0, 0, 0, ..., 0, 0, 0],
       [0, 0, 0, ..., 0, 0, 0],
       [0, 0, 0, ..., 0, 0, 0]], dtype=int32)>, 'input_mask': <tf.Tensor: shape=(9, 128), dtype=int32, numpy=
array([[1, 1, 1, ..., 0, 0, 0],
       [1, 1, 1, ..., 0, 0, 0],
       [1, 1, 1, ..., 0, 0, 0],
       ...,
       [1, 1, 1, ..., 0, 0, 0],
       [1, 1, 1, ..., 0, 0, 0],
       [1, 1, 1, ..., 0, 0, 0]], dtype=int32)>, 'input_word_ids': <tf.Tensor: shape=(9, 128), dtype=int32, numpy=
array([[  101,  2182,  2057, ...,     0,     0,     0],
       [  101,  1996,  2201, ...,     0,     0,     0],
       [  101,  2426,  1996, ...,     0,     0,     0],
       ...,
       [  101, 16447,  6714, ...,     0,     0,     0],
       [  101,  1037,  5943, ...,     0,     0,     0],
       [  101,  1037,  7704, ...,     0,     0,     0]], dtype=int32)>}

Pooled embeddings:
tf.Tensor(
[[ 0.79759794 -0.48580435  0.49781656 ... -0.34488496  0.39727688 -0.20639414]
 [ 0.57120484 -0.41205186  0.70489156 ... -0.35185218  0.19032398 -0.4041889 ]
 [-0.6993836   0.1586663   0.06569844 ... -0.06232387 -0.8155013  -0.07923748]
 ...
 [-0.35727036  0.77089816  0.15756643 ...  0.441857   -0.8644817   0.04504787]
 [ 0.9107702   0.41501534  0.5606339  ... -0.49263883  0.3964067  -0.05036191]
 [ 0.90502924 -0.15505327  0.726722   ... -0.34734532  0.50526506 -0.19542982]], shape=(9, 768), dtype=float32)

Per token embeddings:
tf.Tensor(
[[[ 1.09197533e+00 -5.30553877e-01  5.46399117e-01 ... -3.59626472e-01  4.20411289e-01 -2.09402084e-01]
  [ 1.01438284e+00  7.80790329e-01  8.53758693e-01 ...  5.52820444e-01 -1.12457883e+00  5.60277641e-01]
  [ 7.88627684e-01  7.77753443e-02  9.51507747e-01 ... -1.90755337e-01  5.92060506e-01  6.19107723e-01]
  ...
  [-3.22031736e-01 -4.25212324e-01 -1.28237933e-01 ... -3.90951157e-01 -7.90973544e-01  4.22365129e-01]
  [-3.10389847e-02  2.39855915e-01 -2.19942629e-01 ... -1.14405245e-01 -1.26804781e+00 -1.61363974e-01]
  [-4.20636892e-01  5.49730241e-01 -3.24446023e-01 ... -1.84789032e-01 -1.13429689e+00 -5.89773059e-02]]

 ...

 [[ 1.49934173e+00 -1.56314075e-01  9.21745181e-01 ... -3.62421691e-01  5.56351066e-01 -1.97976440e-01]
  [ 1.11105371e+00  3.66513431e-01  3.55058551e-01 ... -5.42975247e-01  1.44716531e-01 -3.16758066e-01]
  [ 2.40487278e-01  3.81156325e-01 -5.91827273e-01 ...  3.74107122e-01 -5.98296165e-01 -1.01662648e+00]
  ...
  [ 1.01586223e+00  5.02603769e-01  1.07373089e-01 ... -9.56426382e-01 -4.10394996e-01 -2.67601997e-01]
  [ 1.18489289e+00  6.54797733e-01  1.01688504e-03 ... -8.61546934e-01 -8.80392492e-02 -3.06370854e-01]
  [ 1.26691115e+00  4.77678716e-01  6.62857294e-03 ... -1.15858066e+00 -7.06758797e-02 -1.86787039e-01]]], shape=(9, 128, 768), dtype=float32)
```

d. Similarity

```python
def plot_similarity(features, labels):
    """Plot a similarity matrix of the embeddings."""
    cos_sim = pairwise.cosine_similarity(features)
    sns.set(font_scale=1.2)
    cbar_kws = dict(use_gridspec=False, location="left")
    g = sns.heatmap(
        cos_sim, xticklabels=labels, yticklabels=labels,
        vmin=0, vmax=1, cmap="Blues", cbar_kws=cbar_kws)
    g.tick_params(labelright=True, labelleft=False)
    g.set_yticklabels(labels, rotation=0)
    g.set_title("Semantic Textual Similarity")


plot_similarity(outputs["pooled_output"], sentences)
```

YAMNet audio classification

YAMNet is a pretrained deep network that predicts 521 audio event classes, trained on the AudioSet-YouTube corpus. It uses the MobileNet_v1 depthwise-separable convolution architecture.
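YAMNet scores the audio frame by frame, returning one row of 521 class scores per frame. The demo picks the main sound by averaging the scores over time and taking the arg-max, and the plot shows the top 10 classes. A NumPy sketch of that aggregation on a toy score matrix; `top_k_classes` is a hypothetical helper and the class names are placeholders, not YAMNet's real label map:

```python
import numpy as np


def top_k_classes(scores, class_names, k=3):
    """scores: (num_frames, num_classes). Average over frames and return the k best (name, mean score) pairs."""
    mean_scores = scores.mean(axis=0)
    top = np.argsort(mean_scores)[::-1][:k]      # class indices sorted by descending mean score
    return [(class_names[i], float(mean_scores[i])) for i in top]


scores = np.array([[0.1, 0.7, 0.2],
                   [0.2, 0.6, 0.4],
                   [0.9, 0.1, 0.3]])
names = ['Speech', 'Cat', 'Music']               # placeholder labels
print(top_k_classes(scores, names, k=2))
```

Averaging hides short events: the last frame here is clearly "Speech", yet "Cat" wins on average, which is why the per-frame score plot is worth looking at too.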

Load the model from TensorFlow Hub.

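```python
import csv

import matplotlib.pyplot as plt
import numpy as np
import scipy.signal  # used by ensure_sample_rate for resampling
import tensorflow as tf
import tensorflow_hub as hub
from IPython.display import Audio
from scipy.io import wavfile

# Load the model.
model = hub.load('https://hub.tensorflow.google.cn/google/yamnet/1')
```

The labels file is loaded from the model assets and is located at `model.class_map_path()`. Load it into the `class_names` variable.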

```python
def class_names_from_csv(class_map_csv_text):
    """Returns list of class names corresponding to score vector."""
    class_names = []
    with tf.io.gfile.GFile(class_map_csv_text) as csvfile:
        reader = csv.DictReader(csvfile)
        for row in reader:
            class_names.append(row['display_name'])
    return class_names


class_map_path = model.class_map_path().numpy()
class_names = class_names_from_csv(class_map_path)
```

Add a helper that checks whether the loaded audio has the sample rate the model expects (16 kHz) and resamples it if not; otherwise the model's results will be affected.

```python
def ensure_sample_rate(original_sample_rate, waveform, desired_sample_rate=16000):
    """Resample waveform if required."""
    if original_sample_rate != desired_sample_rate:
        desired_length = int(round(float(len(waveform)) / original_sample_rate * desired_sample_rate))
        waveform = scipy.signal.resample(waveform, desired_length)
    return desired_sample_rate, waveform
```

Download the audio files:

```shell
curl -O https://storage.googleapis.com/audioset/speech_whistling2.wav
curl -O https://storage.googleapis.com/audioset/miaow_16k.wav
```

```python
# wav_file_name = 'speech_whistling2.wav'
wav_file_name = 'miaow_16k.wav'
sample_rate, wav_data = wavfile.read(wav_file_name, 'rb')
sample_rate, wav_data = ensure_sample_rate(sample_rate, wav_data)

# Show some basic information about the audio.
duration = len(wav_data) / sample_rate
print(f'Sample rate: {sample_rate} Hz')
print(f'Total duration: {duration:.2f}s')
print(f'Size of the input: {len(wav_data)}')

# Listening to the wav file.
Audio(wav_data, rate=sample_rate)
```

```
Sample rate: 16000 Hz
Total duration: 6.73s
Size of the input: 107698
/tmpfs/src/tf_docs_env/lib/python3.6/site-packages/ipykernel_launcher.py:3: WavFileWarning: Chunk (non-data) not understood, skipping it.
```

`wav_data` needs to be normalized to values in [-1.0, 1.0], the input range the model expects:

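```python
waveform = wav_data / tf.int16.max  # int16 PCM samples -> floats in [-1.0, 1.0]
```

Call the model on the prepared waveform to get the class scores, the embeddings and the log-mel spectrogram.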

```python
scores, embeddings, spectrogram = model(waveform)

scores_np = scores.numpy()
spectrogram_np = spectrogram.numpy()
# Average the per-frame scores over time and take the best class
inferred_class = class_names[scores_np.mean(axis=0).argmax()]
print(f'The main sound is: {inferred_class}')
```

Visualization:

```python
plt.figure(figsize=(10, 6))

# Plot the waveform.
plt.subplot(3, 1, 1)
plt.plot(waveform)
plt.xlim([0, len(waveform)])

# Plot the log-mel spectrogram (returned by the model).
plt.subplot(3, 1, 2)
plt.imshow(spectrogram_np.T, aspect='auto', interpolation='nearest', origin='lower')

# Plot and label the model output scores for the top-scoring classes.
mean_scores = np.mean(scores_np, axis=0)
top_n = 10
top_class_indices = np.argsort(mean_scores)[::-1][:top_n]
plt.subplot(3, 1, 3)
plt.imshow(scores_np[:, top_class_indices].T, aspect='auto', interpolation='nearest', cmap='gray_r')

# patch_padding = (PATCH_WINDOW_SECONDS / 2) / PATCH_HOP_SECONDS
# values from the model documentation
patch_padding = (0.025 / 2) / 0.01
plt.xlim([-patch_padding - 0.5, scores.shape[0] + patch_padding - 0.5])
# Label the top_N classes.
yticks = range(0, top_n, 1)
plt.yticks(yticks, [class_names[top_class_indices[x]] for x in yticks])
_ = plt.ylim(-0.5 + np.array([top_n, 0]))
```

A-chai's first deep learning frameworks were Theano and Caffe. When the TensorFlow beta came out, he became a "TFBoy" and, for one reason or another, has stuck with it ever since, using TF Hub, TF Lite and more. Although TensorFlow adopted dynamic-graph (eager) execution rather late, A-chai still thinks TF is excellent. A friend says that's only because I've never used PyTorch... well, apart from special cases, A-chai is sticking with TF.
