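Reposted from: 阿chai带你学AI

Author: 阿chai

I'm 阿chai, a plain-spoken AI algorithm engineer from the mountains who does not steal e-bike batteries. I share what I know about artificial intelligence, autonomous driving, robotics, and 3D perception.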

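Facebook has released D2Go, the mobile counterpart of Detectron2, for training deep learning detection and segmentation models and deploying them on mobile devices.

- It is a deep learning toolkit powered by PyTorch and Detectron2.

- It ships state-of-the-art efficient backbone networks for mobile devices.

- It provides an end-to-end pipeline for model training, quantization, and deployment.

- It exports easily to TorchScript format for deployment.

The exported models can run entirely on mobile devices, which makes D2Go a one-stop tool for deployment.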

Installing D2Go

Step 1. Set up PyTorch (CUDA 10.2):

    # Create the conda environment
    conda create -n D2Go python=3.7
    # Activate the environment
    source activate D2Go
    # Install PyTorch
    conda install pytorch torchvision cudatoolkit=10.2 -c pytorch-nightly

Step 2. Install the Detectron2 toolkit:

    # Installing from a Gitee (码云) mirror may pull in incompatible packages, so install from GitHub
    python -m pip install 'git+https://github.com/facebookresearch/detectron2.git'

Step 3. Install mobile_cv:

    python -m pip install 'git+https://github.com/facebookresearch/mobile-vision.git'

Step 4. Install D2Go (don't forget the "." after install):

    # Clone the D2Go source
    git clone https://github.com/facebookresearch/d2go
    # Install
    cd d2go
    python -m pip install .

Basic testing with Python

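Before running the demo, download a model from the model zoo referenced at the start of the original article. Here we use faster_rcnn as the example:

    # Enter the demo directory
    cd demo/
    # Run the demo; on Colab you can mount your Google Drive and use model files stored there
    python demo.py --config-file faster_rcnn_fbnetv3a_C4.yaml --input cat.jpg --output res.jpg

To run on a video file (check the notes in the source code for the supported video formats):

    python demo.py --config-file faster_rcnn_fbnetv3a_C4.yaml --video-input video.mp4 --output res.mp4

Before training, set up the dataset following the detectron2 instructions.

    # Training command
    d2go.train_net --config-file ./configs/faster_rcnn_fbnetv3a_C4.yaml

After training finishes, run evaluation:

    d2go.train_net --config-file ./configs/faster_rcnn_fbnetv3a_C4.yaml --eval-only \
    MODEL.WEIGHTS model_path

You can also evaluate the official pretrained model (the download may require a proxy in some regions):

    d2go.train_net --config-file ./configs/faster_rcnn_fbnetv3a_C4.yaml --eval-only \
    MODEL.WEIGHTS https://mobile-cv.s3-us-west-2.amazonaws.com/d2go/models/246823121/model_0479999.pth

Model export and quantization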

Models can be exported to TorchScript and quantized. Exporting directly to an int8 model may reduce accuracy, so quantization-aware training (QAT) can be used to recover it. Let's first look at the config file from the example, which builds on an existing model config:

    _BASE_: "faster_rcnn_fbnetv3a_C4.yaml"
    SOLVER:
      BASE_LR: 0.0001
      MAX_ITER: 50
      IMS_PER_BATCH: 48  # for 8GPUs
    QUANTIZATION:
      BACKEND: "qnnpack"
      QAT:
        ENABLED: True
        START_ITER: 0
        ENABLE_OBSERVER_ITER: 0
        DISABLE_OBSERVER_ITER: 5
        FREEZE_BN_ITER: 7

Quantization-aware training can then be run with the following command:

    d2go.train_net --config-file configs/qat_faster_rcnn_fbnetv3a_C4.yaml \
    MODEL.WEIGHTS https://mobile-cv.s3-us-west-2.amazonaws.com/d2go/models/246823121/model_0479999.pth

To export a regular TorchScript model, use:

    d2go.exporter --config-file configs/faster_rcnn_fbnetv3a_C4.yaml \
    --predictor-types torchscript --output-dir ./ \
    MODEL.WEIGHTS https://mobile-cv.s3-us-west-2.amazonaws.com/d2go/models/246823121/model_0479999.pth

For int8 quantization:

    d2go.exporter --config-file configs/faster_rcnn_fbnetv3a_C4.yaml \
    --output-dir ./ --predictor-type torchscript_int8 \
    MODEL.WEIGHTS https://mobile-cv.s3-us-west-2.amazonaws.com/d2go/models/246823121/model_0479999.pth

Running D2Go on Android

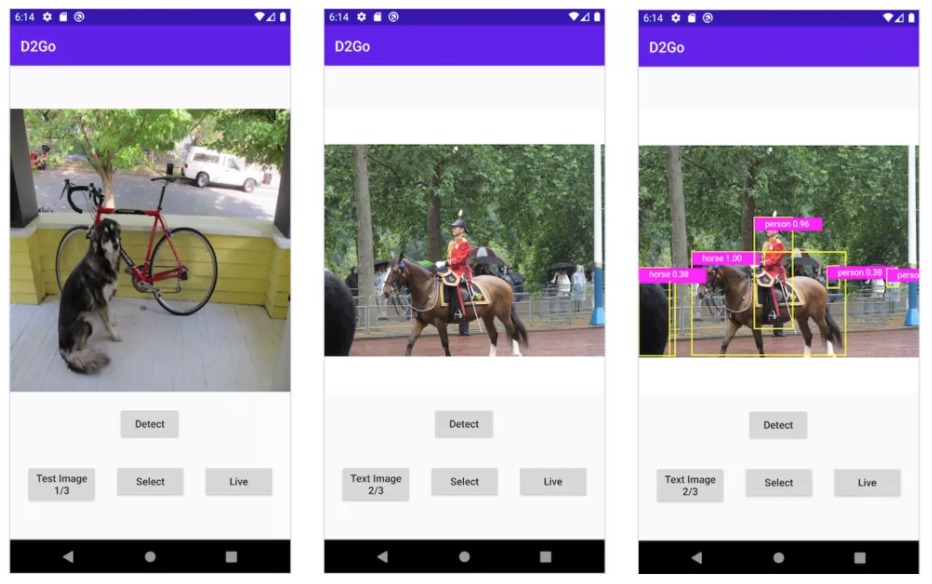

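The demo is based on the Object Detection demo app and is compared against the YOLOv5 model used in that original project. On a Google Pixel 3, inference takes about 50 ms for the D2Go model versus 550 ms for YOLOv5.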
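To put those two latencies in perspective, the arithmetic can be sketched as follows. The snippet only uses the 50 ms and 550 ms figures quoted above; the helper name `latency_summary` is made up for illustration and is not part of D2Go.

```python
def latency_summary(fast_ms: float, slow_ms: float) -> dict:
    """Convert two per-frame latencies into frames per second and a speedup ratio."""
    return {
        "fast_fps": 1000.0 / fast_ms,   # frames per second at the lower latency
        "slow_fps": 1000.0 / slow_ms,   # frames per second at the higher latency
        "speedup": slow_ms / fast_ms,   # how many times faster the first model is
    }


# Figures quoted in the article for a Pixel 3.
summary = latency_summary(fast_ms=50, slow_ms=550)
print(summary)
```

That works out to roughly 20 frames per second versus fewer than 2, an 11x gap on this one device; other phones will give different numbers.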

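The installation steps above are not repeated here. The difference is that the following setup is recommended:

- PyTorch 1.8.0 and torchvision 0.9.0

- Python 3.8 or later

- Android libraries: pytorch_android 1.8.0, pytorch_android_torchvision 1.8.0, and torchvision_ops 0.9.0

- Android Studio 4.0.1 or later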
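A quick way to compare the Python-side packages against this list is sketched below. It assumes the packages were installed under the distribution names `torch` and `torchvision`; the helper names are made up for illustration, and the Android libraries and Android Studio cannot be checked this way.

```python
from importlib import metadata
from typing import Optional

# Versions recommended in the list above (Python-side packages only).
RECOMMENDED = {"torch": "1.8.0", "torchvision": "0.9.0"}


def installed_version(package: str) -> Optional[str]:
    """Return the installed version of a package, or None if it is not installed."""
    try:
        return metadata.version(package)
    except metadata.PackageNotFoundError:
        return None


def check_versions(recommended: dict) -> dict:
    """Map each package name to (installed, recommended, matches)."""
    report = {}
    for package, wanted in recommended.items():
        found = installed_version(package)
        # CUDA builds carry a local suffix such as "1.8.0+cu102",
        # so compare only the part before the "+".
        matches = found is not None and found.split("+")[0] == wanted
        report[package] = (found, wanted, matches)
    return report


for name, (found, wanted, ok) in check_versions(RECOMMENDED).items():
    print(f"{name}: installed={found} recommended={wanted} match={ok}")
```

A package that is missing is reported as `installed=None` rather than raising, so the check can run before anything is installed.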

Facebook provides the official app code, so start by cloning it:

    git clone https://github.com/pytorch/android-demo-app

In Android Studio, open android-demo-app/D2Go (not android-demo-app/D2Go/ObjectDetection). If you hit the error "Gradle's dependency may be corrupt", go to File > Project Structure in Android Studio and change the Gradle version to 4.10.1.

Add the prebuilt torchvision-ops library in build.gradle and load it in MainActivity.java.

In build.gradle:

    implementation 'org.pytorch:pytorch_android:1.8.0'
    implementation 'org.pytorch:pytorch_android_torchvision:1.8.0'
    implementation 'org.pytorch:torchvision_ops:0.9.0'

In MainActivity.java:

    static {
        if (!NativeLoader.isInitialized()) {
            NativeLoader.init(new SystemDelegate());
        }
        NativeLoader.loadLibrary("pytorch_jni");
        NativeLoader.loadLibrary("torchvision_ops");
    }

The following code measures and logs the inference time:

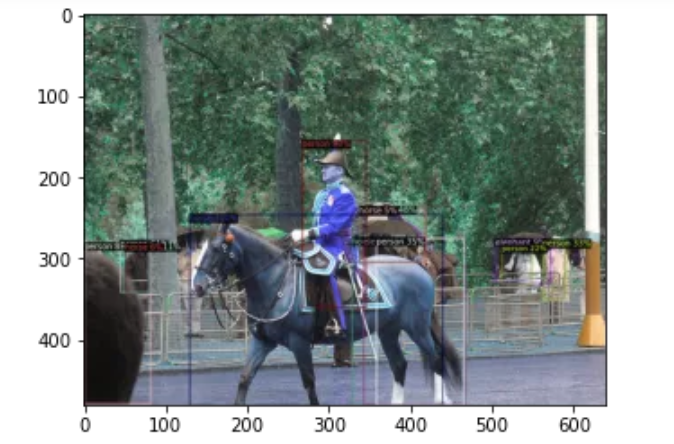

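    final long startTime = SystemClock.elapsedRealtime();
    IValue[] outputTuple = mModule.forward(IValue.listFrom(inputTensor)).toTuple();
    final long inferenceTime = SystemClock.elapsedRealtime() - startTime;
    Log.d("D2Go", "inference time (ms): " + inferenceTime);

Below is how the result looks on the phone:

D2Go example in a notebook

The following is best run in Colab. For an introduction to Colab, see Cui神's tutorial.

This section covers some basic usage of d2go, including:

(1) Running inference on images or videos with a pretrained d2go model;

(2) Loading a new dataset and training a d2go model;

(3) Exporting the model to int8 using post-training quantization.

First, import the model zoo API from d2go and fetch a faster-rcnn model: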

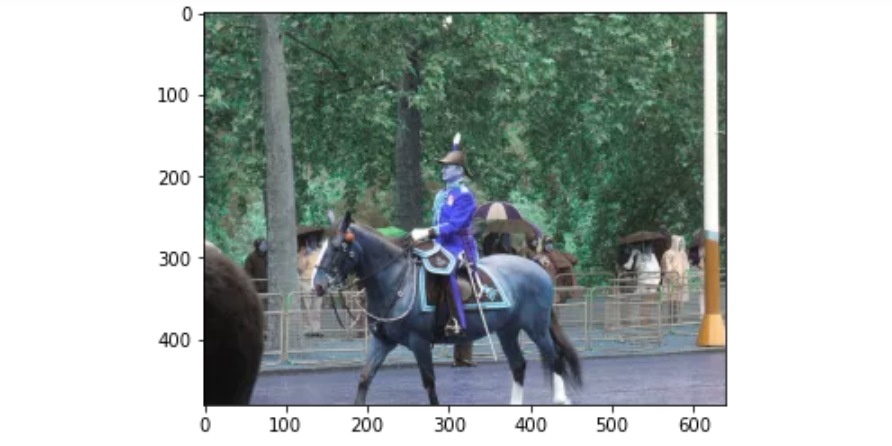

    from d2go.model_zoo import model_zoo
    model = model_zoo.get('faster_rcnn_fbnetv3a_C4.yaml', trained=True)

Then we fetch an image from the COCO dataset:

    import cv2
    from matplotlib import pyplot as plt
    !wget http://images.cocodataset.org/val2017/000000439715.jpg -q -O input.jpg
    im = cv2.imread("./input.jpg")
    plt.imshow(im)

Create a DemoPredictor to run inference and inspect the output:

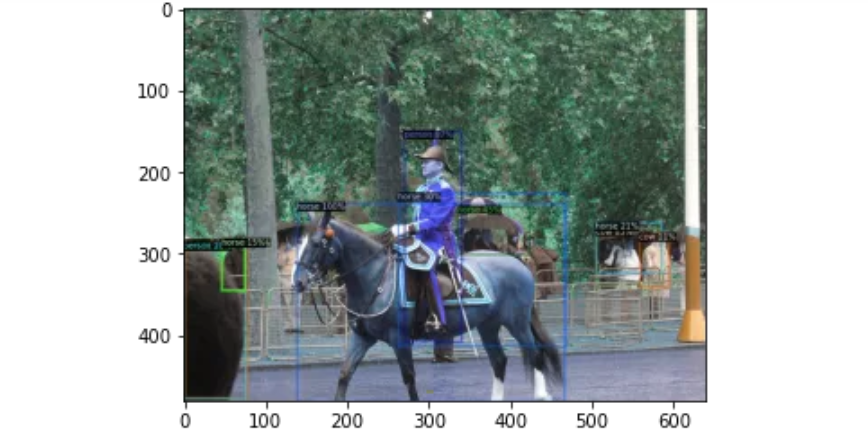

    from d2go.utils.demo_predictor import DemoPredictor
    predictor = DemoPredictor(model)
    outputs = predictor(im)
    # output object categories and bounding boxes
    print(outputs["instances"].pred_classes)
    print(outputs["instances"].pred_boxes)

The output is:

    tensor([17,  0, 19, 17, 17, 17, 19,  0,  0, 19, 17, 17, 19], device='cuda:0')
    Boxes(tensor([[139.2618, 238.0369, 467.4290, 480.0000],
            [271.1126, 150.1942, 341.3032, 406.9402],
            [505.8431, 268.7002, 585.4443, 339.0620],
            [  2.2081, 282.8764,  78.0862, 475.8036],
            [336.2187, 241.6059, 424.0182, 301.4847],
            [262.5104, 226.0209, 468.7945, 413.3305],
            [ 46.3860, 282.2225,  74.5961, 344.6837],
            [  0.0000, 285.9752,  75.4428, 477.4194],
            [ 46.6597, 281.7245,  74.5386, 344.4575],
            [  0.0000, 286.2906,  75.8711, 477.1982],
            [505.0901, 262.1472, 586.4306, 323.4085],
            [ 46.7585, 282.4321,  74.5174, 344.7928],
            [559.0256, 275.1691, 595.4606, 339.6431]], device='cuda:0'))

Next, visualize the results by drawing the class labels and bounding boxes onto the image:

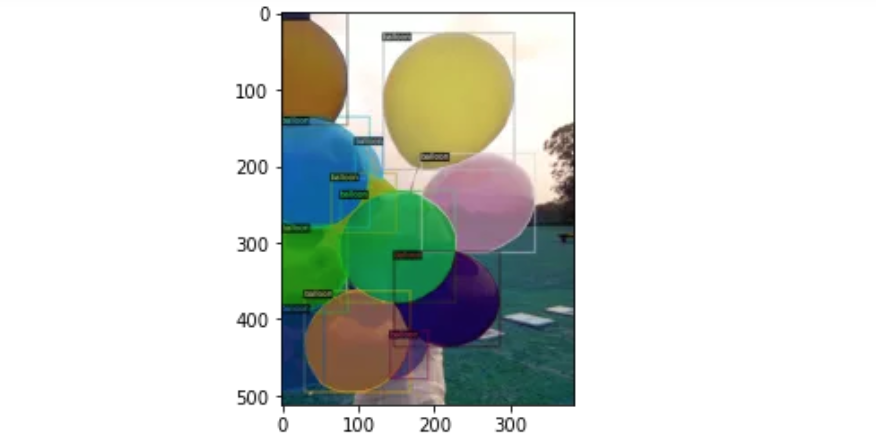

    from detectron2.utils.visualizer import Visualizer
    from detectron2.data import MetadataCatalog, DatasetCatalog
    v = Visualizer(im[:, :, ::-1], MetadataCatalog.get("coco_2017_train"))
    out = v.draw_instance_predictions(outputs["instances"].to("cpu"))
    plt.imshow(out.get_image()[:, :, ::-1])

Next comes training on a custom dataset, with the goal of segmenting the balloons in the images.
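As a brief aside before the training walkthrough, the class indices printed by the predictor earlier can be tallied to see what was detected. The sketch below copies the 13 indices from that printed tensor; the three class names are an assumption based on the contiguous 80-class COCO ordering, and nothing here calls d2go or detectron2.

```python
from collections import Counter

# Class indices copied from the printed pred_classes tensor.
pred_classes = [17, 0, 19, 17, 17, 17, 19, 0, 0, 19, 17, 17, 19]

# Assumed names for the three indices that occur, following the
# contiguous 80-class COCO ordering (an assumption, not read from the model).
ASSUMED_NAMES = {0: "person", 17: "horse", 19: "cow"}

# Count how many detections fall into each class.
counts = Counter(pred_classes)
for class_id, n in sorted(counts.items()):
    print(f"{ASSUMED_NAMES.get(class_id, 'unknown')} (id {class_id}): {n}")
```

In the notebook itself, the names would normally be read from `MetadataCatalog.get("coco_2017_train").thing_classes` instead of a hand-written mapping.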

First we download the dataset. The download prints too much terminal output to show here. Because this runs in Colab, each shell command needs a leading "!".

    !wget https://github.com/matterport/Mask_RCNN/releases/download/v2.1/balloon_dataset.zip
    !unzip -o balloon_dataset.zip > /dev/null

D2Go is built on top of detectron2, so we follow the detectron2 custom dataset tutorial. The dataset comes in its own custom format, so we write a function that parses it and converts it into detectron2's standard format:

    import os
    import json
    import cv2
    import numpy as np
    from detectron2.data import DatasetCatalog, MetadataCatalog
    from detectron2.structures import BoxMode

    def get_balloon_dicts(img_dir):
        json_file = os.path.join(img_dir, "via_region_data.json")
        with open(json_file) as f:
            imgs_anns = json.load(f)

        dataset_dicts = []
        for idx, v in enumerate(imgs_anns.values()):
            record = {}

            filename = os.path.join(img_dir, v["filename"])
            height, width = cv2.imread(filename).shape[:2]

            record["file_name"] = filename
            record["image_id"] = idx
            record["height"] = height
            record["width"] = width

            annos = v["regions"]
            objs = []
            for _, anno in annos.items():
                assert not anno["region_attributes"]
                anno = anno["shape_attributes"]
                px = anno["all_points_x"]
                py = anno["all_points_y"]
                # Shift vertices to pixel centers, then flatten to [x0, y0, x1, y1, ...]
                poly = [(x + 0.5, y + 0.5) for x, y in zip(px, py)]
                poly = [p for x in poly for p in x]

                obj = {
                    "bbox": [np.min(px), np.min(py), np.max(px), np.max(py)],
                    "bbox_mode": BoxMode.XYXY_ABS,
                    "segmentation": [poly],
                    "category_id": 0,
                }
                objs.append(obj)
            record["annotations"] = objs
            dataset_dicts.append(record)
        return dataset_dicts

    for d in ["train", "val"]:
        DatasetCatalog.register("balloon_" + d, lambda d=d: get_balloon_dicts("balloon/" + d))
        MetadataCatalog.get("balloon_" + d).set(thing_classes=["balloon"], evaluator_type="coco")
    balloon_metadata = MetadataCatalog.get("balloon_train")

To verify that the data loads correctly, visualize the annotations of a few randomly selected samples from the training set:

    import random

    dataset_dicts = get_balloon_dicts("balloon/train")
    # Draw a few random training samples (3 here) together with their annotations
    for d in random.sample(dataset_dicts, 3):
        img = cv2.imread(d["file_name"])
        visualizer = Visualizer(img[:, :, ::-1], metadata=balloon_metadata, scale=0.5)
        out = visualizer.draw_dataset_dict(d)
        plt.figure()
        plt.imshow(out.get_image()[:, :, ::-1])
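The per-region conversion in get_balloon_dicts can also be checked by hand on a toy polygon. The sketch below mirrors only that arithmetic in plain Python: the function name and the triangle are hypothetical, and `bbox_mode` is left out because `BoxMode` requires detectron2.

```python
from itertools import chain


def polygon_to_annotation(px, py):
    """Mirror the per-region conversion in get_balloon_dicts for one polygon."""
    # Shift each vertex to the pixel center, then flatten [(x, y), ...] to [x, y, ...].
    poly = [(x + 0.5, y + 0.5) for x, y in zip(px, py)]
    flat = list(chain.from_iterable(poly))
    return {
        # XYXY_ABS layout: [x_min, y_min, x_max, y_max] in absolute pixels.
        "bbox": [min(px), min(py), max(px), max(py)],
        "segmentation": [flat],
        "category_id": 0,  # single class: balloon
    }


# Hypothetical triangle, not taken from the balloon dataset.
ann = polygon_to_annotation(px=[10, 40, 25], py=[5, 5, 30])
print(ann["bbox"])
print(ann["segmentation"][0])
```

The box comes from the raw vertex coordinates, while the polygon uses the half-pixel shifted ones, which is the same split the original function makes.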

Next, start training. The original describes the network as FBNetV3A Mask R-CNN, although the config loaded in the code below is faster_rcnn_fbnetv3a_C4.yaml, which is why only bbox metrics appear in the evaluation output:

    for d in ["train", "val"]:
        MetadataCatalog.get("balloon_" + d).set(thing_classes=["balloon"], evaluator_type="coco")

    from d2go.runner import Detectron2GoRunner

    def prepare_for_launch():
        runner = Detectron2GoRunner()
        cfg = runner.get_default_cfg()
        cfg.merge_from_file(model_zoo.get_config_file("faster_rcnn_fbnetv3a_C4.yaml"))
        cfg.MODEL_EMA.ENABLED = False
        cfg.DATASETS.TRAIN = ("balloon_train",)
        cfg.DATASETS.TEST = ("balloon_val",)
        cfg.DATALOADER.NUM_WORKERS = 2
        cfg.MODEL.WEIGHTS = model_zoo.get_checkpoint_url("faster_rcnn_fbnetv3a_C4.yaml")  # let training initialize from the model zoo
        cfg.SOLVER.IMS_PER_BATCH = 2
        cfg.SOLVER.BASE_LR = 0.00025  # pick a good LR
        cfg.SOLVER.MAX_ITER = 600  # the upstream comment says 300 iterations is enough for this toy dataset; train longer for a practical dataset
        cfg.SOLVER.STEPS = []  # do not decay the learning rate
        cfg.MODEL.ROI_HEADS.BATCH_SIZE_PER_IMAGE = 128  # faster, and good enough for this toy dataset (default: 512)
        cfg.MODEL.ROI_HEADS.NUM_CLASSES = 1  # only one class (balloon); see https://detectron2.readthedocs.io/tutorials/datasets.html#update-the-config-for-new-datasets
        # NOTE: this setting is the number of classes; a few popular unofficial tutorials incorrectly use num_classes+1 here.
        os.makedirs(cfg.OUTPUT_DIR, exist_ok=True)
        return cfg, runner

    cfg, runner = prepare_for_launch()
    model = runner.build_model(cfg)
    runner.do_train(cfg, model, resume=False)

Evaluate the model on the balloon validation set:

    metrics = runner.do_test(cfg, model)
    print(metrics)

The output is:

    Loading and preparing results...
    DONE (t=0.00s)
    creating index...
    index created!
     Average Precision  (AP) @[ IoU=0.50:0.95 | area=   all | maxDets=100 ] = 0.494
     Average Precision  (AP) @[ IoU=0.50      | area=   all | maxDets=100 ] = 0.651
     Average Precision  (AP) @[ IoU=0.75      | area=   all | maxDets=100 ] = 0.543
     Average Precision  (AP) @[ IoU=0.50:0.95 | area= small | maxDets=100 ] = 0.000
     Average Precision  (AP) @[ IoU=0.50:0.95 | area=medium | maxDets=100 ] = 0.104
     Average Precision  (AP) @[ IoU=0.50:0.95 | area= large | maxDets=100 ] = 0.757
     Average Recall     (AR) @[ IoU=0.50:0.95 | area=   all | maxDets=  1 ] = 0.204
     Average Recall     (AR) @[ IoU=0.50:0.95 | area=   all | maxDets= 10 ] = 0.526
     Average Recall     (AR) @[ IoU=0.50:0.95 | area=   all | maxDets=100 ] = 0.526
     Average Recall     (AR) @[ IoU=0.50:0.95 | area= small | maxDets=100 ] = 0.000
     Average Recall     (AR) @[ IoU=0.50:0.95 | area=medium | maxDets=100 ] = 0.118
     Average Recall     (AR) @[ IoU=0.50:0.95 | area= large | maxDets=100 ] = 0.810
    OrderedDict([('default', OrderedDict([('balloon_val', OrderedDict([('bbox', {'AP': 49.406689278871866, 'AP50': 65.11971524476927, 'AP75': 54.327369011410944, 'APs': 0.0, 'APm': 10.396039603960396, 'APl': 75.65561502020056})]))]))])

Now run int8 quantization:

    import copy
    from detectron2.data import build_detection_test_loader
    from d2go.export.api import convert_and_export_predictor
    from d2go.tests.data_loader_helper import create_fake_detection_data_loader
    from d2go.export.d2_meta_arch import patch_d2_meta_arch
    import logging

    # disable all the warnings
    previous_level = logging.root.manager.disable
    logging.disable(logging.INFO)

    patch_d2_meta_arch()

    cfg_name = 'faster_rcnn_fbnetv3a_dsmask_C4.yaml'
    pytorch_model = model_zoo.get(cfg_name, trained=True)
    pytorch_model.cpu()

    with create_fake_detection_data_loader(224, 320, is_train=False) as data_loader:
        predictor_path = convert_and_export_predictor(
            model_zoo.get_config(cfg_name),
            copy.deepcopy(pytorch_model),
            "torchscript_int8@tracing",
            './',
            data_loader,
        )

    # recover the logging level
    logging.disable(previous_level)

Next, test with the quantized int8 model:

    from mobile_cv.predictor.api import create_predictor
    model = create_predictor(predictor_path)

    from d2go.utils.demo_predictor import DemoPredictor
    predictor = DemoPredictor(model)
    outputs = predictor(im)

    v = Visualizer(im[:, :, ::-1], MetadataCatalog.get("coco_2017_train"))
    out = v.draw_instance_predictions(outputs["instances"].to("cpu"))
    plt.imshow(out.get_image()[:, :, ::-1])

Official Facebook D2Go blog post:

https://ai.facebook.com/blog/d2go-brings-detectron2-to-mobile/
