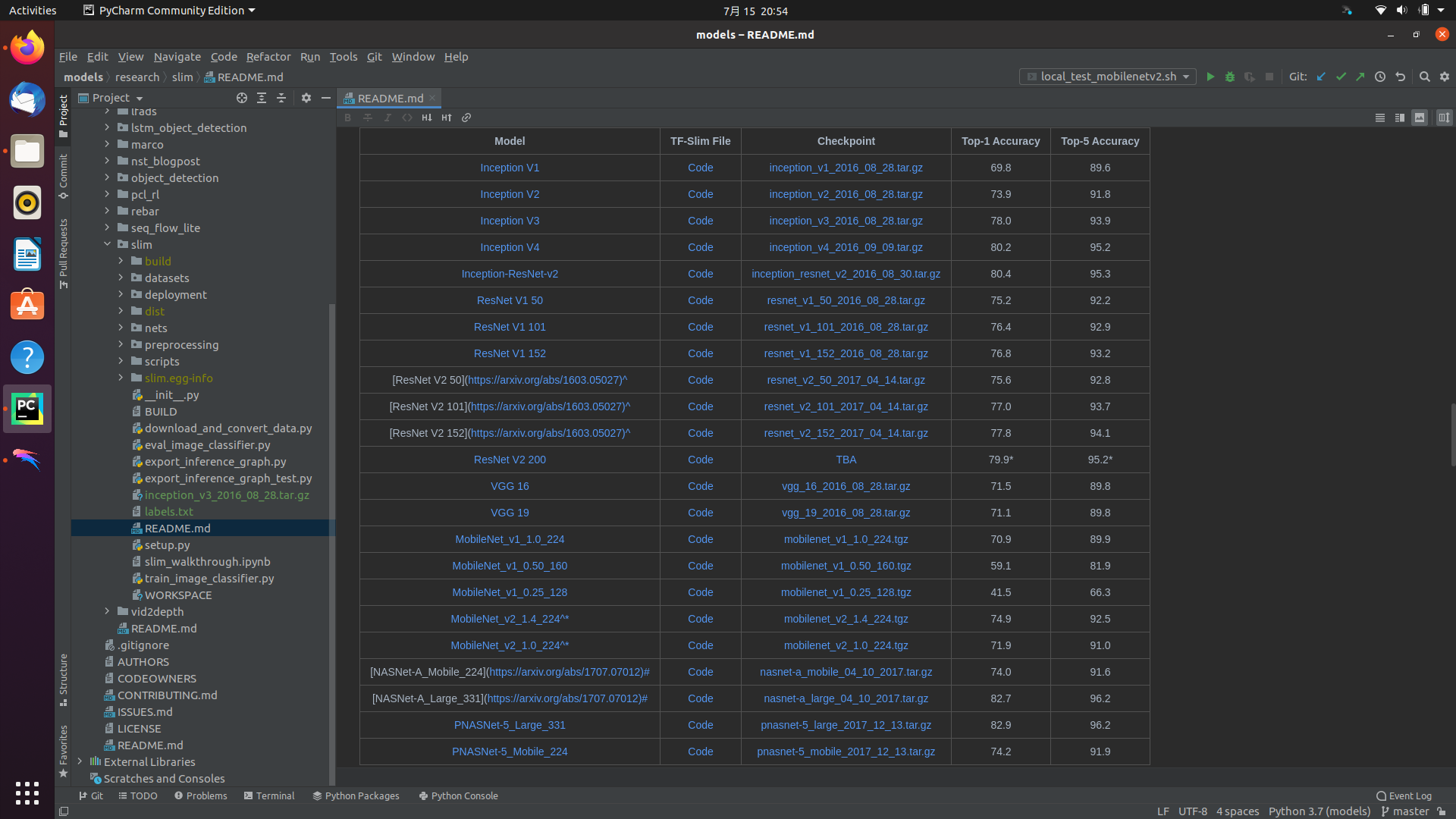

1. Model

Pull the source code:

```shell
git clone https://github.com/tensorflow/models.git
git clone -b v1.15.5 https://github.com/tensorflow/tensorflow.git
```

Set up the environment with tensorflow==1.15.0. Use the official pretrained weights and export a .pb model.

2. Docker

```shell
sudo docker pull zepan/zhouyi

# Link: https://pan.baidu.com/s/1yaKBPDxR_oakdTnqgyn5fg
# Extraction code: f8dr
```

3. Prepare the quantization calibration dataset

import tensorflow as tf

import numpy as np

import sys

import os

import cv2

sys.path.append('/project/ai/scratch01/salyua01/sharing/guide_acc')

img_dir='./img/'

label_file='./label.txt'

#RESNET PARAM

input_height=224

input_width=224

input_channel = 3

mean = [123.68, 116.78, 103.94]

var = 1

tf.enable_eager_execution()

def smallest_size_at_least(height, width, resize_min):

"""Computes new shape with the smallest side equal to `smallest_side`.

Computes new shape with the smallest side equal to `smallest_side` while

preserving the original aspect ratio.

Args:

height: an int32 scalar tensor indicating the current height.

width: an int32 scalar tensor indicating the current width.

resize_min: A python integer or scalar `Tensor` indicating the size of

the smallest side after resize.

Returns:

new_height: an int32 scalar tensor indicating the new height.

new_width: an int32 scalar tensor indicating the new width.

"""

resize_min = tf.cast(resize_min, tf.float32)

# Convert to floats to make subsequent calculations go smoothly.

height, width = tf.cast(height, tf.float32), tf.cast(width, tf.float32)

smaller_dim = tf.minimum(height, width)

scale_ratio = resize_min / smaller_dim

# Convert back to ints to make heights and widths that TF ops will accept.

new_height = tf.cast(tf.round(height * scale_ratio), tf.int32)

new_width = tf.cast(tf.round(width * scale_ratio), tf.int32)

return new_height, new_width

def resize_image(image, height, width, method='BILINEAR'):

"""Simple wrapper around tf.resize_images.

This is primarily to make sure we use the same `ResizeMethod` and other

details each time.

Args:

image: A 3-D image `Tensor`.

height: The target height for the resized image.

width: The target width for the resized image.

Returns:

resized_image: A 3-D tensor containing the resized image. The first two

dimensions have the shape [height, width].

"""

resize_func = tf.image.ResizeMethod.NEAREST_NEIGHBOR if method == 'NEAREST' else tf.image.ResizeMethod.BILINEAR

return tf.image.resize_images(image, [height, width], method=resize_func, align_corners=False)

def aspect_preserving_resize(image, resize_min, channels=3, method='BILINEAR'):

"""Resize images preserving the original aspect ratio.

Args:

image: A 3-D image `Tensor`.

resize_min: A python integer or scalar `Tensor` indicating the size of

the smallest side after resize.

Returns:

resized_image: A 3-D tensor containing the resized image.

"""

shape = tf.shape(image)

height, width = shape[0], shape[1]

new_height, new_width = smallest_size_at_least(height, width, resize_min)

return resize_image(image, new_height, new_width, method)

def central_crop(image, crop_height, crop_width, channels=3):

"""Performs central crops of the given image list.

Args:

image: a 3-D image tensor

crop_height: the height of the image following the crop.

crop_width: the width of the image following the crop.

Returns:

3-D tensor with cropped image.

"""

shape = tf.shape(image)

height, width = shape[0], shape[1]

amount_to_be_cropped_h = height - crop_height

crop_top = amount_to_be_cropped_h // 2

amount_to_be_cropped_w = width - crop_width

crop_left = amount_to_be_cropped_w // 2

# return tf.image.crop_to_bounding_box(image, crop_top, crop_left, crop_height, crop_width)

size_assertion = tf.Assert(

tf.logical_and(

tf.greater_equal(height, crop_height),

tf.greater_equal(width, crop_width)),

['Crop size greater than the image size.']

)

with tf.control_dependencies([size_assertion]):

if channels == 1:

image = tf.squeeze(image)

crop_start = [crop_top, crop_left, ]

crop_shape = [crop_height, crop_width, ]

elif channels >= 3:

crop_start = [crop_top, crop_left, 0]

crop_shape = [crop_height, crop_width, -1]

image = tf.slice(image, crop_start, crop_shape)

return tf.reshape(image, [crop_height, crop_width, -1])

label_data = open(label_file)

filename_list = []

label_list = []

for line in label_data:

filename_list.append(line.rstrip('\n').split(' ')[0])

label_list.append(int(line.rstrip('\n').split(' ')[1]))

label_data.close()

img_num = len(label_list)

images = np.zeros([img_num, input_height, input_width, input_channel], np.float32)

for file_name, img_idx in zip(filename_list, range(img_num)):

image_file = os.path.join(img_dir, file_name)

img_s = tf.gfile.GFile(image_file, 'rb').read()

image = tf.image.decode_jpeg(img_s)

image = tf.cast(image, tf.float32)

image = tf.clip_by_value(image, 0., 255.)

image = aspect_preserving_resize(image, min(input_height, input_width), input_channel)

image = central_crop(image, input_height, input_width)

image = tf.image.resize_images(image, [input_height, input_width])

image = (image - mean) / var

image = image.numpy()

_, _, ch = image.shape

if ch == 1:

image = tf.tile(image, multiples=[1,1,3])

image = image.numpy()

images[img_idx] = image

np.save('dataset.npy', images)

labels = np.array(label_list)

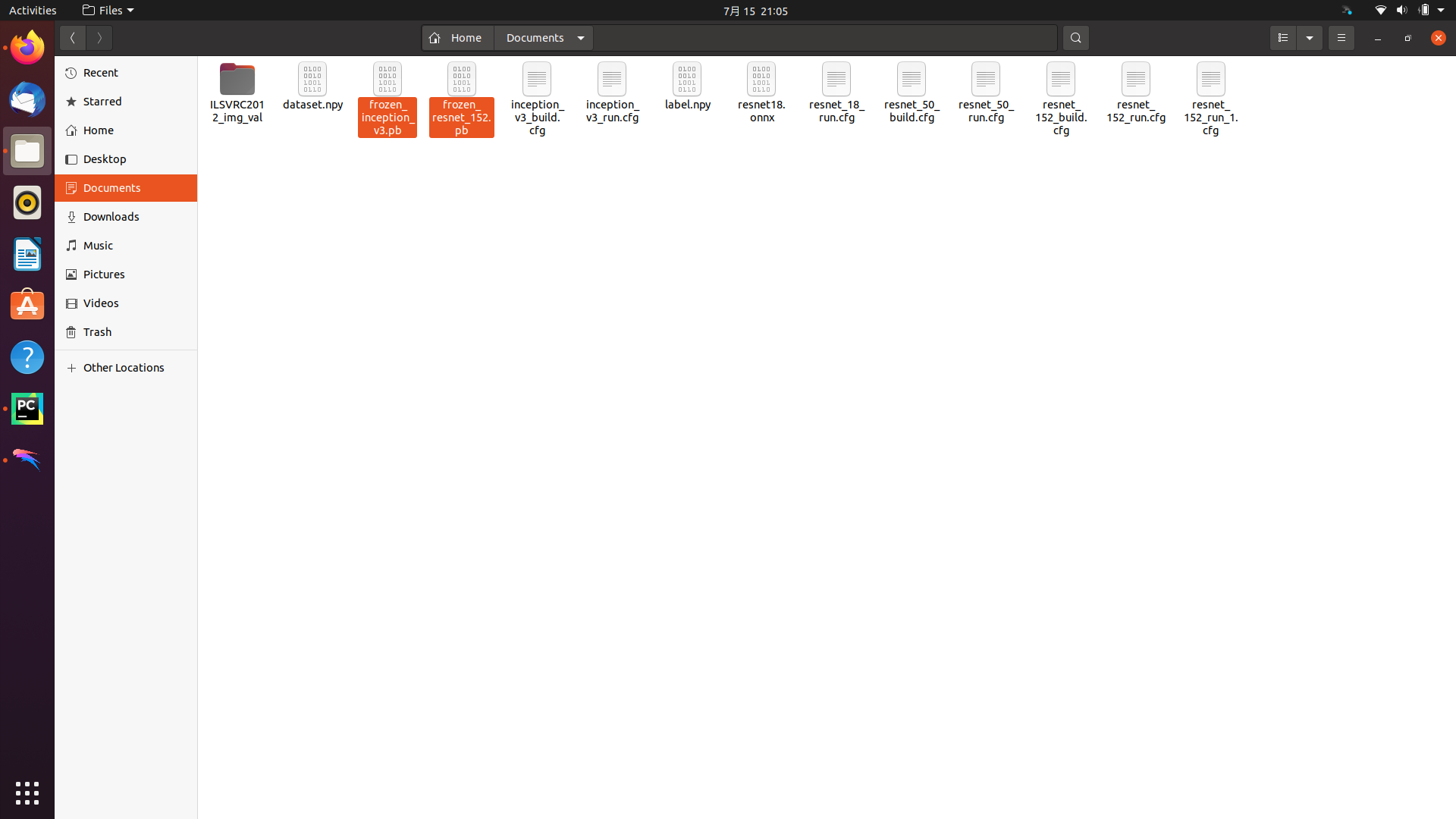

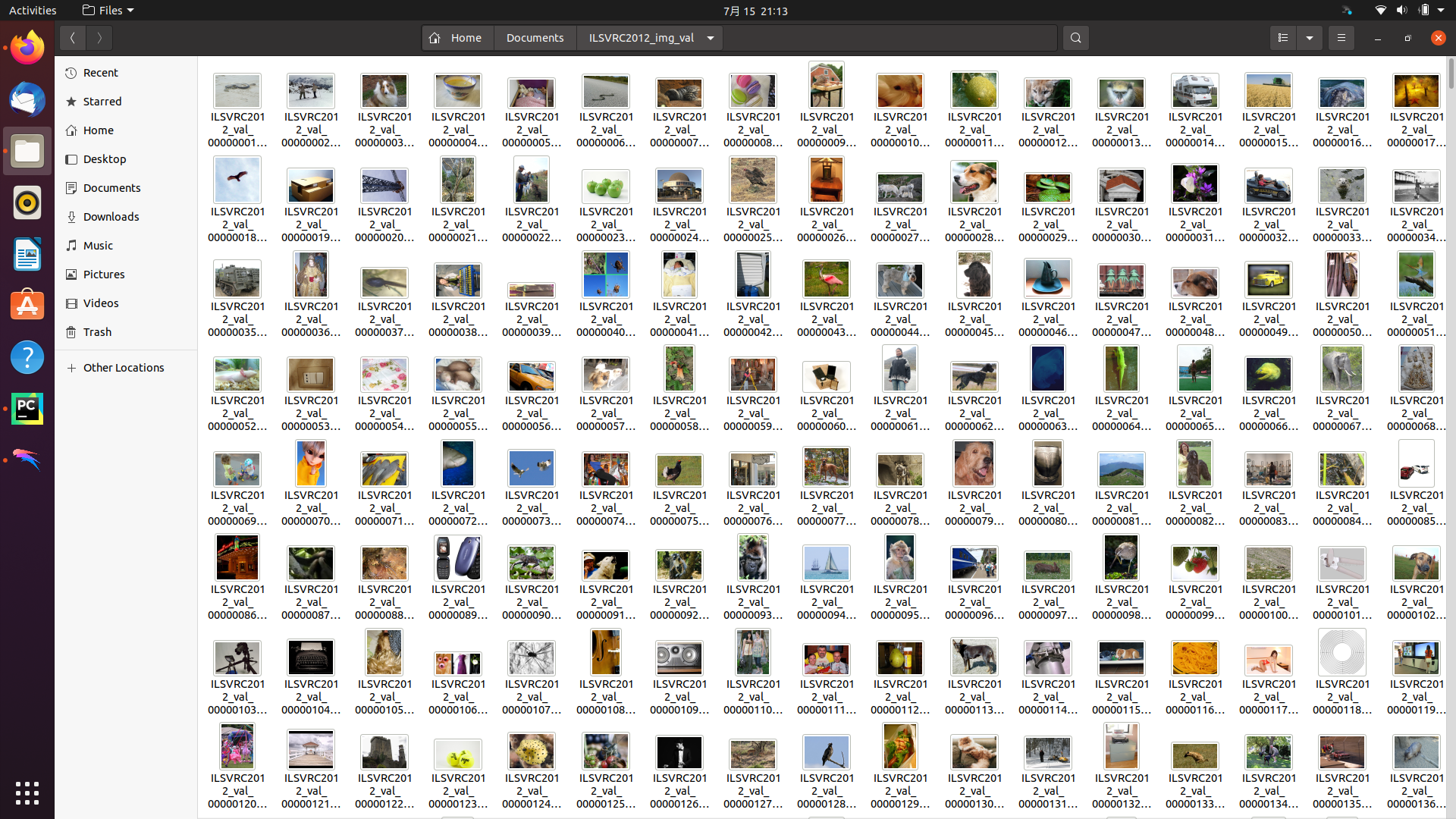

np.save('label.npy', labels)preprocess\_for\_dataset.py生成 dataset.npy和label.npy
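To see what `smallest_size_at_least` and `central_crop` do to a concrete image, the same geometry can be reproduced in plain Python without TensorFlow. This is a minimal sketch. `resize_and_crop_geometry` is a hypothetical helper, not part of the script. It assumes Python's `round` behaves like `tf.round`, since both round half to even, although the TF code works in float32 rather than float64.

```python
def resize_and_crop_geometry(height, width, resize_min=224, crop=224):
    """Hypothetical helper mirroring the preprocessing geometry.

    Returns the (height, width) after the aspect-preserving resize and the
    (top, left) offsets used by the central crop.
    """
    # Scale so that the smaller side becomes resize_min.
    scale_ratio = resize_min / min(height, width)
    # round() rounds half to even, like tf.round.
    new_height = int(round(height * scale_ratio))
    new_width = int(round(width * scale_ratio))
    if new_height < crop or new_width < crop:
        raise ValueError('Crop size greater than the image size.')
    # Split the excess evenly; with an odd excess, the extra pixel
    # is cut from the bottom/right.
    crop_top = (new_height - crop) // 2
    crop_left = (new_width - crop) // 2
    return (new_height, new_width), (crop_top, crop_left)


if __name__ == '__main__':
    # A 375x500 (H x W) landscape image.
    print(resize_and_crop_geometry(375, 500))
```

For a 375x500 input, the image is resized to 224x299. The crop then drops 37 columns on the left and 38 on the right.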

4. Edit the NN compiler configuration file

```ini
[Common]
mode=run

[Parser]
model_name = resnet_152
detection_postprocess =
model_domain = image_classification
output = resnet_v1_152/predictions/Reshape_1
input_model = ./model/frozen_resnet_152.pb
input = input
input_shape = [1,224,224,3]
output_dir = ./

[AutoQuantizationTool]
model_name = resnet_152
quantize_method = SYMMETRIC
ops_per_channel = DepthwiseConv
calibration_data = ./dataset/dataset.npy
calibration_label = ./dataset/label.npy
preprocess_mode = normalize
quant_precision=int8
reverse_rgb = False
label_id_offset = 0

[GBuilder]
inputs=./model/input.bin
simulator=aipu_simulator_z1
outputs=output_resnet_152.bin
profile= True
target=Z1_0701
```

Simulate on the simulator:

```shell
aipubuild config/resnet_152_run.cfg
```
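If `aipubuild` stops early because an input file cannot be found, check the paths in the cfg first. The preprocessing script writes `dataset.npy` and `label.npy` to the working directory, but the cfg expects them under `./dataset/`. The sketch below assumes the cfg is plain INI syntax that Python's `configparser` accepts. The function name `check_cfg_paths` and the list of keys checked are hypothetical choices based on the cfg above, not part of the NN compiler.

```python
import configparser
import os

# (section, option) pairs that name input files in the cfg shown above.
# This list is an assumption, not an official schema.
PATH_KEYS = [
    ('Parser', 'input_model'),
    ('AutoQuantizationTool', 'calibration_data'),
    ('AutoQuantizationTool', 'calibration_label'),
    ('GBuilder', 'inputs'),
]


def check_cfg_paths(cfg_text, base_dir='.'):
    """Return (section, option, path) for every entry whose file is missing.

    path is None when the option (or its whole section) is absent.
    """
    parser = configparser.ConfigParser()
    parser.read_string(cfg_text)
    missing = []
    for section, option in PATH_KEYS:
        # fallback=None also covers a missing section.
        path = parser.get(section, option, fallback=None)
        if path is None:
            missing.append((section, option, None))
        elif not os.path.isfile(os.path.join(base_dir, path)):
            missing.append((section, option, path))
    return missing


if __name__ == '__main__':
    cfg_path = 'config/resnet_152_run.cfg'  # path passed to aipubuild
    if os.path.isfile(cfg_path):
        with open(cfg_path) as f:
            for section, option, path in check_cfg_paths(f.read()):
                print('missing: [%s] %s = %s' % (section, option, path))
```

Run it from the same directory as `aipubuild`, because the cfg uses paths relative to the working directory.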

Output:

```text
[I] step1: get max/min statistic value DONE
[I] step2: quantization each op DONE
[I] step3: build quantization forward DONE
[I] step4: show output scale of end node:
[I] layer_id:214, layer_top:resnet_v1_152/predictions/Reshape_1_0, output_scale:[372.5597]
[I] ==== auto-quantization DONE =
[I] Quantize model complete
[I] Building ...
[I] [common_options.h: 276] BuildTool version: 4.0.175. Build for target Z1_0701 at frequency 800MHz
[I] [common_options.h: 297] using default profile events to profile AIFF
[I] [IRChecker] Start to check IR: /tmp/AIPUBuilder_1626355647.7874727/resnet_152_int8.txt
[I] [IRChecker] model_name: resnet_152
[I] [IRChecker] IRChecker: All IR pass
[I] [graph.cpp : 846] loading graph weight: /tmp/AIPUBuilder_1626355647.7874727/resnet_152_int8.bin size: 0x398d9a4
[I] [builder.cpp:1059] Total memory for this graph: 0x4055790 Bytes
[I] [builder.cpp:1060] Text section: 0x0005ca90 Bytes
[I] [builder.cpp:1061] RO section: 0x0000a400 Bytes
[I] [builder.cpp:1062] Desc section: 0x00013800 Bytes
[I] [builder.cpp:1063] Data section: 0x0396d100 Bytes
[I] [builder.cpp:1064] BSS section: 0x0062dc00 Bytes
[I] [builder.cpp:1065] Stack : 0x00040400 Bytes
[I] [builder.cpp:1066] Workspace(BSS): 0x000c4000 Bytes
[I] [main.cpp : 467] # autogenrated by aipurun, do NOT modify!
LOG_FILE=log_default
FAST_FWD_INST=0
INPUT_INST_CNT=1
INPUT_DATA_CNT=2
CONFIG=Z1-0701
LOG_LEVEL=0
INPUT_INST_FILE0=/tmp/temp_7f213b561036e3c5c72971300849.text
INPUT_INST_BASE0=0x0
INPUT_INST_STARTPC0=0x0
INPUT_DATA_FILE0=/tmp/temp_7f213b561036e3c5c72971300849.ro
INPUT_DATA_BASE0=0x10000000
INPUT_DATA_FILE1=/tmp/temp_7f213b561036e3c5c72971300849.data
INPUT_DATA_BASE1=0x20000000
OUTPUT_DATA_CNT=2
OUTPUT_DATA_FILE0=output_resnet_152.bin
OUTPUT_DATA_BASE0=0x24100000
OUTPUT_DATA_SIZE0=0x3e8
OUTPUT_DATA_FILE1=profile_data.bin
OUTPUT_DATA_BASE1=0x23a71500
OUTPUT_DATA_SIZE1=0x1500
RUN_DESCRIPTOR=BIN[0]
[I] [main.cpp : 118] run simulator:
aipu_simulator_z1 /tmp/temp_7f213b561036e3c5c72971300849.cfg
[INFO]:SIMULATOR START!
[INFO]:========================================================================
[INFO]: STATIC CHECK
[INFO]:========================================================================
[INFO]: INST START ADDR : 0x0(0)
[INFO]: INST END ADDR : 0x5ca8f(379535)
[INFO]: INST SIZE : 0x5ca90(379536)
[INFO]: PACKET CNT : 0x5ca9(23721)
[INFO]: INST CNT : 0x172a4(94884)
[INFO]:------------------------------------------------------------------------
[WARN]:[0803] INST WR/RD REG CONFLICT! PACKET 0x44e: 0x472021b(POP R27,Rc7) vs 0x5f00000(MVI R0,0x0,Rc7), PACKET:0x44e(1102) SLOT:0 vs 3
[WARN]:[0803] INST WR/RD REG CONFLICT! PACKET 0x45b: 0x472021b(POP R27,Rc7) vs 0x5f00000(MVI R0,0x0,Rc7), PACKET:0x45b(1115) SLOT:0 vs 3
[WARN]:[0803] INST WR/RD REG CONFLICT! PACKET 0x5c0: 0x472021b(POP R27,Rc7) vs 0x9f80020(ADD.S R0,R0,0x1,Rc7), PACKET:0x5c0(1472) SLOT:0 vs 3
[WARN]:[0803] INST WR/RD REG CONFLICT! PACKET 0x7fd: 0x4520180(BRL R0) vs 0x47a03e4(ADD R4,R0,R31,Rc7), PACKET:0x7fd(2045) SLOT:0 vs 3
[WARN]:[0803] INST WR/RD REG CONFLICT! PACKET 0x996: 0x4720204(POP R4,Rc7) vs 0x9f80020(ADD.S R0,R0,0x1,Rc7), PACKET:0x996(2454) SLOT:0 vs 3
[WARN]:[0803] INST WR/RD REG CONFLICT! PACKET 0xe40: 0x4720204(POP R4,Rc7) vs 0x47a1be0(ADD R0,R6,R31,Rc7), PACKET:0xe40(3648) SLOT:0 vs 3
[WARN]:[0803] INST WR/RD REG CONFLICT! PACKET 0x1266: 0x472021b(POP R27,Rc7) vs 0x5f00000(MVI R0,0x0,Rc7), PACKET:0x1266(4710) SLOT:0 vs 3
[WARN]:[0803] INST WR/RD REG CONFLICT! PACKET 0x1273: 0x472021b(POP R27,Rc7) vs 0x5f00000(MVI R0,0x0,Rc7), PACKET:0x1273(4723) SLOT:0 vs 3
[WARN]:[0803] INST WR/RD REG CONFLICT! PACKET 0x13d8: 0x472021b(POP R27,Rc7) vs 0x9f80020(ADD.S R0,R0,0x1,Rc7), PACKET:0x13d8(5080) SLOT:0 vs 3
[WARN]:[0803] INST WR/RD REG CONFLICT! PACKET 0x1575: 0x4520180(BRL R0) vs 0x47a03e5(ADD R5,R0,R31,Rc7), PACKET:0x1575(5493) SLOT:0 vs 3
[INFO]:========================================================================
[INFO]: STATIC CHECK END
[INFO]:========================================================================
[INFO]:AIPU START RUNNING: BIN[0]
[INFO]:TOTAL TIME: 16.073494s.
[INFO]:SIMULATOR EXIT!
[I] [main.cpp : 135] Simulator finished.
Total errors: 0, warnings: 0
```

`quant_predict.py`:

```python
import os

import numpy as np
from PIL import Image

import imagenet_classes as class_name  # local module (not shown) providing `class_names`

current_dir = os.getcwd()
label_offset = 1

# Simulator output: one uint8 score per class.
outputfile = os.path.join(current_dir, 'output_resnet_152.bin')
npyoutput = np.fromfile(outputfile, dtype=np.uint8)
outputclass = npyoutput.argmax()
head5p = npyoutput.argsort()[-5:][::-1]

# Reference output.
labelfile = os.path.join(current_dir, 'output_ref.bin')
npylabel = np.fromfile(labelfile, dtype=np.int8)
labelclass = npylabel.argmax()
head5t = npylabel.argsort()[-5:][::-1]

print("predict first 5 label:")
for i in head5p:
    print(" index %4d, prob %3d, name: %s" % (i, npyoutput[i], class_name.class_names[i - label_offset]))

print("true first 5 label:")
for i in head5t:
    print(" index %4d, prob %3d, name: %s" % (i, npylabel[i], class_name.class_names[i - label_offset]))

# Show input picture
print('Detect picture save to result.jpeg')
input_path = './model/input.bin'
npyinput = np.fromfile(input_path, dtype=np.int8)
# Widen before adding 128 so the int8 values cannot overflow.
image = np.clip(npyinput.astype(np.int16) + 128, 0, 255).astype(np.uint8)
image = np.reshape(image, (224, 224, 3))
im = Image.fromarray(image)
im.save('result.jpeg')
```

Result of running `quant_predict.py`:

```text
predict first 5 label:
 index 230, prob 216, name: Old English sheepdog, bobtail
 index 231, prob 39, name: Shetland sheepdog, Shetland sheep dog, Shetland
 index 999, prob 0, name: ear, spike, capitulum
 index 342, prob 0, name: hog, pig, grunter, squealer, Sus scrofa
 index 340, prob 0, name: sorrel
true first 5 label:
 index 230, prob 109, name: Old English sheepdog, bobtail
 index 231, prob 96, name: Shetland sheepdog, Shetland sheep dog, Shetland
 index 232, prob 57, name: collie
 index 226, prob 54, name: malinois
 index 263, prob 53, name: Brabancon griffon
Detect picture save to result.jpeg
```
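The printout above compares the simulator's top 5 with the reference by eye. Here, both agree on the top-1 class (index 230), and the two lists share indices 230 and 231. The sketch below makes that comparison explicit. It assumes both outputs are 1-D score arrays over the same class indices, as loaded in `quant_predict.py`. The names `topk_indices` and `topk_agreement` are hypothetical.

Scores are widened to int64 before negation because negating a uint8 array wraps around. The code also uses a stable sort, so ties are ordered by index. By contrast, the zero-scored entries in the printout above (999, 342, 340) come from an arbitrary tie order and carry no meaning.

```python
import numpy as np


def topk_indices(scores, k=5):
    """Indices of the k largest scores, highest first.

    Widening to int64 keeps the negation of uint8 scores from wrapping;
    the stable sort breaks ties by the lower index.
    """
    scores = np.asarray(scores).astype(np.int64)
    return np.argsort(-scores, kind='stable')[:k]


def topk_agreement(pred_scores, ref_scores, k=5):
    """Return (top1_match, overlap) for two score vectors.

    overlap is the number of class indices shared by the two top-k lists.
    """
    pred_top = topk_indices(pred_scores, k)
    ref_top = topk_indices(ref_scores, k)
    top1_match = bool(pred_top[0] == ref_top[0])
    overlap = len(set(pred_top.tolist()) & set(ref_top.tolist()))
    return top1_match, overlap


if __name__ == '__main__':
    # Toy 10-class vectors, not the real ResNet-152 output.
    pred = np.array([0, 0, 216, 39, 0, 0, 0, 0, 0, 0], dtype=np.uint8)
    ref = np.array([5, 12, 109, 96, 57, 54, 53, 3, 2, 1], dtype=np.int8)
    print(topk_agreement(pred, ref, k=5))
```

With the real files, `npyoutput` and `npylabel` from `quant_predict.py` can be passed in directly.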

Files: the calibration set (data.npy and label.npy) and the NN compiler cfg file.

This is my first post; corrections are welcome if you spot any mistakes.