1. 阻尼牛顿法

牛顿法最突出的优点是收敛速度快,具有局部二阶收敛性,但是,基本牛顿法初始点需要足够“靠近”极小点,否则,有可能导致算法不收敛。

这样就引入了阻尼牛顿法,阻尼牛顿法最核心的一点在于可以修改每次迭代的步长,通过沿着牛顿法确定的方向一维搜索最优的步长,最终选择使得函数值最小的步长。

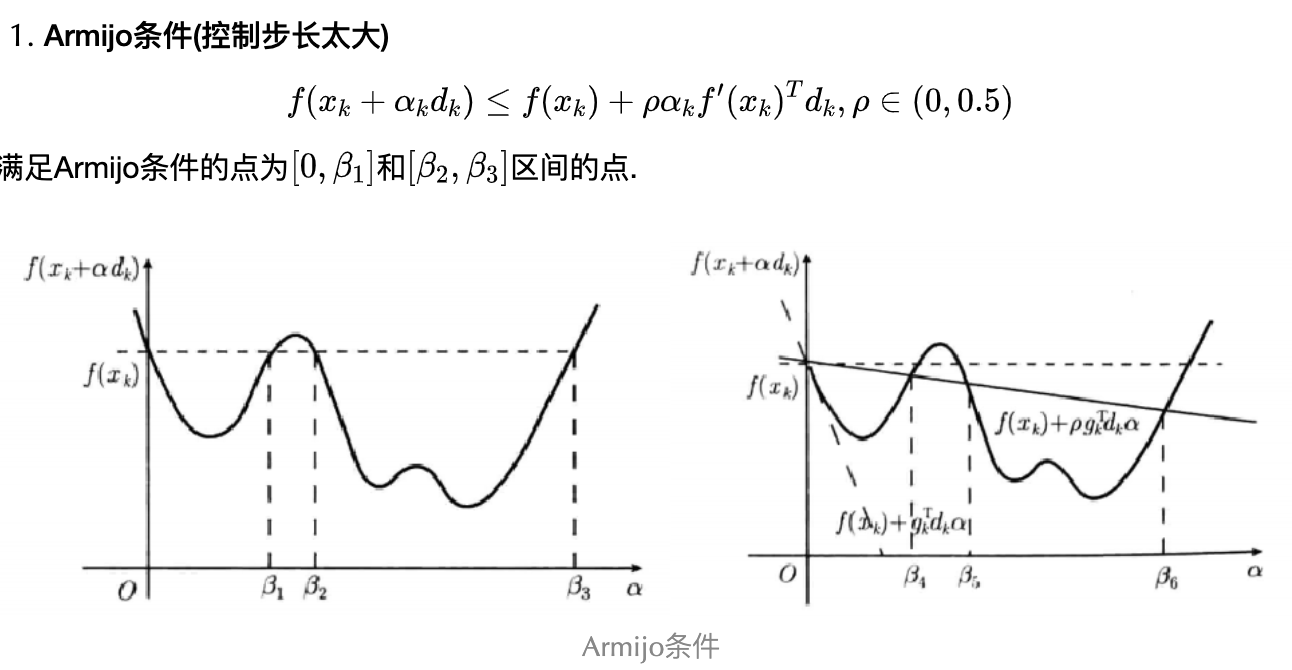

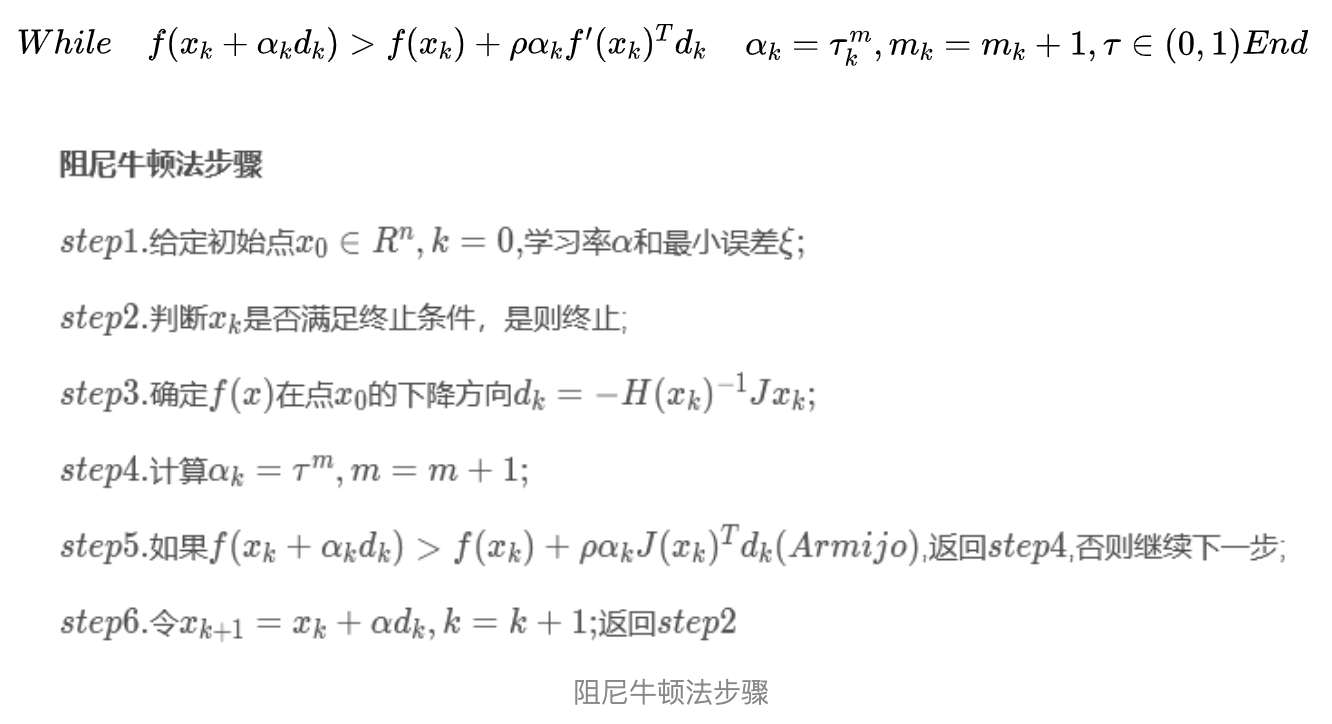

补充:一维搜索非精确搜索方法。

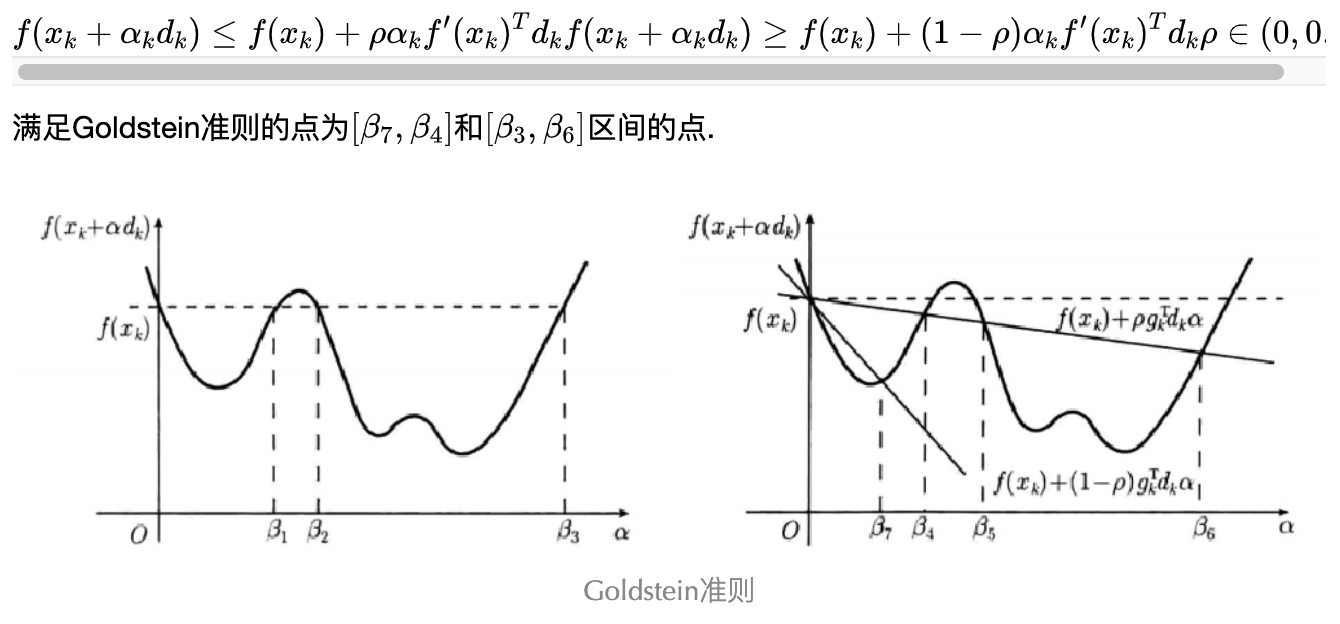

- Goldstein准则(控制步长太小)

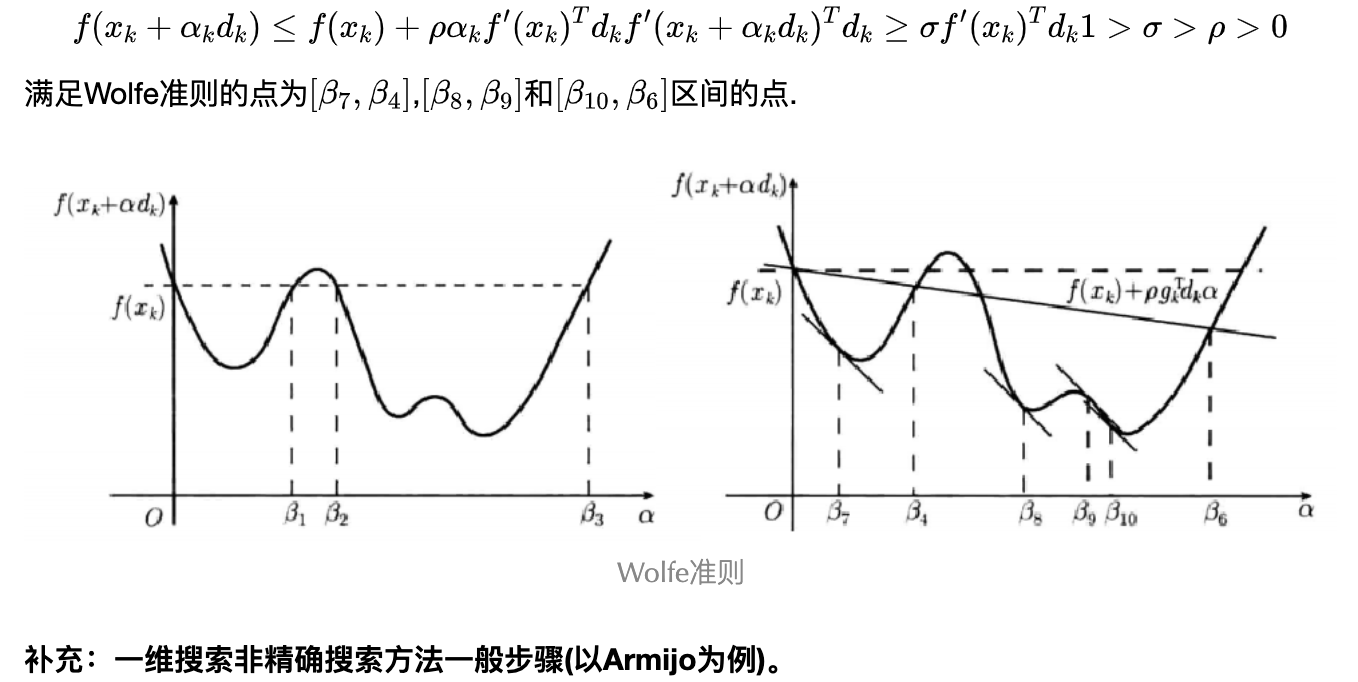

- Wolfe准则

满足Wolfe准则的点为,和区间的点.

#include <Msnhnet/math/MsnhMatrixS.h>

#include <Msnhnet/cv/MsnhCVGui.h>

#include <iostream>

using namespace Msnhnet;

class DampedNewton

{

public:

DampedNewton(int maxIter, double eps, double rho, double tau):_maxIter(maxIter),_eps(eps),_rho(rho),_tau(tau){}

void setMaxIter(int maxIter)

{

_maxIter = maxIter;

}

virtual int solve(MatSDS &startPoint) = 0;

void setEps(double eps)

{

_eps = eps;

}

void setRho(double rho)

{

_rho = rho;

}

void setTau(double tau)

{

_tau = tau;

}

//正定性判定

bool isPosMat(const MatSDS &H)

{

MatSDS eigen = H.eigen()[0];

for (int i = 0; i < eigen.mWidth; ++i)

{

if(eigen[i]<=0)

{

return false;

}

}

return true;

}

const std::vector<Vec2F32> &getXStep() const

{

return _xStep;

}

protected:

int _maxIter = 100;

double _eps = 0.00001;

double _rho = 0.2;

double _tau = 0.9;

std::vector<Vec2F32> _xStep;

protected:

virtual MatSDS calGradient(const MatSDS& point) = 0;

virtual MatSDS calHessian(const MatSDS& point) = 0;

virtual bool calDk(const MatSDS& point, MatSDS &dk) = 0;

virtual MatSDS function(const MatSDS& point) = 0;

};

class DampedNewtonProblem1:public DampedNewton

{

public:

DampedNewtonProblem1(int maxIter, double eps, double rho, double tau):DampedNewton(maxIter, eps, rho, tau){}

MatSDS calGradient(const MatSDS &point) override

{

MatSDS J(1,2);

double x1 = point(0,0);

double x2 = point(0,1);

J(0,0) = 6*x1 - 2*x1*x2;

J(0,1) = 6*x2 - x1*x1;

return J;

}

MatSDS calHessian(const MatSDS &point) override

{

MatSDS H(2,2);

double x1 = point(0,0);

double x2 = point(0,1);

H(0,0) = 6 - 2*x2;

H(0,1) = -2*x1;

H(1,0) = -2*x1;

H(1,1) = 6;

return H;

}

bool calDk(const MatSDS& point, MatSDS &dk) override

{

MatSDS J = calGradient(point);

MatSDS H = calHessian(point);

if(!isPosMat(H))

{

return false;

}

dk = -1*H.invert()*J;

return true;

}

MatSDS function(const MatSDS &point) override

{

MatSDS f(1,1);

double x1 = point(0,0);

double x2 = point(0,1);

f(0,0) = 3*x1*x1 + 3*x2*x2 - x1*x1*x2;

return f;

}

int solve(MatSDS &startPoint) override

{

MatSDS x = startPoint;

for (int i = 0; i < _maxIter; ++i)

{

_xStep.push_back({(float)x[0],(float)x[1]});

MatSDS dk;

bool ok = calDk(x, dk);

if(!ok)

{

return -2;

}

double alpha = 1;

//Armijo准则

for (int i = 0; i < 100; ++i)

{

MatSDS left = function(x + alpha*dk);

MatSDS right = function(x) + this->_rho*alpha*calGradient(x).transpose()*dk;

if(left(0,0) <= right(0,0))

{

break;

}

alpha = alpha * _tau;

}

std::cout<<std::left<<"Iter(s): "<<std::setw(4)<<i<<", Loss: "<<std::setw(12)<<dk.L2()<<" Result: "<<function(x)[0]<<std::endl;

x = x + alpha*dk;

if(dk.LInf() < _eps)

{

startPoint = x;

return i+1;

}

}

return -1;

}

};

int main()

{

DampedNewtonProblem1 function(100, 0.001, 0.4, 0.8);

MatSDS startPoint(1,2,{1.5,1.5});

try

{

int res = function.solve(startPoint);

if(res == -1)

{

std::cout<<"求解失败"<<std::endl;

}

else if(res == -2)

{

std::cout<<"Hessian 矩阵非正定, 求解失败"<<std::endl;

}

else

{

std::cout<<"求解成功! 迭代次数: "<<res<<std::endl;

std::cout<<"最小值点:"<<res<<std::endl;

startPoint.print();

std::cout<<"此时方程的值为:"<<std::endl;

function.function(startPoint).print();

#ifdef WIN32

Gui::setFont("c:/windows/fonts/MSYH.TTC",16);

#endif

std::cout<<"按\"esc\"退出!"<<std::endl;

Gui::plotLine(u8"阻尼牛顿法迭代X中间值","x",function.getXStep());

Gui::wait();

}

}

catch(Exception ex)

{

std::cout<<ex.what();

}

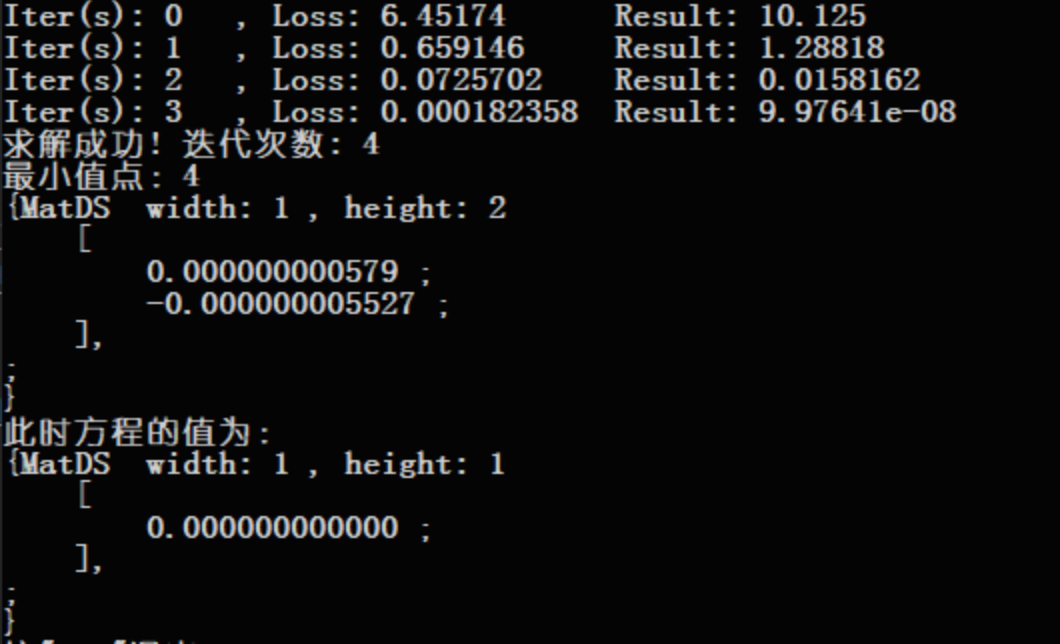

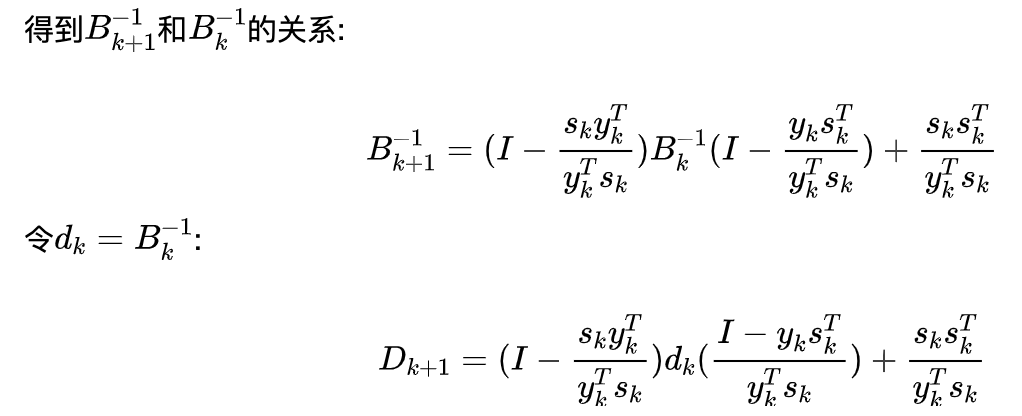

} 结果:对于初始点 (1.5,1.5) ,迭代4次即可完成

阻尼牛顿法,4次完成迭代

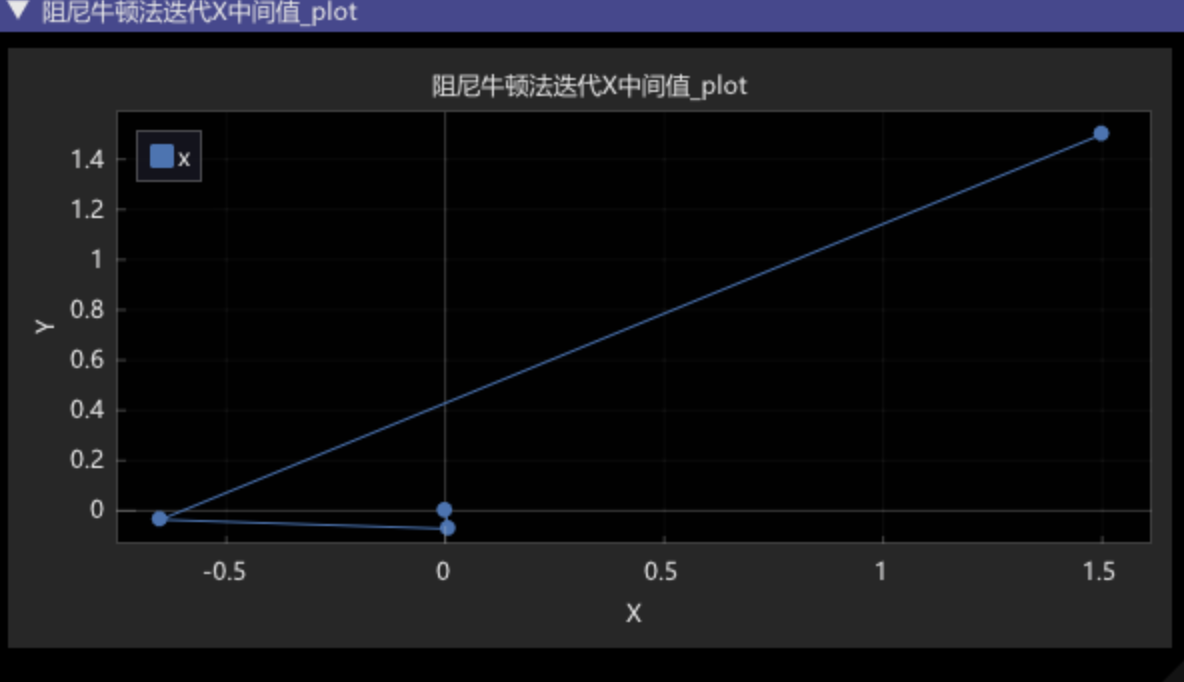

阻尼牛顿法X中间点可视化

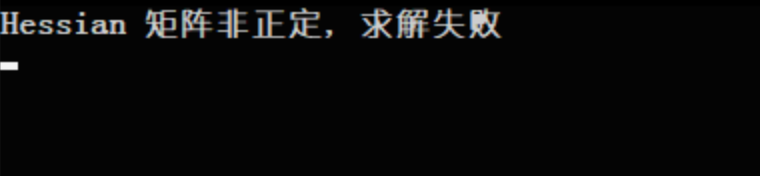

结果:对于初始点 (0,3) ,同样是Hessian矩阵不正定.

阻尼牛顿法求解失败

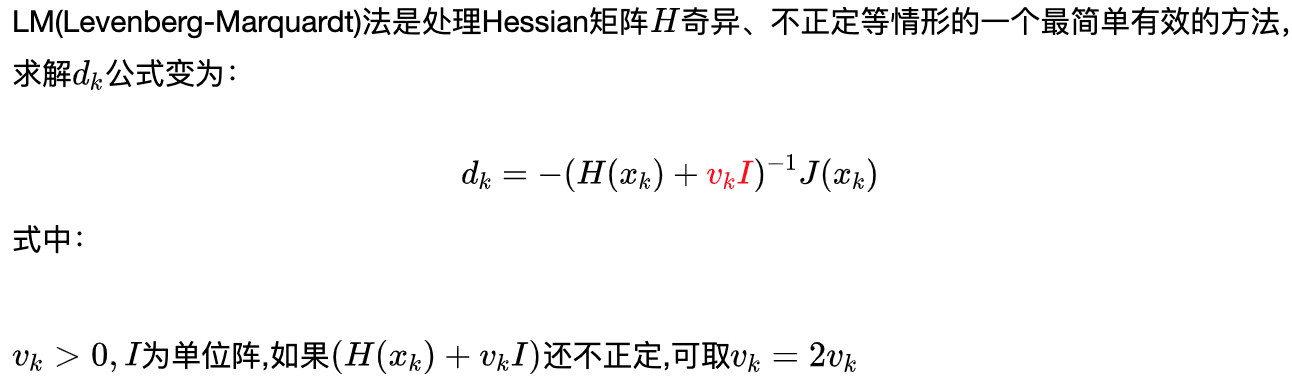

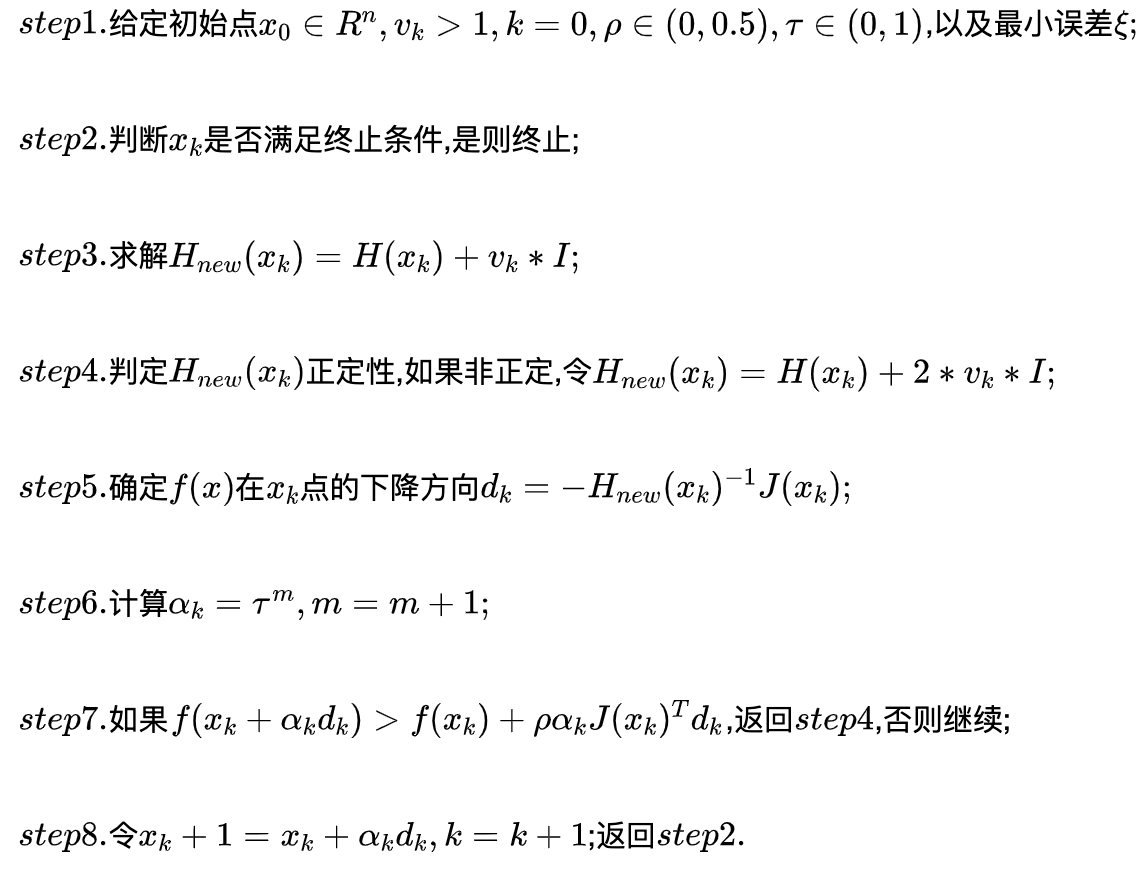

2. 牛顿Levenberg-Marquardt法

步骤

举例

#include <Msnhnet/math/MsnhMatrixS.h>

#include <Msnhnet/cv/MsnhCVGui.h>

#include <iostream>

using namespace Msnhnet;

class NewtonLM

{

public:

NewtonLM(int maxIter, double eps, double vk, double rho, double tau):_maxIter(maxIter),_eps(eps),_vk(vk),_rho(rho),_tau(tau){}

void setMaxIter(int maxIter)

{

_maxIter = maxIter;

}

virtual int solve(MatSDS &startPoint) = 0;

void setEps(double eps)

{

_eps = eps;

}

void setRho(double rho)

{

_rho = rho;

}

void setTau(double tau)

{

_tau = tau;

}

//正定性判定

bool isPosMat(const MatSDS &H)

{

MatSDS eigen = H.eigen()[0];

for (int i = 0; i < eigen.mWidth; ++i)

{

if(eigen[i]<=0)

{

return false;

}

}

return true;

}

const std::vector<Vec2F32> &getXStep() const

{

return _xStep;

}

protected:

int _maxIter = 100;

double _eps = 0.00001;

double _vk = 3;

double _rho = 0.2;

double _tau = 0.9;

std::vector<Vec2F32> _xStep;

protected:

virtual MatSDS calGradient(const MatSDS& point) = 0;

virtual MatSDS calHessian(const MatSDS& point) = 0;

virtual MatSDS calDk(const MatSDS& point) = 0;

virtual MatSDS function(const MatSDS& point) = 0;

};

class NewtonLMProblem1:public NewtonLM

{

public:

NewtonLMProblem1(int maxIter, double eps, double vk, double rho, double tau):NewtonLM(maxIter, eps, vk,rho,tau){}

MatSDS calGradient(const MatSDS &point) override

{

MatSDS J(1,2);

double x1 = point(0,0);

double x2 = point(0,1);

J(0,0) = 6*x1 - 2*x1*x2;

J(0,1) = 6*x2 - x1*x1;

return J;

}

MatSDS calHessian(const MatSDS &point) override

{

MatSDS H(2,2);

double x1 = point(0,0);

double x2 = point(0,1);

H(0,0) = 6 - 2*x2;

H(0,1) = -2*x1;

H(1,0) = -2*x1;

H(1,1) = 6;

return H;

}

MatSDS calDk(const MatSDS& point) override

{

MatSDS J = calGradient(point);

MatSDS H = calHessian(point);

MatSDS I = MatSDS::eye(H.mWidth);

MatSDS Hp = H + _vk*I;

if(!isPosMat(Hp))

{

H = H + 2*_vk*I;

}

else

{

H = Hp;

}

return -1*H.invert()*J;

}

MatSDS function(const MatSDS &point) override

{

MatSDS f(1,1);

double x1 = point(0,0);

double x2 = point(0,1);

f(0,0) = 3*x1*x1 + 3*x2*x2 - x1*x1*x2;

return f;

}

int solve(MatSDS &startPoint) override

{

MatSDS x = startPoint;

for (int i = 0; i < _maxIter; ++i)

{

_xStep.push_back({(float)x[0],(float)x[1]});

//这里就不用检查正定了

MatSDS dk = calDk(x);

double alpha = 1;

//Armijo准则

for (int i = 0; i < 100; ++i)

{

MatSDS left = function(x + alpha*dk);

MatSDS right = function(x) + this->_rho*alpha*calGradient(x).transpose()*dk;

if(left(0,0) <= right(0,0))

{

break;

}

alpha = alpha * _tau;

}

std::cout<<std::left<<"Iter(s): "<<std::setw(4)<<i<<", Loss: "<<std::setw(12)<<dk.L2()<<" Result: "<<function(x)[0]<<std::endl;

x = x + alpha*dk;

if(dk.LInf() < _eps)

{

startPoint = x;

return i+1;

}

}

return -1;

}

};

int main()

{

NewtonLMProblem1 function(1000, 0.001,3, 0.4, 0.8);

MatSDS startPoint(1,2,{0,3});

try

{

int res = function.solve(startPoint);

if(res < 0)

{

std::cout<<"求解失败"<<std::endl;

}

else

{

std::cout<<"求解成功! 迭代次数: "<<res<<std::endl;

std::cout<<"最小值点:"<<res<<std::endl;

startPoint.print();

std::cout<<"此时方程的值为:"<<std::endl;

function.function(startPoint).print();

#ifdef WIN32

Gui::setFont("c:/windows/fonts/MSYH.TTC",16);

#endif

std::cout<<"按\"esc\"退出!"<<std::endl;

Gui::plotLine(u8"牛顿LM法迭代X中间值","x",function.getXStep());

Gui::wait();

}

}

catch(Exception ex)

{

std::cout<<ex.what();

}

}

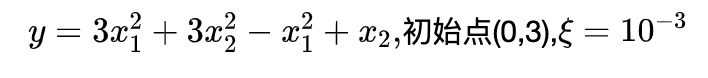

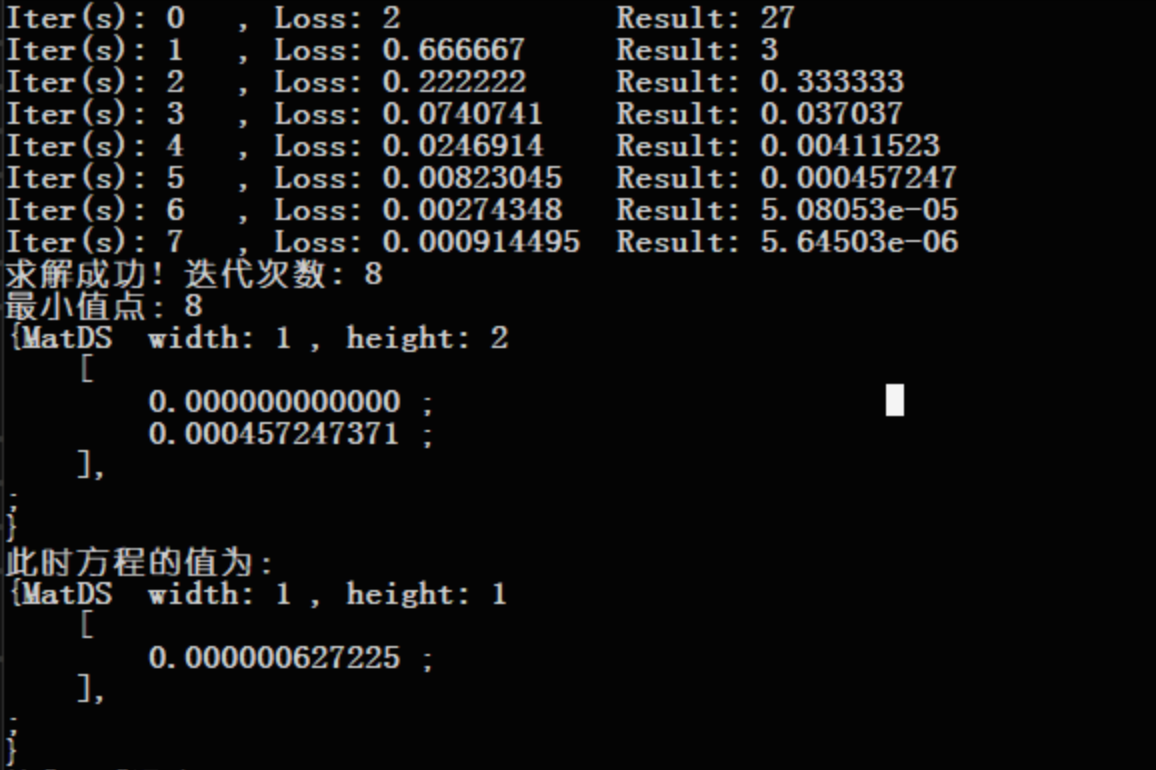

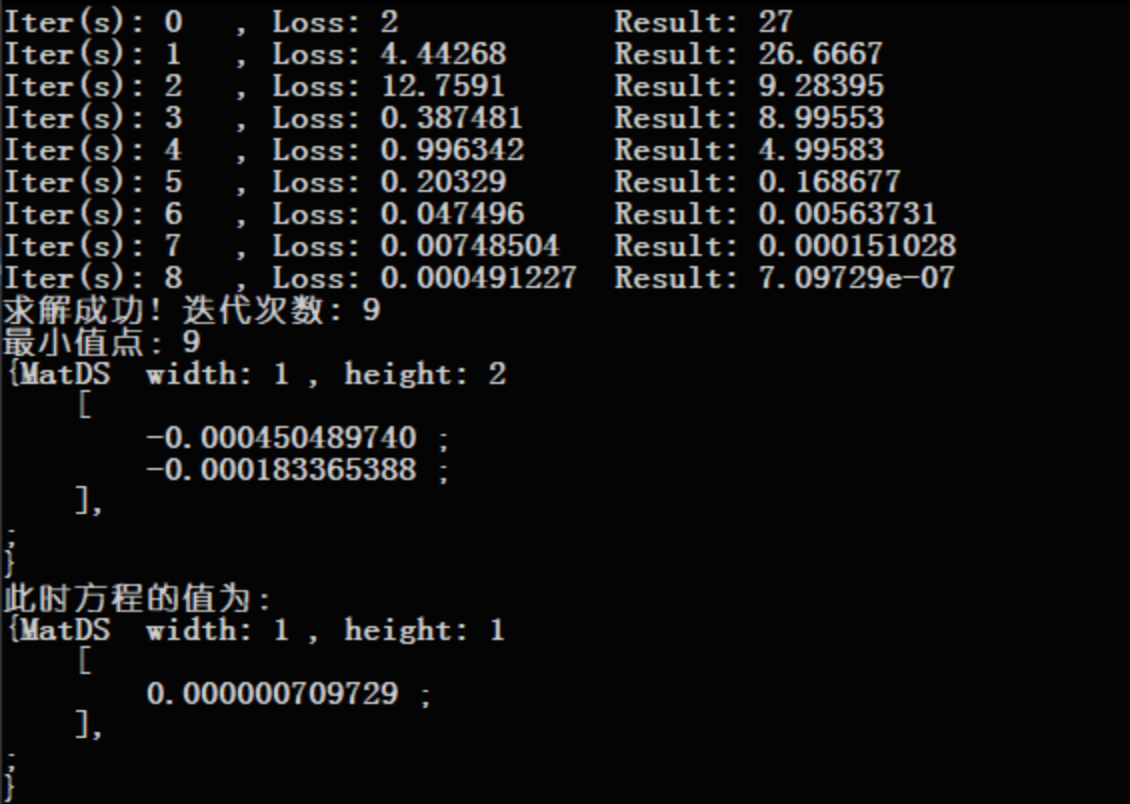

结果: 对于初始点 (0,3) ,迭代8次即可完成,解决了Newton法Hessian矩阵不正定的问题.

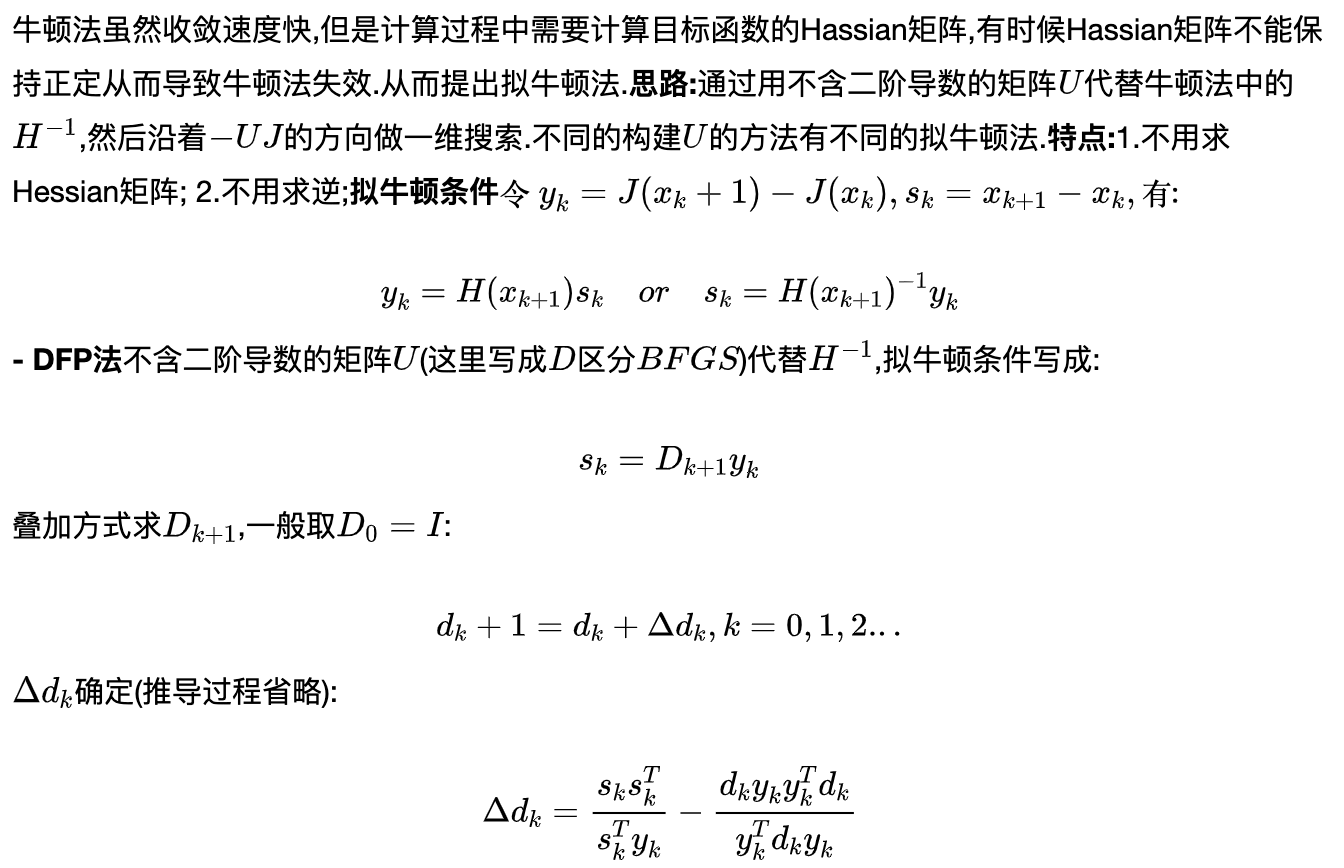

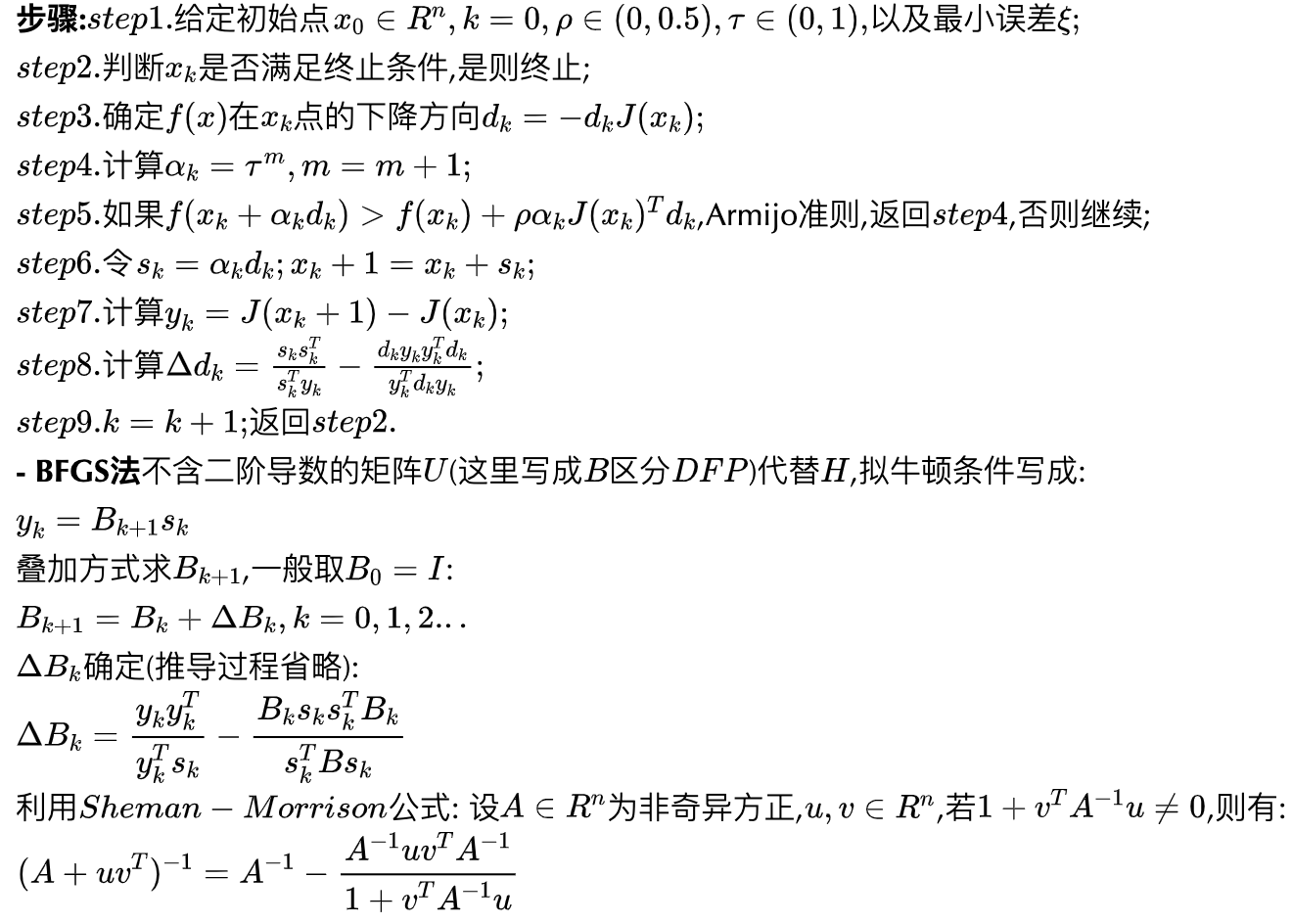

3.拟牛顿法

举例

#include <Msnhnet/math/MsnhMatrixS.h>

#include <Msnhnet/cv/MsnhCVGui.h>

#include <iostream>

using namespace Msnhnet;

class NewtonLM

{

public:

NewtonLM(int maxIter, double eps, double vk, double rho, double tau):_maxIter(maxIter),_eps(eps),_vk(vk),_rho(rho),_tau(tau){}

void setMaxIter(int maxIter)

{

_maxIter = maxIter;

}

virtual int solve(MatSDS &startPoint) = 0;

void setEps(double eps)

{

_eps = eps;

}

void setRho(double rho)

{

_rho = rho;

}

void setTau(double tau)

{

_tau = tau;

}

//正定性判定

bool isPosMat(const MatSDS &H)

{

MatSDS eigen = H.eigen()[0];

for (int i = 0; i < eigen.mWidth; ++i)

{

if(eigen[i]<=0)

{

return false;

}

}

return true;

}

const std::vector<Vec2F32> &getXStep() const

{

return _xStep;

}

protected:

int _maxIter = 100;

double _eps = 0.00001;

double _vk = 3;

double _rho = 0.2;

double _tau = 0.9;

std::vector<Vec2F32> _xStep;

protected:

virtual MatSDS calGradient(const MatSDS& point) = 0;

virtual MatSDS calHessian(const MatSDS& point) = 0;

virtual MatSDS calDk(const MatSDS& point) = 0;

virtual MatSDS function(const MatSDS& point) = 0;

};

class NewtonLMProblem1:public NewtonLM

{

public:

NewtonLMProblem1(int maxIter, double eps, double vk, double rho, double tau):NewtonLM(maxIter, eps, vk,rho,tau){}

MatSDS calGradient(const MatSDS &point) override

{

MatSDS J(1,2);

double x1 = point(0,0);

double x2 = point(0,1);

J(0,0) = 6*x1 - 2*x1*x2;

J(0,1) = 6*x2 - x1*x1;

return J;

}

MatSDS calHessian(const MatSDS &point) override

{

MatSDS H(2,2);

double x1 = point(0,0);

double x2 = point(0,1);

H(0,0) = 6 - 2*x2;

H(0,1) = -2*x1;

H(1,0) = -2*x1;

H(1,1) = 6;

return H;

}

MatSDS calDk(const MatSDS& point) override

{

MatSDS J = calGradient(point);

MatSDS H = calHessian(point);

MatSDS I = MatSDS::eye(H.mWidth);

MatSDS Hp = H + _vk*I;

if(!isPosMat(Hp))

{

H = H + 2*_vk*I;

}

else

{

H = Hp;

}

return -1*H.invert()*J;

}

MatSDS function(const MatSDS &point) override

{

MatSDS f(1,1);

double x1 = point(0,0);

double x2 = point(0,1);

f(0,0) = 3*x1*x1 + 3*x2*x2 - x1*x1*x2;

return f;

}

int solve(MatSDS &startPoint) override

{

MatSDS x = startPoint;

for (int i = 0; i < _maxIter; ++i)

{

_xStep.push_back({(float)x[0],(float)x[1]});

//这里就不用检查正定了

MatSDS dk = calDk(x);

double alpha = 1;

//Armijo准则

for (int i = 0; i < 100; ++i)

{

MatSDS left = function(x + alpha*dk);

MatSDS right = function(x) + this->_rho*alpha*calGradient(x).transpose()*dk;

if(left(0,0) <= right(0,0))

{

break;

}

alpha = alpha * _tau;

}

std::cout<<std::left<<"Iter(s): "<<std::setw(4)<<i<<", Loss: "<<std::setw(12)<<dk.L2()<<" Result: "<<function(x)[0]<<std::endl;

x = x + alpha*dk;

if(dk.LInf() < _eps)

{

startPoint = x;

return i+1;

}

}

return -1;

}

};

int main()

{

NewtonLMProblem1 function(1000, 0.001,3, 0.4, 0.8);

MatSDS startPoint(1,2,{0,3});

try

{

int res = function.solve(startPoint);

if(res < 0)

{

std::cout<<"求解失败"<<std::endl;

}

else

{

std::cout<<"求解成功! 迭代次数: "<<res<<std::endl;

std::cout<<"最小值点:"<<res<<std::endl;

startPoint.print();

std::cout<<"此时方程的值为:"<<std::endl;

function.function(startPoint).print();

#ifdef WIN32

Gui::setFont("c:/windows/fonts/MSYH.TTC",16);

#endif

std::cout<<"按\"esc\"退出!"<<std::endl;

Gui::plotLine(u8"牛顿LM法迭代X中间值","x",function.getXStep());

Gui::wait();

}

}

catch(Exception ex)

{

std::cout<<ex.what();

}

}

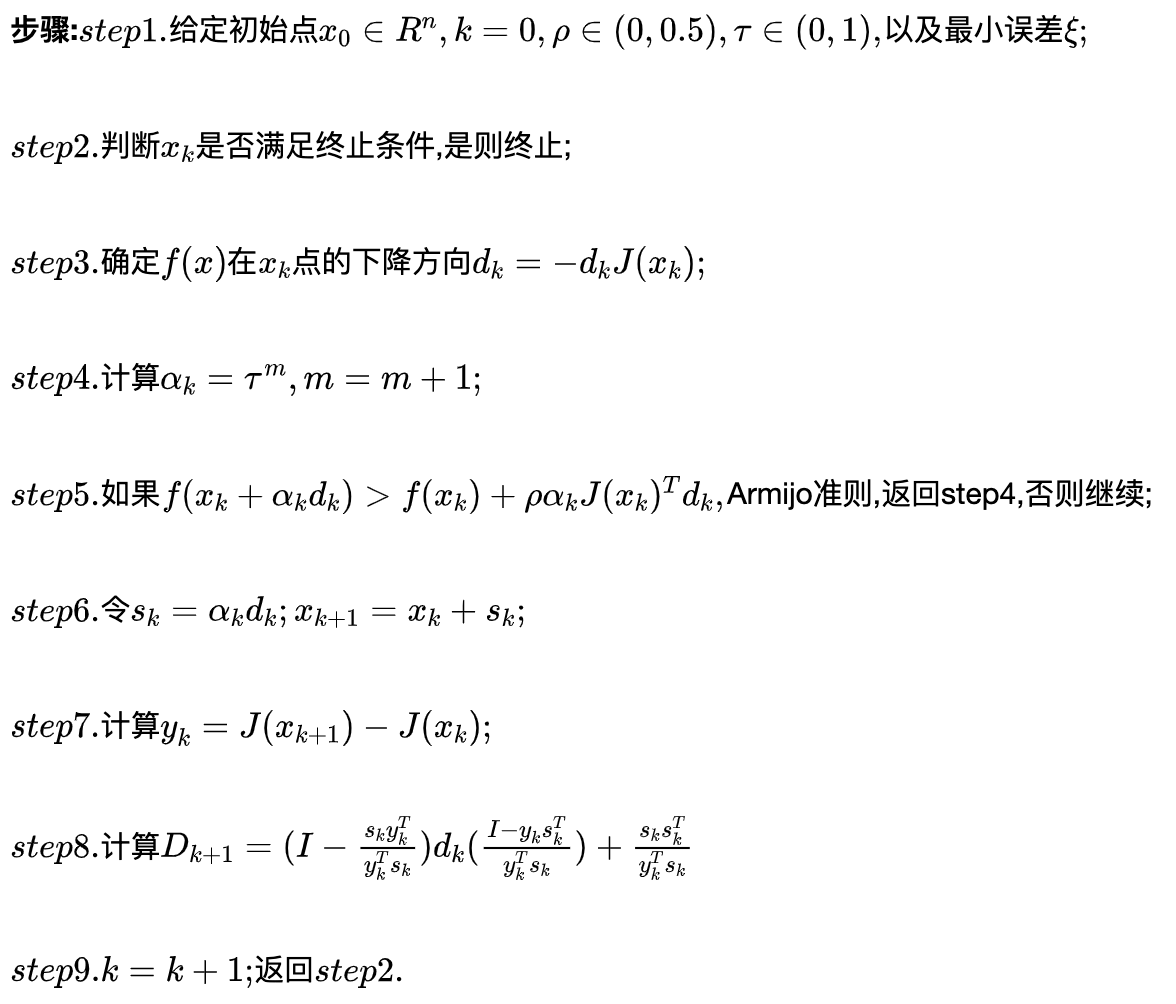

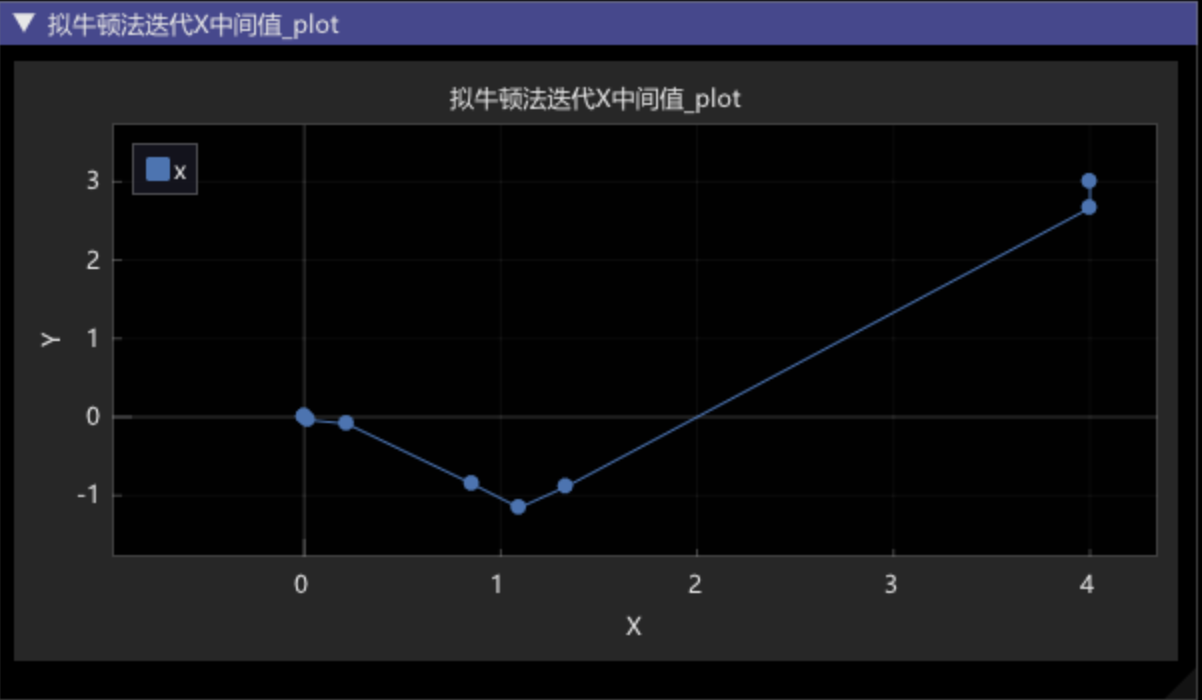

结果:对于初始点 (4,3) ,迭代9次即可完成,此点Newton法,DFP法都无解,BFGS方法有解,一般来说BFGS效果比较好.

拟牛顿法9次完成求解

拟牛顿法X中间值可视化

4. 源码

https://github.com/msnh2012/n...https://github.com/msnh2012/numerical-optimizaiton)

5. 依赖包

https://github.com/msnh2012/M...https://github.com/msnh2012/Msnhnet)

6. 参考文献

- Numerical Optimization. Jorge Nocedal Stephen J. Wrigh

- Methods for non-linear least squares problems. K. Madsen, H.B. Nielsen, O. Tingleff.

- Practical Optimization\_ Algorithms and Engineering Applications. Andreas Antoniou Wu-Sheng Lu

- 最优化理论与算法. 陈宝林

- 数值最优化方法. 高立

网盘资料下载:链接:https://pan.baidu.com/s/1hpFw... 提取码:b6gq

7. 最后

- 欢迎关注我和BBuf及公众号的小伙伴们一块维护的一个深度学习框架Msnhnet: https://github.com/msnh2012/M... Msnhnet除了是一个深度网络推理库之外,还是一个小型矩阵库,包含了矩阵常规操作,LU分解,Cholesky分解,SVD分解。

END

原文:GiantPandaCV

作者:msnh2012

推荐阅读

更多嵌入式AI技术干货请关注嵌入式AI专栏。