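After several days of running the Zhouyi AIPU simulator and hitting quite a few pitfalls along the way (for details, see: [Zhouyi AIPU Simulation] mobilefacenet face feature model), I finally got my hands on an R329 board for on-device verification.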

Flashing the system image

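The system image I flashed is r329-mainline-debian-20210802.img; see https://aijishu.com/a/1060000000224083 for details. A friendly reminder: a good card reader and a good SD card really matter. Some people have reported that the board keeps failing to boot after flashing the image; if that happens, try a different card reader or SD card first.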

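On-device testing

1. Model generation

With the earlier simulation experience, converting the model goes quickly.

Step 1: start the image and enter the model conversion container prepared in advance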

docker run --gpus all -it --rm -e DISPLAY=$DISPLAY -v /tmp/.X11-unix:/tmp/.X11-unix -v ${PWD}:${PWD} -w ${PWD} --name zhouyi zepan/zhouyi

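Step 2: edit the model conversion config file. The only difference from the simulation setup is that under the GBuilder section you only need to configure outputs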

[Common]

mode = build

[Parser]

model_name = facenet

detection_postprocess =

model_domain = image_classification

input_model = ./model/FaceMobileNet192_train_false.pb

input = img_inputs

input_shape = [1, 112, 112, 3]

output = MobileFaceNet/Logits/SpatialSqueeze

output_dir = ./

[AutoQuantizationTool]

quantize_method = SYMMETRIC

quant_precision = int8

ops_per_channel = DepthwiseConv

reverse_rgb = False

log = True

calibration_data = ./dataset/dataset.npy

calibration_label = ./dataset/label.npy

preprocess_mode = normalize

[GBuilder]

outputs=aipu.bin

target=Z1_0701

Step 3: run the model conversion script

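aipubuild facenet_run_pan.cfg

After the model is converted successfully, the following output is printed. Be sure to note the quantization scale of the output layer, output_scale:[6.513236], because it is needed later for dequantization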

[I] step1: get max/min statistic value DONE

[I] step2: quantization each op DONE

[I] step3: build quantization forward DONE

[I] step4: show output scale of end node:

[I] layer_id: 65, layer_top:MobileFaceNet/Logits/SpatialSqueeze_0, output_scale:[6.513236]

[I] ==== auto-quantization DONE =

[I] Quantize model complete

[I] Building ...

[I] [common_options.h: 276] BuildTool version: 4.0.175. Build for target Z1_0701 at frequency 800MHz

[I] [IRChecker] Start to check IR: /tmp/AIPUBuilder_1629203633.8358698/facenet_int8.txt

[I] [IRChecker] model_name: facenet

[I] [IRChecker] IRChecker: All IR pass

[I] [graph.cpp : 846] loading graph weight: /tmp/AIPUBuilder_1629203633.8358698/facenet_int8.bin size: 0x147200

[I] [builder.cpp:1059] Total memory for this graph: 0x7880b0 Bytes

[I] [builder.cpp:1060] Text section: 0x00002370 Bytes

[I] [builder.cpp:1061] RO section: 0x00003600 Bytes

[I] [builder.cpp:1062] Desc section: 0x00016000 Bytes

[I] [builder.cpp:1063] Data section: 0x00213900 Bytes

[I] [builder.cpp:1064] BSS section: 0x00518a40 Bytes

[I] [builder.cpp:1065] Stack : 0x00040400 Bytes

[I] [builder.cpp:1066] Workspace(BSS): 0x00000000 Bytes

Total errors: 0, warnings: 0

At this point you have aipu.bin, the model file that can run on the actual device.
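Since output_scale has to be carried over by hand from the build log, a small helper can pull it out of a saved log instead. The following is only a sketch: `parse_output_scale` is a hypothetical name, not part of the AIPU toolchain, and it assumes the log line keeps the `output_scale:[...]` format shown above with a single value inside the brackets.

```cpp
#include <cassert>
#include <regex>
#include <string>

// Hypothetical helper (not part of the AIPU toolchain).
// Looks for "output_scale:[<number>" in one line of build log text.
// On success stores the number in `scale` and returns true;
// returns false and leaves `scale` untouched if the field is absent.
bool parse_output_scale(const std::string& log_line, float& scale) {
    static const std::regex pattern(
        R"re(output_scale:\[\s*([-+]?[0-9]*\.?[0-9]+(?:[eE][-+]?[0-9]+)?))re");
    std::smatch match;
    if (!std::regex_search(log_line, match, pattern)) {
        return false;
    }
    assert(match.size() == 2);  // full match plus one capture group
    scale = std::stof(match[1].str());
    return true;
}
```

Calling it on the `layer_id: 65` line of the log above would fill `scale` with the value that the dequantization step later divides by.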

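2. Updating the zhouyi_cam application

The following features need to be added:

- Dequantize the model's output values, using the output_scale quantization scale recorded during model conversion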

std::vector<float> data;

for(int i=0; i < dim; i++) {

data.push_back((float)result[i] / output_scale);

}

- Enroll the gallery face in advance: print the face feature data for the gallery photo first, then hard-code it into the program

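- Normalize the face features: because cosine distance is used as the similarity measure, the face feature vector output by the model needs to be normalized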

float norm2 = 0;

for (int i = 0; i < dim; i++) {

norm2 += data[i] * data[i];

}

float norm = sqrt(norm2);

for (int i = 0; i < dim; i++) {

data[i] = data[i] / norm;

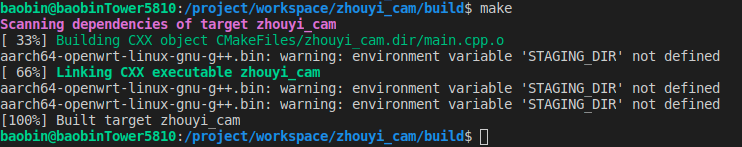

}编译输出
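The dequantization and normalization snippets above can be gathered into two small functions. This is a minimal sketch rather than the actual zhouyi_cam code: the function names are made up for illustration, and it assumes the raw output buffer holds int8 values (consistent with quant_precision = int8 in the config, but the type of `result` is not shown in the original).

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <cstdint>
#include <vector>

// Dequantize raw outputs: float_value = quantized_value / output_scale.
// Assumption: the raw buffer is int8, matching quant_precision = int8.
std::vector<float> dequantize(const int8_t* result, std::size_t dim,
                              float output_scale) {
    assert(output_scale != 0.0f);
    std::vector<float> data;
    data.reserve(dim);
    for (std::size_t i = 0; i < dim; ++i) {
        data.push_back(static_cast<float>(result[i]) / output_scale);
    }
    return data;
}

// Scale the vector to unit L2 length in place.
// An all-zero vector is left unchanged to avoid dividing by zero.
void l2_normalize(std::vector<float>& data) {
    float norm2 = 0.0f;
    for (float v : data) {
        norm2 += v * v;
    }
    const float norm = std::sqrt(norm2);
    if (norm == 0.0f) {
        return;
    }
    for (float& v : data) {
        v /= norm;
    }
}
```

The zero-norm guard is an addition of this sketch; the original loop divides unconditionally. Note also that dividing every element by the same positive scale does not change the vector's direction, so the normalized result is the same with or without dequantization; dequantizing first mainly matters if the raw feature values are printed or compared directly.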

3. 实际运行效果

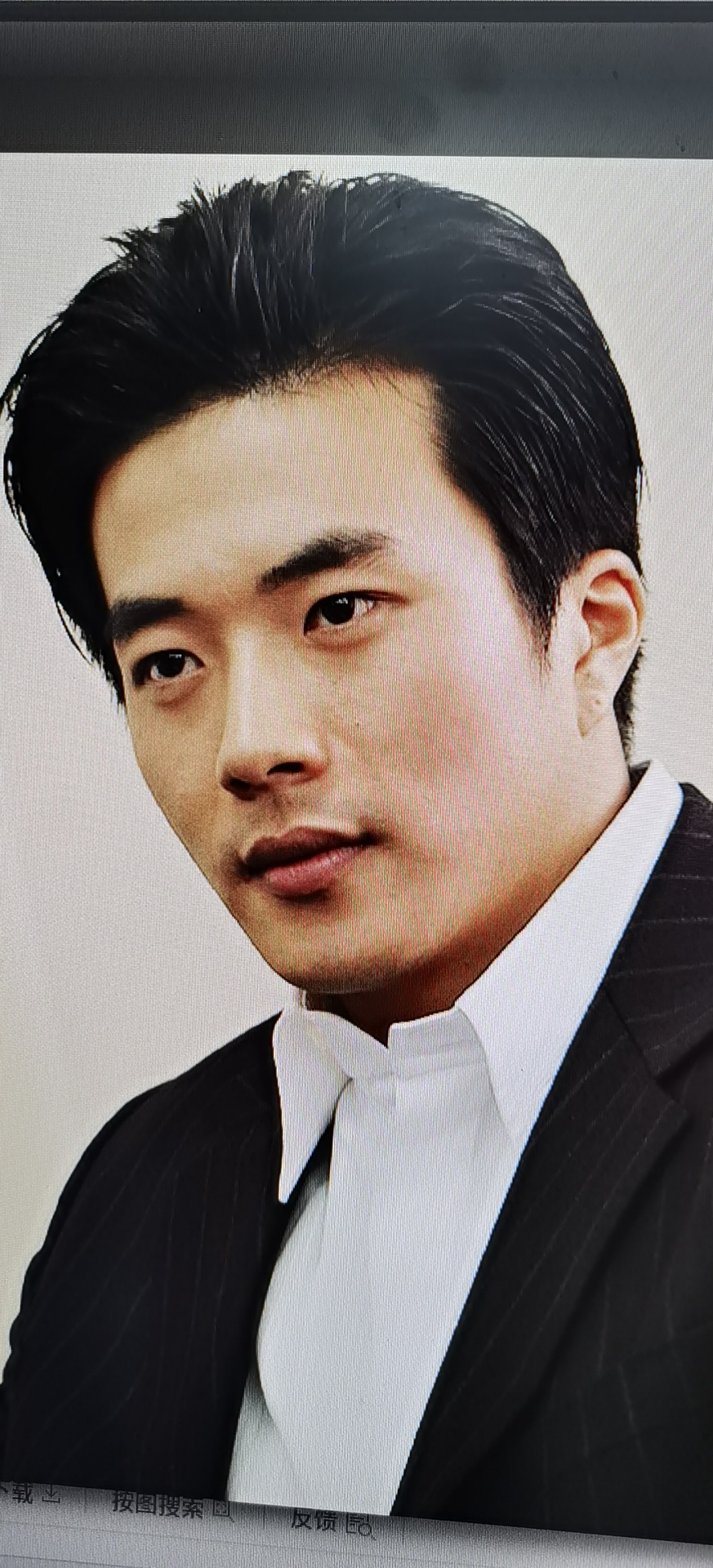

采用底库照片为

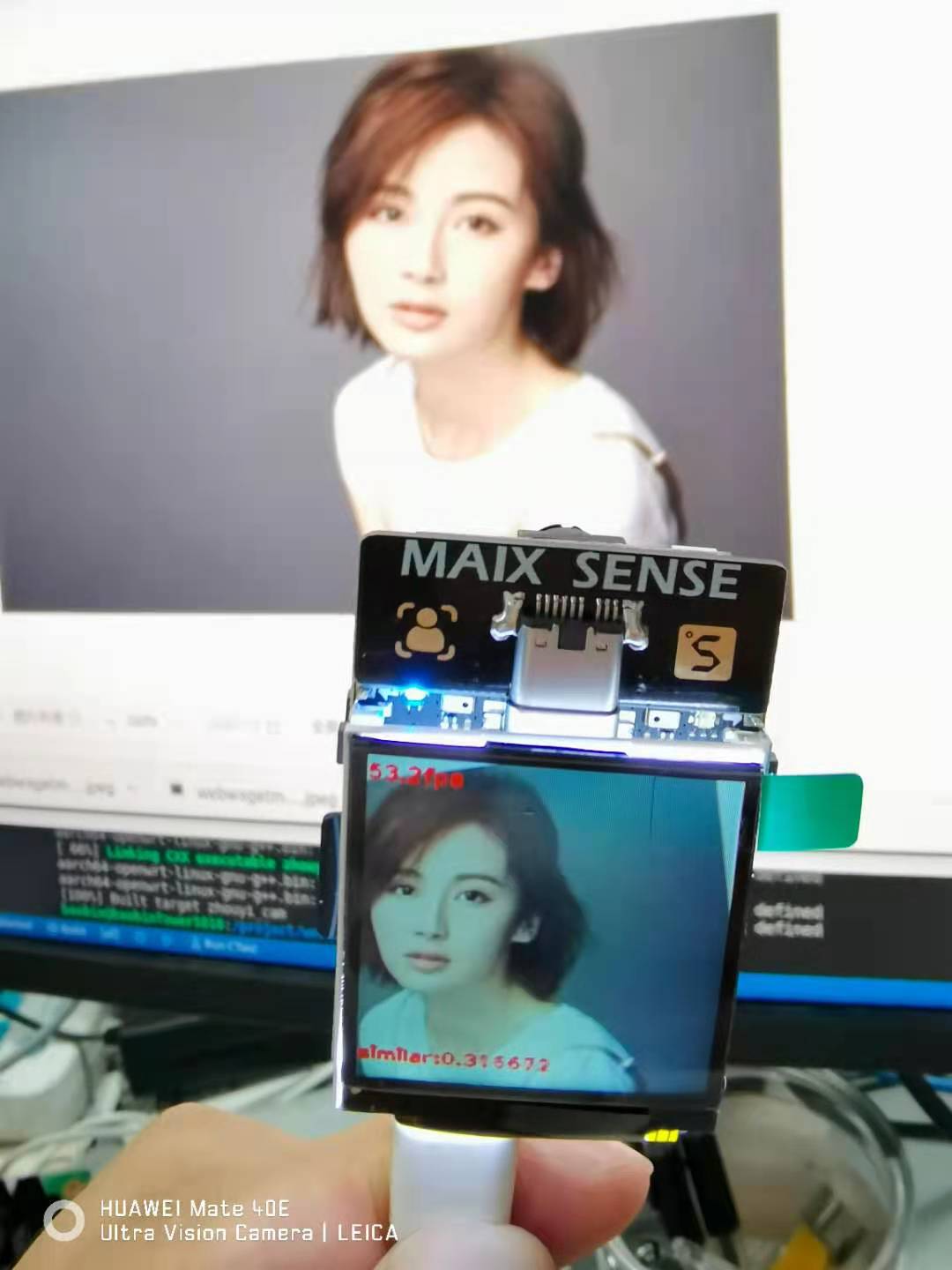

与其他人进行对比分值

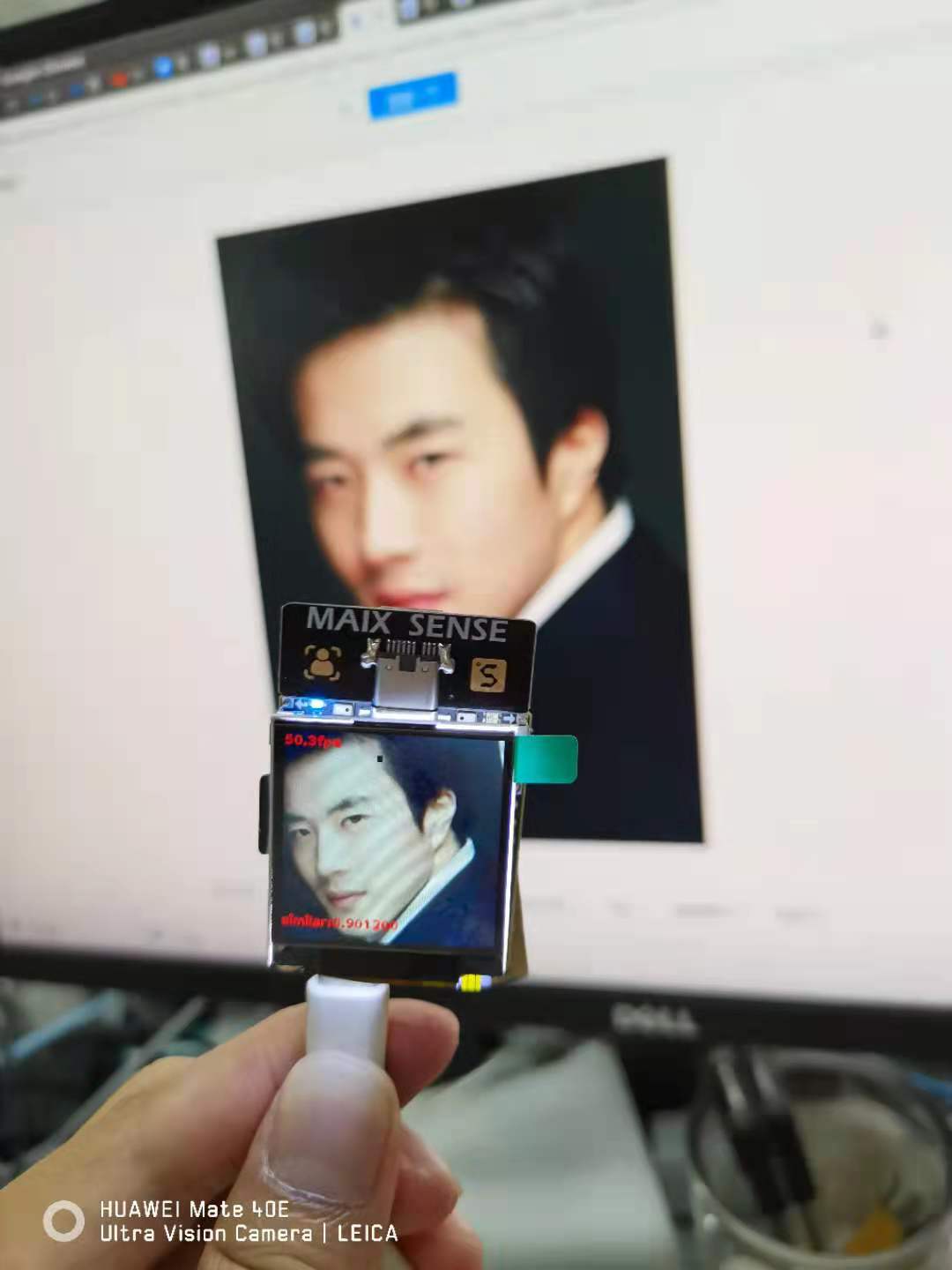

与底库人源进行对比分值
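The comparison scores above are cosine similarities between the live feature and the hard-coded gallery feature. Once both vectors are L2-normalized, that score reduces to a dot product, which can be sketched as follows. The names `cosine_score` and `is_same_person` are hypothetical, and the threshold is an assumption that would have to be tuned on real data; the original post does not state one.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Cosine similarity of two feature vectors that are already unit length:
// for such vectors it equals their dot product.
float cosine_score(const std::vector<float>& probe,
                   const std::vector<float>& gallery) {
    assert(probe.size() == gallery.size());
    float dot = 0.0f;
    for (std::size_t i = 0; i < probe.size(); ++i) {
        dot += probe[i] * gallery[i];
    }
    return dot;
}

// Hypothetical decision rule: accept when the score reaches the threshold.
// The threshold value is not given in the original and must be chosen by the user.
bool is_same_person(float score, float threshold) {
    return score >= threshold;
}
```

A score close to 1 means the two features point in nearly the same direction, and lower scores mean less similar faces.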

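The model's speed is also very satisfying: it reaches 50 fps, which fully meets real-time requirements, and the accuracy matches what I expected from the earlier simulation.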

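4. Future work

I look forward to porting face detection, facial landmark detection, and landmark-based face alignment onto the board as well, to complete the full face recognition pipeline.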