About ICLR 2022

To broaden the diversity of participants at ICLR 2022, we are launching a program specifically to assist underrepresented, underprivileged, and independent researchers, particularly first-time ICLR submitters. We hope this program opens a path for prospective ICLR authors who would not otherwise have considered participating in, or working on a submission to, ICLR: a way to join the ICLR community; find project ideas, collaborators, mentorship, and computational support throughout the submission process; and build valuable connections and gain first-hand training early in their careers.

Our goal is to give underrepresented minorities and independent researchers, especially first-timers, a taste of first-hand research experience within a community, along with a clear target to work towards. To make this possible, we need experienced researchers to join the program and contribute anything from starter ideas, ongoing feedback, and lightweight mentorship all the way to fully engaged collaboration.

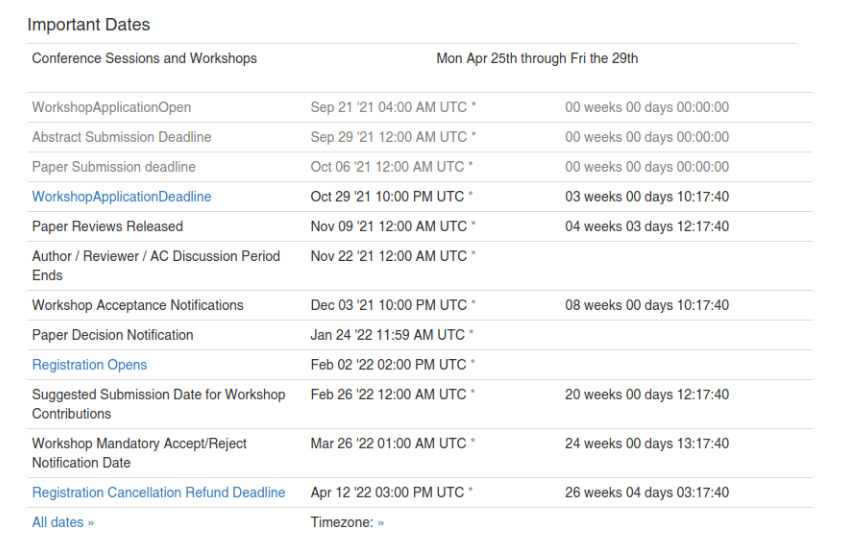

Important Dates

List of Submitted Reinforcement Learning Papers

1. Learning Sampling Policy for Faster Derivative Free Optimization

2. IA-MARL: Imputation Assisted Multi-Agent Reinforcement Learning for Missing Training Data

3. Pessimistic Model Selection for Offline Deep Reinforcement Learning

4. Robust Imitation via Mirror Descent Inverse Reinforcement Learning

5. Optimizing Few-Step Diffusion Samplers by Gradient Descent

6. Offline Pre-trained Multi-Agent Decision Transformer

7. Tesseract: Gradient Flip Score to Secure Federated Learning against Model Poisoning Attacks

8. DreamerPro: Reconstruction-Free Model-Based Reinforcement Learning with Prototypical Representations

9. Skill-based Meta-Reinforcement Learning

10. Decentralized Learning for Overparameterized Problems: A Multi-Agent Kernel Approximation Approach

11. Faster Reinforcement Learning with Value Target Lower Bounding

12. On Distributed Adaptive Optimization with Gradient Compression

13. Learning Two-Step Hybrid Policy for Graph-Based Interpretable Reinforcement Learning

14. DRIBO: Robust Deep Reinforcement Learning via Multi-View Information Bottleneck

15. An Experimental Design Perspective on Exploration in Reinforcement Learning

16. Zero-Shot Reward Specification via Grounded Natural Language

17. Coordinated Attacks Against Federated Learning: A Multi-Agent Reinforcement Learning Approach

18. Generalisation in Lifelong Reinforcement Learning through Logical Composition

19. Distributional Reinforcement Learning with Monotonic Splines

20. Learning Pseudometric-based Action Representations for Offline Reinforcement Learning

21. Efficient Learning of Safe Driving Policy via Human-AI Copilot Optimization

22. Programmatic Reinforcement Learning without Oracles

23. Containerized Distributed Value-Based Multi-Agent Reinforcement Learning

24. PMIC: Improving Multi-Agent Reinforcement Learning with Progressive Mutual Information Collaboration

25. Bi-linear Value Networks for Multi-goal Reinforcement Learning

26. Pareto Policy Pool for Model-based Offline Reinforcement Learning

27. Resmax: An Alternative Soft-Greedy Operator for Reinforcement Learning

28. A Simple Reward-free Approach to Constrained Reinforcement Learning

29. Decentralized Cross-Entropy Method for Model-Based Reinforcement Learning

30. The Remarkable Effectiveness of Combining Policy and Value Networks in A*-based Deep RL for AI Planning

31. COPA: Certifying Robust Policies for Offline Reinforcement Learning against Poisoning Attacks

32. Stochastic Reweighted Gradient Descent

34. EqR: Equivariant Representations for Data-Efficient Reinforcement Learning

35. A First-Order Method for Estimating Natural Gradients for Variational Inference with Gaussians and Gaussian Mixture Models

36. Reinforcement Learning with Predictive Consistent Representations

37. Offline-Online Reinforcement Learning: Extending Batch and Online RL

38. DisTop: Discovering a Topological representation to learn diverse and rewarding skills

39. OVD-Explorer: A General Information-theoretic Exploration Approach for Reinforcement Learning

40. LIGS: Learnable Intrinsic-Reward Generation Selection for Multi-Agent Learning

41. Projective Manifold Gradient Layer for Deep Rotation Regression

42. Learning Invariant Reward Functions through Trajectory Interventions

43. A Relational Intervention Approach for Unsupervised Dynamics Generalization in Model-Based Reinforcement Learning

44. Reachability Traces for Curriculum Design in Reinforcement Learning

45. When Can We Learn General-Sum Markov Games with a Large Number of Players Sample-Efficiently?

46. The Boltzmann Policy Distribution: Accounting for Systematic Suboptimality in Human Models

47. EAT-C: Environment-Adversarial sub-Task Curriculum for Efficient Reinforcement Learning

48. A Communication-Efficient Distributed Gradient Clipping Algorithm for Training Deep Neural Networks

49. The Effects of Reward Misspecification: Mapping and Mitigating Misaligned Models

50. Finding General Equilibria in Many-Agent Economic Simulations using Deep Reinforcement Learning

51. Constructing a Good Behavior Basis for Transfer using Generalized Policy Updates

52. Accelerated Policy Learning with Parallel Differentiable Simulation

53. Learning Transferable Reward for Query Object Localization with Policy Adaptation

54. A Risk-Sensitive Policy Gradient Method

55. Implicit Bias of MSE Gradient Optimization in Underparameterized Neural Networks

56. Know Your Action Set: Learning Action Relations for Reinforcement Learning

57. Multi-agent Performative Prediction: From Global Stability and Optimality to Chaos

58. The Convex Geometry of Backpropagation: Neural Network Gradient Flows Converge to Extreme Points of the Dual Convex Program

59. Variational Wasserstein gradient flow

60. GradMax: Growing Neural Networks using Gradient Information

61. SPLID: Self-Imitation Policy Learning through Iterative Distillation

62. Goal Randomization for Playing Text-based Games without a Reward Function

63. Recycling Model Updates in Federated Learning: Are Gradient Subspaces Low-Rank?

64. Second-Order Rewards For Successor Features

65. StARformer: Transformer with State-Action-Reward Representations

66. Data Sharing without Rewards in Multi-Task Offline Reinforcement Learning

67. MixRL: Data Mixing Augmentation for Regression using Reinforcement Learning

68. Safety-aware Policy Optimisation for Autonomous Racing

69. Reward Learning as Doubly Nonparametric Bandits: Optimal Design and Scaling Laws

70. Low-Precision Stochastic Gradient Langevin Dynamics

71. Autonomous Reinforcement Learning: Formalism and Benchmarking

72. Gradient Assisted Learning

73. A Boosting Approach to Reinforcement Learning

74. Should I Run Offline Reinforcement Learning or Behavioral Cloning?

75. Disentangling Generalization in Reinforcement Learning

76. Latent Variable Sequential Set Transformers for Joint Multi-Agent Motion Prediction

77. Variance Reduced Domain Randomization for Policy Gradient

78. Learning Symmetric Locomotion using Cumulative Fatigue for Reinforcement Learning

79. Reinforcement Learning with Efficient Active Feature Acquisition

80. Xi-learning: Successor Feature Transfer Learning for General Reward Functions

81. On the Implicit Biases of Architecture & Gradient Descent

82. CROP: Certifying Robust Policies for Reinforcement Learning through Functional Smoothing

83. Near-optimal Offline Reinforcement Learning with Linear Representation: Leveraging Variance Information with Pessimism

84. NASViT: Neural Architecture Search for Efficient Vision Transformers with Gradient Conflict aware Supernet Training

85. Transformers are Meta-Reinforcement Learners

86. Modeling Bounded Rationality in Multi-Agent Simulations Using Rationally Inattentive Reinforcement Learning

87. Memory-Constrained Policy Optimization

88. Language Model Pre-training Improves Generalization in Policy Learning

89. Explaining Off-Policy Actor-Critic From A Bias-Variance Perspective

90. SHAQ: Incorporating Shapley Value Theory into Multi-Agent Q-Learning

91. Deep Inverse Reinforcement Learning via Adversarial One-Class Classification

92. Joint Self-Supervised Learning for Vision-based Reinforcement Learning

93. Differentiable Gradient Sampling for Learning Implicit 3D Scene Reconstructions from a Single Image

94. Learning When and What to Ask: a Hierarchical Reinforcement Learning Framework

95. SS-MAIL: Self-Supervised Multi-Agent Imitation Learning

96. Fast Convergence of Optimistic Gradient Ascent in Network Zero-Sum Extensive Form Games

97. Finite-Time Convergence and Sample Complexity of Multi-Agent Actor-Critic Reinforcement Learning with Average Reward

98. Beyond Prioritized Replay: Sampling States in Model-Based Reinforcement Learning via Simulated Priorities

99. DESTA: A Framework for Safe Reinforcement Learning with Markov Games of Intervention

100. Learning State Representations via Retracing in Reinforcement Learning

101. State-Action Joint Regularized Implicit Policy for Offline Reinforcement Learning

103. Asynchronous Multi-Agent Actor-Critic with Macro-Actions

104. Adapting Stepsizes by Momentumized Gradients Improves Optimization and Generalization

105. SparRL: Graph Sparsification via Deep Reinforcement Learning

106. Structure-Aware Transformer Policy for Inhomogeneous Multi-Task Reinforcement Learning

107. Transform2Act: Learning a Transform-and-Control Policy for Efficient Agent Design

108. OSSuM: A Gradient-Free Approach For Pruning Neural Networks At Initialization

109. Adversarial Unlearning of Backdoors via Implicit Hypergradient

110. Mean-Variance Efficient Reinforcement Learning by Expected Quadratic Utility Maximization

111. Task-driven Discovery of Perceptual Schemas for Generalization in Reinforcement Learning

112. Offline Reinforcement Learning for Large Scale Language Action Spaces

113. Generalized Natural Gradient Flows in Hidden Convex-Concave Games and GANs

114. Softmax Gradient Tampering: Decoupling the Backward Pass for Improved Fitting

115. Sample Efficient Deep Reinforcement Learning via Uncertainty Estimation

116. Closed-Loop Control of Additive Manufacturing via Reinforcement Learning

117. Generalizing Few-Shot NAS with Gradient Matching

118. Recurrent Model-Free RL is a Strong Baseline for Many POMDPs

119. THOMAS: Trajectory Heatmap Output with learned Multi-Agent Sampling

120. A First-Occupancy Representation for Reinforcement Learning

121. Reinforcement Learning with Sparse Rewards using Guidance from Offline Demonstration

122. SURF: Semi-supervised Reward Learning with Data Augmentation for Feedback-efficient Preference-based Reinforcement Learning

123. Modular Lifelong Reinforcement Learning via Neural Composition

124. Continuously Discovering Novel Strategies via Reward-Switching Policy Optimization

125. Policy Smoothing for Provably Robust Reinforcement Learning

126. Do Androids Dream of Electric Fences? Safety-Aware Reinforcement Learning with Latent Shielding

127. MARNET: Backdoor Attacks against Value-Decomposition Multi-Agent Reinforcement Learning

128. CoMPS: Continual Meta Policy Search

129. SAFER: Data-Efficient and Safe Reinforcement Learning Through Skill Acquisition

130. Superior Performance with Diversified Strategic Control in FPS Games Using General Reinforcement Learning

131. Benchmarking Sample Selection Strategies for Batch Reinforcement Learning

132. Learning Neural Contextual Bandits through Perturbed Rewards

133. Communicating via Markov Decision Processes

134. Contractive error feedback for gradient compression

135. Influence-Based Reinforcement Learning for Intrinsically-Motivated Agents

136. Quasi-Newton policy gradient algorithms

137. Mitigating Dataset Bias Using Per-Sample Gradients From A Biased Classifier

138. Identifying Interactions among Categorical Predictors with Monte-Carlo Tree Search

139. Pruning Edges and Gradients to Learn Hypergraphs from Larger Sets

140. AARL: Automated Auxiliary Loss for Reinforcement Learning

141. Retrieval-Augmented Reinforcement Learning

142. Reward Uncertainty for Exploration in Preference-based Reinforcement Learning

143. Invariance in Policy Optimisation and Partial Identifiability in Reward Learning

144. Exploring the Robustness of Distributional Reinforcement Learning against Noisy State Observations

145. Dynamics-Aware Comparison of Learned Reward Functions

146. A Hierarchical Bayesian Approach to Inverse Reinforcement Learning with Symbolic Reward Machines

147. Temporal Efficient Training of Spiking Neural Network via Gradient Re-weighting

148. Pessimistic Bootstrapping for Uncertainty-Driven Offline Reinforcement Learning

149. Reinforcement Learning under a Multi-agent Predictive State Representation Model: Method and Theory

150. Data-Driven Evaluation of Training Action Space for Reinforcement Learning

151. Learning Long-Term Reward Redistribution via Randomized Return Decomposition

152. Multi-batch Reinforcement Learning via Sample Transfer and Imitation Learning

153. Reinforcement Learning State Estimation for High-Dimensional Nonlinear Systems

154. CausalDyna: Improving Generalization of Dyna-style Reinforcement Learning via Counterfactual-Based Data Augmentation

155. Reasoning With Hierarchical Symbols: Reclaiming Symbolic Policies For Visual Reinforcement Learning

156. Distributional Perturbation for Efficient Exploration in Distributional Reinforcement Learning

157. PDQN - A Deep Reinforcement Learning Method for Planning with Long Delays: Optimization of Manufacturing Dispatching

158. Value Gradient weighted Model-Based Reinforcement Learning

159. De novo design of protein target specific scaffold-based Inhibitors via Reinforcement Learning

160. Bayesian Framework for Gradient Leakage

161. Prospect Pruning: Finding Trainable Weights at Initialization using Meta-Gradients

162. On the Convergence of the Monte Carlo Exploring Starts Algorithm for Reinforcement Learning

163. Rethinking Pareto Approaches in Constrained Reinforcement Learning

164. Decision Tree Algorithms for MDP

165. Sample Complexity of Offline Reinforcement Learning with Deep ReLU Networks

166. Bayesian Exploration for Lifelong Reinforcement Learning

167. Implicit Bias of Projected Subgradient Method Gives Provable Robust Recovery of Subspaces of Unknown Codimension

168. Occupy & Specify: Investigations into a Maximum Credit Assignment Occupancy Objective for Data-efficient Reinforcement Learning

169. δ²-exploration for Reinforcement Learning

170. An Optics Controlling Environment and Reinforcement Learning Benchmarks

171. Learning to Annotate Part Segmentation with Gradient Matching

172. Structured Stochastic Gradient MCMC

173. Near-Optimal Reward-Free Exploration for Linear Mixture MDPs with Plug-in Solver

174. TransDreamer: Reinforcement Learning with Transformer World Models

175. Information Prioritization through Empowerment in Visual Model-based RL

176. Escaping Saddle Points in Nonconvex Minimax Optimization via Cubic-Regularized Gradient Descent-Ascent

177. Adaptive Behavior Cloning Regularization for Stable Offline-to-Online Reinforcement Learning

178. Neural Networks Playing Dough: Investigating Deep Cognition With a Gradient-Based Adversarial Attack

179. Continual Backprop: Stochastic Gradient Descent with Persistent Randomness

180. HyAR: Addressing Discrete-Continuous Action Reinforcement Learning via Hybrid Action Representation

181. TRGP: Trust Region Gradient Projection for Continual Learning

182. Ensemble Kalman Filter (EnKF) for Reinforcement Learning (RL)

183. Learning by Directional Gradient Descent

184. Revisiting Design Choices in Offline Model Based Reinforcement Learning

185. Learning Generalizable Representations for Reinforcement Learning via Adaptive Meta-learner of Behavioral Similarities

186. Multi-Agent Reinforcement Learning with Shared Resource in Inventory Management

187. SPP-RL: State Planning Policy Reinforcement Learning

188. Loss Function Learning for Domain Generalization by Implicit Gradient

189. Hypothesis Driven Coordinate Ascent for Reinforcement Learning

190. Weakly-Supervised Learning of Disentangled and Interpretable Skills for Hierarchical Reinforcement Learning

191. The guide and the explorer: smart agents for resource-limited iterated batch reinforcement learning

192. Offline Reinforcement Learning with Resource Constrained Online Deployment

193. Orchestrated Value Mapping for Reinforcement Learning

194. Spatial Graph Attention and Curiosity-driven Policy for Antiviral Drug Discovery

195. Exploring unfairness in Integrated Gradients based attribution methods

196. Fourier Features in Reinforcement Learning with Neural Networks

197. COptiDICE: Offline Constrained Reinforcement Learning via Stationary Distribution Correction Estimation

198. IntSGD: Adaptive Floatless Compression of Stochastic Gradients

199. Gradient Information Matters in Policy Optimization by Back-propagating through Model

200. Momentum as Variance-Reduced Stochastic Gradient

201. Optimizing Neural Networks with Gradient Lexicase Selection

202. Deep Reinforcement Learning for Equal Risk Option Pricing and Hedging under Dynamic Expectile Risk Measures

203. Depth Without the Magic: Inductive Bias of Natural Gradient Descent

204. A General Analysis of Example-Selection for Stochastic Gradient Descent

205. GrASP: Gradient-Based Affordance Selection for Planning

206. The Power of Exploiter: Provable Multi-Agent RL in Large State Spaces

207. Learning Altruistic Behaviours in Reinforcement Learning without External Rewards

208. Can Stochastic Gradient Langevin Dynamics Provide Differential Privacy for Deep Learning?

209. Feudal Reinforcement Learning by Reading Manuals

210. Offline Reinforcement Learning with Value-based Episodic Memory

211. Pareto Navigation Gradient Descent: a First Order Algorithm for Optimization in Pareto Set

212. Avoiding Overfitting to the Importance Weights in Offline Policy Optimization

213. Gradient Imbalance and solution in Online Continual learning

214. Communication-Efficient Actor-Critic Methods for Homogeneous Markov Games

215. Fully Decentralized Model-based Policy Optimization with Networked Agents

216. Combinatorial Reinforcement Learning Based Scheduling for DNN Execution on Edge

217. Reinforcement Learning with Ex-Post Max-Min Fairness

218. Semi-supervised Offline Reinforcement Learning with Pre-trained Decision Transformers

219. Evolutionary Diversity Optimization with Clustering-based Selection for Reinforcement Learning

220. Online Target Q-learning with Reverse Experience Replay: Efficiently finding the Optimal Policy for Linear MDPs

221. Gradient flows on the feature-Gaussian manifold

222. Multi-Agent Language Learning: Symbolic Mapping

223. An Equivalence Between Data Poisoning and Byzantine Gradient Attacks

224. EE-Net: Exploitation-Exploration Neural Networks in Contextual Bandits

225. Pareto Policy Adaptation

226. Differentially Private SGD with Sparse Gradients

227. A Principled Permutation Invariant Approach to Mean-Field Multi-Agent Reinforcement Learning

228. Improving zero-shot generalization in offline reinforcement learning using generalized similarity functions

230. Decentralized Cooperative Multi-Agent Reinforcement Learning with Exploration

231. Global Convergence of Multi-Agent Policy Gradient in Markov Potential Games

232. Learning Multi-Objective Curricula for Deep Reinforcement Learning

233. COMBO: Conservative Offline Model-Based Policy Optimization

234. Provable Hierarchy-Based Meta-Reinforcement Learning

235. The Manifold Hypothesis for Gradient-Based Explanations

236. Gradient Step Denoiser for convergent Plug-and-Play

237. Model-Based Offline Meta-Reinforcement Learning with Regularization

238. Gradient-based Hyperparameter Optimization without Validation Data for Learning from Limited Labels

239. Sample-efficient actor-critic algorithms with an etiquette for zero-sum Markov games

240. Causal Reinforcement Learning using Observational and Interventional Data

241. MOBA: Multi-teacher Model Based Reinforcement Learning

242. Charformer: Fast Character Transformers via Gradient-based Subword Tokenization

243. Surprise Minimizing Multi-Agent Learning with Energy-based Models

244. Learning Temporally-Consistent Representations for Data-Efficient Reinforcement Learning

245. Deep Ensemble Policy Learning

246. Block Contextual MDPs for Continual Learning

247. Jointly Learning Identification and Control for Few-Shot Policy Adaptation

248. Continuous Control With Ensemble Deep Deterministic Policy Gradients

249. Safe Opponent-Exploitation Subgame Refinement

250. Understanding and Preventing Capacity Loss in Reinforcement Learning

251. Adaptive Q-learning for Interaction-Limited Reinforcement Learning

252. Reinforcement Learning in Presence of Discrete Markovian Context Evolution

253. LPMARL: Linear Programming based Implicit Task Assignment for Hierarchical Multi-Agent Reinforcement Learning

254. Local Feature Swapping for Generalization in Reinforcement Learning

255. Orthogonalising gradients to speedup neural network optimisation

256. AI-SARAH: Adaptive and Implicit Stochastic Recursive Gradient Methods

257. Self-consistent Gradient-like Eigen Decomposition in Solving Schrödinger Equations

258. Gradient Explosion and Representation Shrinkage in Infinite Networks

259. CoBERL: Contrastive BERT for Reinforcement Learning

260. Koopman Q-learning: Offline Reinforcement Learning via Symmetries of Dynamics

261. Adversarial Style Transfer for Robust Policy Optimization in Reinforcement Learning

262. Gradient Broadcast Adaptation: Defending against the backdoor attack in pre-trained models

263. Shaped Rewards Bias Emergent Language

264. Learning to Coordinate in Multi-Agent Systems: A Coordinated Actor-Critic Algorithm and Finite-Time Guarantees

265. On Covariate Shift of Latent Confounders in Imitation and Reinforcement Learning

266. Knowledge is reward: Learning optimal exploration by predictive reward cashing

267. Gradient-based Counterfactual Explanations using Tractable Probabilistic Models

268. Did I do that? Blame as a means to identify controlled effects in reinforcement learning

269. Rewardless Open-Ended Learning (ROEL)

270. Offline Reinforcement Learning with In-sample Q-Learning

271. Plan Better Amid Conservatism: Offline Multi-Agent Reinforcement Learning with Actor Rectification

272. Model-Invariant State Abstractions for Model-Based Reinforcement Learning

273. Assessing Deep Reinforcement Learning Policies via Natural Corruptions at the Edge of Imperceptibility

274. Maximizing Ensemble Diversity in Deep Reinforcement Learning

275. Theoretical understanding of adversarial reinforcement learning via mean-field optimal control

276. Auto-Encoding Inverse Reinforcement Learning

277. Multi-Agent MDP Homomorphic Networks

278. DARA: Dynamics-Aware Reward Augmentation in Offline Reinforcement Learning

279. http://deeprl.neurondance.com/d/451-iclr-2022458

280. ToM2C: Target-oriented Multi-agent Communication and Cooperation with Theory of Mind

281. Mirror Descent Policy Optimization

282. Embedded-model flows: Combining the inductive biases of model-free deep learning and explicit probabilistic modeling

283. Multi-Agent Decentralized Belief Propagation on Graphs

284. On-Policy Model Errors in Reinforcement Learning

285. Learning a subspace of policies for online adaptation in Reinforcement Learning

286. Detecting Worst-case Corruptions via Loss Landscape Curvature in Deep Reinforcement Learning

287. Policy Gradients Incorporating the Future

288. Bregman Gradient Policy Optimization

289. Interacting Contour Stochastic Gradient Langevin Dynamics

290. Half-Inverse Gradients for Physical Deep Learning

291. Constrained Policy Optimization via Bayesian World Models

292. Local Patch AutoAugment with Multi-Agent Collaboration

293. Flow-based Recurrent Belief State Learning for POMDPs

294. Pessimistic Model-based Offline Reinforcement Learning under Partial Coverage

295. A Reinforcement Learning Environment for Mathematical Reasoning via Program Synthesis

296. Exploiting Minimum-Variance Policy Evaluation for Policy Optimization

297. Edge Rewiring Goes Neural: Boosting Network Resilience via Policy Gradient

298. Delayed Geometric Discounts: An alternative criterion for Reinforcement Learning

299. Multi-Agent Constrained Policy Optimisation

300. A Generalised Inverse Reinforcement Learning Framework

301. RL-DARTS: Differentiable Architecture Search for Reinforcement Learning

302. Representation Learning for Online and Offline RL in Low-rank MDPs

303. P4O: Efficient Deep Reinforcement Learning with Predictive Processing Proximal Policy Optimization

304. Equivariant Reinforcement Learning

305. Stability and Generalisation in Batch Reinforcement Learning

306. Bolstering Stochastic Gradient Descent with Model Building

307. Efficient Wasserstein and Sinkhorn Policy Optimization

308. MURO: Deployment Constrained Reinforcement Learning with Model-based Uncertainty Regularized Batch Optimization

309. Continual Learning with Recursive Gradient Optimization

310. Learning Pessimism for Robust and Efficient Off-Policy Reinforcement Learning

311. The Information Geometry of Unsupervised Reinforcement Learning

312. On Reward Maximization and Distribution Matching for Fine-Tuning Language Models

313. Task-oriented Dialogue System for Automatic Disease Diagnosis via Hierarchical Reinforcement Learning

314. NeuRL: Closed-form Inverse Reinforcement Learning for Neural Decoding

315. Mismatched No More: Joint Model-Policy Optimization for Model-Based RL

316. Evading Adversarial Example Detection Defenses with Orthogonal Projected Gradient Descent

317. TAG: Task-based Accumulated Gradients for Lifelong learning

318. An Improved Composite Functional Gradient Learning by Wasserstein Regularization for Generative adversarial networks

319. Learning to Shape Rewards using a Game of Two Partners

320. Transfer RL across Observation Feature Spaces via Model-Based Regularization

321. On Lottery Tickets and Minimal Task Representations in Deep Reinforcement Learning

322. Metrics Matter: A Closer Look on Self-Paced Reinforcement Learning

323. A Reduction-Based Framework for Conservative Bandits and Reinforcement Learning

324. Experience Replay More When It's a Key Transition in Deep Reinforcement Learning

325. Graph-Enhanced Exploration for Goal-oriented Reinforcement Learning

326. Model-Based Robust Adaptive Semantic Segmentation

327. AQUILA: Communication Efficient Federated Learning with Adaptive Quantization of Lazily-Aggregated Gradients

328. Nested Policy Reinforcement Learning for Clinical Decision Support

329. Particle Based Stochastic Policy Optimization

330. Partial Information as Full: Reward Imputation with Sketching in Bandits

331. Personalized Heterogeneous Federated Learning with Gradient Similarity

332. Evaluating Model-Based Planning and Planner Amortization for Continuous Control

333. Disentangling Sources of Risk for Distributional Multi-Agent Reinforcement Learning

334. SGDEM: stochastic gradient descent with energy and momentum

335. Mastering Visual Continuous Control: Improved Data-Augmented Reinforcement Learning

336. DAAS: Differentiable Architecture and Augmentation Policy Search

337. Towards General Function Approximation in Zero-Sum Markov Games

338. Variational oracle guiding for reinforcement learning

339. HyperDQN: A Randomized Exploration Method for Deep Reinforcement Learning

340. Uncertainty Regularized Policy Learning for Offline Reinforcement Learning

341. Towards Safe Reinforcement Learning via Constraining Conditional Value-at-Risk

342. ZeroSARAH: Efficient Nonconvex Finite-Sum Optimization with Zero Full Gradient Computations

343. EVaDE: Event-Based Variational Thompson Sampling for Model-Based Reinforcement Learning

344. DR3: Value-Based Deep Reinforcement Learning Requires Explicit Regularization

345. Divide and Explore: Multi-Agent Separate Exploration with Shared Intrinsic Motivations

346. Accelerated Gradient-Free Method for Heavily Constrained Nonconvex Optimization

347. Role Diversity Matters: A Study of Cooperative Training Strategies for Multi-Agent RL

348. CUP: A Conservative Update Policy Algorithm for Safe Reinforcement Learning

349. Novel Policy Seeking with Constrained Optimization

350. Model-based Reinforcement Learning with a Hamiltonian Canonical ODE Network

351. Greedy-based Value Representation for Efficient Coordination in Multi-agent Reinforcement Learning

352. Reward Shifting for Optimistic Exploration and Conservative Exploitation

353. RotoGrad: Gradient Homogenization in Multitask Learning

354. Sequential Reptile: Inter-Task Gradient Alignment for Multilingual Learning

355. Sample Efficient Stochastic Policy Extragradient Algorithm for Zero-Sum Markov Game

356. Which model to trust: assessing the influence of models on the performance of reinforcement learning algorithms for continuous control tasks

357. Model-based Reinforcement Learning with Ensembled Model-value Expansion

358. Generative Planning for Temporally Coordinated Exploration in Reinforcement Learning

359. The Geometry of Memoryless Stochastic Policy Optimization in Infinite-Horizon POMDPs

360. Fishr: Invariant Gradient Variances for Out-of-distribution Generalization

361. Can Reinforcement Learning Efficiently Find Stackelberg-Nash Equilibria in General-Sum Markov Games?

362. POETREE: Interpretable Policy Learning with Adaptive Decision Trees

363. Imitation Learning by Reinforcement Learning

364. Actor-Critic Policy Optimization in a Large-Scale Imperfect-Information Game

365. Hinge Policy Optimization: Rethinking Policy Improvement and Reinterpreting PPO

366. Relative Entropy Gradient Sampler for Unnormalized Distributions

367. Sequential Communication in Multi-Agent Reinforcement Learning

368. BioLCNet: Reward-modulated Locally Connected Spiking Neural Networks

369. ScheduleNet: Learn to solve multi-agent scheduling problems with reinforcement learning

370. Towards Deployment-Efficient Reinforcement Learning: Lower Bound and Optimality

371. Optimistic Policy Optimization is Provably Efficient in Non-stationary MDPs

372. The Infinite Contextual Graph Markov Model

373. Align-RUDDER: Learning From Few Demonstrations by Reward Redistribution

374. Plan Your Target and Learn Your Skills: State-Only Imitation Learning via Decoupled Policy Optimization

375. Gradient Matching for Domain Generalization

376. Interpreting Reinforcement Policies through Local Behaviors

377. DSDF: Coordinated look-ahead strategy in stochastic multi-agent reinforcement learning

378. WaveCorr: Deep Reinforcement Learning with Permutation Invariant Policy Networks for Portfolio Management

379. Self-Supervised Structured Representations for Deep Reinforcement Learning

380. Gradient-based Meta-solving and Its Applications to Iterative Methods for Solving Differential Equations

381. On Multi-objective Policy Optimization as a Tool for Reinforcement Learning: Case Studies in Offline RL and Finetuning

382. Dropout Q-Functions for Doubly Efficient Reinforcement Learning

383. How memory architecture affects learning in a simple POMDP: the two-hypothesis testing problem

384. Meta Attention For Off-Policy Actor-Critic

385. A General Theory of Relativity in Reinforcement Learning

386. An Attempt to Model Human Trust with Reinforcement Learning

387. Neural Markov Controlled SDE: Stochastic Optimization for Continuous-Time Data

388. Boosted Curriculum Reinforcement Learning

389. Understanding the Generalization Gap in Visual Reinforcement Learning

390. Towards Learning to Speak and Hear Through Multi-Agent Communication over a Continuous Acoustic Channel

391. Reinforcement Learning for Adaptive Mesh Refinement

392. Offline Meta-Reinforcement Learning with Online Self-Supervision

393. Gradient Importance Learning for Incomplete Observations

394. Model-Based Opponent Modeling

395. Online Tuning for Offline Decentralized Multi-Agent Reinforcement Learning

396. Hindsight Foresight Relabeling for Meta-Reinforcement Learning

397. On the Global Convergence of Gradient Descent for multi-layer ResNets in the mean-field regime

398. Directional Bias Helps Stochastic Gradient Descent to Generalize in Nonparametric Model

399. Online Hyperparameter Meta-Learning with Hypergradient Distillation

400. FedPAGE: A Fast Local Stochastic Gradient Method for Communication-Efficient Federated Learning

401. Evolution Strategies as an Alternate Learning method for Hierarchical Reinforcement Learning

402. Gradient-Guided Importance Sampling for Learning Discrete Energy-Based Models

403. Global Convergence and Stability of Stochastic Gradient Descent

404. Learning Controllable Elements Oriented Representations for Reinforcement Learning

405. Off-Policy Reinforcement Learning with Delayed Rewards

406. TempoRL: Temporal Priors for Exploration in Off-Policy Reinforcement Learning

407. Efficient Reinforcement Learning Experimentation in PyTorch

408. Learning Synthetic Environments and Reward Networks for Reinforcement Learning

409. ACCTS: an Adaptive Model Training Policy for Continuous Classification of Time Series

410. Polyphonic Music Composition: An Adversarial Inverse Reinforcement Learning Approach

411. Trust Region Policy Optimisation in Multi-Agent Reinforcement Learning

412. Self Reward Design with Fine-grained Interpretability

414. MT-GBM: A Multi-Task Gradient Boosting Machine with Shared Decision Trees

415. Gradient Boosting Neural Networks: GrowNet

416. Towards Scheduling Federated Deep Learning using Meta-Gradients for Inter-Hospital Learning

417. Learning Explicit Credit Assignment for Multi-agent Joint Q-learning

418. Offline Decentralized Multi-Agent Reinforcement Learning

419. Physical Gradients for Deep Learning

420. Policy improvement by planning with Gumbel

421. Batch size-invariance for policy optimization

422. Divergence-Regularized Multi-Agent Actor-Critic

423. Generalized Maximum Entropy Reinforcement Learning via Reward Shaping

424. Your value network can't evaluate every policy: A case of divergence in TD learning

425. Finite-Time Performance of Two-Timescale Networked Actor-Critic in Multi-Agent Reinforcement Learning

426. Quasi-periodic attractors carry stable gradients

427. Unsupervised fashion for controllable generations: A manifold control of latent feature using contrastive alignment and self-organizing rewards

428. Recommending Actions to Improve Engagement for Diabetes Management using Off-Policy Learning

429. Policy Advantage Networks

430. Offline Workflows for Offline Reinforcement Learning Algorithms

431. On the convergence of stochastic extragradient for bilinear games using restarted iteration averaging

432. A Multi-Agent Koopman Operator Approach for Distributed Representation Learning of Networked Dynamical Systems

433. https://openreview.net/forum?id=IsXm6pxytcz

434. Modeling and Understanding Multi-Agent Behavior with Transformers

435. Gap-Dependent Unsupervised Exploration for Reinforcement Learning

436. Modeling the program of the world in reinforcement learning

437. Exploring Reward Surfaces in Reinforcement Learning Environments

438. TENGraD: Time-Efficient Natural Gradient Descent with Exact Fisher-block Inversion

439. Eliminating Estimation Bias in Deep Deterministic Policy Gradient By Co-Regularization

440. Peering Beyond the Gradient Veil with Efficient Distributed Auto Differentiation

441. Federated Reinforcement Learning for Task Offloading in Mobile Edge Computing Networks

442. Controlling Generalization Error via Gaussian Width Gradient Complexity

443. Few-Shot Learning by Dimensionality Reduction in Gradient Space

444. Risk-sensitive Multi-agent Reinforcement Learning over adaptive networks

445. Coordinated Policy Factorization

446. What About Inputing Policy in Value Function: Policy Representation and Policy-extended Value Function Approximator

447. Modal-balanced training strategy by gradient adjustment and artificial Gauss noise enhancement

448. Foresight Constrained Subgoal Space for Hierarchical Reinforcement Learning via Local Imagination and Evolutionary Probing

449. Rethinking Gradient Weight's Influence over Saliency Map Estimation

450. Lifelong Learning of Skills with Fast and Slow Reinforcement Learning

451. Model-Based Offline RL with Online Adaptation

452. Action-Transferable Policy Guided by Natural Language

453. Example-Based Offline Reinforcement Learning without Rewards

454. Multi-Agent Reinforcement Learning Double Auction Design for Online Advertising Recommender Systems

455. Research on fusion algorithm of multi-attribute decision making and reinforcement learning based on intuitionistic fuzzy number in wargame environment

456. Greedy Multi-Step Off-policy Reinforcement Learning

457. Corruption Robust Reinforcement Learning with General Function Approximation

458. Revisiting the Monotonicity Constraint in Cooperative Multi-Agent Reinforcement Learning

Original source: http://deeprl.neurondance.com/d/451-iclr-2022458

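We focus on cutting-edge deep reinforcement learning techniques, papers, frameworks, and learning roadmaps. Follow our WeChat official account for more.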

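For more curated deep reinforcement learning content, follow the Deep Reinforcement Learning Lab (深度强化学习实验室) column. For submissions, contact WeChat 1946738842.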