In the previous article, we walked through the source code of Tengine's API.

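That article was long, and I know most readers won't want to spend that much time on it. Still, real deployments inevitably run into all kinds of problems. If you already understand the underlying implementation, you can quickly trace a problem to its source, file an issue, and discuss it with 虫叔 (Uncle Chong). I think that is a solid learning path too.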

Also, when debugging, you can use the explanations in this series as supplementary documentation alongside the official docs.

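Once the source-reading part of this series wraps up, I plan to write a few articles on deployment in practice, to put the theory to work. This time for sure, no bailing.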

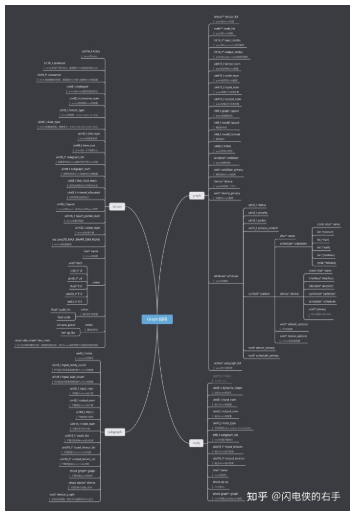

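Back to the topic. As before, I'll start with the mind map of the Graph-related structs: graph, node and tensor. Anyone who has studied data structures can probably guess the relationships from the names alone:

- A graph is made up of many nodes.
- Each node is an operator that processes tensors.
- Tensors carry the data flowing into and out of each node, including the network's inputs and outputs.
- The whole network is one graph.

When the graph is deployed on an accelerator, the engine may split it into several subgraphs, and each subgraph can get a different speedup on a different device. Hats off to the Tengine team for all the hard work!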
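To make these relationships concrete, here is a minimal sketch in C under a heavily simplified model. Nodes and tensors live in arrays owned by the graph and refer to each other by index, the way the `producer`, `consumer`, `input_tensors` and `output_tensors` fields do in the Tengine code below. The `toy_*` types and functions are hypothetical. They use fixed-size arrays where Tengine grows its lists with `sys_realloc`.

```c
#include <assert.h>

/* Hypothetical, heavily simplified stand-ins for ir_tensor_t / ir_node_t / ir_graph_t.
 * Fixed-size arrays keep the sketch short; Tengine grows its lists dynamically. */
#define TOY_MAX 8

typedef struct {
    int index;              /* position in graph->tensors */
    int producer;           /* index of the node that writes it, -1 if none */
    int consumer[TOY_MAX];  /* indices of the nodes that read it */
    int consumer_num;
} toy_tensor_t;

typedef struct {
    int index;              /* position in graph->nodes */
    int input_tensors[TOY_MAX];
    int input_num;
    int output_tensors[TOY_MAX];
    int output_num;
} toy_node_t;

typedef struct {
    toy_tensor_t tensors[TOY_MAX];
    int tensor_num;
    toy_node_t nodes[TOY_MAX];
    int node_num;
} toy_graph_t;

/* Appends a tensor with no producer and no consumers; returns its index. */
static int toy_add_tensor(toy_graph_t* g)
{
    assert(g->tensor_num < TOY_MAX);
    toy_tensor_t* t = &g->tensors[g->tensor_num];
    t->index = g->tensor_num;
    t->producer = -1;
    t->consumer_num = 0;
    return g->tensor_num++;
}

/* Appends a node that reads tensor `in` and writes tensor `out`,
 * recording the link on both the node side and the tensor side. */
static int toy_add_node(toy_graph_t* g, int in, int out)
{
    assert(g->node_num < TOY_MAX);
    toy_node_t* n = &g->nodes[g->node_num];
    n->index = g->node_num;
    n->input_num = 0;
    n->output_num = 0;
    n->input_tensors[n->input_num++] = in;
    n->output_tensors[n->output_num++] = out;

    toy_tensor_t* tin = &g->tensors[in];
    assert(tin->consumer_num < TOY_MAX);
    tin->consumer[tin->consumer_num++] = n->index; /* node consumes `in` */
    g->tensors[out].producer = n->index;          /* node produces `out` */
    return g->node_num++;
}
```

Indices are compact (16-bit integers in the Tengine code below). They map directly onto positions in the graph's lists, which keeps lookups like `get_ir_graph_tensor(graph, index)` trivial.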

Below are the more important related APIs and their implementations.

Tensor-related

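- init_ir_tensor: initializes every field of a tensor

- create_ir_tensor: creates a tensor named tensor_name in the graph

- create_ir_tensor_name_from_index: builds a tensor name from an index; at its core this is return "tensor_" + str(index)

- get_ir_tensor_index_from_name: looks up a tensor's index in the graph by its name

- dump_ir_tensor: prints tensor information for debugging

- set_ir_tensor_consumer: registers a node as a consumer of the tensor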
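The fast path in get_ir_tensor_index_from_name depends on auto-generated names that end in `_<index>`. Below is a minimal, self-contained sketch of the same two-step lookup over a plain array of names, assuming a `"tensor_%d"`-style naming scheme. `toy_make_name` and `toy_find_index` are hypothetical helpers, not Tengine functions. Because `atoi` skips leading whitespace, the fast path also works for the space-padded `"tensor_%7d"` names that Tengine generates.

```c
#include <assert.h>
#include <stdio.h>
#include <stdlib.h>
#include <string.h>

/* Writes an auto-generated name such as "tensor_2" into buf (hypothetical helper). */
static void toy_make_name(char* buf, size_t size, int index)
{
    snprintf(buf, size, "tensor_%d", index);
}

/* Returns the position of `name` in names[0..num), or -1 if it is absent.
 * Fast path: parse the digits after the last '_' and check that slot first.
 * Slow path: linear scan, needed for user-supplied names like "conv1_out". */
static int toy_find_index(const char* names[], int num, const char* name)
{
    assert(name != NULL && (names != NULL || num == 0));

    const char* last = strrchr(name, '_');
    if (last != NULL)
    {
        int index = atoi(last + 1); /* atoi skips leading spaces; yields 0 for non-digits */
        if (0 <= index && index < num && names[index] != NULL && strcmp(names[index], name) == 0)
            return index;
    }

    for (int i = 0; i < num; i++)
    {
        if (names[i] != NULL && strcmp(names[i], name) == 0)
            return i;
    }
    return -1;
}
```

A user-supplied name such as `conv1_out` still resolves. The text after the last underscore does not lead to a matching slot, so the lookup falls back to the linear scan.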

```c
// Initialize every field of a tensor
void init_ir_tensor(ir_tensor_t* ir_tensor, int tensor_index, int data_type)
{
    ir_tensor->index = tensor_index; // tensor id
    ir_tensor->producer = -1;        // no producer yet

    // Allocate TE_MAX_CONSUMER_NUM consumer slots and mark each one as unused (-1)
    ir_tensor->consumer = (int16_t*)sys_malloc(sizeof(int16_t) * TE_MAX_CONSUMER_NUM);
    for (int i = 0; i < TE_MAX_CONSUMER_NUM; i++)
    {
        ir_tensor->consumer[i] = -1;
    }

    // Default values for the remaining fields
    ir_tensor->reshaped = 0;
    ir_tensor->consumer_num = 0;
    ir_tensor->tensor_type = TENSOR_TYPE_VAR;
    ir_tensor->data_type = data_type;
    ir_tensor->dim_num = 0;
    ir_tensor->elem_size = get_tenser_element_size(data_type);
    ir_tensor->subgraph_num = 0;
    ir_tensor->free_host_mem = 0;
    ir_tensor->internal_allocated = 1;
    ir_tensor->layout = TENGINE_LAYOUT_NCHW;
    ir_tensor->quant_param_num = 0;
    ir_tensor->elem_num = 0;

    for (int i = 0; i < MAX_SHAPE_DIM_NUM; i++)
    {
        ir_tensor->dims[i] = 0;
    }

    ir_tensor->data = NULL;
    ir_tensor->name = NULL;
    ir_tensor->scale_list = NULL;
    ir_tensor->zp_list = NULL;
    ir_tensor->dev_mem = NULL;
    ir_tensor->subgraph_list = NULL;
}

// Create a tensor named tensor_name in the graph
ir_tensor_t* create_ir_tensor(ir_graph_t* ir_graph, const char* tensor_name, int data_type)
{
    // Allocate the tensor itself
    ir_tensor_t* ir_tensor = (ir_tensor_t*)sys_malloc(sizeof(ir_tensor_t));
    if (NULL == ir_tensor)
    {
        return NULL;
    }

    // Initialize all fields; the new tensor's id is the current graph->tensor_num
    init_ir_tensor(ir_tensor, ir_graph->tensor_num, data_type);

    // The tensor's layout follows the graph's layout
    ir_tensor->layout = ir_graph->graph_layout;

    // Grow the graph's tensor list by one slot
    ir_tensor_t** new_tensor_list = (ir_tensor_t**)sys_realloc(ir_graph->tensor_list, sizeof(ir_tensor_t*) * (ir_graph->tensor_num + 1));
    if (NULL == new_tensor_list)
    {
        sys_free(ir_tensor);
        return NULL;
    }

    // If a name was given, allocate an aligned buffer for it and point tensor->name at it
    if (NULL != tensor_name)
    {
        const int str_length = align((int)strlen(tensor_name) + 1, TE_COMMON_ALIGN_SIZE);
        ir_tensor->name = (char*)sys_malloc(str_length);
        if (NULL == ir_tensor->name)
        {
            // Caveat: on this path the grown list is not stored back into
            // ir_graph->tensor_list, which may still point at a block realloc already freed
            sys_free(ir_tensor);
            return NULL;
        }

        // Copy tensor_name into tensor->name
        memset(ir_tensor->name, 0, str_length);
        strcpy(ir_tensor->name, tensor_name);
    }

    // Store the new tensor in the last slot of the new list
    new_tensor_list[ir_graph->tensor_num] = ir_tensor;

    // Point the graph at the new list and increment the tensor count
    ir_graph->tensor_list = new_tensor_list;
    ir_graph->tensor_num++;

    return ir_tensor;
}

// Build a tensor name from an index; at its core this is "tensor_" + str(index).
// Note that "%7d" right-aligns the index in a 7-character field padded with spaces.
char* create_ir_tensor_name_from_index(int index)
{
    char* name = (char*)sys_malloc(TE_COMMON_ALIGN_SIZE * 2);
    if (NULL == name)
    {
        return NULL;
    }

    sprintf(name, "tensor_%7d", index);

    return name;
}

// Look up a tensor's index in the graph by its name
int get_ir_tensor_index_from_name(ir_graph_t* graph, const char* tensor_name)
{
    // For tensor_name "tensor_2", last_symbol_ptr points at "_2"
    const char* last_symbol_ptr = strrchr(tensor_name, '_');

    // If tensor_name has the form "tensor_" + str(index), the index can be split off directly
    if (NULL != last_symbol_ptr)
    {
        // Here the index would be 2
        const int index = atoi(++last_symbol_ptr);

        // If the index is within bounds
        if (0 <= index && index < graph->tensor_num)
        {
            // Fetch that tensor from the graph's tensor list
            const ir_tensor_t* const tensor = graph->tensor_list[index];

            // If its name matches tensor_name, return the index
            if (NULL != tensor->name && 0 == strcmp(tensor->name, tensor_name))
            {
                return index;
            }
        }
    }

    // Otherwise fall back to scanning every tensor in the graph
    for (int i = 0; i < graph->tensor_num; i++)
    {
        const ir_tensor_t* const tensor = graph->tensor_list[i];
        if (tensor->name && 0 == strcmp(tensor->name, tensor_name))
        {
            return i;
        }
    }

    return -1;
}

// Print a tensor's information; useful when debugging
void dump_ir_tensor(ir_graph_t* g, ir_tensor_t* t)
{
    // data_type: { int8, uint8, fp32, fp16, int32 }
    // tensor_type: { const, input, var, dep }
    if (NULL != t->name)
    {
        // Named tensor: print the name, data_type and tensor_type
        TLOG_INFO("%s type: %s/%s", t->name, get_tensor_data_type_string(t->data_type), get_tensor_type_string(t->tensor_type));
    }
    else
    {
        // Unnamed tensor: print the index, data_type and tensor_type
        TLOG_INFO("tensor_%d type: %s/%s", t->index, get_tensor_data_type_string(t->data_type), get_tensor_type_string(t->tensor_type));
    }

    // Print the tensor's shape
    if (0 < t->dim_num)
    {
        char shape_buf[64];
        sprintf(shape_buf, " shape: [");

        for (int i = 0; i < t->dim_num - 1; i++)
        {
            sprintf(shape_buf + strlen(shape_buf), "%d,", t->dims[i]);
        }

        sprintf(shape_buf + strlen(shape_buf), "%d]", t->dims[t->dim_num - 1]);

        TLOG_INFO("%s", shape_buf);
    }
    else
    {
        TLOG_INFO(" shape: []");
    }

    // Print the producer node and the number of consumers
    if (0 <= t->producer)
    {
        ir_node_t* node = g->node_list[t->producer];
        TLOG_INFO(" from node: %d", node->index);
    }

    if (t->consumer_num > 0)
        TLOG_INFO(" (consumer: %d)", t->consumer_num);

    TLOG_INFO("\n");
}

// Register a node as a consumer of the tensor
int set_ir_tensor_consumer(ir_tensor_t* ir_tensor, const int index)
{
    // Once the pre-allocated TE_MAX_CONSUMER_NUM slots are used up,
    if (TE_MAX_CONSUMER_NUM <= ir_tensor->consumer_num)
    {
        // grow the array by one slot (realloc keeps the existing entries)
        int16_t* new_consumer = (int16_t*)sys_realloc(ir_tensor->consumer, sizeof(int16_t) * (ir_tensor->consumer_num + 1));
        if (NULL == new_consumer)
        {
            return -1;
        }

        // Point the tensor's consumer list at the grown array
        ir_tensor->consumer = new_consumer;
    }

    // Append the new consumer index, then increment the consumer count
    ir_tensor->consumer[ir_tensor->consumer_num] = index;
    ir_tensor->consumer_num++;

    return 0;
}
```

Node-related

- init_ir_node: initializes a node's fields

- create_ir_node: creates a node named node_name in the graph

- set_ir_node_input_tensor: sets the node's input_idx-th input tensor
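set_ir_node_input_tensor and set_ir_tensor_consumer share one pattern. Each grows an index array with realloc, marks any freshly added slots as -1, and then stores the new index. Below is a minimal sketch of that pattern, using the standard `realloc` in place of Tengine's `sys_realloc`. `toy_index_list_t`, `toy_set_slot` and `toy_append` are hypothetical names. Unlike Tengine's consumer list, which pre-allocates TE_MAX_CONSUMER_NUM slots, this sketch grows only on demand.

```c
#include <assert.h>
#include <stdint.h>
#include <stdlib.h>

/* A growable list of int16_t indices; -1 marks an unused slot. */
typedef struct {
    int16_t* data;
    int num;
} toy_index_list_t;

/* Stores `value` at position `slot`, growing the array if needed
 * (the set_ir_node_input_tensor pattern). Returns 0 on success and -1 on
 * allocation failure, in which case the old array is left untouched. */
static int toy_set_slot(toy_index_list_t* list, int slot, int16_t value)
{
    assert(slot >= 0);
    if (slot >= list->num)
    {
        int16_t* grown = realloc(list->data, sizeof(int16_t) * (size_t)(slot + 1));
        if (grown == NULL)
            return -1;          /* list->data is still valid here */

        for (int i = list->num; i <= slot; i++)
            grown[i] = -1;      /* newly exposed slots start out empty */

        list->data = grown;
        list->num = slot + 1;
    }
    list->data[slot] = value;
    return 0;
}

/* Appends `value` at the end (the set_ir_tensor_consumer pattern). */
static int toy_append(toy_index_list_t* list, int16_t value)
{
    return toy_set_slot(list, list->num, value);
}
```

Assigning realloc's result to a temporary before overwriting the stored pointer matters. On failure, realloc returns NULL and leaves the original block allocated, so the old data is neither lost nor leaked.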

```c
// Initialize a node, giving every field its default value
static void init_ir_node(ir_node_t* ir_node, int op_type, int op_version, int node_index)
{
    ir_node->index = node_index;
    ir_node->dynamic_shape = 0;
    ir_node->input_num = 0;
    ir_node->output_num = 0;
    ir_node->node_type = TE_NODE_TYPE_INTER;
    ir_node->input_tensors = NULL;
    ir_node->output_tensors = NULL;
    ir_node->name = NULL;
    ir_node->op.type = op_type;
    ir_node->op.version = op_version;
    ir_node->op.same_shape = 1;
    ir_node->op.param_size = 0;
    ir_node->op.param_mem = NULL;
    ir_node->op.infer_shape = NULL;
    ir_node->subgraph_idx = -1;
}

// Create a node named node_name in the graph
ir_node_t* create_ir_node(struct graph* ir_graph, const char* node_name, int op_type, int op_version)
{
    // Allocate memory for the node
    ir_node_t* node = (ir_node_t*)sys_malloc(sizeof(ir_node_t));
    if (NULL == node)
    {
        return NULL;
    }

    // Initialize the node; its id is the graph's current node count
    init_ir_node(node, op_type, op_version, ir_graph->node_num);

    // Look up the method registered for this op type and version
    ir_method_t* method = find_op_method(op_type, op_version);

    // Open question: why does this condition silently accept a missing method
    // or a missing init? Only an init() that actually runs and fails is an error.
    if ((NULL != method) && (NULL != method->init) && (method->init(&node->op) < 0))
    {
        sys_free(node);
        return NULL;
    }

    // Stricter alternative that also rejects ops with no registered init.
    // It would have to replace the check above, not follow it: keeping both
    // active would call method->init() twice.
    // if ((NULL == method) || (NULL == method->init) || (method->init(&node->op) < 0))
    // {
    //     sys_free(node);
    //     return NULL;
    // }

    // Grow the graph's node list by one slot (realloc keeps the existing nodes)
    ir_node_t** new_node_list = (ir_node_t**)sys_realloc(ir_graph->node_list, sizeof(ir_node_t*) * (ir_graph->node_num + 1));
    if (NULL == new_node_list)
    {
        return NULL;
    }

    // Link the node back to the graph it belongs to
    node->graph = ir_graph;

    // If a node name was given, duplicate it into node->name
    if (NULL != node_name)
    {
        node->name = strdup(node_name);
    }

    // Put the new node in the last slot of the new list
    new_node_list[ir_graph->node_num] = node;

    // Point the graph at the new list and increment the node count
    ir_graph->node_list = new_node_list;
    ir_graph->node_num++;

    return node;
}

// Set the node's input_idx-th input tensor
int set_ir_node_input_tensor(ir_node_t* node, int input_idx, ir_tensor_t* tensor)
{
    // If input_idx is not below the current input count, grow the array (realloc keeps the old entries)
    if (input_idx >= node->input_num)
    {
        int16_t* new_tensor = (int16_t*)sys_realloc(node->input_tensors, sizeof(int16_t) * (input_idx + 1));
        if (NULL == new_tensor)
        {
            return -1;
        }

        // Mark the newly added slots as empty (-1)
        for (int i = node->input_num; i < input_idx + 1; i++)
        {
            new_tensor[i] = -1;
        }

        // Point the node's input list at the new array and update the input count
        node->input_tensors = (uint16_t*)new_tensor;
        node->input_num = input_idx + 1;
    }

    // Store the tensor's id in slot input_idx
    node->input_tensors[input_idx] = tensor->index;

    // Register this node as a consumer of the tensor
    if (set_ir_tensor_consumer(tensor, node->index) < 0)
    {
        return -1;
    }

    return 0;
}
```

Graph-related

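- create_ir_graph: creates a graph; the context argument carries the device information

- init_ir_graph: initializes the graph's fields

- set_ir_graph_input_node: sets the list of the graph's input-node indices

- get_ir_graph_tensor: returns the graph's index-th tensor

- get_ir_graph_node: returns the graph's index-th node

- get_ir_graph_subgraph: returns the graph's index-th subgraph

- infer_ir_graph_shape: infers the shape of every tensor by visiting the nodes in order

- dump_ir_graph: prints graph information for debugging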
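In the code that follows, get_ir_graph_subgraph returns `*(struct subgraph**)get_vector_data(graph->subgraph_list, index)`. init_ir_graph creates `subgraph_list` with element size `sizeof(struct subgraph*)`, so the vector stores pointers, and the double-pointer cast reads the pointer held in one slot. Below is a minimal sketch of that mechanism, assuming `get_vector_data` returns the address of the index-th element. `toy_vector_t` and its functions are hypothetical and are not Tengine's vector implementation.

```c
#include <assert.h>
#include <stdlib.h>
#include <string.h>

/* A minimal type-erased vector: each element is an opaque blob of elem_size bytes. */
typedef struct {
    size_t elem_size;
    int num;
    unsigned char* mem;
} toy_vector_t;

static void toy_vector_init(toy_vector_t* v, size_t elem_size)
{
    v->elem_size = elem_size;
    v->num = 0;
    v->mem = NULL;
}

/* Copies elem_size bytes from `elem` into a new slot at the end. */
static int toy_vector_push(toy_vector_t* v, const void* elem)
{
    unsigned char* grown = realloc(v->mem, v->elem_size * (size_t)(v->num + 1));
    if (grown == NULL)
        return -1;
    memcpy(grown + v->elem_size * (size_t)v->num, elem, v->elem_size);
    v->mem = grown;
    v->num++;
    return 0;
}

/* Returns the address of slot `index`, not the element stored in it. */
static void* toy_vector_data(toy_vector_t* v, int index)
{
    assert(0 <= index && index < v->num);
    return v->mem + v->elem_size * (size_t)index;
}

struct toy_subgraph { int id; };

/* Mirrors get_ir_graph_subgraph: the slot holds a pointer, so the slot
 * address is cast to pointer-to-pointer and dereferenced once. */
static struct toy_subgraph* toy_get_subgraph(toy_vector_t* list, int index)
{
    return *(struct toy_subgraph**)toy_vector_data(list, index);
}
```

Pushing `&pa`, where `pa` is a `struct toy_subgraph*`, copies the pointer value into the vector. Reading it back therefore needs exactly one extra dereference.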

```c
// Create a graph from a context.
// The context carries the device information, so a context must be set up
// when running on a non-CPU device.
ir_graph_t* create_ir_graph(struct context* context)
{
    // Allocate the graph and its attribute
    ir_graph_t* ir_graph = (ir_graph_t*)sys_malloc(sizeof(ir_graph_t));
    if (NULL == ir_graph)
    {
        return NULL;
    }

    ir_graph->attribute = (struct attribute*)sys_malloc(sizeof(struct attribute));

    // Initialize the graph
    init_ir_graph(ir_graph, context);

    return ir_graph;
}

// Initialize a graph, giving every field its initial value
void init_ir_graph(ir_graph_t* graph, struct context* context)
{
    graph->tensor_list = NULL;
    graph->node_list = NULL;
    graph->input_nodes = NULL;
    graph->output_nodes = NULL;

    graph->tensor_num = 0;
    graph->node_num = 0;
    graph->input_num = 0;
    graph->output_num = 0;

    graph->subgraph_list = create_vector(sizeof(struct subgraph*), NULL);

    graph->graph_layout = TENGINE_LAYOUT_NCHW;
    graph->model_layout = TENGINE_LAYOUT_NCHW;
    graph->model_format = MODEL_FORMAT_TENGINE;

    graph->serializer = NULL;
    graph->serializer_privacy = NULL;

    graph->device = NULL;
    graph->device_privacy = NULL;

    graph->status = GRAPH_STAT_CREATED;

    init_attribute(graph->attribute, context);
}

// Set the indices of the graph's input nodes.
// Any existing input-node list is replaced.
int set_ir_graph_input_node(ir_graph_t* graph, int16_t input_nodes[], int input_number)
{
    if (0 >= input_number)
    {
        return -1;
    }

    // Allocate a new array for the input-node indices
    int16_t* new_input_nodes = (int16_t*)sys_malloc(input_number * sizeof(int16_t));
    if (NULL == new_input_nodes)
    {
        return -1;
    }

    // Free the graph's existing input-node list, if any
    if (NULL != graph->input_nodes)
    {
        sys_free(graph->input_nodes);
        graph->input_nodes = NULL;
    }

    // Point the graph's input-node list at the new array
    graph->input_nodes = new_input_nodes;
    graph->input_num = input_number;

    for (int i = 0; i < input_number; i++)
    {
        // Mark each listed node as an input node and record its index
        ir_node_t* node = get_ir_graph_node(graph, input_nodes[i]);
        node->node_type = TE_NODE_TYPE_INPUT;
        graph->input_nodes[i] = input_nodes[i];
    }

    return 0;
}

// Return the index-th tensor in the graph's tensor list
struct tensor* get_ir_graph_tensor(ir_graph_t* graph, int index)
{
    return graph->tensor_list[index];
}

// Return the index-th node in the graph's node list
struct node* get_ir_graph_node(ir_graph_t* graph, int index)
{
    return graph->node_list[index];
}

// Return the index-th subgraph in the graph's subgraph list
struct subgraph* get_ir_graph_subgraph(ir_graph_t* graph, int index)
{
    return *(struct subgraph**)get_vector_data(graph->subgraph_list, index);
}

// Infer the shape of every tensor by visiting the nodes in list order
int infer_ir_graph_shape(ir_graph_t* graph)
{
    const int node_num = graph->node_num;

    // Visit every node in the graph
    for (int i = 0; i < node_num; i++)
    {
        // Get the i-th node and its op
        ir_node_t* node = get_ir_graph_node(graph, i);
        ir_op_t* op = &node->op;

        // Nodes without inputs have nothing to infer from
        if (node->input_num == 0)
            continue;

        if (node->dynamic_shape)
        {
            // Propagate dynamic_shape to every consumer of this node's outputs.
            // As quoted, this loop used j and l directly as graph-wide indices
            // (get_ir_graph_tensor(graph, j), get_ir_graph_node(graph, l));
            // the output tensor ids and consumer node ids appear to be what is meant.
            int output_num = node->output_num;

            for (int j = 0; j < output_num; j++)
            {
                ir_tensor_t* tensor = get_ir_graph_tensor(graph, node->output_tensors[j]);

                for (int l = 0; l < tensor->consumer_num; l++)
                {
                    ir_node_t* child_node = get_ir_graph_node(graph, tensor->consumer[l]);
                    child_node->dynamic_shape = 1;
                }
            }

            continue;
        }

        // If the op does not change the shape, copy the first input's dims to the first output
        if (0 != op->same_shape)
        {
            ir_tensor_t* input = get_ir_graph_tensor(graph, node->input_tensors[0]);
            ir_tensor_t* output = get_ir_graph_tensor(graph, node->output_tensors[0]);

            output->dim_num = input->dim_num;
            output->elem_num = input->elem_num;

            memcpy(output->dims, input->dims, sizeof(int32_t) * input->dim_num);
        }
        else
        {
            // Otherwise let the op compute its own output shapes
            if (0 != op->infer_shape(node))
            {
                TLOG_ERR("Tengine FATAL: Infer node(id: %d, op: %s) shape failed.\n", node->index, get_op_name_from_type(node->op.type));
                return -1;
            }
        }

        // Clear the reshaped flag on every output tensor
        for (int j = 0; j < node->output_num; j++)
        {
            ir_tensor_t* tensor = get_ir_graph_tensor(graph, node->output_tensors[j]);
            tensor->reshaped = 0;
        }
    }

    return 0;
}
```
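infer_ir_graph_shape makes a single forward pass over the node list. Ops with `same_shape` set copy the first input's dims to the first output, and all other ops call `op->infer_shape`. A single pass only works if every producer is visited before its consumers, so the sketch below assumes the node list is already in topological order. It reproduces the loop on a toy two-node chain with one input and one output per node. The types, the callback signature and `toy_global_pool_infer` are hypothetical, and dynamic_shape handling is omitted.

```c
#include <assert.h>
#include <string.h>

#define TOY_MAX_DIM 4

typedef struct {
    int dims[TOY_MAX_DIM];
    int dim_num;
} toy_shape_t;

typedef struct {
    int input;       /* index of the (single) input tensor */
    int output;      /* index of the (single) output tensor */
    int same_shape;  /* 1: output shape equals input shape */
    /* Used when same_shape == 0; returns 0 on success. */
    int (*infer_shape)(const toy_shape_t* in, toy_shape_t* out);
} toy_node_t;

/* One forward pass over nodes that are assumed to be in topological order. */
static int toy_infer_shapes(const toy_node_t* nodes, int node_num, toy_shape_t* tensors)
{
    assert(node_num >= 0 && tensors != NULL);
    for (int i = 0; i < node_num; i++)
    {
        const toy_shape_t* in = &tensors[nodes[i].input];
        toy_shape_t* out = &tensors[nodes[i].output];

        if (nodes[i].same_shape)
        {
            out->dim_num = in->dim_num;
            memcpy(out->dims, in->dims, sizeof(int) * (size_t)in->dim_num);
        }
        else if (nodes[i].infer_shape == NULL || nodes[i].infer_shape(in, out) != 0)
        {
            return -1; /* plays the role of the TLOG_ERR + return -1 branch */
        }
    }
    return 0;
}

/* Example op with its own rule: global pooling maps NCHW to N x C x 1 x 1. */
static int toy_global_pool_infer(const toy_shape_t* in, toy_shape_t* out)
{
    if (in->dim_num != 4)
        return -1;
    out->dim_num = 4;
    out->dims[0] = in->dims[0];
    out->dims[1] = in->dims[1];
    out->dims[2] = 1;
    out->dims[3] = 1;
    return 0;
}
```

For example, an element-wise op followed by global pooling turns a 1x3x224x224 input into 1x3x224x224 and then 1x3x1x1.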

Original article: https://zhuanlan.zhihu.com/p/399854346

Author: 闪电侠的右手
