WeChat official account: OpenCV学堂


Building the latest OpenCV 4.5.x

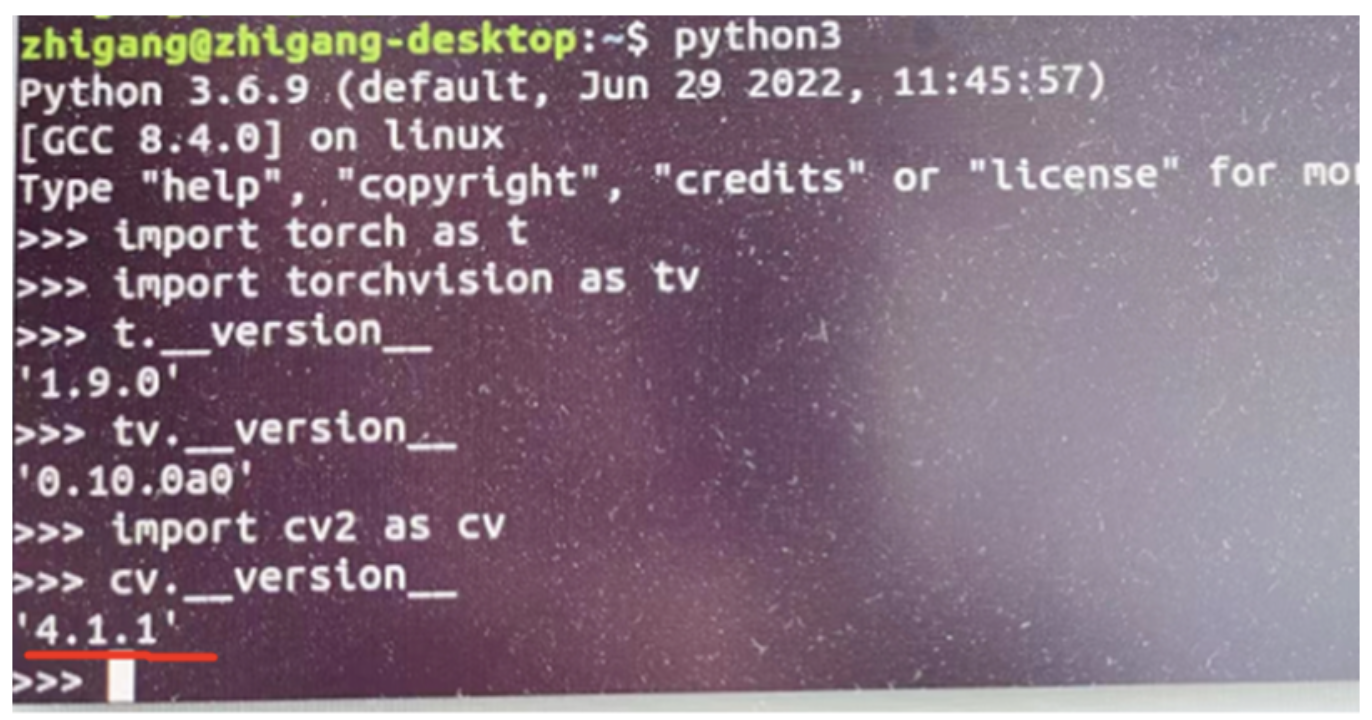

The OpenCV version that ships with the Jetson Nano is fairly old: JetPack 4.6 comes with OpenCV 4.1, as the screenshot shows.

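Current OpenCV releases, however, have already reached 4.5 and 4.6. In 4.5.x the OpenCV DNN module supports inference for many new models and adds new features, none of which can be demonstrated on OpenCV 4.1. So I decided to upgrade to OpenCV 4.5.4 by building it from source. I then found a very good website that provides a complete script, ran the script as-is, and the installation was done. The whole installation takes several hours, so be patient. The complete script can be downloaded here: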

https://github.com/Qengineering/Install-OpenCV-Jetson-Nano

An explanation of each step in the script is available here:

https://qengineering.eu/install-opencv-4.5-on-jetson-nano.html

I have reproduced the script here, with OpenCV 4.5.4 selected as the version to build and install. The complete script is:

```bash
#!/bin/bash
set -e
echo "Installing OpenCV 4.5.4 on your Jetson Nano"
echo "It will take 2.5 hours !"

# reveal the CUDA location
cd ~
sudo sh -c "echo '/usr/local/cuda/lib64' >> /etc/ld.so.conf.d/nvidia-tegra.conf"
sudo ldconfig

# install the dependencies
sudo apt-get install -y build-essential cmake git unzip pkg-config zlib1g-dev
sudo apt-get install -y libjpeg-dev libjpeg8-dev libjpeg-turbo8-dev libpng-dev libtiff-dev
sudo apt-get install -y libavcodec-dev libavformat-dev libswscale-dev libglew-dev
sudo apt-get install -y libgtk2.0-dev libgtk-3-dev libcanberra-gtk*
sudo apt-get install -y python-dev python-numpy python-pip
sudo apt-get install -y python3-dev python3-numpy python3-pip
sudo apt-get install -y libxvidcore-dev libx264-dev libgtk-3-dev
sudo apt-get install -y libtbb2 libtbb-dev libdc1394-22-dev libxine2-dev
sudo apt-get install -y gstreamer1.0-tools libv4l-dev v4l-utils qv4l2
sudo apt-get install -y libgstreamer-plugins-base1.0-dev libgstreamer-plugins-good1.0-dev
sudo apt-get install -y libavresample-dev libvorbis-dev libxine2-dev libtesseract-dev
sudo apt-get install -y libfaac-dev libmp3lame-dev libtheora-dev libpostproc-dev
sudo apt-get install -y libopencore-amrnb-dev libopencore-amrwb-dev
sudo apt-get install -y libopenblas-dev libatlas-base-dev libblas-dev
sudo apt-get install -y liblapack-dev liblapacke-dev libeigen3-dev gfortran
sudo apt-get install -y libhdf5-dev protobuf-compiler
sudo apt-get install -y libprotobuf-dev libgoogle-glog-dev libgflags-dev

# remove old versions or previous builds
cd ~
sudo rm -rf opencv*

# download the latest version
wget -O opencv.zip https://github.com/opencv/opencv/archive/4.5.4.zip
wget -O opencv_contrib.zip https://github.com/opencv/opencv_contrib/archive/4.5.4.zip

# unpack
unzip opencv.zip
unzip opencv_contrib.zip

# some administration to make life easier later on
mv opencv-4.5.4 opencv
mv opencv_contrib-4.5.4 opencv_contrib

# clean up the zip files
rm opencv.zip
rm opencv_contrib.zip

# create the build directory
cd ~/opencv
mkdir build
cd build

# run cmake
cmake -D CMAKE_BUILD_TYPE=RELEASE \
      -D CMAKE_INSTALL_PREFIX=/usr \
      -D OPENCV_EXTRA_MODULES_PATH=~/opencv_contrib/modules \
      -D EIGEN_INCLUDE_PATH=/usr/include/eigen3 \
      -D WITH_OPENCL=OFF \
      -D WITH_CUDA=ON \
      -D CUDA_ARCH_BIN=5.3 \
      -D CUDA_ARCH_PTX="" \
      -D WITH_CUDNN=ON \
      -D WITH_CUBLAS=ON \
      -D ENABLE_FAST_MATH=ON \
      -D CUDA_FAST_MATH=ON \
      -D OPENCV_DNN_CUDA=ON \
      -D ENABLE_NEON=ON \
      -D WITH_QT=OFF \
      -D WITH_OPENMP=ON \
      -D BUILD_TIFF=ON \
      -D WITH_FFMPEG=ON \
      -D WITH_GSTREAMER=ON \
      -D WITH_TBB=ON \
      -D BUILD_TBB=ON \
      -D BUILD_TESTS=OFF \
      -D WITH_EIGEN=ON \
      -D WITH_V4L=ON \
      -D WITH_LIBV4L=ON \
      -D OPENCV_ENABLE_NONFREE=ON \
      -D INSTALL_C_EXAMPLES=OFF \
      -D INSTALL_PYTHON_EXAMPLES=OFF \
      -D BUILD_opencv_python3=TRUE \
      -D OPENCV_GENERATE_PKGCONFIG=ON \
      -D BUILD_EXAMPLES=OFF ..

# run make
# total swap size in MB (second column of the "Swap:" line of free -m)
FREE_MEM="$(free -m | awk '/^Swap/ {print $2}')"
# use "-j 4" only if the swap space is larger than 5.5 GB
if [[ "$FREE_MEM" -gt "5500" ]]; then
  NO_JOB=4
else
  echo "Due to limited swap, make only uses 1 core"
  NO_JOB=1
fi
make -j ${NO_JOB}

# remove the headers of the old OpenCV version (-f: no error if they are absent)
sudo rm -rf /usr/include/opencv4/opencv2
sudo make install
sudo ldconfig

# cleaning (frees 300 MB)
make clean
sudo apt-get update

echo "Congratulations!"
echo "You've successfully installed OpenCV 4.5.4 on your Jetson Nano"
```

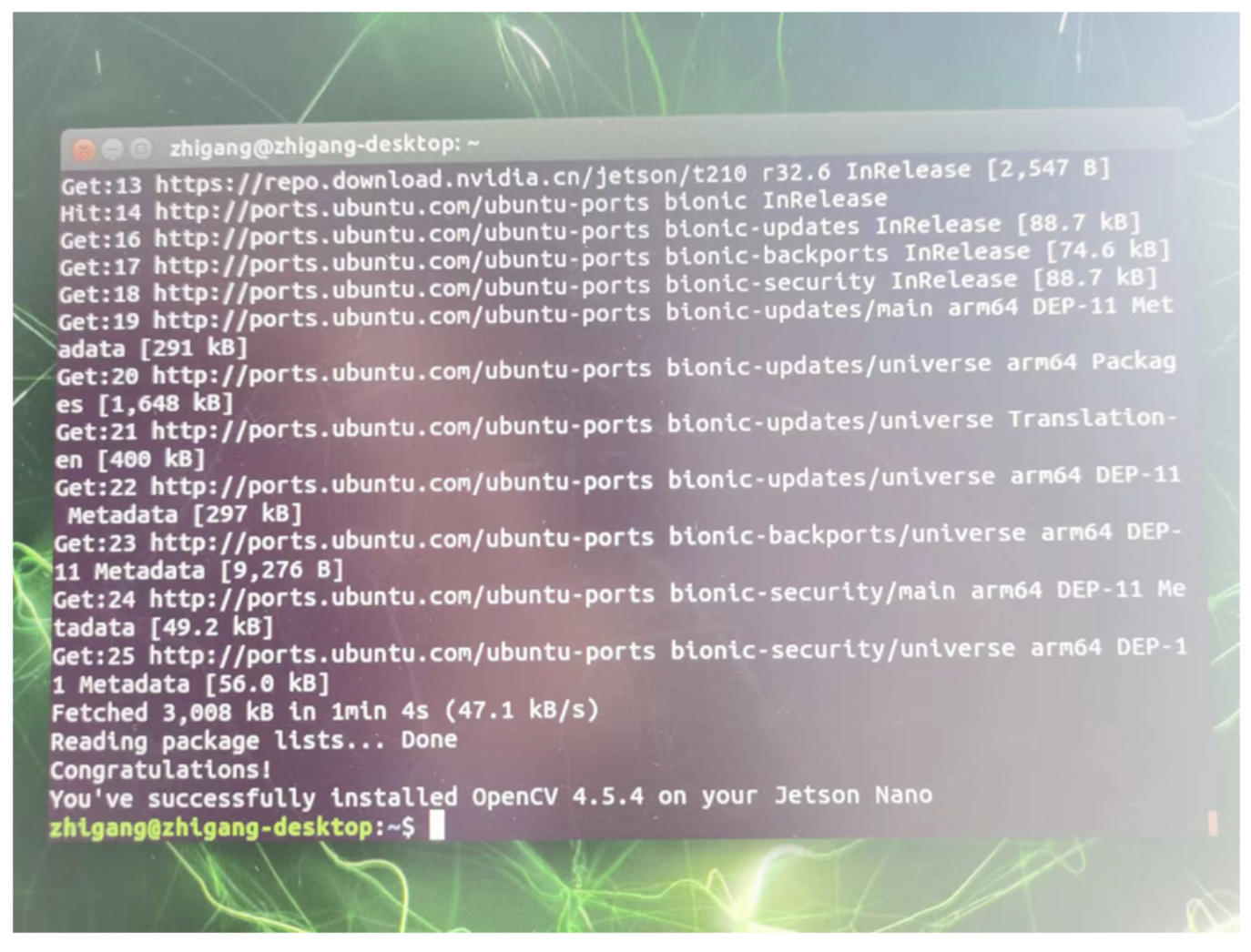
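The `make` step chooses its parallelism from the total swap size: the script takes the second column of the `Swap:` line printed by `free -m` and only uses four jobs above 5500 MB, because every parallel compile job needs its own memory and the Nano has only a few GB of RAM. As an illustration only, the same decision can be sketched in C++ with the standard library. `totalSwapMb` and `makeJobs` are hypothetical names, and the parser assumes the usual `free -m` layout.

```cpp
#include <sstream>
#include <string>

// Returns the total swap in MB from `free -m` output, i.e. the first number
// after "Swap:" (awk's $2 in the script). Assumes the usual procps layout:
//   "Swap:          1982           0        1982"
// Returns 0 when no "Swap:" line is present.
long totalSwapMb(const std::string& freeOutput) {
    std::istringstream lines(freeOutput);
    std::string line;
    while (std::getline(lines, line)) {
        if (line.rfind("Swap:", 0) == 0) {           // line starts with "Swap:"
            std::istringstream fields(line.substr(5));
            long total = 0;
            if (fields >> total) return total;       // first number = total swap
        }
    }
    return 0;
}

// Mirrors the script's rule: 4 jobs only when swap exceeds the threshold.
int makeJobs(const std::string& freeOutput, long thresholdMb = 5500) {
    return totalSwapMb(freeOutput) > thresholdMb ? 4 : 1;
}
```

With less than 5.5 GB of swap the function returns 1, matching the script's single-job fallback.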

Simply run the downloaded script from the terminal to complete the installation. When my installation finished, the output looked like this:

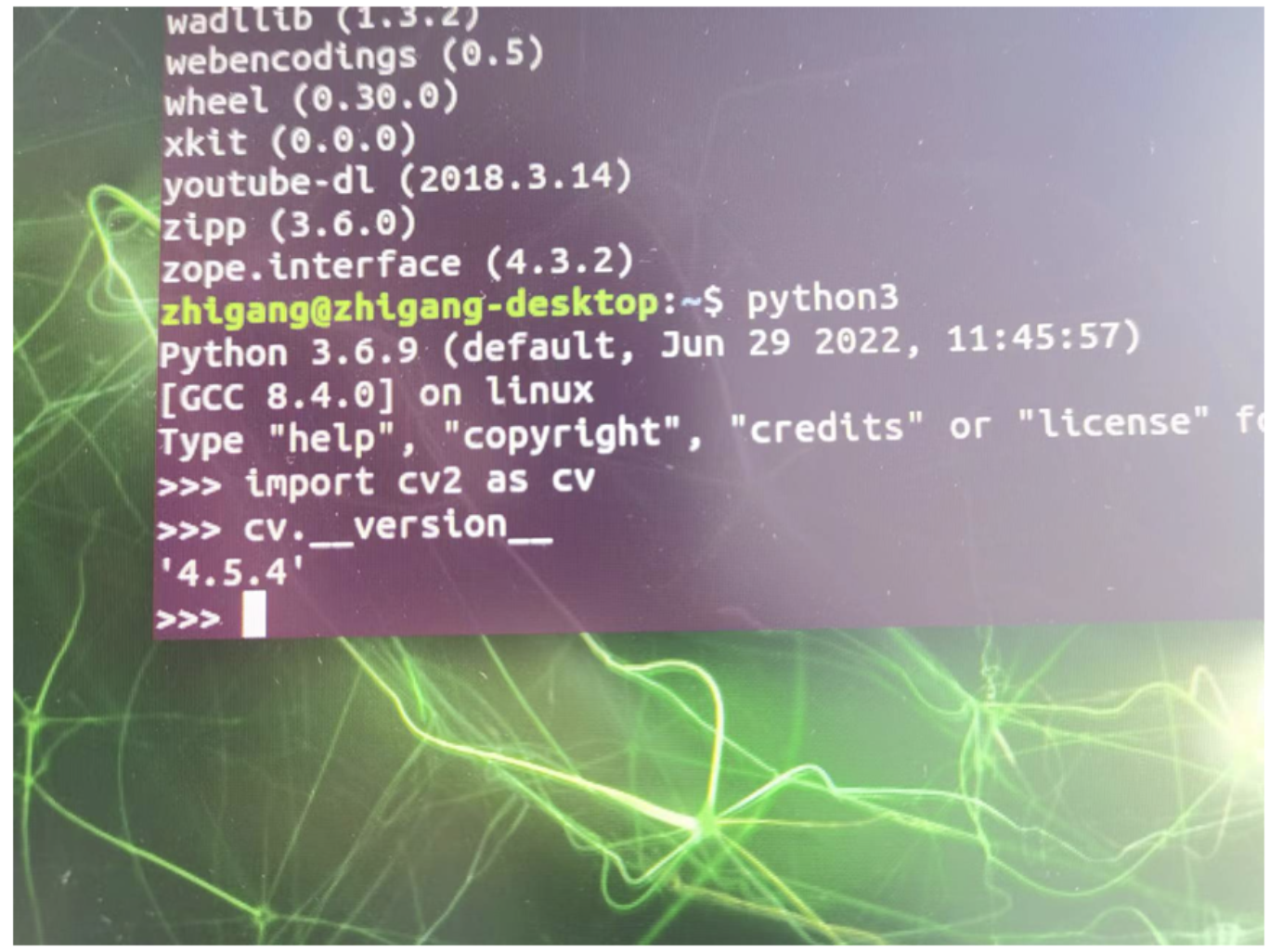

Verifying and importing the installed OpenCV 4.5.4

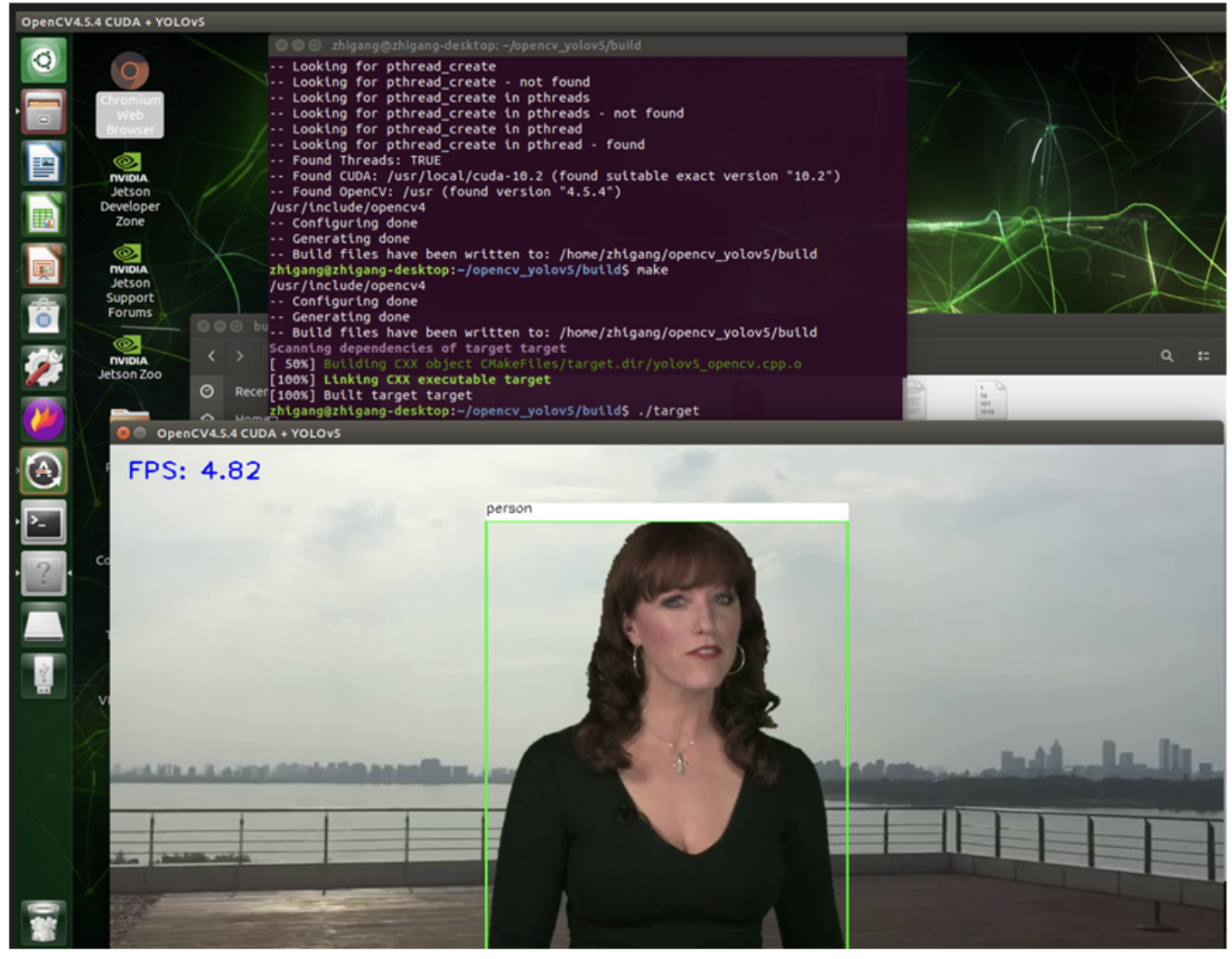
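Besides importing OpenCV to confirm the installation, a C++ program can check the version it is linked against: OpenCV exposes it through the `CV_VERSION` macro and `cv::getVersionString()`, both giving a string such as "4.5.4". A minimal, standard-library-only sketch for comparing such a string against a required minimum follows; `versionAtLeast` is a hypothetical helper, not an OpenCV API.

```cpp
#include <sstream>
#include <string>

// Returns true if a dotted version string such as "4.5.4" is at least
// major.minor.patch. Missing or non-numeric components count as 0, and a
// suffix like "4-dev" is read as 4 because std::stoi stops at the first
// non-digit.
bool versionAtLeast(const std::string& version, int major, int minor, int patch) {
    int parts[3] = {0, 0, 0};
    std::istringstream in(version);
    std::string field;
    for (int i = 0; i < 3 && std::getline(in, field, '.'); ++i) {
        try {
            parts[i] = std::stoi(field);
        } catch (...) {
            parts[i] = 0;  // empty or non-numeric component
        }
    }
    const int want[3] = {major, minor, patch};
    for (int i = 0; i < 3; ++i) {
        if (parts[i] != want[i]) return parts[i] > want[i];
    }
    return true;  // all three components are equal
}
```

For example, a program could call `versionAtLeast(cv::getVersionString(), 4, 5, 0)` at start-up and exit early when it is still linked against the stock OpenCV 4.1.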

Building and running OpenCV C++ programs

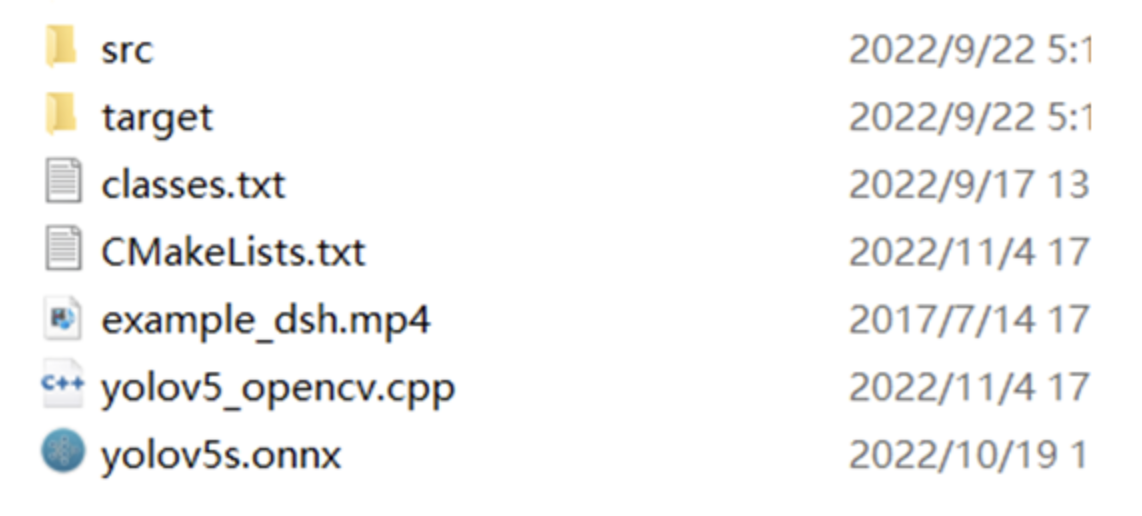

I had already written C++ demo programs for OpenCV YOLOv5 and face detection, so I reused them and set up the following project directory:

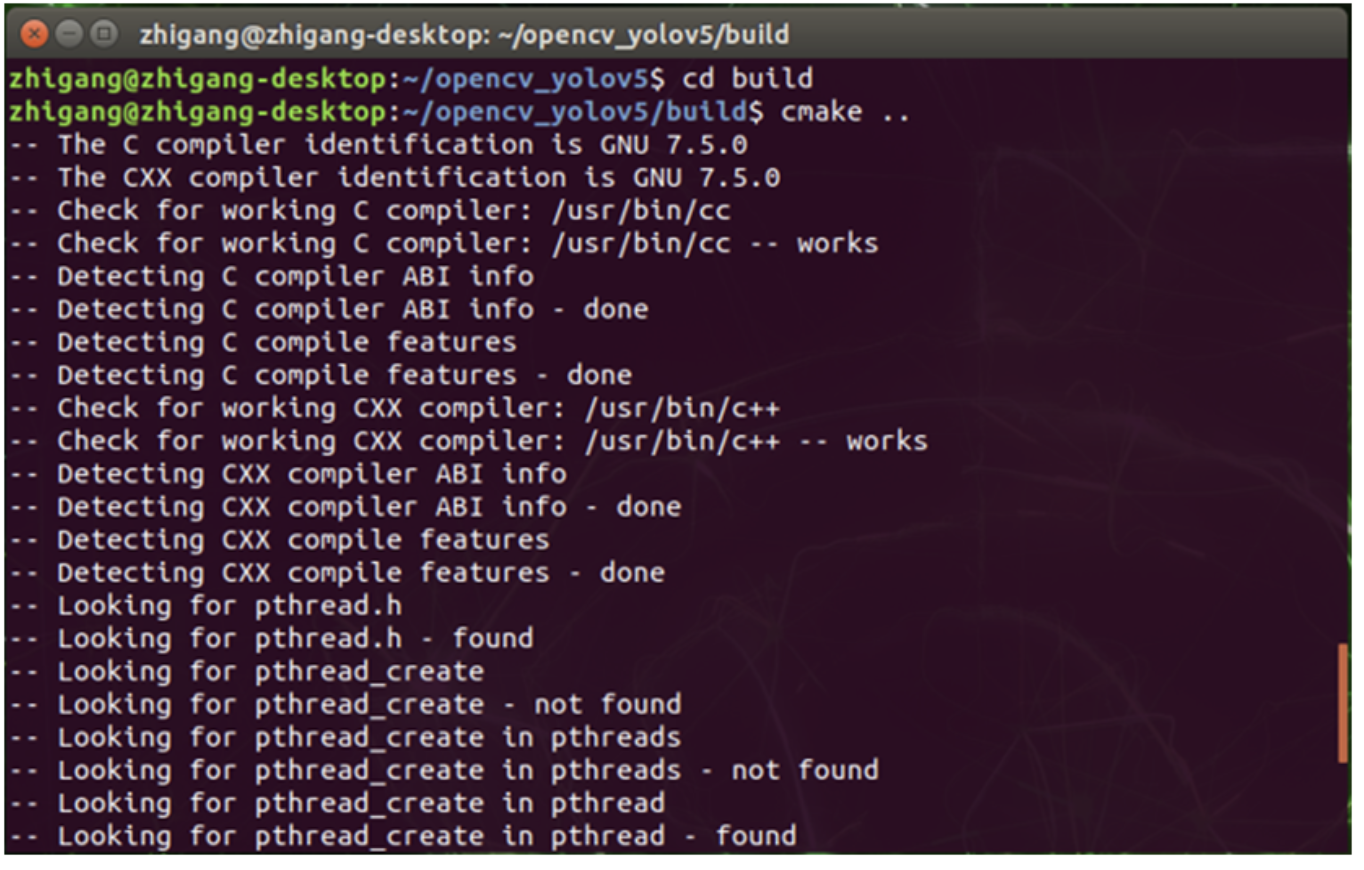

Copy the project to the home directory on the Jetson and run cmake:

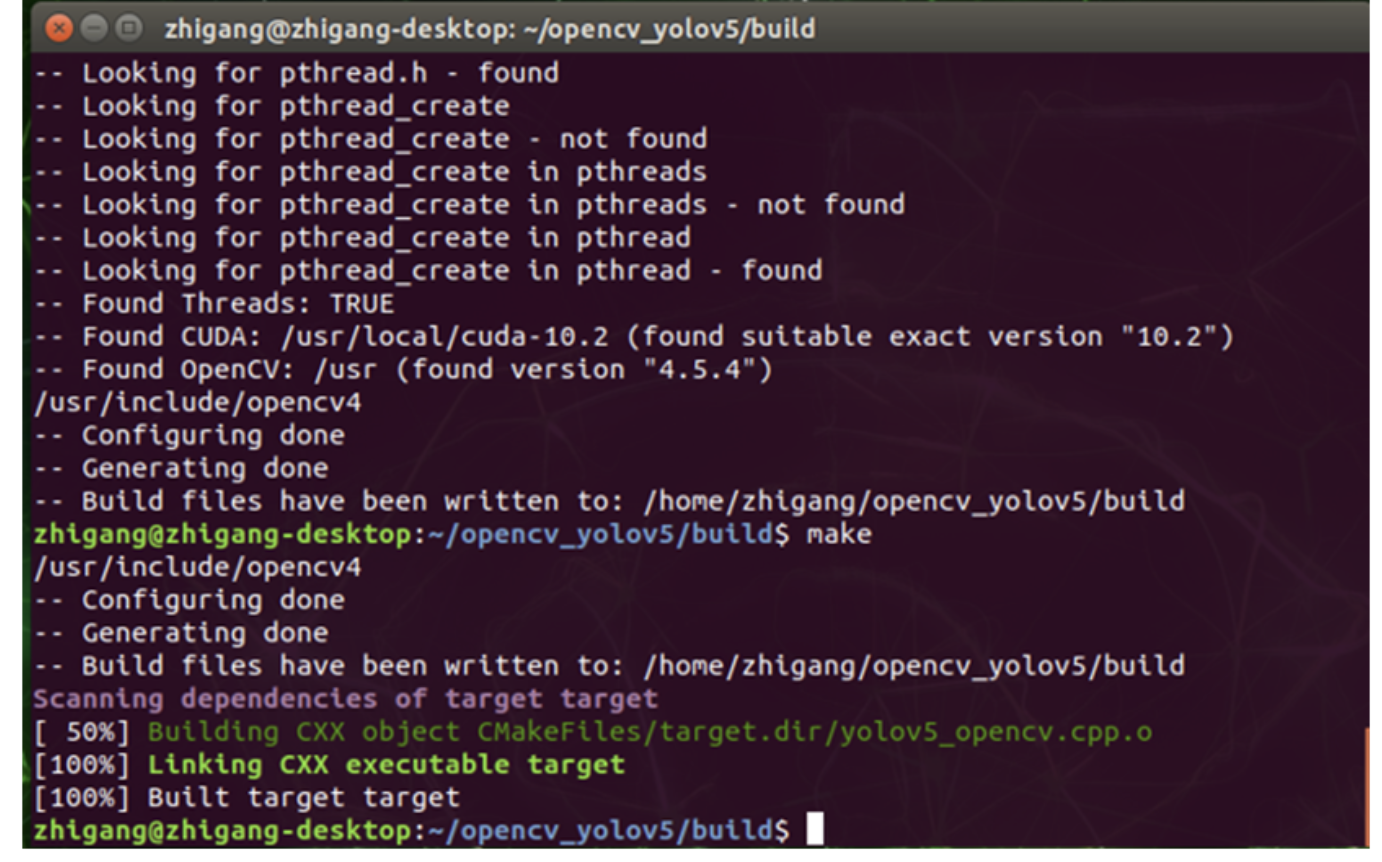

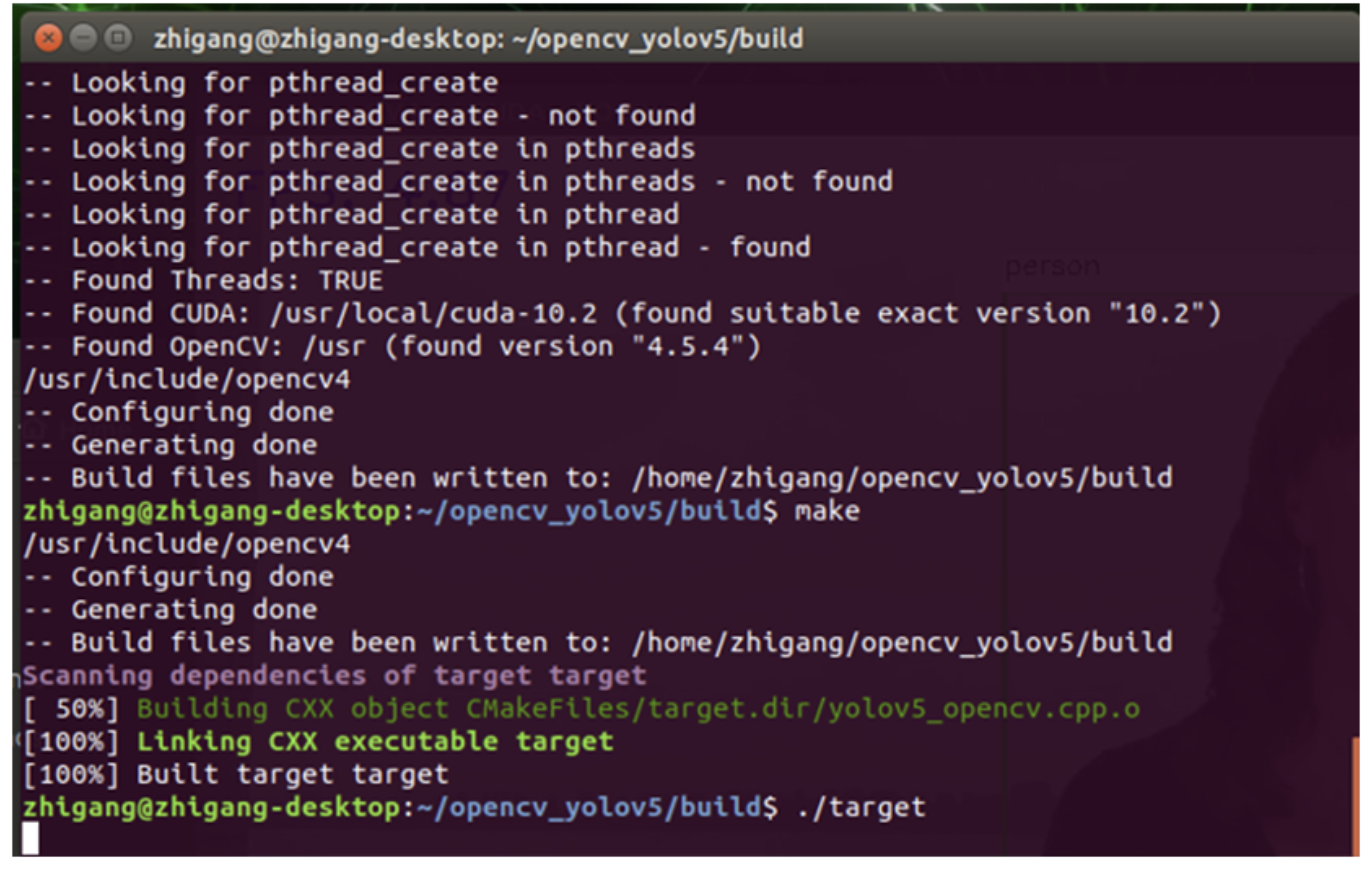

Then run make to build the executable, and run `target`:

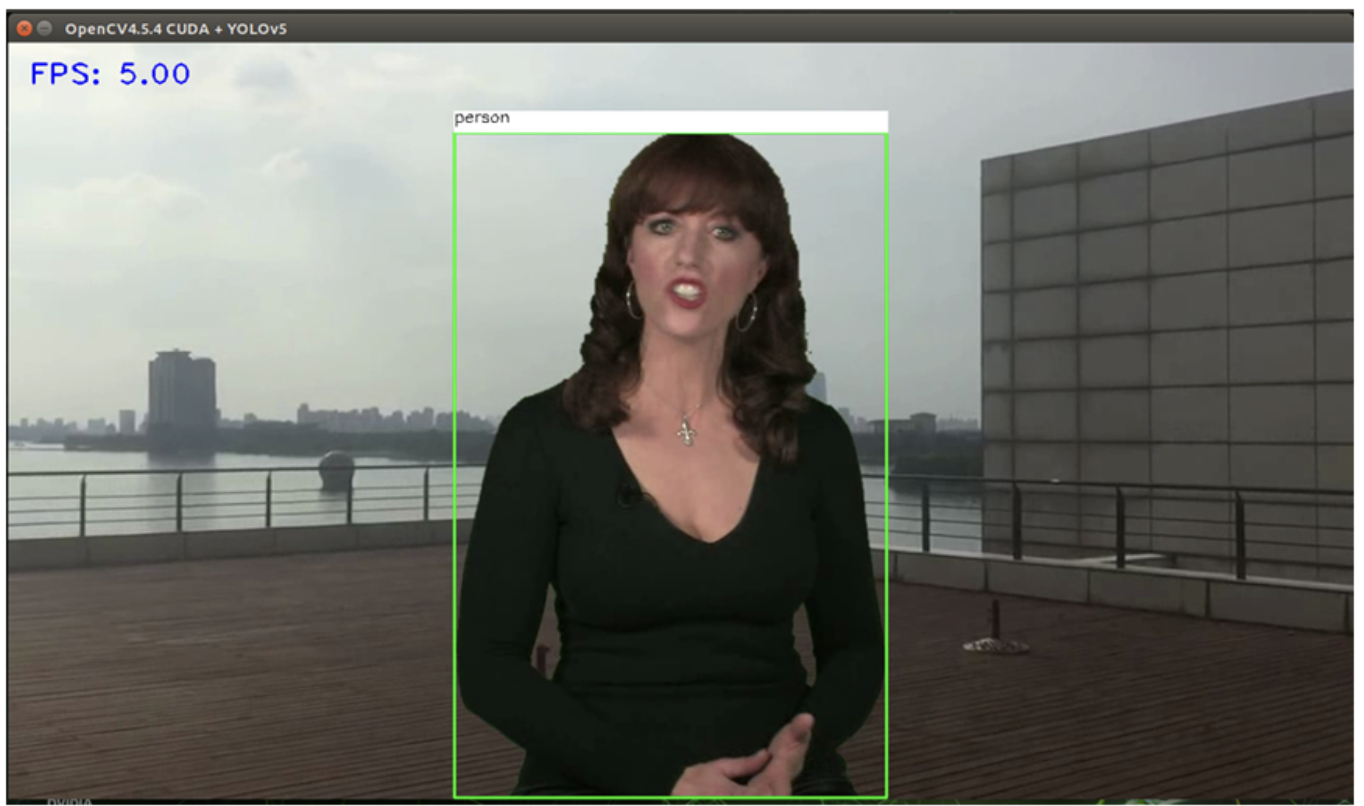

The running program looks like this:

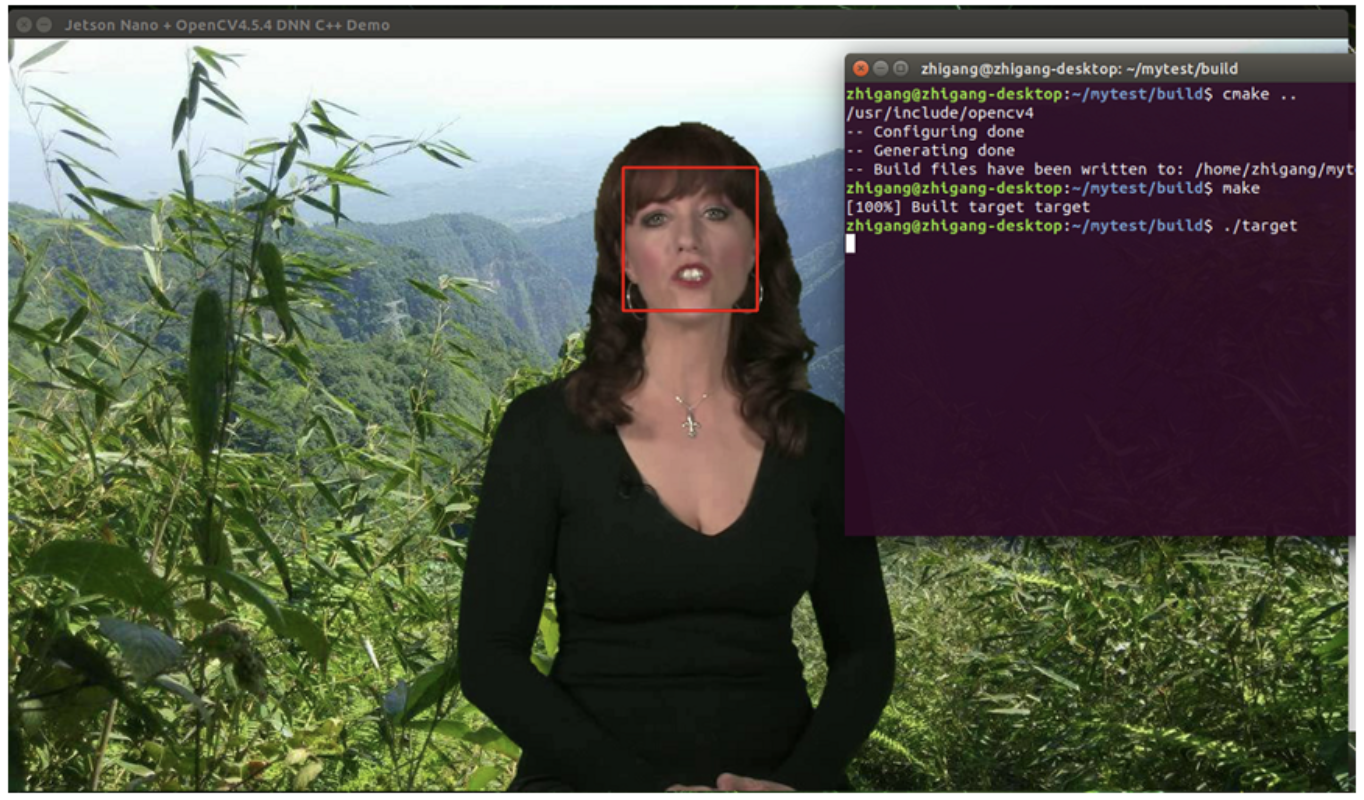
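The YOLOv5 demo, whose full source appears later in this article, does three things per frame after `net.forward()`: it reads each 85-value output row (cx, cy, w, h, objectness, 80 class scores), maps the box from the 640x640 network input back to the frame using the padding scale factor, and removes duplicate detections with `cv::dnn::NMSBoxes`. The sketch below reproduces the box mapping and a plain greedy non-maximum suppression with only the standard library, so the logic can be tested without OpenCV or a GPU. `Box`, `decodeBox`, `iou` and `greedyNms` are hypothetical names, and `greedyNms` is a simplified stand-in for `cv::dnn::NMSBoxes`, not its actual implementation.

```cpp
#include <algorithm>
#include <vector>

// Axis-aligned box in pixel coordinates of the original frame.
struct Box { float x, y, w, h; };

// Converts a YOLOv5 prediction (centre x/y, width/height in 640x640 input
// pixels) to a top-left box in frame pixels. As in the demo, the frame is
// padded into a max(w, h) square at the top-left corner, so a single scale
// factor (x_factor == y_factor) maps back to the frame.
Box decodeBox(float cx, float cy, float ow, float oh,
              int frameW, int frameH, float inputSize = 640.0f) {
    const float side = static_cast<float>(std::max(frameW, frameH));
    const float factor = side / inputSize;
    return {(cx - 0.5f * ow) * factor, (cy - 0.5f * oh) * factor,
            ow * factor, oh * factor};
}

// Intersection-over-union of two boxes.
float iou(const Box& a, const Box& b) {
    const float x1 = std::max(a.x, b.x), y1 = std::max(a.y, b.y);
    const float x2 = std::min(a.x + a.w, b.x + b.w);
    const float y2 = std::min(a.y + a.h, b.y + b.h);
    const float inter = std::max(0.0f, x2 - x1) * std::max(0.0f, y2 - y1);
    const float uni = a.w * a.h + b.w * b.h - inter;
    return uni > 0.0f ? inter / uni : 0.0f;
}

// Greedy NMS: drop boxes scoring at or below scoreThreshold, then repeatedly
// keep the best remaining box and discard later boxes whose IoU with it
// exceeds nmsThreshold. Returns the kept indices, highest score first.
std::vector<int> greedyNms(const std::vector<Box>& boxes,
                           const std::vector<float>& scores,
                           float scoreThreshold, float nmsThreshold) {
    std::vector<int> order;
    for (int i = 0; i < static_cast<int>(boxes.size()); ++i)
        if (scores[i] > scoreThreshold) order.push_back(i);
    std::sort(order.begin(), order.end(),
              [&](int a, int b) { return scores[a] > scores[b]; });

    std::vector<int> keep;
    std::vector<bool> removed(boxes.size(), false);
    for (size_t p = 0; p < order.size(); ++p) {
        const int i = order[p];
        if (removed[i]) continue;
        keep.push_back(i);
        for (size_t q = p + 1; q < order.size(); ++q) {
            const int j = order[q];
            if (!removed[j] && iou(boxes[i], boxes[j]) > nmsThreshold)
                removed[j] = true;
        }
    }
    return keep;
}
```

For a 1280x720 frame the padded square is 1280 pixels wide, so every coordinate is multiplied by 2; the demo additionally truncates the results to integers when it builds its `cv::Rect`s.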

OpenCV DNN face detection demo:

The contents of CMakeLists.txt are as follows:

```cmake
cmake_minimum_required( VERSION 2.8 )

# Declare a CMake project
project(yolov5_opencv_demo)

# Build type
#set( CMAKE_BUILD_TYPE "Debug" )

# Add the OpenCV library
# To require a specific OpenCV version:
#find_package(OpenCV 4.5.4 REQUIRED)
# If no specific version is required:
find_package(OpenCV REQUIRED)

include_directories(./src/)

# Add the OpenCV header directories
include_directories(${OpenCV_INCLUDE_DIRS})

# Print the value of OpenCV_INCLUDE_DIRS
message(STATUS "OpenCV_INCLUDE_DIRS: ${OpenCV_INCLUDE_DIRS}")

FILE(GLOB_RECURSE TEST_SRC
    src/*.cpp
)

# Add an executable
# Syntax: add_executable( program_name source_files )
add_executable(target yolov5_opencv.cpp ${TEST_SRC})

# Link the libraries to the executable
target_link_libraries(target ${OpenCV_LIBS})
```

Source code for OpenCV + YOLOv5 with CUDA acceleration:

```cpp
#include <opencv2/opencv.hpp>
#include <fstream>
#include <iostream>
#include <string>
#include <vector>

std::string label_map = "classes.txt";

int main(int argc, char** argv) {
    // Load the class names, one per line
    std::vector<std::string> classNames;
    std::ifstream fp(label_map);
    if (!fp.is_open()) {
        std::cerr << "Cannot open " << label_map << std::endl;
        return -1;
    }
    std::string name;
    while (std::getline(fp, name)) {
        if (!name.empty()) {
            classNames.push_back(name);
        }
    }
    fp.close();

    // Box colours, cycled by class id
    std::vector<cv::Scalar> colors;
    colors.push_back(cv::Scalar(0, 255, 0));
    colors.push_back(cv::Scalar(0, 255, 255));
    colors.push_back(cv::Scalar(255, 255, 0));
    colors.push_back(cv::Scalar(255, 0, 0));
    colors.push_back(cv::Scalar(0, 0, 255));

    // Load the YOLOv5s ONNX model and run it on the GPU via the CUDA backend
    std::string onnxpath = "yolov5s.onnx";
    auto net = cv::dnn::readNetFromONNX(onnxpath);
    net.setPreferableBackend(cv::dnn::DNN_BACKEND_CUDA);
    net.setPreferableTarget(cv::dnn::DNN_TARGET_CUDA);

    cv::VideoCapture capture("example_dsh.mp4");
    if (!capture.isOpened()) {
        std::cerr << "Cannot open the video file" << std::endl;
        return -1;
    }
    cv::Mat frame;
    while (true) {
        if (!capture.read(frame) || frame.empty()) {
            break;
        }
        int64 start = cv::getTickCount();

        // Preprocessing: pad the frame into a square image (top-left aligned)
        int w = frame.cols;
        int h = frame.rows;
        int _max = std::max(h, w);
        cv::Mat image = cv::Mat::zeros(cv::Size(_max, _max), CV_8UC3);
        cv::Rect roi(0, 0, w, h);
        frame.copyTo(image(roi));
        // Scale factors from the 640x640 network input back to the padded image
        float x_factor = image.cols / 640.0f;
        float y_factor = image.rows / 640.0f;

        // Inference: scale pixels to [0,1], resize to 640x640, swap BGR->RGB, no crop
        cv::Mat blob = cv::dnn::blobFromImage(image, 1 / 255.0, cv::Size(640, 640), cv::Scalar(0, 0, 0), true, false);
        net.setInput(blob);
        cv::Mat preds = net.forward();

        // Post-processing; output shape is 1x25200x85:
        // cx, cy, w, h, objectness, then the class scores
        cv::Mat det_output(preds.size[1], preds.size[2], CV_32F, preds.ptr<float>());
        const float conf_threshold = 0.25f;  // objectness and class-score threshold
        const float nms_threshold = 0.50f;   // IoU threshold for NMS
        std::vector<cv::Rect> boxes;
        std::vector<int> classIds;
        std::vector<float> confidences;
        for (int i = 0; i < det_output.rows; i++) {
            float confidence = det_output.at<float>(i, 4);
            if (confidence < conf_threshold) {
                continue;
            }
            cv::Mat classes_scores = det_output.row(i).colRange(5, preds.size[2]);
            cv::Point classIdPoint;
            double score;
            cv::minMaxLoc(classes_scores, 0, &score, 0, &classIdPoint);
            // The class score lies between 0 and 1
            if (score > conf_threshold) {
                float cx = det_output.at<float>(i, 0);
                float cy = det_output.at<float>(i, 1);
                float ow = det_output.at<float>(i, 2);
                float oh = det_output.at<float>(i, 3);
                int x = static_cast<int>((cx - 0.5 * ow) * x_factor);
                int y = static_cast<int>((cy - 0.5 * oh) * y_factor);
                int width = static_cast<int>(ow * x_factor);
                int height = static_cast<int>(oh * y_factor);
                boxes.push_back(cv::Rect(x, y, width, height));
                classIds.push_back(classIdPoint.x);
                confidences.push_back(static_cast<float>(score));
            }
        }

        // Non-maximum suppression
        std::vector<int> indexes;
        cv::dnn::NMSBoxes(boxes, confidences, conf_threshold, nms_threshold, indexes);
        for (size_t i = 0; i < indexes.size(); i++) {
            int index = indexes[i];
            int idx = classIds[index];
            cv::rectangle(frame, boxes[index], colors[idx % colors.size()], 2, 8);
            cv::rectangle(frame, cv::Point(boxes[index].tl().x, boxes[index].tl().y - 20),
                          cv::Point(boxes[index].br().x, boxes[index].tl().y), cv::Scalar(255, 255, 255), -1);
            // Fall back to the numeric id if classes.txt has fewer entries
            std::string label = idx < static_cast<int>(classNames.size()) ? classNames[idx] : std::to_string(idx);
            cv::putText(frame, label, cv::Point(boxes[index].tl().x, boxes[index].tl().y - 10),
                        cv::FONT_HERSHEY_SIMPLEX, .5, cv::Scalar(0, 0, 0));
        }

        float t = (cv::getTickCount() - start) / static_cast<float>(cv::getTickFrequency());
        cv::putText(frame, cv::format("FPS: %.2f", 1.0 / t), cv::Point(20, 40), cv::FONT_HERSHEY_PLAIN, 2.0, cv::Scalar(255, 0, 0), 2, 8);
        cv::imshow("OpenCV4.5.4 CUDA + YOLOv5", frame);
        int c = cv::waitKey(1);
        if (c == 27) {  // ESC quits
            break;
        }
    }

    cv::waitKey(0);
    cv::destroyAllWindows();
    return 0;
}
```

Author: gloomyfish

Source: OpenCV学堂

