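虫叔 said the third thing to study is pre-run, but I couldn't pin down exactly where pre-run lives. The only pre-run functions I found were in the scheduler, and since the scheduler is also one of Tengine's four core modules, I read through that part.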

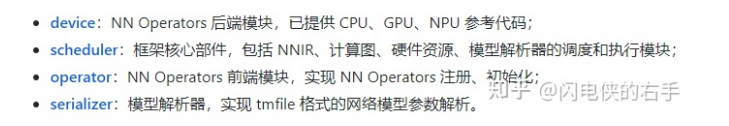

Screenshot source: the Tengine-lite GitHub repository

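After reading it I was genuinely amazed by how strong the code is. I went over it many times before I understood it, and once I did, all I could say was: brilliant!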

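There isn't much code in this part, but I took a lot of notes. The logic is fairly convoluted and I was afraid I'd forget it within a few days, so I wrote everything down in detail. On to the main topic.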

The scheduler consists of four functions:

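- sched_prerun: scheduler pre-run. For each subgraph, it calls the pre_run interface of the subgraph's device and then sets the subgraph's status to READY.

- sched_run: scheduler run. A subgraph can execute as soon as all of its inputs are ready. After it executes, its output tensors become ready, and so do the input tensors of the subgraphs connected to it.

- sched_wait: scheduler wait. The synchronous scheduler does not implement it and simply returns -1.

- sched_postrun: post-run cleanup. For each subgraph, it sets the status to DONE and calls the post_run interface of the subgraph's device.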

```c
// Scheduler pre-run
static int sched_prerun(ir_scheduler_t* scheduler, ir_graph_t* ir_graph)
{
    // Number of subgraphs in the graph
    int subgraph_num = get_vector_num(ir_graph->subgraph_list);

    for (int i = 0; i < subgraph_num; i++)
    {
        // Fetch each subgraph in turn
        struct subgraph* subgraph = get_ir_graph_subgraph(ir_graph, i);
        // Device the subgraph has been assigned to
        ir_device_t* device = subgraph->device;
        void* opt = NULL;

        // Device name held in the graph context's default options
        char* default_name = *(char**)(ir_graph->attribute->context->default_options);

        // Pick the options passed to the device
        if (0 == strcmp(device->name, default_name))
        {
            opt = ir_graph->attribute->context->default_options;
        }
        else
        {
            opt = ir_graph->attribute->context->device_options;
        }

        // Call the device's pre-run interface
        int ret = device->interface->pre_run(device, subgraph, opt);
        if (0 != ret)
        {
            subgraph->status = GRAPH_STAT_ERROR;
            TLOG_ERR("Tengine Fatal: Pre-run subgraph(%d) on %s failed.\n", subgraph->index, device->name);
            return -1;
        }

        // Mark the subgraph as ready
        subgraph->status = GRAPH_STAT_READY;
    }

    return 0;
}

// Scheduler run
static int sched_run(ir_scheduler_t* scheduler, ir_graph_t* ir_graph, int block)
{
    // The synchronous scheduler does not support non-blocking mode
    if (block == 0)
    {
        TLOG_DEBUG("sync scheduler does not support non block run\n");
        return -1;
    }

    // Create the wait list
    struct vector* wait_list = create_vector(sizeof(struct subgraph*), NULL);
    if (NULL == wait_list)
    {
        return -1;
    }

    // Number of subgraphs in the graph
    int subgraph_num = get_vector_num(ir_graph->subgraph_list);

    /* insert all subgraph into wait list */
    for (int i = 0; i < subgraph_num; i++)
    {
        struct subgraph* subgraph = get_ir_graph_subgraph(ir_graph, i);
        push_vector_data(wait_list, &subgraph);
    }

    // Ready list: positions (in wait_list) of the subgraphs that are ready this round
    int* ready_list = (int*)sys_malloc(sizeof(int) * subgraph_num);
    if (ready_list == NULL)
    {
        release_vector(wait_list);
        return -1;
    }

    // Main loop: keep polling until every subgraph has run
    while (1)
    {
        int ready_num = 0;
        int wait_num = get_vector_num(wait_list);

        // Nothing left in the wait list: every subgraph has run
        if (wait_num == 0)
            break;

        for (int i = 0; i < wait_num; i++)
        {
            struct subgraph* subgraph = *(struct subgraph**)get_vector_data(wait_list, i);

            // Read this together with the graph-splitting code. input_ready_count starts at 0,
            // and input_wait_count is incremented once for every input tensor of type VAR
            // (a tensor produced by another subgraph). A subgraph with no VAR inputs therefore
            // has ready == wait from the start.
            // ready_list collects the wait_list positions of the ready subgraphs, so the loop
            // below only has to iterate ready_num times.
            if (subgraph->input_ready_count == subgraph->input_wait_count)
                ready_list[ready_num++] = i;
        }

        if (ready_num == 0)
        {
            TLOG_ERR("no subgraph is ready, but there are still %d subgraphs in wait_list\n", wait_num);
            sys_free(ready_list);
            release_vector(wait_list);
            return -1;
        }

        for (int i = 0; i < ready_num; i++)
        {
            // Each ready subgraph and its device
            struct subgraph* subgraph = *(struct subgraph**)get_vector_data(wait_list, ready_list[i]);
            ir_device_t* nn_dev = subgraph->device;

            subgraph->status = GRAPH_STAT_RUNNING;

            // Call the device's run interface
            if (nn_dev->interface->run(nn_dev, subgraph) < 0)
            {
                TLOG_ERR("run subgraph %d error!\n", subgraph->index);
                sys_free(ready_list);
                release_vector(wait_list);
                subgraph->status = GRAPH_STAT_ERROR;
                return -1;
            }

            for (int j = 0; j < get_vector_num(ir_graph->subgraph_list); j++)
            {
                // Scan every subgraph of the graph
                struct subgraph* waiting_sub_graph = *(struct subgraph**)get_vector_data(ir_graph->subgraph_list, j);

                for (int k = 0; k < waiting_sub_graph->input_num; k++)
                {
                    // Each input tensor index of the scanned subgraph
                    uint16_t waiting_input_idx = waiting_sub_graph->input_tensor_list[k];

                    for (int m = 0; m < subgraph->output_num; m++)
                    {
                        // Each output tensor index of the subgraph that just ran
                        uint16_t current_output_idx = subgraph->output_tensor_list[m];

                        // This is the interesting part. Once a ready subgraph has run, its output
                        // tensors (call them ready tensors) are ready, so the matching inputs of
                        // whichever subgraphs consume them are ready too. Bumping the consumer's
                        // input_ready_count is what eventually lets a subgraph with VAR inputs
                        // be judged ready.
                        if (current_output_idx == waiting_input_idx)
                        {
                            waiting_sub_graph->input_ready_count++;
                        }
                    }
                }
            }

            // The subgraph has finished: set it back to READY
            subgraph->status = GRAPH_STAT_READY;
        }

        /* remove executed subgraph from list, should from higher idx to lower idx */
        // What remains in wait_list is still waiting. Removing the highest position first
        // keeps the smaller positions still stored in ready_list valid.
        for (int i = ready_num - 1; i >= 0; i--)
        {
            remove_vector_via_index(wait_list, ready_list[i]);
        }
    }

    /* reset the ready count */
    for (int i = 0; i < subgraph_num; i++)
    {
        struct subgraph* subgraph = get_ir_graph_subgraph(ir_graph, i);
        subgraph->input_ready_count = 0;
    }

    sys_free(ready_list);
    release_vector(wait_list);

    return 0;
}

// Not supported by the synchronous scheduler: always returns -1
static int sched_wait(ir_scheduler_t* scheduler, ir_graph_t* ir_graph)
{
    return -1;
}

// Post-run cleanup
static int sched_postrun(ir_scheduler_t* scheduler, ir_graph_t* ir_graph)
{
    // Number of subgraphs in the graph
    int subgraph_num = get_vector_num(ir_graph->subgraph_list);
    int has_error = 0;

    for (int i = 0; i < subgraph_num; i++)
    {
        struct subgraph* subgraph = get_ir_graph_subgraph(ir_graph, i);
        ir_device_t* nn_dev = subgraph->device;

        // Mark the subgraph as done
        subgraph->status = GRAPH_STAT_DONE;

        // Call the device's post-run interface
        if (nn_dev->interface->post_run(nn_dev, subgraph) < 0)
        {
            subgraph->status = GRAPH_STAT_ERROR;
            has_error = 1;
            TLOG_ERR("sched %d postrun failed\n", subgraph->index);
        }
    }

    if (has_error)
        return -1;
    else
        return 0;
}

static ir_scheduler_t sync_scheduler = {
    .name = "sync",
    .prerun = sched_prerun,
    .run = sched_run,
    .wait = sched_wait,
    .postrun = sched_postrun,
    .release = NULL,
};

ir_scheduler_t* find_default_scheduler(void)
{
    return &sync_scheduler;
}
```
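The heart of sched_run is a counting trick. It never builds a topological order up front. Instead, each subgraph counts how many of its VAR inputs have been produced, and it becomes runnable once input_ready_count catches up with input_wait_count.

To convince myself this works, I rewrote the loop as a minimal standalone C sketch. This is not Tengine code:

- `dep_subgraph`, `toy_sched_run`, `MAX_IO` and `MAX_SUBGRAPHS` are hypothetical names.
- Fixed-size arrays and `memmove` stand in for Tengine's vector helpers and `sys_malloc`.
- "Running" a subgraph only records its id.

```c
#include <assert.h>
#include <string.h>

#define MAX_IO 4        /* hypothetical cap on inputs/outputs per subgraph */
#define MAX_SUBGRAPHS 8 /* hypothetical cap on subgraphs per graph */

/* Hypothetical, stripped-down subgraph: only the fields the scheduling loop reads. */
typedef struct {
    int input_num, output_num;
    int input_tensor_list[MAX_IO];
    int output_tensor_list[MAX_IO];
    int input_wait_count;  /* inputs produced by other subgraphs (VAR tensors) */
    int input_ready_count; /* how many of those inputs have been produced so far */
} dep_subgraph;

/* Executes subgraphs in rounds and writes the executed ids to order[].
   Returns the number executed, or -1 if the remaining subgraphs can never become ready. */
static int toy_sched_run(dep_subgraph* sg, int n, int* order)
{
    int wait_list[MAX_SUBGRAPHS];  /* ids of subgraphs still waiting */
    int ready_list[MAX_SUBGRAPHS]; /* positions in wait_list that are ready this round */
    int wait_num = n, done = 0, failed = 0;

    assert(n <= MAX_SUBGRAPHS);
    for (int i = 0; i < n; i++)
        wait_list[i] = i;

    while (wait_num > 0) {
        int ready_num = 0;
        for (int i = 0; i < wait_num; i++) {
            dep_subgraph* s = &sg[wait_list[i]];
            if (s->input_ready_count == s->input_wait_count)
                ready_list[ready_num++] = i;
        }
        if (ready_num == 0) { /* dependency cycle or missing producer */
            failed = 1;
            break;
        }

        for (int r = 0; r < ready_num; r++) {
            int id = wait_list[ready_list[r]];
            order[done++] = id; /* "run" the subgraph by recording its id */
            /* every output tensor bumps the ready count of each matching consumer input */
            for (int j = 0; j < n; j++)
                for (int k = 0; k < sg[j].input_num; k++)
                    for (int m = 0; m < sg[id].output_num; m++)
                        if (sg[id].output_tensor_list[m] == sg[j].input_tensor_list[k])
                            sg[j].input_ready_count++;
        }

        /* remove from the highest position down so smaller positions stay valid */
        for (int r = ready_num - 1; r >= 0; r--) {
            int pos = ready_list[r];
            memmove(&wait_list[pos], &wait_list[pos + 1],
                    (size_t)(wait_num - pos - 1) * sizeof(int));
            wait_num--;
        }
    }

    for (int i = 0; i < n; i++)
        sg[i].input_ready_count = 0; /* reset for the next run */
    return failed ? -1 : done;
}
```

Here is a diamond-shaped example with four subgraphs:

- Subgraph 3 reads the graph input tensor 0 and produces tensor 1.
- Subgraphs 1 and 2 both consume tensor 1, producing tensors 2 and 3.
- Subgraph 0 joins tensors 2 and 3.

The sketch returns 4 and executes them in the order 3, 1, 2, 0: the source in round 1, both branches in round 2, and the join in round 3. A subgraph that becomes ready partway through a round is only picked up in the next round, because ready_list is computed at the start of each round, as in sched_run.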

Original article: https://zhuanlan.zhihu.com/p/400716426

Author: 闪电侠的右手


For more Tengine content, follow the column "Tengine-边缘AI推理框架" (Tengine: Edge AI Inference Framework).